Welcome back to another exciting edition of "Will it Alteryx?" In this installment I'll be looking at Parquet, a columnar storage format primarily used within the Hadoop ecosystem. While Parquet is growing in popularity and being used outside of Hadoop, it is most commonly used to provide column-oriented data storage of files within HDFS and sometimes as a storage format for Hive tables.

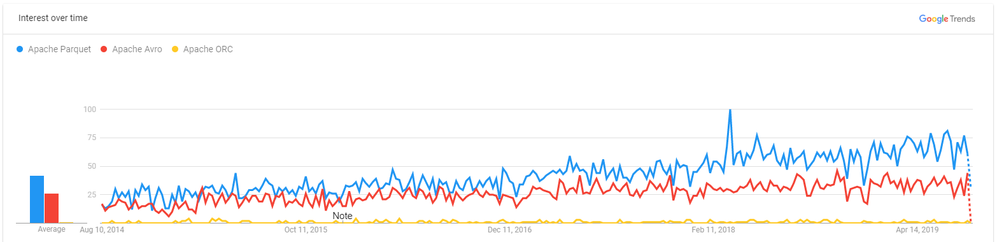

Interest in Parquet has rapidly surpassed both ORC and Avro formats.

Interest in Parquet has rapidly surpassed both ORC and Avro formats.

A column-oriented data storage format organizes tables by column rather than row. This can provide for much more efficient querying by applications which are looking for specific values rather than entire records. It can also provide other benefits such as encoding and compressing files. As an example, I took a 2 MB CSV file and converted it to a parquet file which was almost 40% smaller in file size.

Parquet storage format typically provides significant savings in file sizes.

Parquet storage format typically provides significant savings in file sizes.

As more and more organizations are moving to the cloud, reducing file sizes can provide an immediate benefit in savings on storage costs. But, I know you are wondering how can we leverage parquet files in Hadoop with Alteryx when the HDFS Input only supports CSV or Avro file types?

The HDFS File Selection tool only allows Avro or CSV file types.

The HDFS File Selection tool only allows Avro or CSV file types.

Will it Alteryx?

There are a couple of ways to process parquet data with Alteryx. This is not meant to be an exhaustive list but to mention some of the methods.

- For Hive tables stored in parquet format, a few options exist which are covered in this Knowledge-Base article.

- If you are running on a Hadoop client machine (like an edge node), you can use Spark Code or Python Code to read the data into a DataFrame and then pass that to the Apache Spark Code tool or the Python tool in Designer.

- Example Spark code:

df = sqlContext.read.parquet("/hdfs_path/file.parquet")

- Example Python code using the PyArrow package:

Package.installPackages(['pyarrow'])

import pyarrow as pa

pa.hdfs.connect(host, port, username)

- However, most of us aren't running on a Hadoop client machine, so the following solution allows you to read parquet data from HDFS directly into Designer. This works via the WebHDFS or HttpFS restful interfaces to HDFS. Some Hadoop administrators might block this feature, or have it only accessible through a Knox Gateway. Please work with your Hadoop Administrator for details.

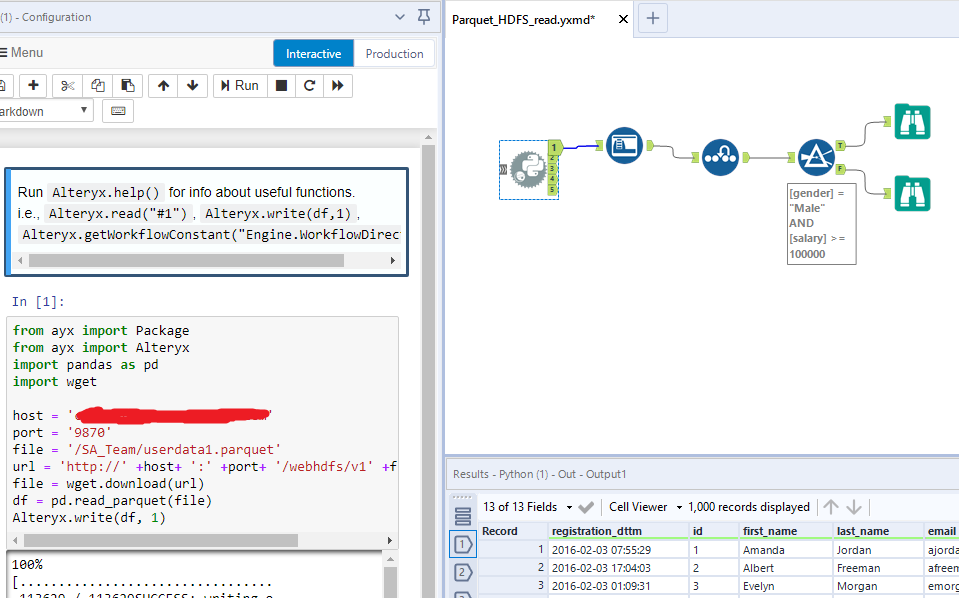

- In Designer, pull down the Python tool from the Developer category.

- In the Python tool code editor, insert the following code making modifications to match your environment. There are additional options for specifying credentials or a Kerberos token.

from ayx import Package

from ayx import Alteryx

Package.installPackages(['wget'])

import pandas as pd

import wget

host = 'hdfs.namenode.com'

port = '9870'

file = '/mydir/myfile.parquet' # the HDFS file path

url = 'http://' +host+ ':' +port+ '/webhdfs/v1' +file+ '?op=OPEN'

file = wget.download(url)

df = pd.read_parquet(file)

Alteryx.write(df, 1)

- The data set will be available at the "1" output anchor where additional tools can be added to build a workflow.

Reading parquet data from HDFS through the Python tool

Reading parquet data from HDFS through the Python tool

- Note, the "Package.installPackages" line requires Designer to be "Run as Administrator" and only needs to be executed one time.

Final Thoughts

Hopefully this gives you some ideas on how you can use Alteryx to process parquet data in your organization. If you have other ideas on how this can be accomplished please add them to the comments below.

If you have any technologies you would like to see explored in future installments of the "Will it Alteryx" series, please leave a comment below!

References