Engine Works

Under the hood of Alteryx: tips, tricks and how-tos.

Hardware Matters

I remember the first time I installed Alteryx at work. I was so excited after running the trial on my computer at home, but when it came time to put my newly acquired license to use, it wasn’t the same experience. Sure, it was fast, but nothing like it had been on my own computer. It was almost like watching it in slow motion.

Rightly, I assumed it was the hardware. The IT department was kind enough (after months of negotiating) to give me a desktop in addition to the laptop I already had. They called it a “development” machine because it had *8* GB of RAM and a sweet AMD dual-core processor. Note the sarcasm. The year was 2014, quad core was pretty much the standard, and 8 GB of RAM wasn’t anything special. I tried to explain that this application ran much faster on my machine at home (four cores and 32 GB of RAM), but a “special order” came with extra costs, and I couldn’t really quantify the cost-benefit ratio of the expense.

Thankfully, after a few months of working that machine to the max, the hard drive just gave up. It didn’t take much longer for the next one to fail too. That’s the thing about hard disk drives: they rely on mechanical spinning platters that are prone to failure under long periods of heavy use. This particular machine was running 24/7. Constant use plus poor ventilation and heat = faster failure rate.

So now that I’ve had a few years of working with Alteryx, and been lucky enough to experience it on a wide variety of machines, I can tell you without a doubt that hardware matters. A lot.

For the sake of demonstration, I got ahold of the file that was the subject of my first Alteryx use case. Well, not the exact file, but the current version. It’s a CSV containing all of the identifiers for healthcare providers in the US (the NPPES Downloadable File). Zipped, it's only about 600 MB, but unzipped it is greater than 6 GB. At 329 columns wide and 5.8 million rows long, it was well beyond what most self-service tools could handle. Alteryx, however, handled it without a problem.

Anyway, to recreate the difference in experience I had back in 2014, I borrowed a laptop from a friend who works for a not-so-tech-savvy company: a Dell Latitude E5570, to be exact. It was the closest I could find to an elderly piece of equipment. While it does meet the “high performance” specs outlined by Alteryx (a four-core CPU, 16 GB of RAM and 500 GB of space on its primary drive), it really doesn’t deliver a high-performance experience.

The workflow I ran back in 2014 took the extracted CSV file, counted the number of unique identifiers per city/state, and joined that count back to each row's city/state address. Pretty simple: three tools, four if you count the Browse. When we ran it on his laptop, it took 2 minutes and 49.667 seconds to complete.
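For readers who think in code, the workflow's logic (a summarize step followed by a join) can be sketched in Python. This is a minimal illustration under stated assumptions, not how Alteryx implements its tools: the column names `NPI`, `City` and `State` are hypothetical placeholders (the real NPPES headers are longer), and the file is assumed to be UTF-8.

```python
import csv
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical column names for illustration; the real NPPES headers are
# longer, so adjust these to match the file you are working with.
ID_COL, CITY_COL, STATE_COL = "NPI", "City", "State"

Location = Tuple[str, str]


def count_unique_ids(rows: Iterable[dict]) -> Dict[Location, int]:
    """Summarize step: count distinct identifiers per (city, state)."""
    ids_by_location = defaultdict(set)
    for row in rows:
        ids_by_location[(row[CITY_COL], row[STATE_COL])].add(row[ID_COL])
    return {loc: len(ids) for loc, ids in ids_by_location.items()}


def join_counts(rows: Iterable[dict], counts: Dict[Location, int]) -> Iterator[dict]:
    """Join step: attach each row's location count as a new field."""
    for row in rows:
        yield {**row, "UniqueIDs": counts[(row[CITY_COL], row[STATE_COL])]}


def run_workflow(path: str) -> Iterator[dict]:
    """Two streaming passes over the CSV instead of loading it all at once."""
    with open(path, newline="", encoding="utf-8") as f:
        counts = count_unique_ids(csv.DictReader(f))
    with open(path, newline="", encoding="utf-8") as f:
        yield from join_counts(csv.DictReader(f), counts)
```

Streaming the rows keeps memory proportional to the number of distinct identifiers rather than the full 329-column width, but it also means the whole file is read from disk twice, which is exactly where drive speed starts to show.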

Next up is a machine with four cores, 32 GB of RAM, and 500 GB on the primary drive. This time, though, the primary drive is an SSD.

It crushed the same workflow in just 1 minute and 4 seconds, more than two and a half times as fast. You could argue that the extra RAM played a part (sorry, I couldn’t take this one apart to even them out), so we ran a few more tests just to gauge the impact of RAM.

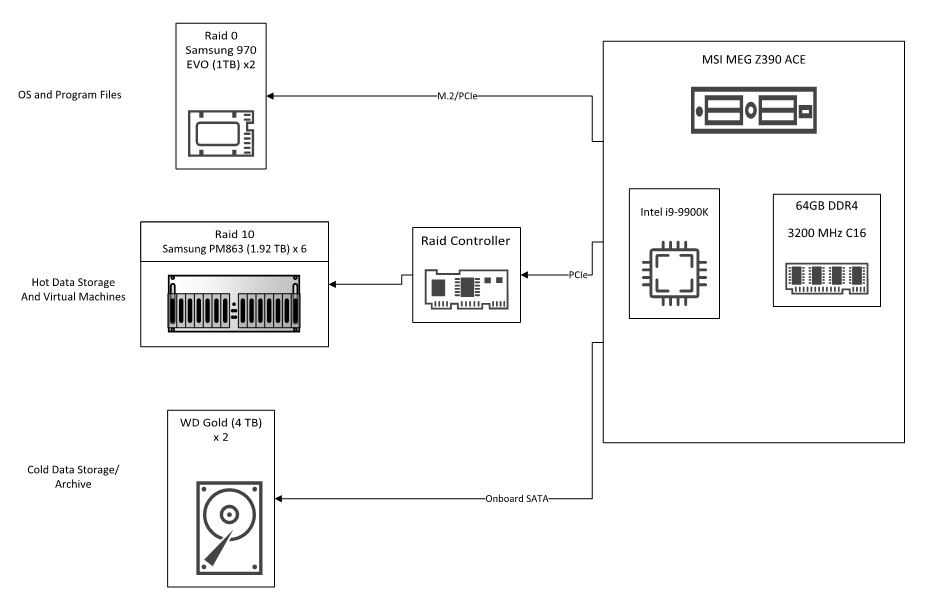

To study the impact of RAM and further prove the need for better hardware, we go to my daily driver (home computer). It has 8 cores, 64 GB of RAM, and a 1 TB NVMe solid state array connected via PCIe in a RAID 0 configuration, which is where the OS resides. And just to be safe, there is a RAID 10 array of data center SSDs for data and file storage.
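RAID 0 and RAID 10 come up repeatedly in this comparison, so here is a simplified model of how each places data. It's a sketch under assumptions: a one-block stripe unit, a "near" layout for RAID 10, and example drive counts of two and four, since the actual array sizes aren't given here; real controllers use larger stripes and differ in detail. RAID 0 spreads consecutive blocks across every drive, so large reads and writes hit several drives at once, but losing any one drive loses the array. RAID 10 stripes across mirrored pairs, so every block lives on two drives, at the cost of half the raw capacity.

```python
from typing import List, Tuple


def raid0_location(block: int, disks: int) -> Tuple[int, int]:
    """RAID 0: (disk, offset) for a logical block, striped round-robin."""
    return block % disks, block // disks


def raid10_locations(block: int, disks: int) -> Tuple[List[int], int]:
    """RAID 10: ([mirror disks], offset), striped across mirrored pairs."""
    if disks < 4 or disks % 2:
        raise ValueError("RAID 10 needs an even number of disks, at least 4")
    pairs = disks // 2
    pair = block % pairs
    return [2 * pair, 2 * pair + 1], block // pairs


# Where the first four logical blocks land on a 2-disk RAID 0
# and a 4-disk RAID 10 (example sizes only).
for b in range(4):
    print(b, raid0_location(b, 2), raid10_locations(b, 4))
```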

For this test we built a virtual machine and stored it on the RAID 10 array. It has four cores, 8 GB of RAM and a 200 GB operating system disk. Apart from matching the core count, that’s well below the specs of the last two machines, and it doesn’t meet the high-performance spec.

It handled the same workflow in almost exactly 40 seconds, more than four times faster than the laptop.

Then, to really make a point, I set up another virtual machine, this time with just two cores and 4 GB of RAM. The results were impressive: it finished in 53.8 seconds, still more than three times faster than the laptop with high-performance specs.
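The speedups quoted in these tests are simple ratios against the laptop's run time. A quick check in Python, using only the run times given in the text (the dictionary labels are just descriptive):

```python
# Run times quoted in the text, in seconds.
run_times = {
    "Laptop (HDD, 16 GB)": 2 * 60 + 49.667,
    "Desktop (SATA SSD, 32 GB)": 64.0,
    "VM (4 cores, 8 GB)": 40.0,
    "VM (2 cores, 4 GB)": 53.8,
}

baseline = run_times["Laptop (HDD, 16 GB)"]


def speedup(seconds: float) -> float:
    """How many times faster a run is than the laptop baseline."""
    return baseline / seconds


for name, seconds in run_times.items():
    print(f"{name}: {seconds:.1f} s, {speedup(seconds):.2f}x")
```

This prints roughly 2.65x for the desktop, 4.24x for the four-core VM and 3.15x for the two-core VM, which matches the comparisons in the text.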

| Machine | CPU | CPU Clock | CPU Cores | Primary Drive Size | Primary Drive Type | Primary Drive Interface | Secondary Drive | RAM | WF Run Time |
|---|---|---|---|---|---|---|---|---|---|
| Machine A | Intel i5-6440HQ | 3.5 GHz | 4 | 500 GB | Hard Disk Drive (7200 RPM) | SATA | | 16 GB | 2:49 |
| Machine B | Intel Xeon E3-1271v3 | 3.6 GHz | 4 | 512 GB | Solid State Drive | SATA | | 32 GB | 1:04 |
| Machine C | Intel i9-9900K | 3.6 GHz (OC @ 4.81 GHz) | 8 | 1 TB | NVMe (RAID 0) | PCIe/M.2 (RAID 0) | | 64 GB | 0:36 |
| Machine D (Virtual) | Intel i9-9900K (virtual) | 3.6 GHz | 4 | 200 GB | NVMe (RAID 0) | PCIe/M.2 (virtual SCSI) | Solid State - SATA - RAID 10 | 8 GB | 0:40 |
| Machine D (Virtual) | Intel i9-9900K (virtual) | 3.6 GHz | 2 | 200 GB | NVMe (RAID 0) | PCIe/M.2 (virtual SCSI) | Solid State - SATA - RAID 10 | 4 GB | 0:53 |

Here’s why it matters:

The most commonly used interface between a hard drive and the motherboard is SATA III (Serial AT Attachment, revision 3). [Fun fact: “AT” stands for Advanced Technology, a term IBM used for its second generation of personal computers, the PC/AT.] SATA is a set of standard specifications, and its third generation supports transfer rates of up to 6 Gbit/s. Sounds fast, right? That’s only in theory, though. In reality, the speed at which the CPU can process incoming data (and return processed data) and the speed at which the drive can send and receive data are just as important.
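Part of the gap between "6 Gbit/s" and real-world numbers is built into the specification itself. SATA III uses 8b/10b line encoding, so every 8 bits of data travel as 10 bits on the wire, and the usable ceiling works out to about 600 MB/s. A small calculation, assuming decimal units and ignoring protocol overhead beyond the encoding:

```python
def usable_mb_per_s(line_rate_gbit: float, payload_bits: int, coded_bits: int) -> float:
    """Usable payload bandwidth (decimal MB/s) for a serial link.

    Line coding sends `coded_bits` on the wire for every `payload_bits`
    of data, so only that fraction of the raw rate carries data.
    """
    payload_gbit_per_s = line_rate_gbit * payload_bits / coded_bits
    return payload_gbit_per_s * 1000 / 8  # Gbit/s -> MB/s


# SATA III: 6 Gbit/s line rate with 8b/10b encoding.
sata3_ceiling = usable_mb_per_s(6.0, 8, 10)
print(f"SATA III theoretical ceiling: {sata3_ceiling:.0f} MB/s")
```

That ceiling is the most any drive on a SATA III port can deliver, no matter how fast the drive itself is.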

Going back to the laptop: its hard disk drive uses a SATA III interface (the full 6 Gbit/s). However, when we ran a benchmark (a test of actual performance), the drive could only read and write data at around 100 MB/s.

The second machine, with an SSD as its primary drive, also uses a SATA III interface. But because it's an SSD, its benchmark came in at around 480 MB/s.
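To put those two benchmarks in context, here is the minimum time each drive needs just to read the unzipped NPPES file once, before any processing happens. It's a back-of-the-envelope sketch assuming a 6,000 MB file and sustained sequential reads at the benchmark speed; real workloads with random access or caching will differ.

```python
FILE_SIZE_MB = 6_000  # the unzipped NPPES CSV is "greater than 6 GB"


def seconds_to_read(size_mb: float, throughput_mb_s: float) -> float:
    """Lower bound for one sequential pass over a file at a given throughput."""
    return size_mb / throughput_mb_s


# Benchmark read speeds quoted in the text (MB/s).
for drive, mb_s in [("Laptop HDD", 100), ("SATA SSD", 480)]:
    print(f"{drive}: {seconds_to_read(FILE_SIZE_MB, mb_s):.1f} s per pass")
```

That is about 60 seconds versus 12.5 seconds per pass, and any process that reads the file more than once or writes temporary files pays that cost again.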

And finally, the third machine (and its virtual machines). This one connects the OS drive to the motherboard over PCIe instead of SATA, using NVMe solid state drives in the M.2 form factor. This version of PCIe (3.0) has a theoretical transfer rate of 985 MB/s per lane; with four lanes, that gives a total theoretical read/write rate of 3,940 MB/s. When we ran a benchmark from inside the virtual machine, it was surprisingly good at roughly 1,200 MB/s. And of course, running directly on the machine itself (not the VM) was best, at around 2,700 MB/s.
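The 985 MB/s per-lane figure comes from PCIe 3.0's 8 GT/s signaling rate with 128b/130b encoding, which wastes far less of the raw rate than SATA's 8b/10b. The sketch below derives the x4 ceiling and compares it with the two benchmarks from the text; it assumes decimal units and one direction of transfer, and ignores packet overhead.

```python
# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b encoding.
GT_PER_S = 8.0
ENCODING_EFFICIENCY = 128 / 130


def pcie3_mb_per_s(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in MB/s for a link width."""
    per_lane = GT_PER_S * ENCODING_EFFICIENCY * 1000 / 8  # about 985 MB/s
    return per_lane * lanes


x4_ceiling = pcie3_mb_per_s(4)
print(f"PCIe 3.0 x4 ceiling: {x4_ceiling:.0f} MB/s")

# Benchmarks quoted in the text (MB/s).
for label, measured in [("Inside the VM", 1200), ("Directly on the machine", 2700)]:
    print(f"{label}: {measured} MB/s = {measured / x4_ceiling:.0%} of the x4 ceiling")
```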

There were more tests done than just this, but to spare you the narrative I’ve summarized my recommendations...

Recommendations

CPUs

- Bigger is always better. Always.

- Avoid CPUs advertised as “low energy” or “energy efficient.” These are most commonly found in laptops and are meant to restrict processing power to extend battery life. Always look at the model number’s suffix to find these:

- If you’re using a virtual machine, whether Intel or AMD performs better depends on other hardware factors. It’s hard to put a guideline around this, though I’ve always had better luck with Intel.

RAM

This is another “bigger is better” situation. However, if the rest of your hardware isn’t at the same level of performance, it won’t matter.

Memory speed and latency are the other factors to consider here. Speed is the number of data transfers the memory can perform per second. It's usually advertised like this: 3200 MHz (strictly, for DDR memory that figure is 3200 MT/s, because data moves twice per clock cycle). Latency is a series of timings, counted in clock cycles, that indicate the delay between the RAM receiving a command and being able to act on it. They’ll usually be listed like this: 16-18-18-36 or C16. There isn’t a specific rule about how to pair these up, and there are dozens of possible configurations. Whether the RAM can be utilized 100% also depends heavily on the motherboard and CPU specifications.
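One way to compare kits with different speed and latency ratings is to convert the first timing (CAS latency) into nanoseconds. Because DDR memory transfers twice per clock, one cycle lasts 2000 / (data rate in MT/s) nanoseconds. The kits below are hypothetical pairings chosen to illustrate the arithmetic, not recommendations:

```python
def first_word_latency_ns(cas_latency: int, data_rate_mt_s: int) -> float:
    """Approximate CAS latency in nanoseconds.

    DDR memory transfers twice per clock, so the real clock is half the
    advertised data rate and one cycle lasts 2000 / data_rate ns.
    """
    return cas_latency * 2000 / data_rate_mt_s


# Hypothetical example kits: (data rate in MT/s, CAS latency).
for rate, cl in [(3200, 16), (3600, 18), (3200, 22)]:
    print(f"DDR4-{rate} CL{cl}: {first_word_latency_ns(cl, rate):.2f} ns")
```

DDR4-3200 CL16 and DDR4-3600 CL18 both work out to 10 ns, which is why a faster kit with looser timings is not automatically quicker to respond; it mainly moves more data per second once a transfer starts.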

Drives

Avoid hard disk drives at all costs. As shown above, they will cripple your work. If your boss or IT department needs any further validation of that, point them to this post.

Relative speeds of the same workflow:

- Hard disk drive: 1 workflow in 2:49

- Solid state drive (SATA): 1 workflow in 1:04

- M.2 NVMe solid state drive: 1 workflow in 0:40

This is NOT a situation where bigger is better, but there isn’t a single size recommendation either. What matters is the balance between capacity and I/O performance, and that depends on what you’re using the drive for. Most people won’t notice the difference between a 500 GB and a 1 TB drive, but going from 500 GB to 4 TB would be noticeable if you’re having to retrieve data from that drive.

If you ever find yourself able to custom build a machine and can’t decide on a combination of parts, check out userbenchmark.com. You can benchmark your own machine and then compare it against other users’ results, or plan a better build from them.

Finally, don’t forget that an adequate cooling system is just as important!
