Data Science

Machine learning & data science for beginners and experts alike.

- What's a Pipeline? An Overview of the New Python-b...


The 2019.3 Beta includes a new predictive analytics application, Assisted Modeling, as well as a whole new palette of predictive tools to play with: the Python-based Machine Learning tools.

To get these tools you will need to:

- Join the Beta Program

- Download and install the 2019.3 Beta

- Download and install the Machine Learning Tools .yxi file.

With this installation, you will have access to five new tools: the Start Pipeline tool, the Transformation tool, the Classification tool, the Fit tool, and the Pyscore tool.

The way the Machine Learning tools work is a little different from the R-based Predictive tools you might already be familiar with. Instead of each tool containing code to train a separate model, the Machine Learning tools are designed to be used together to create a modeling “pipeline”.

In this context, a modeling pipeline refers to the steps needed to get your data from a raw input format to a fully trained and usable model, including any encoding or transformation performed on the data to get it into a usable format. You can think of the Machine Learning tools as little Lego blocks for building out a model. Each tool performs an individual process, from identifying your target variable and marking the start of your pipeline (Start Pipeline tool) to combining all of your tools into a list of instructions and fitting the transformed data to a model (Fit tool). By stacking all of your Lego blocks together, you end up with a fully fleshed-out tower with walls and a roof, not just the fancy spiral stairs. When your trained model object is created and output, it includes each of the steps in your built-out pipeline.

When you are building out a Machine Learning pipeline, the experience is similar to working with other Alteryx tools. Each tool passes metadata to the next tool, allowing you to configure downstream tools while accounting for any changes upstream tools might make to the data. However, when you run the workflow, the Machine Learning tools do not execute their processes one by one as they appear on the canvas. Instead, the instructions each tool contains are passed down through the pipeline and executed in order as a single "to do list" by the Fit tool at the end of the pipeline.
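If it helps to see the "to do list" idea in code, here is a minimal sketch in plain Python. It is only a conceptual illustration and not how the Alteryx tools are implemented; the `PipelineSketch` class, its method names, and the sample row are all hypothetical.

```python
class PipelineSketch:
    """Hypothetical stand-in for a modeling pipeline: steps are recorded, not run."""

    def __init__(self, target):
        self.target = target   # what a Start Pipeline step would record
        self.steps = []        # the "to do list" handed from tool to tool
        self.log = []          # the order in which steps actually execute

    def add_step(self, name, func):
        # Adding a step only stores the instruction; no data is touched yet.
        self.steps.append((name, func))
        return self

    def fit(self, rows):
        # Only here, at the end, are the stored instructions executed in order.
        for name, func in self.steps:
            rows = [func(row) for row in rows]
            self.log.append(name)
        return rows


pipeline = (
    PipelineSketch(target="species")
    .add_step("drop_id", lambda row: {k: v for k, v in row.items() if k != "id"})
    .add_step("to_float", lambda row: {k: float(v) if k != "species" else v
                                       for k, v in row.items()})
)

steps_run_before_fit = list(pipeline.log)   # still empty: nothing has executed
result = pipeline.fit([{"id": 1, "petal_length": "1.4", "species": "setosa"}])
```

Nothing runs while the steps are being added; the work happens only when `fit` is called, which is the behavior described above for the Fit tool.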

With this overview in mind, let’s explore the pipeline architecture by examining each of the tools individually.

The Start Pipeline tool

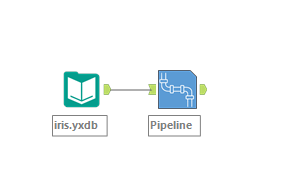

In the beta release, any Machine Learning Pipeline needs to start with the Start Pipeline tool (was that sentence as fun to read as it was to write?). This is the tool you feed your input data to, and where the Python-based machine learning process starts.

The configuration of the Start Pipeline tool is simple: all you need to do is specify your target variable. It is important to note here that in this beta release, the Machine Learning tools only support Classification models, so your target variable will need to be a categorical one.

The Transformation tool

This tool is used to modify your data into machine learning-usable formats. It includes the ability to select columns to include in your model, perform data typing, impute missing values, and perform one hot encoding for categorical variables. This tool includes a lot of important functionality for preprocessing your data, so expect to use it more than once in your pipeline.

In the Configuration window, you will need to Select a transformer. This will define the way you are using the tool.

Selecting Column selection as your transformer causes the Transformation tool to behave like an Alteryx Select tool, allowing you to remove any variables that should not be included as predictor variables in your model.

Selecting Data Typing as your Transformer also causes the Transformation tool to behave like a Select tool (a different part of the Select tool), where you can adjust the data types of each of your variables.

The Missing value imputation transformer is where you can handle nulls in your dataset. This step is particularly cool with the pipeline architecture because it is a best practice in data science to fill nulls in any future dataset with values derived from your training dataset. Because the imputation becomes a part of the pipeline, the replacement values calculated from your training dataset are saved with your model, and any nulls in data fed into the model in the future will be filled with those same values.

Even though the Iris dataset does not have null values, I am going to include this transformation step so that my resulting model is primed to handle any nulls fed into the model later.
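Since the Machine Learning tools are based on scikit-learn, the same idea can be sketched with scikit-learn's `SimpleImputer`. This is an illustration under assumptions: the post does not say which imputation strategies the Transformation tool offers, so mean imputation is my choice here, and the small arrays are made up.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Training data with no nulls (as with Iris); two made-up numeric columns.
X_train = np.array([[1.0, 10.0],
                    [2.0, 20.0],
                    [3.0, 30.0]])

# Fitting stores the per-column means of the training data.
imputer = SimpleImputer(strategy="mean")
imputer.fit(X_train)

# Future data with a null in each column.
X_new = np.array([[np.nan, 40.0],
                  [5.0, np.nan]])

# Nulls are replaced with the stored training means,
# not with statistics computed from the new data.
X_filled = imputer.transform(X_new)
```

The fitted imputer keeps the training means in `imputer.statistics_`, which is why the replacement values can travel with the model.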

The last transformer, One Hot Encoding, allows you to encode any categorical variables in your dataset, putting them into a format that the models in the Machine Learning tools (based on the Python package scikit-learn) can handle correctly.

My dataset does not have any categorical variables other than the target variable, so I’ll skip this transformer in my pipeline.
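For readers who have not seen one-hot encoding before, here is a small sketch using scikit-learn's `OneHotEncoder`. The `colors_train` column is hypothetical (it is not part of my Iris example), and the `handle_unknown="ignore"` setting is my assumption, not something the Transformation tool is documented to do.

```python
from sklearn.preprocessing import OneHotEncoder

# Hypothetical categorical column; not part of the Iris example in this post.
colors_train = [["red"], ["green"], ["blue"], ["green"]]

# handle_unknown="ignore" encodes categories unseen during training as all zeros.
encoder = OneHotEncoder(handle_unknown="ignore")
encoder.fit(colors_train)

# Categories are learned from the training data and stored in sorted order.
categories = list(encoder.categories_[0])

# transform() returns a sparse matrix by default; toarray() makes it dense.
encoded = encoder.transform([["green"], ["purple"]]).toarray()
```

Each category becomes its own 0/1 column, so "green" turns into a row with a single 1, while the unseen "purple" becomes a row of zeros.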

An important thing to note about the Transformation tool is that it can only serve one purpose at a time. This means that if you need to select columns in your dataset and change data types for your incoming dataset, you will need to have two separate Transformation tools in your pipeline (this is why you should expect to have more than one Transformation tool in a pipeline).

This beta release is just the beginning for the Transformation tool. The developers are hard at work adding a variety of other preprocessing steps for use in a pipeline. Stay tuned. 🙂

The Classification tool

This is where the classification model choices live. In the beta release, the Classification tool includes logistic regression, random forest, and decision tree (with plans to add more).

Depending on which algorithm (model recipe) you select, different General and Advanced parameters will be populated in the Configuration window.

There should only be one Classification tool in a single pipeline stream, but you could split your pipeline into multiple streams to train multiple models.
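As a rough scikit-learn analogy for splitting a pipeline into multiple streams, the sketch below pairs the same preprocessing with each of the three algorithms named above. The step names, parameter values, and the use of training accuracy are my own assumptions for illustration, not settings taken from the Classification tool.

```python
from sklearn.base import clone
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Shared preprocessing, analogous to Transformation tools upstream of the split.
preprocessing = SimpleImputer(strategy="mean")

# One classifier per stream, mirroring the three algorithms in the beta release.
classifiers = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=50, random_state=0),
    "decision_tree": DecisionTreeClassifier(random_state=0),
}

# Each stream gets its own copy of the preprocessing and exactly one classifier.
fitted = {
    name: Pipeline([("impute", clone(preprocessing)), ("model", clf)]).fit(X, y)
    for name, clf in classifiers.items()
}

# Training accuracy per stream (optimistic; use held-out data in practice).
train_accuracy = {name: pipe.score(X, y) for name, pipe in fitted.items()}
```

Each entry in `fitted` is a complete, independent model, much like the separate model objects you would get from separate Fit tools.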

The algorithms available in the Machine Learning tools will increase over time, with plans to add more classification algorithms and regression algorithms.

The Fit tool

The Fit tool is the final step in the pipeline process, and where you close off your pipeline. The output of a Fit tool is your model, including any transformation steps you made along the way. Nothing is required in the Configuration window; just add it to the end of your pipeline!

The Pyscore tool

If you’d like to use your model on an unseen dataset, you will need to use the Pyscore tool! Here is where the pipeline concept might click for you if it hasn’t yet: when you feed data into the Pyscore tool, you want to feed it in the same format that you fed into the Start Pipeline tool. All of the preprocessing we performed with the Transformation tools is included in the model object output by the Fit tool.

The Pyscore tool doesn’t require any configuration. Just hook up your data input to the “D” anchor and the model to the “M” input anchor, then click Run!
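To make the scoring idea concrete, here is a minimal scikit-learn sketch of a fitted pipeline being applied to raw, unseen rows. It is an analogy rather than the Pyscore tool's actual code; the step names, the choice of logistic regression, and the two unseen rows are assumptions of mine.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline

X, y = load_iris(return_X_y=True)

# Preprocessing and model are fitted together and travel as one object.
model = Pipeline([
    ("impute", SimpleImputer(strategy="mean")),
    ("classify", LogisticRegression(max_iter=1000)),
]).fit(X, y)

# Unseen rows in the same raw format as the training input, including a null.
X_unseen = np.array([[5.1, 3.5, 1.4, 0.2],
                     [6.7, np.nan, 5.2, 2.3]])

# predict() imputes with the stored training means, then classifies.
predictions = model.predict(X_unseen)
```

Notice that the unseen rows are never preprocessed by hand: the null is filled inside the model object, which is the point of packaging the steps together.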

Hopefully, this example has made the architecture of the new Machine Learning tools clear! Pipelines are a really neat approach to predictive modeling in Alteryx that allow models to be neatly packaged with all of the preprocessing steps included. This means that when you share or deploy your model, data can be fed into it in the same format that you fed data into your Start Pipeline tool. No need to worry about sharing your preprocessing steps separately from a model object.

If you’re ready to try the shiny new Machine Learning tools out for yourself, please enroll in our beta program at beta.alteryx.com.

A geographer by training and a data geek at heart, Sydney joined the Alteryx team as a Customer Support Engineer in 2017. She strongly believes that data and knowledge are most valuable when they can be clearly communicated and understood. She currently manages a team of data scientists that bring new innovations to the Alteryx Platform.

