Data Science

Operationalizing an Alteryx Predictive Model with ...

You’ve blended your data. Cleansed it. Trained your model. Made more models. Tweaked them. Compared them. You’ve picked THE BEST model. It’s perfect. Now what? You need to get the rest of your organization using your model in a live production environment. There are several ways to deploy your R model with Alteryx (skip to the end for the Azure ML way).

Scheduler

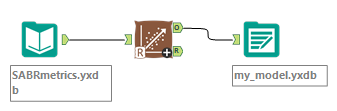

Save your trained model to a yxdb:

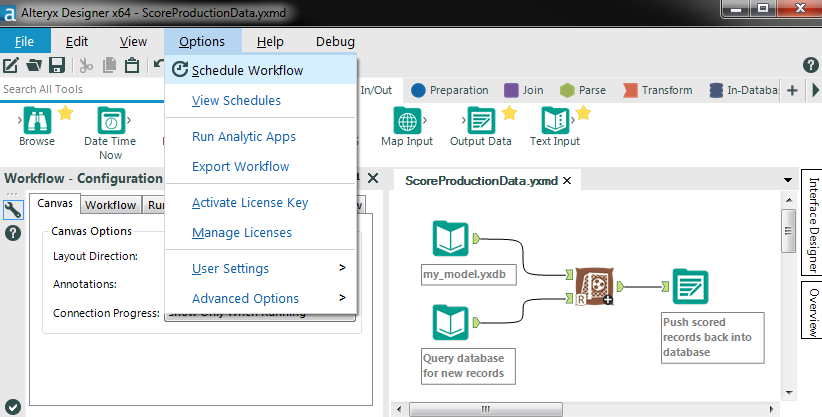

Create a workflow to score production data and schedule it to run at a regular interval of your choosing:

This method is simple and flexible - it will work for every Alteryx predictive algorithm and you never have to leave Designer. You can schedule the scoring to run locally on your laptop as frequently as desired, or schedule it to run on your Server where you can scale your hardware to meet workload capacity, redundancy, and availability requirements.
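Outside of Designer, the same save-then-score pattern can be sketched in plain R. This is only an analogy, not what the Alteryx tools do internally: it assumes base R's `saveRDS`/`readRDS` and a temporary file in place of the yxdb, and the built-in `mtcars` data in place of real training and production data. The function name `score_new_data` is hypothetical.

```r
# Training step (run once): fit a model and persist it to disk.
# mtcars and the temp file stand in for real training data and the yxdb.
model_path <- tempfile(fileext = ".rds")
fit <- lm(mpg ~ wt + hp, data = mtcars)
saveRDS(fit, model_path)

# Scoring step (the part you would schedule): reload the saved model
# and append a Score column to whatever new rows have arrived.
score_new_data <- function(path, new_data) {
  model <- readRDS(path)
  new_data$Score <- as.numeric(predict(model, newdata = new_data))
  new_data
}

new_rows <- data.frame(wt = c(2.5, 3.2), hp = c(110, 150))
scored <- score_new_data(model_path, new_rows)
print(scored)
```

Keeping training and scoring as separate steps is what makes scheduling cheap: the recurring job only reloads the model and predicts, it never refits.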

Oracle R Enterprise

Scheduling works the same way as in the approach above, but in this scenario the data never leaves the database, which makes the process more efficient and puts the database hardware to work. Alteryx currently supports the Logistic and Linear Regression algorithms for in-database predictive analytics.

Gallery

Both of the above approaches to scoring require Alteryx, but what if you want your consumers (whether in-house or the general public) to use the model you created in Alteryx to score their own data, whether or not they have Alteryx themselves? The Alteryx Analytics Gallery allows you to do just this. Add a few Interface Tools to your scoring workflow and you can save it as an Analytic App to your Gallery deployment. Your consumers then only need a browser to input their data (one-off or batch, depending on how you create your app) and get scored data back, with the Gallery server doing the heavy lifting.

To take it a step further, expose your app with the Gallery API. Using the simple REST API your model is now available to query in real time by your production systems, third-party apps, iPhone apps, Tableau WDC, etc.

Azure ML

Microsoft has put out an R package that allows you to deploy an R function as a publicly available web service - pretty cool! This vignette gives a simple example function that accepts two numeric inputs and returns the sum. I wanted to take this concept further to deploy a function to accept an arbitrary number of predictor variables as inputs and return the scored result of a predictive model created in Alteryx. Here's how I did it.

Create workflow

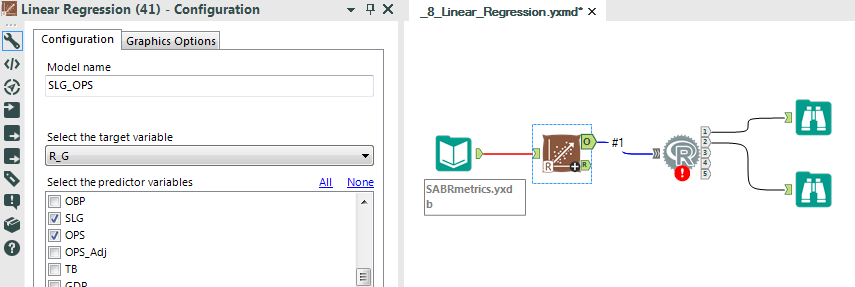

Train the model and connect an R Tool to the O (model object) output anchor. I started with the Linear Regression sample from Help > Sample Workflows.

Configure R tool

If you like you can follow along by copying and pasting the R code below into the R Tool configuration in your Alteryx workflow.

First we'll need to install the AzureML package. I adapted the following code from this Knowledge Base Article.

    package_name <- "AzureML"
    # grab the alteryx repo
    altx.repo <- getOption("repos")
    # set your primary repo if you haven't already
    altx.repo["CRAN"] <- "http://cran.rstudio.com"
    options(repos = altx.repo)
    # check to see if the package we want is already installed
    # if it is, we will just load it, otherwise we will install it
    if (!(package_name %in% rownames(installed.packages()))) {
      install.packages(package_name)
    }
    require(package_name, character.only = TRUE)

Next we'll set our workspace ID and authorization token. This document contains clear instructions on how to obtain these values once you've created your free AzureML Studio account. [Replace the stars with your own credentials.]

    myWsID <- '********************************'
    myAuth <- '********************************'

Now we need to read in our model created in the previous Linear Regression tool.

    # read in model object
    the.model <- read.Alteryx("#1")
    mod.obj <- unserializeObject(as.character(the.model$Object[1]))

Here we create the function, using the model object, to score new data. In this particular case the function will take two inputs (the predictor variables selected when training the model: SLG and OPS) and return the predicted amount (R_G).
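A minimal sketch of such a function. So that it runs on its own, `mod.obj` here is a stand-in `lm` fitted to a handful of made-up rows rather than the model read from the Linear Regression tool, and the function name `scoreModel` is hypothetical.

```r
# Stand-in for the model object read from the Linear Regression tool.
# The numbers below are made up purely for illustration.
train <- data.frame(
  SLG = c(0.380, 0.410, 0.425, 0.450, 0.395, 0.440),
  OPS = c(0.700, 0.735, 0.760, 0.800, 0.710, 0.775),
  R_G = c(4.05, 4.35, 4.60, 5.05, 4.10, 4.90)
)
mod.obj <- lm(R_G ~ SLG + OPS, data = train)

# Score one observation: take the two predictors as plain numbers,
# assemble them into a one-row data frame, and return the prediction.
scoreModel <- function(SLG, OPS) {
  new.data <- data.frame(SLG = SLG, OPS = OPS)
  as.numeric(predict(mod.obj, newdata = new.data))
}

scoreModel(0.420, 0.750)
```

Taking scalar arguments rather than a data frame keeps the function's signature close to what a web service caller would send: one named value per predictor.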

Now we'll deploy this function to AzureML as a web service.
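A sketch of what the deployment call might look like, wrapped in a function so that nothing is sent until you call it with real credentials. The `publishWebService()` argument order used here (function name as a string, service name, input schema, output schema, workspace ID, authorization token) reflects my understanding of the early AzureML package releases and should be treated as an assumption; later releases changed the interface, so check the documentation for the version you have installed. The names `scoreModel`, `scoreModelOnline`, and `deployModel` are hypothetical.

```r
# Schemas map each argument / return value name to an AzureML type string.
input.schema  <- list(SLG = "double", OPS = "double")
output.schema <- list(R_G = "double")

# Wrapped in a function so that no network call is made until it is invoked.
# Assumes the AzureML package is installed and that a function named
# "scoreModel" exists in the global environment (both are assumptions).
deployModel <- function(wsID, auth) {
  AzureML::publishWebService(
    "scoreModel",        # name of the R function to publish (hypothetical)
    "scoreModelOnline",  # name to give the web service (hypothetical)
    input.schema,
    output.schema,
    wsID,
    auth
  )
}

# With real credentials in place you would then run:
# response <- deployModel(myWsID, myAuth)
```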

Experienced R programmers might be a little thrown off by the use of "double" as a data type above, expecting perhaps "numeric" instead. These types seem to be specific to the AzureML API, with supported types listed in this document.

Finally, we write the response we got back from AzureML to the R Tool's output anchors. It contains the information we'll need to call the API (such as the API Location and Primary Key).

    # output response info
    webservice <- response[[1]]
    endpoints <- response[[2]]
    write.Alteryx(webservice, 1)
    write.Alteryx(endpoints, 2)

Run the workflow and that's it! Your model is now available in the cloud, ready for whoever has the Key to utilize via REST.

I've attached a package that contains a macro that generalizes the above approach. It detects the predictor and target variables and their associated data types from the model object. The macro currently works with the Linear Regression algorithm (without any additional options selected, such as "Omit a model constant") but should be easily extensible to include additional options and work with more predictive algorithms by swiping code from the Score Tool.
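The detection step can be sketched in plain R for an `lm` object. This is not the macro's code: the helper names `azure_type` and `model_schema` are hypothetical, the mapping from R classes to type strings is an assumption (only "double" is confirmed by the text above), and `mtcars` stands in for real training data.

```r
# Map an R column to a type string for the web service schema.
# Only "double" comes from the post; the other strings are assumed.
azure_type <- function(x) {
  if (is.integer(x)) "int"
  else if (is.numeric(x)) "double"
  else if (is.logical(x)) "bool"
  else "string"
}

# Pull target and predictor names and types from a fitted lm object.
model_schema <- function(model) {
  frame <- model.frame(model)      # the data the model was fitted on
  target <- names(frame)[1]        # the response is the first column
  predictors <- names(frame)[-1]
  list(
    input  = lapply(frame[predictors], azure_type),
    output = lapply(frame[target], azure_type)
  )
}

fit <- lm(mpg ~ wt + hp, data = mtcars)
schema <- model_schema(fit)
str(schema)
```

This only handles plain predictors; a formula with transformed terms such as `log(x)` would need extra handling, since the model frame then carries the transformed column names.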

Also included is a macro demonstrating how to query the deployed web service with the Download Tool and a workflow example of how to utilize both macros. This package was created with Alteryx 10.0, so you'll need the latest version plus the predictive tools to open it. Enjoy!

Acknowledgments

- Sean Lahman for the data used in the attached example

- Chris McHenry for his talk at the Denver R User Group

- Pretty R for the above R syntax highlighting

Sr Program Manager, Community Content

Neil Ryan (he/him) is the Sr Manager, Community Content, responsible for the content in the Alteryx Community. He held previous roles at Alteryx including Advanced Analytics Product Manager and Content Engineer, and had prior gigs doing fraud detection analytics consulting and creating actuarial pricing models. Neil's industry experience and technical skills are wide ranging and well suited to drive compelling content tailored for Community members to rank up in their careers.
