Maveryx Success Stories

Learn how Alteryx customers transform their organizations using data and analytics.STORIES WANTED

Showcase your achievements in the Maveryx Community by submitting a Success Story now!

SUBMISSION INSTRUCTIONS- Community

- :

- Community

- :

- Learn

- :

- Success Stories

- :

- Predicting and Trading on the Cryptocurrency Marke...

Predicting and Trading on the Cryptocurrency Markets using Alteryx

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Notify Moderator

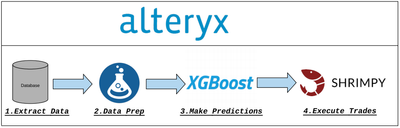

1. Extract Data:

- Our database is hosted on Google Cloud Platform and is a MySQL database

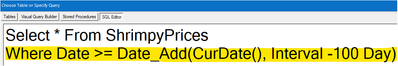

- For the predictive modeling, we will need to pull a "train" dataset, which will consist of all the data we collected relating to the cryptocurrency markets in the past, and we will limit the data to be from the past 100 days in order to keep the dataset to a reasonable size:

- When we intend to leverage the models after they are created in the alter steps, we will adjust this query to pull data from the latest hour instead of the latest 100 days, using a field created specifically for this reason called "pkDummy":

2. Data Prep:

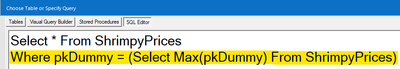

- Data is extracted from several different sources within the database using the methodology outlined in step 1, and some data manipulation is performed to join all the data together and prepare the dataset for the predictive modeling step:

- It's not worth taking too deep of a dive into the specifics of the data preparation, but it mainly consists of calculating the target variable, in this case the % change in USD price over the next 6 hour period for individual cryptocurrencies, and joining data across different data sources matching both on when the data was collected and for what asset.

3. Make Predictions:

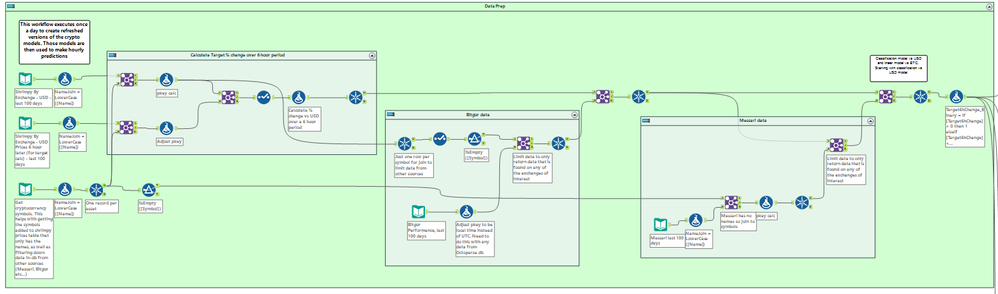

- Because the trades are executed on multiple exchanges, we create individual models for each exchange. Each exchange has an independent model created using the XGBoost framework programmed in R using the R developer tool. Additional models using the R Predictive tools found within Alteryx are also created:

- The XGBoost model itself is outside the scope of this tutorial, but see the Related Resources section at the end of this article for an excellent practical example of how to create an XGBoost model with automated hyperparameter tuning in R.

4. Execute Trades:

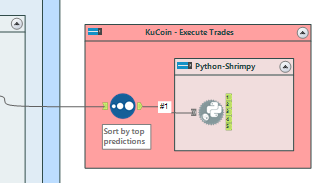

- Using the models created in step 3, predictions on the latest/live data are made. Before actually performing the trades, the predictions get finalized applying an 80% weight to the predictions made by the XGBoost models, and 20% weight to the predictions made by the Alteryx models, adjusting them as necessary based on observed performance. After making the necessary adjustments to the predicted values, the data is passed to a Python tool, which leverages the Shrimpy developer API to execute trades on each of the exchanges individually:

- We use the Shrimpy platform to execute our trades because it can be challenging to connect to the individual exchanges through different APIs, and the Shrimpy.io service allows us to connect to several different exchanges using the same API and methodology. There are also additional benefits to using the service, such as "rebalancing", and allowing other users to automatically follow the same portfolio as the one doing the automated trading. Go to the end of the article to the related resources section for more information about the very awesome Shrimpy.io service/platform.

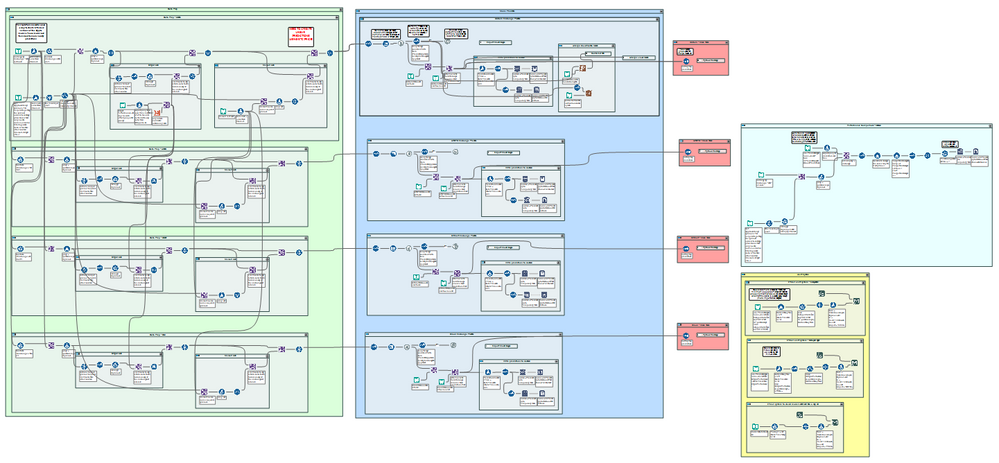

- Once we repeat the process for several exchanges independently, this is what the workflow that performs trades on the latest data ends up looking like:

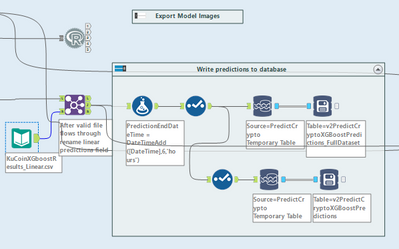

- Once the predictions are made, that information is written to a database table as a centralized location to then be able to reference to compare the predicted values vs. actuals:

- In this step we create new fields that summarize the DateTime of when the prediction end is supposed to be. For example, if a prediction was made at 3:00PM on November 23rd 2019, we would be predicting the % price change against the dollar from 3:00PM to 9:00PM.

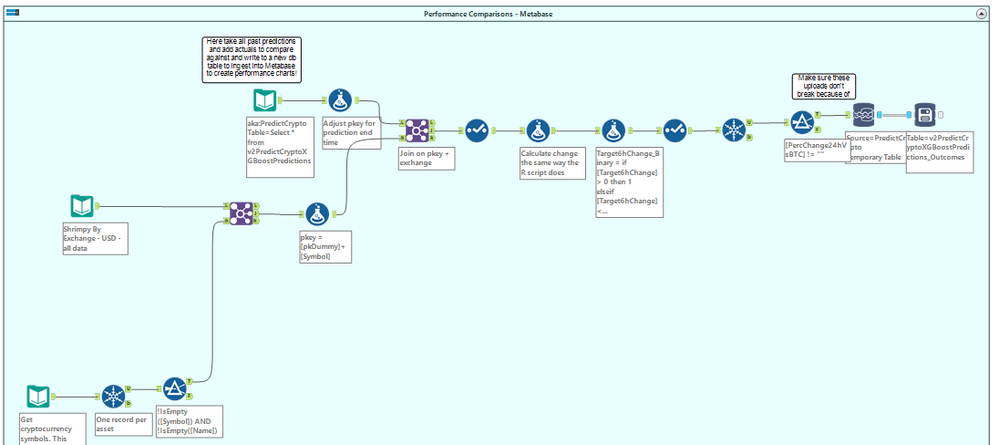

- After writing the predictions on November 23rd 2019 at 3:00PM (just an example), once 6 hours have passed, we can pickup the data using the Prediction End Date Time field and compare those predictions to the real life results and write the data to a table in the database for further analysis:

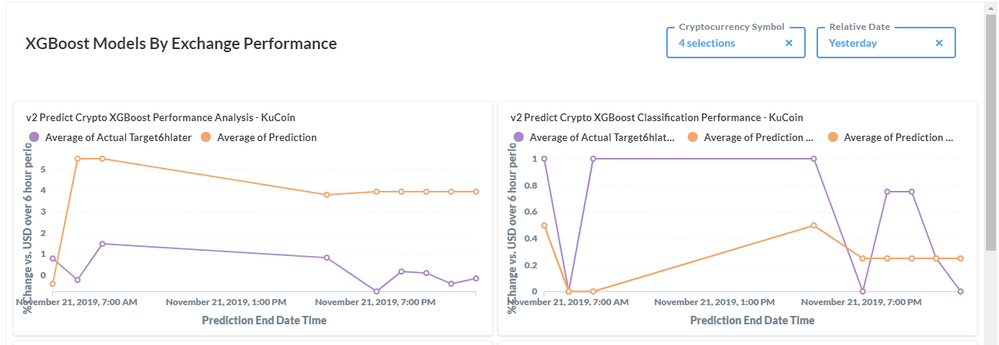

- Once that process is complete, the performance vs. actuals are visualized as interactive dashboards for internal analysis using an instance of Metabase running on AWS that works very similarly to how a Tableau Online site would work:

- https://blog.shrimpy.io/blog/the-best-threshold-for-cryptocurrency-rebalancing-strategies

- https://blog.shrimpy.io/blog/cryptocurrency-index-funds-step-by-step-guide

XGBoost:

- An XGBoost tutorial with hyperparameter tuning in R we recommend: https://rpubs.com/ippromek/336732

- XGBoost Python documentation: https://xgboost.readthedocs.io/

Metabase:

- Quick overview: https://www.metabase.com/docs/latest/getting-started.html

- Setup a Metabase environment on AWS in less than one hour: https://www.youtube.com/watch?v=wmJ02K8LIFk

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Notify Moderator

Hello Ries9112,

this is an awesome idea and great work. I have been trading currencies on and off in the past couple of years. I am so very much interested in this program.

I am wondering if it can be done for other non-crypt currencies.

is there any way to get the actual Alteryx process? how can I get more info?

thank you,

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Notify Moderator

Hi @koheny01, thanks for stopping by!

In terms of applying this process to trading on the forex markets, you wouldn't be able to re-purpose anything from the workflows themselves, and the trading step happens through a platform called Shrimpy which only supports cryptocurrency exchanges, but the general process would be the same so I am going to outline that instead:

- First you will need to create or find a dataset with historical data for the currencies you will be trading on. In this step already be thinking about the predictions/trades you want to make; how many minutes/hours/days out into the future should the prediction be for? In terms of what you are predicting, it probably makes the most sense to predict the % change against USD (or any other currency as long as you keep it consistent).

- Now that you have a dataset to work with, you can bring it into Alteryx and do your data prep work. For example, here you would go ahead and calculate/implement the % change vs. USD for the specific currencies for the prediction period. As a last step before creating your model, I would also create a split of the data where you only use 80% of the data to train the model and 20% to test how it's performing. Here is a quick screenshot of one way you could achieve this:

(keep an eye on the fact that I am taking the R side of the join to get everything that was in the original data but not found once the 80% sample is taken to get to the remaining 20% of the data)

- Now you want to create the model you will use to make your predictions. This will vary wildly based on what you are trying to achieve, and there are a ton of great resources in the realm of making predictions, but one extremely powerful framework you could test is XGBoost. It tends to perform quite well on a wide range of problems. One way you could get started, is by taking a more "black-box" version of the model by doing automated hyper-parameter tuning for the model; meaning, the code will produce several versions of the models tweaking hyperparameters automatically, and going with the best version of the model. Be careful of overfitting and try to get a better understanding of what works and what does not over time. Below is the code in R used to make the XGBoost model as described:

library(tidyverse) # data manipulation library(mlr) # ML package (also some data manipulation) library(xgboost) train <- read.Alteryx("#1", mode = "data.frame") test <- read.Alteryx("#2", mode = "data.frame") # Fitting XGBoost trainTask <- makeClassifTask(data = train, target = "Target6hChange_Binary", positive = 1) testTask <- makeClassifTask(data = test, target = "Target6hChange_Binary") set.seed(1) # Create an xgboost learner that is classification based and outputs labels (as opposed to probabilities) xgb_learner <- makeLearner( "classif.xgboost", predict.type = "prob", par.vals = list( objective = "binary:logistic", eval_metric = "auc", nrounds = 300, early_stopping_rounds = 30 ) ) # Create a model xgb_model <- train(xgb_learner, task = trainTask) result <- predict(xgb_model, testTask) head(result$data) # Hyper-parameter Tuning # Full list of parameters and explanations: https://xgboost.readthedocs.io/en/latest/parameter.html # Could also run the following code to get a list with value ranges: getParamSet("classif.xgboost") xgb_params <- makeParamSet( # The number of trees in the model (each one built sequentially) # makeIntegerParam("nrounds", lower = 50, upper = 500), # number of splits in each tree makeIntegerParam("max_depth", lower = 1, upper = 10), # "shrinkage" - prevents overfitting makeNumericParam("eta", lower = .1, upper = .5), # L2 regularization - prevents overfitting makeNumericParam("lambda", lower = -1, upper = 0, trafo = function(x) 10^x), # Type of booster makeDiscreteParam("booster", values = c("gbtree", "gblinear", "dart")), # Additional parameters to test makeNumericParam("min_child_weight", lower = 1L, upper = 10L), makeNumericParam("subsample", lower = 0.5, upper = 1), makeNumericParam("colsample_bytree", lower = 0.5, upper = 1), # Test error type makeDiscreteParam("eval_metric", values = c("auc", "rmse", "logloss")) ) # Set Random or grid control. Grid control does 100% of options and is crazy computationally expensive, # but could set less parameter options and do that instead. Change "Random" to "Grid" and pass no parameters # and should work the same otherwise control <- makeTuneControlRandom(maxit = 30) # Create a description of the resampling plan resample_desc <- makeResampleDesc("CV", iters = 5) # Perform tuning tuned_params <- tuneParams( learner = xgb_learner, task = trainTask, resampling = resample_desc, par.set = xgb_params, control = control ) # Create a new model using tuned hyperparameters xgb_tuned_learner <- setHyperPars( learner = xgb_learner, par.vals = tuned_params$x ) # Re-train parameters using tuned hyperparameters (and full training set) xgb_model <- train(xgb_tuned_learner, trainTask) # Make a new prediction result <- predict(xgb_model, testTask) prediction <- result$data %>% select(pkey = id, Target6hChange_Binary = response) %>% # No sorting has happened, so everything still matches up. mutate(pkey = test$pkey) # Output data to Alteryx write.Alteryx(as.data.frame(prediction), 1) #Save xgb model save.image(file="C:\\Users\\Ricky\\Desktop\\CryptoWork2019\\November\\XGBoostModelsByExchange\\KuCoinXGBoostModel.RData")This code was adapted from this excellent tutorial: https://rpubs.com/ippromek/336732

- Now that you have a saved model, you will need to figure out a way to create a fast enough method to create a new set of data, which will need to match the "train" used in step #3 in terms of data preparation steps, to make predictions on (again, in terms of the % change in price over the prediction time period). This is the most challenging step of the process, as in a lot of cases it's not about making a good prediction but about being the fastest one to take action.

- Once you have figured out how to take care of step #4, you will go ahead and make predictions on the new data using similar code to before (once again in R but XGBoost is available in Python):

library(tidyverse) # data manipulation library(mlr) # ML package (also some data manipulation) library(xgboost) test <- read.Alteryx("#1", mode = "data.frame") # Load XGBoost model which is refreshed once a day load("C:\\Users\\Ricky\\Desktop\\CryptoWork2019\\November\\XGBoostModelsByExchange\\KuCoinXGBoostModel.RData") # Create test task testTask <- makeClassifTask(data = test, target = "Target6hChange_Binary") # Make a new prediction result <- predict(xgb_model, testTask) prediction <- result$data %>% select(pkey = id, Target6hChange_Binary = response) %>% # Put back the original passenger IDs. No sorting has happened, so # everything still matches up. mutate(pkey = test$pkey) # Print preview of predictions print(head(prediction)) # Output data to Alteryx write.Alteryx(as.data.frame(prediction), 1) - Do whatever quick data prep is necessary. For example, if you are looking to trade on the top 3 predictions made, you could sort it by the prediction % descending and then only select rows 1-3 and use that information to initiate trades in the final step.

- Finally, your last step would be to connect to some sort of exchange or service in order to execute the trades. Typically you will be able to do this using an API provided by the exchange, and in some cases (like ours), there will be a developer that creates some sort of library that makes it easier to interact with the API, so if that's an option it's worth looking into it.

I hope this helps and makes sense, I shared what I felt like was useful and relevant to your question and I'm not sharing the workflow itself for now because I honestly don't think it would be very useful as nothing would run with all the database connections breaking, but if there is a demand for it I will give it some thought and try to make some form of template available. Let me know if you are still looking for more info, but I should definitely mention that it took a lot of time and effort to setup the infrastructure to get the data to flow into the database used for this post in close to live time, and although there have been some positive results from the predictive modeling itself, it has not yet translated into a consistently positive trading strategy, so I would encourage you to do a project like this to enhance your learning rather than assuming it will actually end up working.

Let me know if you have any questions!

Ricky

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Notify Moderator

Hello Ricky,

thank you so very much for your great detail response.

I am relatively new with Alteryx. it will take a while for me to digest what you wrote me. it's above my paycheck !! 🙂

However, I will certainly look into this. my broker is OANDA. As much as I know, they have a great databases and I need to discover what is suitable and what is not.

I will be in touch with you if I have any update.

All the best,

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Notify Moderator

This is an amazing and uniquely creative post, Riccardo @ries9112 !

My colleague has actually written a Data Science blogpost regarding building an XGBoost macro (both in R & Python). Do check it out here: https://community.alteryx.com/t5/Data-Science-Blog/Expand-Your-Predictive-Palette-XGBoost-in-Alteryx...

Best,

Michael Utama

Associate Solutions Engineer

Alteryx APAC

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Notify Moderator

Thank you @mutama! That's a seriously awesome post and an even better macro! I actually stumbled upon it in the past and I've used the R one (could never quite figure out the installation of things on the Python side of things at the time) and I definitely should have mentioned that macro is available in my use case. I didn't use it because I am quite good in R and had experience using XGBoost models, but that's a no-brainer for others who don't have that comfort level and I should have thought to make that adjustment. I just made a note to myself to include that within the use case; I plan on doing some more additional work on the section describing the benefits achieved, and once I reach out to the moderator of the post to make those changes I will be sure to make this adjustment as well and include the excellent Data Science blogpost.

Thank you for reaching out about that!

Ricky

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Notify Moderator

Awesome workflow! I was trying to zoom in on some of the pictures you posted but can see it that well. Is it possible to post the workflow file? I realize I don't have some of the same macros installed but I'd still like to see the process. Thank you!

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Notify Moderator

Hey! Can you share the workflow please?

-

Adobe

2 -

Alteryx Connect

5 -

Alteryx Designer

202 -

Alteryx for Good

1 -

Alteryx Promote

2 -

Alteryx Server

66 -

Alteryx using Alteryx

29 -

Americas

158 -

Analyst

108 -

Analytics Leader

54 -

Asia Pacific

29 -

AWS

9 -

BI + Analytics + Data Science

100 -

Business Leader

37 -

C-Leader

18 -

Data Prep + Analytics

230 -

Data Science + Machine Learning

113 -

Data Scientist

14 -

Department: Other

14 -

Education

18 -

Energy + Utilities

5 -

Europe + Middle East + Africa

58 -

Experian

2 -

finance

29 -

Financial Services

33 -

Healthcare + Insurance

21 -

Human Resources

19 -

Information Technology

25 -

IT

31 -

Life Sciences + Pharmaceuticals

3 -

Manufacturing

20 -

Marketing

16 -

Media + Entertainment

12 -

Microsoft

52 -

Operations

38 -

Other

10 -

Process Automation

59 -

Professional Services

69 -

Public Sector

15 -

Qlik

1 -

Retail + CPG

32 -

Sales and Service

24 -

Salesforce

9 -

SAP

11 -

Snowflake

6 -

Tableau

71 -

Tech Partner: Other

86 -

Technology

34 -

Telecommunications

5 -

Teradata

5 -

Thomson Reuters

1 -

Transportation + Logistics

25 -

Travel + Hospitality

4 -

UiPath

1

- « Previous

- Next »