Alteryx Designer Desktop Ideas

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))
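As an illustration of the second request (an equal number of images per label), here is a minimal sketch in plain Python that downsamples every label to the size of the smallest one. The function name `balance_by_label`, the `(path, label)` pair layout, and the file names are assumptions made for this example; they are not part of the Image Recognition tool.

```python
import random
from collections import defaultdict

def balance_by_label(items, seed=0):
    """Downsample so that every label keeps the same number of items.

    items is a list of (path, label) pairs (hypothetical layout).
    """
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)
    if not by_label:
        return []
    # The rarest label decides how many items each label may keep.
    n = min(len(paths) for paths in by_label.values())
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    balanced = []
    for label, paths in by_label.items():
        balanced.extend((p, label) for p in rng.sample(paths, n))
    return balanced

# Hypothetical training set: three "cat" images, one "dog" image.
images = [("c1.png", "cat"), ("c2.png", "cat"), ("c3.png", "cat"), ("d1.png", "dog")]
balanced = balance_by_label(images)
```

Downsampling discards data; oversampling the rarer labels would be the other obvious choice, and which one fits depends on the data set.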

-

Category Transform

-

Desktop Experience

I have a process that joins 3 data sets to identify a specific group of data and apply certain rules. From the resulting file, I need to extract the data (not the headings) from specific columns and insert it into an already existing template. The template has formatting that needs to remain in place for it to function.

Is this possible?

-

Category Join

-

Category Transform

-

Desktop Experience

Hi,

Recently I was helping a client design a transformation workflow. In the middle of the work, I felt a bit lost handling so many fields, and thought it would be great to have a feature that lets me track field actions along the workflow. It could be a setting on the canvas that users activate only when they want to.

And when it is activated, the workflow could become:

This would make it easier to trace the path of a given field through the whole workflow.

Or is there any method to achieve this at the moment?

Thanks.

Kenneth

-

Category Input Output

-

Category Transform

-

Data Connectors

-

Desktop Experience

It would be nice if Designer customers could upload and reference a "DATA DICTIONARY - METADATA REPOSITORY" file when working with various input sources to transform data.

Organizations that are mature in their data governance strategy implement special software, such as ERWIN, that extracts, manages, and provides access to a data dictionary of data assets across multiple databases in order to maintain the enterprise schema.

With access to a METADATA REPOSITORY file held in DESIGNER, a customer could easily select a list of columns, fields, or attributes from that file to manipulate data elements, with all the relevant information they need to massage the data.

Possible attributes that may be in the data dictionary file:

Table name

Column Name

Data Type

Foreign Table

Source

Table Description

Sensitive Data

Required Field

Values

-

Category Transform

-

Desktop Experience

Hello Community,

I was wondering if there is a tool that could de-duplicate records after serializing (or after using Transpose Tool) with a given priority for each field in one of the keys? i.e.

| ID | Origin | Field Name | Value |

| 1 | A | NAME | JACK |

| 1 | B | NAME | PETER |

| 1 | B | ZIP CODE | 15024 |

| 1 | C | ZIP CODE | 15024 |

| 1 | D | TYPE | MID |

| 1 | H | TYPE | PKL |

Assuming for the field name NAME, the priority should be [ A, B ]

ZIP CODE -> [ C, B ]

TYPE -> [ H, D ]

The expected outcome for Id 1 should be -> JACK, 15024, PKL

Record discarded -> PETER, 15024, MID

In this case I'm using ID and Origin as keys in the Transpose Tool.

I just want to make sure there is no other route than the Python Tool.

Thank you

Luis
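For reference, the priority rule described above can be sketched in a few lines of plain Python, for example inside the Python Tool. This is only an illustration under stated assumptions: the function name `dedupe_by_priority` is made up, records are modeled as dictionaries using the column names from the table, and origins missing from a priority list are ranked last.

```python
# Long-format records as they appear in the table above.
records = [
    {"ID": 1, "Origin": "A", "Field Name": "NAME", "Value": "JACK"},
    {"ID": 1, "Origin": "B", "Field Name": "NAME", "Value": "PETER"},
    {"ID": 1, "Origin": "B", "Field Name": "ZIP CODE", "Value": "15024"},
    {"ID": 1, "Origin": "C", "Field Name": "ZIP CODE", "Value": "15024"},
    {"ID": 1, "Origin": "D", "Field Name": "TYPE", "Value": "MID"},
    {"ID": 1, "Origin": "H", "Field Name": "TYPE", "Value": "PKL"},
]

# Preferred origins per field name, best first (taken from the post).
PRIORITY = {
    "NAME": ["A", "B"],
    "ZIP CODE": ["C", "B"],
    "TYPE": ["H", "D"],
}

def dedupe_by_priority(rows, priority):
    """Keep, per (ID, Field Name), the row whose Origin ranks highest."""
    def rank(row):
        order = priority.get(row["Field Name"], [])
        origin = row["Origin"]
        # Origins missing from the list sort after all listed ones.
        return order.index(origin) if origin in order else len(order)

    best = {}
    for row in rows:
        key = (row["ID"], row["Field Name"])
        if key not in best or rank(row) < rank(best[key]):
            best[key] = row
    kept = list(best.values())
    discarded = [row for row in rows if not any(row is k for k in kept)]
    return kept, discarded

kept, discarded = dedupe_by_priority(records, PRIORITY)
```

With the sample data, `kept` holds the JACK, 15024 (origin C), and PKL rows, and `discarded` holds PETER, 15024 (origin B), and MID, matching the expected outcome in the post.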

-

Category Transform

-

Desktop Experience

My peers at work and I were thinking that it might be good to expand the Join tool, both for desktop and in-database joins.

Desktop join: the left and right outputs show only the records that are exclusive to that side of the operation. Would it be possible to also include the data that is in common?

In-DB join: the join acts like a classic join (left with matching data, right with matching data). Would it be possible to also get left-only and right-only joins?
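To make the two requests concrete, the sketch below splits two inputs into left-only, matched, and right-only sets, and then builds a classic left join from the first two. It is plain Python over lists of dictionaries; the function name `split_join` and the sample data are assumptions for illustration, not Designer behavior.

```python
from collections import defaultdict

def split_join(left, right, key):
    """Return (left_only, matched, right_only) for two lists of dicts."""
    right_by_key = defaultdict(list)
    for r in right:
        right_by_key[r[key]].append(r)
    left_keys = {l[key] for l in left}

    left_only, matched = [], []
    for l in left:
        partners = right_by_key.get(l[key])
        if partners:
            # One output row per matching pair, as in a SQL inner join.
            matched.extend({**l, **r} for r in partners)
        else:
            left_only.append(l)
    right_only = [r for r in right if r[key] not in left_keys]
    return left_only, matched, right_only

left = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}]
right = [{"id": 2, "city": "x"}, {"id": 3, "city": "y"}]

left_only, matched, right_only = split_join(left, right, "id")
# A classic left join is the unmatched left rows plus the matched rows.
left_join = left_only + matched
```

The desktop request corresponds to `left_join` (exclusive rows plus common rows), while the in-DB request corresponds to exposing `left_only` and `right_only` on their own.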

-

Category In Database

-

Category Join

-

Category Parse

-

Category Transform

Thanks.

-

Category Transform

-

Desktop Experience

Hi All,

IT will typically have invested time and effort building workflows and other components with tools that became deprecated in the latest versions.

It is good to have the deprecated versions still available to keep the code backward compatible, but at the same time there should be an option whereby a deprecated tool can be promoted to the new tool without impacting the code.

Following are the benefits of this approach -

1) The IT team can leverage the benefits of the new tool over existing and deprecated tools. For example, I use the Salesforce connectors extensively, and I believe that, unlike the existing ones, the new ones use the Bulk API and are therefore much faster.

2) It will save IT from reconfiguring/recoding the existing code and would save them considerable time.

3) As the tool keeps moving forward in its journey, it might make more sense to actually remove some of the deprecated tool versions (i.e. I believe the plan would not be to have, say, 5 working sets of Salesforce Input connectors, including deprecated ones). With this approach in place, I think IT would be comfortable with the removal of deprecated connectors, as they would have the promote option without impacting existing code, so the change would ideally take minimal time.

In addition, if some configurations have changed with the new tools (ideally only minor ones), those changes can be published, and IT can be given the option to configure them as part of the promotion.

Thanks,

Rohit Bajaj

-

Category Connectors

-

Category Transform

-

Data Connectors

-

Desktop Experience

In SQL, obtaining partial sums in a grouped aggregation is as simple as adding "WITH ROLLUP" to the GROUP BY clause.

Could we get a "WITH ROLLUP" checkbox in the Summarize tool's configuration panel in order to produce partial sums?

(This was initiated as a question here.)

Here is an example of without rollup vs. with rollup, in SSMS:

(Replace "NULL" with the phrase "(any)" or some such, and we have a very useful set of partial sums.)
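For readers unfamiliar with the SQL feature, here is a minimal sketch in plain Python of what a rollup produces: the usual per-group sums plus one subtotal per leading key and a grand total, with rolled-up positions set to `None` in place of SQL's NULL. The function name `group_with_rollup` and the sample sales data are assumptions for illustration.

```python
from collections import defaultdict

def group_with_rollup(rows, keys, value):
    """Sum `value` per group, plus subtotals in the style of WITH ROLLUP.

    Rolled-up key positions are filled with None, mirroring SQL's NULL.
    """
    totals = defaultdict(float)
    for row in rows:
        full = tuple(row[k] for k in keys)
        # Prefix lengths len(keys)..0: detail row, subtotals, grand total.
        for depth in range(len(keys), -1, -1):
            rolled = full[:depth] + (None,) * (len(keys) - depth)
            totals[rolled] += row[value]
    return dict(totals)

# Hypothetical input data.
sales = [
    {"region": "East", "product": "A", "amount": 10},
    {"region": "East", "product": "B", "amount": 5},
    {"region": "West", "product": "A", "amount": 7},
]
result = group_with_rollup(sales, ["region", "product"], "amount")
```

Here `result[("East", None)]` is the East subtotal and `result[(None, None)]` is the grand total. A real key value of `None` would collide with the subtotal rows, which is the same ambiguity the "(any)" relabeling in the post is meant to avoid.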

-

Category Transform

-

Desktop Experience

It would help to have an option to test the outcome of a formula while building it, rather than creating dummy workflows with dummy data to test it.

For instance, there could be a dynamic window that generates input fields based on those used in the actual formula; one could provide test values there and click a 'Test' button to check the output within the tool itself.

This would also be very handy when writing big or complex formulas involving regular expressions, so that a user can test her formula without having to switch screens to third-party on-the-fly testing tools, run the entire original workflow, or create test workflows.

-

Category Data Investigation

-

Category Preparation

-

Category Transform

-

Desktop Experience

Hi,

I think that the Sample tool should have T and F ports.

Let's say I want to keep the first N records but would like to stream the rest of the data (the records that were not sampled) somewhere else in my workflow. It's possible, but it would be easier to have that in the Sample tool.

Simon

Korem
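The requested behavior amounts to a two-way split rather than a filter. A minimal sketch in plain Python, with the hypothetical name `split_first_n`:

```python
def split_first_n(rows, n):
    """Return (sampled, remainder): the first n rows and everything else."""
    rows = list(rows)
    return rows[:n], rows[n:]

# "T" side gets the first 3 records, "F" side gets the rest.
sampled, remainder = split_first_n(range(1, 8), 3)
```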

-

Category Transform

-

Desktop Experience

Currently only DateTime-based functions are available. Time-based functions, such as TimeAdd() and TimeDiff(), should be introduced.

This would help users a lot with time-based calculations.

Ashok Bhatt
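As a sketch of what such functions might do, the Python below adds seconds to a time of day and computes the difference between two times. The names `time_add` and `time_diff`, the choice of seconds as the unit, and the wrap-around at midnight are all assumptions for illustration; the post does not specify the semantics.

```python
from datetime import date, datetime, time, timedelta

_ANCHOR = date(2000, 1, 1)  # arbitrary date used only to do the arithmetic

def time_add(t, seconds):
    """Add seconds to a time of day, wrapping around midnight."""
    return (datetime.combine(_ANCHOR, t) + timedelta(seconds=seconds)).time()

def time_diff(later, earlier):
    """Signed difference later - earlier in seconds, within one day."""
    delta = datetime.combine(_ANCHOR, later) - datetime.combine(_ANCHOR, earlier)
    return int(delta.total_seconds())

wrapped = time_add(time(23, 30), 3600)          # one hour past 23:30
gap = time_diff(time(10, 0), time(9, 15))       # 45 minutes, in seconds
```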

-

Category Transform

-

Desktop Experience

-

Category Transform

-

Desktop Experience

Today I got stuck when my current module gave an error because it couldn't find the field names. I define the field names in the Formula tool for validation and harmonization, so when my fields change, the formula changes too. But I do not want to make any changes to my module.

So I am thinking it would be better if we could define a formula in a file (such as .xlsx or .csv) and use it as input to the Formula tool.

Then we would not have to change the module again and again. We would only need to update the mapping file to match the latest incoming file, checking the file and defining the formula in the mapping file.

Thanks in advance.

-

Category Transform

-

Desktop Experience

We had a workflow where we needed to count business days. The standard solution of generating a row for each day between the dates wouldn't work, as it would slow down the workflow too much.

Something that takes 5 seconds in Excel turned into a tremendous pain.

It would be really nice to have a built-in tool where you can input the start date and end date (or the fields they are tied to),

which days of the week are considered business days and which are not,

and which holidays should be excluded, with the option to add custom holidays.
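The count can be computed arithmetically, without generating one row per day. The sketch below is one way to do it in plain Python; the function name `business_days`, the half-open range (start included, end excluded), and the parameter names are assumptions for illustration.

```python
from datetime import date

def business_days(start, end, workdays=(0, 1, 2, 3, 4), holidays=()):
    """Count business days in the half-open range [start, end).

    workdays uses date.weekday() numbering (Monday=0 ... Sunday=6).
    """
    total_days = (end - start).days
    if total_days <= 0:
        return 0
    full_weeks, extra = divmod(total_days, 7)
    count = 0
    for wd in set(workdays):
        # Each workday occurs once per full week, plus once more if it
        # falls inside the leftover partial week.
        count += full_weeks
        if (wd - start.weekday()) % 7 < extra:
            count += 1
    # Remove holidays that land on a workday inside the range.
    for h in set(holidays):
        if start <= h < end and h.weekday() in workdays:
            count -= 1
    return count

# Monday 2024-01-01 up to (not including) Monday 2024-01-08.
week = business_days(date(2024, 1, 1), date(2024, 1, 8))
week_with_holiday = business_days(
    date(2024, 1, 1), date(2024, 1, 8), holidays=[date(2024, 1, 1)]
)
```

The work per date pair depends only on the number of workdays and holidays supplied, not on the length of the date range.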

-

Category Transform

-

New Request

For the Summarize tool, allow the user to define the data type of the output field.

-

Category Transform

-

Desktop Experience

Hi

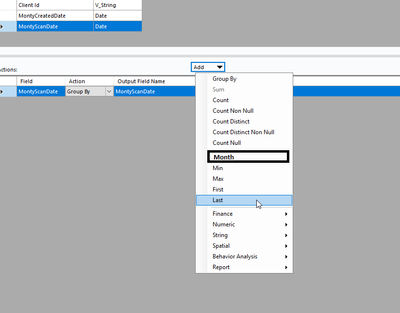

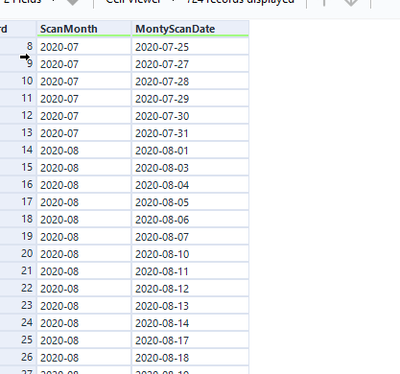

In all its simplicity, I would like to be able to group by Month based of dates:

To acieve something like this:
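In code terms, the request is to derive a month key from each date and aggregate on it. A minimal sketch in plain Python, where the function name `sum_by_month`, the `(date, amount)` row layout, and the sample values are assumptions for illustration:

```python
from collections import defaultdict
from datetime import date

def sum_by_month(rows):
    """Sum amounts per calendar month, keyed as 'YYYY-MM'."""
    totals = defaultdict(float)
    for day, amount in rows:
        totals[day.strftime("%Y-%m")] += amount
    return dict(sorted(totals.items()))

# Hypothetical input rows.
rows = [
    (date(2023, 1, 5), 10.0),
    (date(2023, 1, 20), 2.5),
    (date(2023, 2, 1), 4.0),
]
monthly = sum_by_month(rows)
```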

-

Category Transform

-

Desktop Experience

The Summarize tool returns NULL when performing a Mode operation. This doesn't seem to be documented anywhere in the Alteryx documentation or on the Community. Please fix this behaviour.

-

AMP Engine

-

Category Transform

-

Desktop Experience

-

Engine

Hello

I have searched the community but haven't found any obvious solutions to this.

When using the Cross Tab tool, I often find that no values should be aggregated, and if any are, it means there is an issue with my data or workflow.

Therefore, I think a useful feature would be an option for the Cross Tab tool to return an error if it tries to aggregate any values.

I have a workaround: using a Summarize tool to count the non-unique records and then a test to see whether there are any duplicates. Still, I think this could be a useful addition to the tool.

Thanks
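The requested option can be pictured as a pivot that refuses to aggregate. The sketch below is plain Python, with the hypothetical name `strict_crosstab` and made-up sample data; it raises an error as soon as two records map to the same cell instead of combining them.

```python
def strict_crosstab(rows, group, header, value):
    """Pivot rows wide, raising instead of aggregating on duplicates."""
    table = {}
    for row in rows:
        cells = table.setdefault(row[group], {})
        if row[header] in cells:
            raise ValueError(
                f"duplicate value for {group}={row[group]!r}, "
                f"{header}={row[header]!r}"
            )
        cells[row[header]] = row[value]
    return table

# Hypothetical input: one value per (id, metric) pair.
clean = [
    {"id": 1, "metric": "height", "val": 10},
    {"id": 1, "metric": "width", "val": 4},
    {"id": 2, "metric": "height", "val": 12},
]
wide = strict_crosstab(clean, "id", "metric", "val")
```

Adding a second `height` record for `id` 1 to the input would raise `ValueError` rather than silently summing or concatenating the two values.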

-

Category Transform

-

Desktop Experience

-

Enhancement

It would be helpful to be able to toggle the way the Mode calculation handles two or more "ties." Currently, if there is a tie between records, the lower value is returned. I have a use case where I would rather have the higher value returned if there is a tie. I could also see a use for an average of the tied records. Ideally, I think there would be three options for a tie: use the 1. lowest value 2. highest value 3. average of tied values. I'm not sure if first/last would also be helpful to have as options.

My use case is for product dimensions. We use the mode to normalize the dimensions (height, width, depth) of products. Because we are using the dimensions for space planning, if the lower value is used there may not be enough space for the product on the shelf. We would rather use the higher of the tied values to make sure we aren't creating a plan where the products won't fit.
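The three tie-handling options can be sketched as follows in plain Python. The function name `mode_with_ties`, the `tie` parameter, and the sample dimensions are assumptions for illustration, not part of the Summarize tool.

```python
from collections import Counter

def mode_with_ties(values, tie="lowest"):
    """Return the mode, resolving ties by the chosen rule."""
    counts = Counter(values)
    if not counts:
        raise ValueError("mode of empty data")
    top = max(counts.values())
    tied = [v for v, c in counts.items() if c == top]
    if tie == "lowest":
        return min(tied)
    if tie == "highest":
        return max(tied)
    if tie == "average":
        return sum(tied) / len(tied)
    raise ValueError(f"unknown tie rule: {tie!r}")

# Hypothetical product widths where 10 and 12 are tied for most frequent.
widths = [10, 10, 12, 12, 11]
safe_width = mode_with_ties(widths, tie="highest")
```

For the space-planning case described above, `tie="highest"` picks the larger of the tied dimensions so that the product is never planned into a space that is too small.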

-

Category Transform

-

Desktop Experience
