Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


When building custom tools for Alteryx using the Python SDK, there is currently no way to test them outside of Alteryx Designer.

This means that your development process is:

- write some code (with no code sense, IntelliSense, or auto-complete, because Jupyter, VS Code, Visual Studio, etc. cannot access AlteryxEngine or any of the other imports)

- hope

- copy that .py module into your C:\Users\<username>\AppData\roaming\Alteryx\Tools\<toolname>

- fire up Alteryx

- drop this new custom tool on a canvas

- run it to see if you get any errors

- then copy these errors out of Alteryx result window into Notepad to be able to read them

- then go back into your development environment to make changes

- repeat.

This is very painful, and it will scare most people away from learning how to create custom tools, since it is not only inefficient but also intimidating and frustrating for beginners.

Proposal:

Could we instead have mock Python libraries and a development harness in this SDK (like Google provides for Android development in Eclipse) where:

- you have full code intelligence (IntelliSense, autocomplete)

- you can simulate engine events in a test harness (for example, in the Android SDK you can simulate the user rotating their phone, turning off GPS, hitting a volume button, etc.)

- you can also write test cases which can run automatically

- then, once you know that your tool will work, only then do you drop it into the Alteryx Designer environment.

NOTE: This IDE way of thinking also allows you to bring the configuration pieces (like the number of inputs, etc.) out of raw code and into configuration options.

Although you may be able to do remote debugging with platforms like PyCharm, that does not really give you the full ability to check in the code of your tool along with all its test cases, in a harness that lets you automatically check different events and make sure that your tool works before deploying.
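To make the proposal concrete, here is a minimal sketch of such a harness, assuming a hand-written stand-in for the `AlteryxPythonSDK` module. Everything in it (`FakeEngine`, `FakeMessageType`, `install_fake_sdk`, `ToyPlugin`, `run_harness`) is hypothetical and not part of the SDK; only the `output_message(tool_id, message_type, text)` call shape is modeled on how plugins report messages to the engine, and even that should be checked against the SDK documentation.

```python
import sys
import types
import unittest
from enum import Enum


class FakeMessageType(Enum):
    """Hypothetical stand-in for the engine's message severity levels."""
    info = 1
    warning = 2
    error = 3


class FakeEngine:
    """Hypothetical engine double that records messages instead of displaying them."""

    def __init__(self):
        self.messages = []

    def output_message(self, tool_id, message_type, text):
        self.messages.append((tool_id, message_type, text))


def install_fake_sdk():
    """Register a fake 'AlteryxPythonSDK' module so plugin imports resolve outside Designer."""
    fake = types.ModuleType("AlteryxPythonSDK")
    fake.EngineMessageType = FakeMessageType
    fake.AlteryxEngine = FakeEngine
    sys.modules["AlteryxPythonSDK"] = fake
    return fake


install_fake_sdk()
import AlteryxPythonSDK as Sdk  # resolves to the fake module registered above


class ToyPlugin:
    """Toy tool logic (hypothetical): reports an error when no field is configured."""

    def __init__(self, tool_id, engine):
        self.tool_id = tool_id
        self.engine = engine

    def validate(self, field_name):
        if not field_name:
            self.engine.output_message(
                self.tool_id, Sdk.EngineMessageType.error, "No field selected"
            )
            return False
        return True


class ToyPluginTest(unittest.TestCase):
    def test_missing_field_reports_error(self):
        engine = Sdk.AlteryxEngine()
        plugin = ToyPlugin(tool_id=7, engine=engine)
        self.assertFalse(plugin.validate(""))
        self.assertEqual(
            engine.messages, [(7, Sdk.EngineMessageType.error, "No field selected")]
        )


def run_harness():
    """Run the test case in-process and report whether everything passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ToyPluginTest)
    return unittest.TextTestRunner(verbosity=0).run(suite).wasSuccessful()
```

Calling `run_harness()` from any IDE or CI job exercises the tool logic without starting Designer. An official mock library would of course need to cover far more of the engine's behavior than this sketch does.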

Thank you

cc: @BlytheE @SteveA @Ozzie @tlarsen7572 @cam_w @jdunkerley79

- API

- SDK

In response to my question here: https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Singin-Error-to-Tableau-server-using-P...

The Publish to Tableau Server tool does not support SAML/SSO. I would like this support to be added to the tool, as it would make our business process more efficient.

Thank you.

- API

API security requirements are constantly evolving and strengthening. As API architectures migrate from traditional authentication models (Basic, OAuth, etc.) to more secure, certificate-based models like MTLS/MSSL, leveraging Alteryx Designer will become increasingly difficult, especially for larger organizations trying to scale the use of Alteryx across a large user base with vastly diverse skill sets.

I realize issuing API calls with certificates is possible via the Run Command tool. We consider this a temporary workaround, and not a permanent, strategic solution. The Run Command tool can be clunky to use when passing in variables and passing the output back into the workflow for downstream processing.

Therefore, I would like to request a more scalable approach to issuing MTLS/MSSL API calls. Can an option be added to the Download Tool to allow for certificates to be passed on API calls?
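For readers unfamiliar with what "passing a certificate on an API call" involves, here is a minimal sketch in plain Python using only the standard library. It is not how the Download Tool works and not an Alteryx feature; the function name `build_mtls_opener`, the file names, and the URL in the comments are placeholders chosen for illustration.

```python
import ssl
import urllib.request


def build_mtls_opener(cert_file, key_file, ca_file=None):
    """Return a urllib opener that presents a client certificate on HTTPS calls."""
    # Verify the server as usual; ca_file=None falls back to the system trust store.
    context = ssl.create_default_context(cafile=ca_file)
    # Attach the client certificate and private key used for mutual TLS.
    context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return urllib.request.build_opener(urllib.request.HTTPSHandler(context=context))


# Example use (placeholder paths and URL, shown as comments because they need real files):
# opener = build_mtls_opener("client.pem", "client.key")
# with opener.open("https://api.example.com/v1/items") as response:
#     body = response.read()
```

The request itself is unchanged; the only addition is the certificate and key attached to the TLS context, which is the piece the Download Tool would need to accept as configuration.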

- API

- Tool Improvement

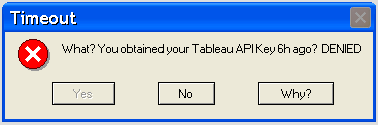

The current version of the Publish to Tableau macro retrieves an API token at the start of the workflow run. Often the workflow takes several hours to run before it is ready to write to Tableau, by which time the token may have expired. (I think the default Tableau Server setting times out after 2 hours.) It's one of those soul-crushing "I should've forked the output!" moments.

Sample Log Error -

- Tool #46: TableauServer.UploadChunks (238): Iteration #1: Tool #19: Tool #4: Tableau Server API Request (Upload file) Error Code 401002: Unauthorized Access -- Invalid authentication credentials were provided.

- Tool #46: Tool #252: Tool #4: Tableau Server API Request (Publish file) Error Code 401002: Unauthorized Access -- Invalid authentication credentials were provided.

The idea would be to change when the macro obtains the API token: instead of obtaining it when the workflow is initiated, it would obtain it just before the workflow is ready to write to Tableau, avoiding these timeouts.

(If you're having this issue in the meantime, you can have your Tableau Server admin increase the timeout.)
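The change described above amounts to acquiring the credential lazily. Here is a minimal sketch in Python, assuming a hypothetical `sign_in` callable that returns a fresh token; `LazyToken` and the fake clock are illustration only and are not part of the macro or of any Tableau library. The 7200-second lifetime is the 2-hour guess from the post, not a confirmed default.

```python
import time


class LazyToken:
    """Fetch a credential only when it is first needed, and refresh it once stale."""

    def __init__(self, sign_in, max_age_seconds, clock=time.monotonic):
        self._sign_in = sign_in          # hypothetical callable returning a fresh token
        self._max_age = max_age_seconds  # assumed server-side session lifetime
        self._clock = clock
        self._token = None
        self._issued_at = None

    def get(self):
        now = self._clock()
        expired = self._issued_at is None or now - self._issued_at >= self._max_age
        if expired:
            self._token = self._sign_in()
            self._issued_at = now
        return self._token


# Demonstration with a fake sign-in and a fake clock (no server involved).
calls = []


def fake_sign_in():
    calls.append(1)
    return f"token-{len(calls)}"


fake_now = [0.0]
token = LazyToken(fake_sign_in, max_age_seconds=7200, clock=lambda: fake_now[0])

fake_now[0] = 3 * 3600   # three hours of upstream processing pass; no sign-in yet
first = token.get()      # the sign-in happens here, right before publishing
```

Because nothing signs in until `get()` is called, the three hours of upstream processing never count against the token's lifetime.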

When developing in Python using custom objects, you often use print(object) or str(object) to quickly see what's in the object.

For example:

myDictionary = {
    'CarType': 'Ford',
    'Cost': 20000}

This defines a dictionary. If I want to quickly look into it to see what's there, I can use:

print(myDictionary)
# gives {'CarType': 'Ford', 'Cost': 20000}

str(myDictionary)
# gives "{'CarType': 'Ford', 'Cost': 20000}"

This is incredibly useful for debugging and to understand how these custom objects / classes work.

Could you please implement the str() conversion (the __str__ method) for all the classes used in the Alteryx Python SDK (https://help.alteryx.com/20193/developer-help/sdks/build-custom-tools/python-engine-sdk/classes) to allow this kind of simple debugging and understanding?

For example:

str(record_info_in), where record_info_in is of type <class 'AlteryxPythonSDK.RecordInfo'>, gives you <AlteryxPythonSDK.RecordInfo object at 0x000001A2C48C3190>, which is not very helpful.

Much more useful would be to flatten this into a string format or dictionary so that users can see what's in the RecordInfo object that they're working with to make delivery and debugging easier.
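As a sketch of what this could look like, the classes below are hypothetical stand-ins written for illustration (`FieldSketch` and `RecordInfoSketch` are not SDK classes, and the field type names are only examples). They show how a `__str__` method backed by a dictionary view makes print() and str() useful:

```python
class FieldSketch:
    """Hypothetical stand-in for one field description (name, type, size)."""

    def __init__(self, name, field_type, size):
        self.name = name
        self.field_type = field_type
        self.size = size


class RecordInfoSketch:
    """Hypothetical stand-in for a record layout that knows how to describe itself."""

    def __init__(self, fields):
        self.fields = list(fields)

    def to_dict(self):
        # Flatten the layout into plain Python data that is easy to inspect.
        return {f.name: {"type": f.field_type, "size": f.size} for f in self.fields}

    def __str__(self):
        # str() and print() now show the contents instead of a memory address.
        return str(self.to_dict())


record_info = RecordInfoSketch(
    [FieldSketch("CarType", "V_WString", 50), FieldSketch("Cost", "Int32", 4)]
)
summary = str(record_info)
# summary is "{'CarType': {'type': 'V_WString', 'size': 50}, 'Cost': {'type': 'Int32', 'size': 4}}"
```

Exposing the dictionary through `to_dict()` as well as `__str__` means the same data can be logged, compared in tests, or serialized without parsing a string.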

- API

- SDK

My idea is to include the ability to run a workflow with AlteryxEngineCMD.exe as part of the standard Alteryx license.

Use case: being able to run Alteryx from the command line without needing to buy the entire Scheduler package (at $6,500/seat).

I understand why certain features are add-ons, but I feel the ability to run AlteryxEngineCMD.exe should be part of the standard license, which is already $5K+. For those who only need to run a workflow from the command line, $6.5K is a lot of money!

- API

- Feature Request

The Download tool is great and easy to use. However, if there is a connection problem with a request, the workflow errors out. Having an option to not error out, the ability to skip failed records, and a way to retry records that failed would be A LIFE CHANGER. Currently I have been using a Python tool to create multi-threaded requests, which has proven to be time-consuming.
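The requested behavior (retry, then skip and report what still fails) can be sketched in a few lines of Python. This is an illustration only: `fetch_all` and `flaky_fetch` are hypothetical names, and `fetch` stands in for whatever actually performs the request.

```python
import time


def fetch_all(urls, fetch, max_attempts=3, delay_seconds=0.0):
    """Call fetch(url) for each URL, retrying failures and skipping records that never succeed."""
    results, failed = {}, {}
    for url in urls:
        for attempt in range(1, max_attempts + 1):
            try:
                results[url] = fetch(url)
                break
            except OSError as error:  # connection errors and timeouts are OSError subclasses
                if attempt == max_attempts:
                    failed[url] = str(error)  # give up on this record, keep going
                else:
                    time.sleep(delay_seconds)
    return results, failed


# Demonstration with a fake fetch function (no network involved).
attempts = {}


def flaky_fetch(url):
    attempts[url] = attempts.get(url, 0) + 1
    if url == "bad":
        raise ConnectionError("unreachable")
    if url == "flaky" and attempts[url] < 2:
        raise TimeoutError("timed out")
    return f"payload for {url}"


results, failed = fetch_all(["ok", "flaky", "bad"], flaky_fetch)
# "ok" and "flaky" end up in results; "bad" is reported in failed after three attempts
```

Returning the failures alongside the successes is the key design choice: the run completes, and the failed records can be routed to a separate output for review or a later retry.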

Recently we had a situation where installing the data packages was expected to take over 20 hours! We do not have the ability to run a machine undisturbed for this length of time at the office, and VPN automatically times out after 12 hours. Okay, so these items are company-specific, but how nice would it be to be able to download/install the data in smaller portions so you don't have to worry about setting up shop for 8-10 hours?!

I was able to copy the DataInstall.ini and name only the portions of the data I wanted to install in each session. But then I had to separate the installs into different network folders otherwise you end up overwriting the DataInstall.exe file, which users need for each install in order to register from a network location (for each install).

Needless to say, it took SEVERAL weekends and quite a few mistakes before I was able to do it this way successfully!

Please vote for data installs in smaller portions!

- API

In the current version of Alteryx, we are only able to pull the first 2000 records from an existing Salesforce report. It often becomes difficult to automate my monthly/weekly requests because they depend on an existing Salesforce report, so I need to recreate the report in Alteryx using the Salesforce objects and fields.

If we had the ability to bring the entire Salesforce report into the Alteryx environment, it would save a lot of time and effort for analysts like me, because we wouldn't have to reinvent the wheel.

- API

Hi,

The Adobe Analytics API token is currently set to expire 30 days after the call has been configured. When the token expires, I have to re-authenticate AND reconfigure the API call.

The API call shouldn't expire when the token expires. Upon re-authenticating, the call should persist as it was originally configured.

Thanks,

Mihail

- API

Good afternoon,

I work with a large group of individuals, close to 30,000, and a lot of our files are run as .dif/.kat files, which are used for import into certain applications and software that pertain to our work. We were wondering if this has been brought up before and what the possibility might be.

- API
