Alteryx Designer Desktop Ideas

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

Hello all,

Apache Doris ( https://doris.apache.org/ ) is a modern data warehouse with a lot of ambition. It's probably the next big thing.

You can read the full doc here https://doris.apache.org/docs/get-starting/what-is-apache-doris but to sum it up, it aims to be THE reference solution for OLAP, claiming even better performance than ClickHouse, DuckDB or MonetDB. Even benchmarks from the ClickHouse team seem to agree.

Best regards,

Simon

-

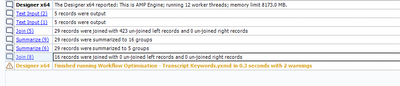

AMP Engine

-

Category In Database

-

Category Input Output

-

New Request

As mentioned in detail here, I think that adding a "run as metadata" feature could make analytic apps more dynamic. It would also enable dynamic configuration of the tools in analytic apps that are chained together in a single workflow using Control Containers, mostly eliminating the need to chain multiple YXWZ files together just to use the previous analytic app's output. (This of course doesn't cover the cases where the previous app in the chain has to build a complex WF/App and then switch to it, but Runner helps solve this issue to a certain extent, provided you don't have to pass any parameters/values to the generated WF/App.)

The addition of this feature would be somewhat similar to running an app with its outputs disabled, without having to run the entire app itself, but rather only certain parts specified by the user in a limited manner. Clicking the Refresh Metadata button (which will be active only if there is at least one metadata tool in the workflow) will update the data seen in the app interface (such as Drop Down lists, List Boxes etc.), provided the user selected the up-to-date input file(s) (or the data in a database is up-to-date) where the data will be obtained from.

To explain in detail with a use case, suppose you have two flows added to separate Control Containers where the second CC uses the field info of a file used in the first CC to enable the user to select a field from a drop down list to apply a formula (such as parsing a text using RegEx) for example. After specifying the necessary branch in the first CC where the field info is obtained, the user could select these tools and then Right Click => Convert to Metadata Tool to select which tools will run when the user clicks on the Refresh Metadata button. The metadata tools could of course be specified across the entirety of the workflow (multiple Control Containers) to update the metadata for all Control Containers and therefore all tabs of a "concatenated app", where multiple apps are contained in a single workflow.

With this feature, every tool configured as a metadata tool (excluding tools that have no configuration) could be set up either as a "metadata only" tool or as a "hybrid" tool. A hybrid tool could be configured separately for both of its behaviours: all of its settings could be changed without restriction in each mode, and MetaInfo would update dynamically while refreshing metadata. The metadata-only configuration of a tool could be left the same as the workflow-only configuration if desired.

For example an Input File marked as a hybrid tool could be configured to read all records for its workflow tool mode and only 1 record for its metadata tool mode. This could be made possible with the addition of a new tab named Metadata Tool Configuration in addition to the already existing Configuration tab, and a MDToolConfig XML tree could be added to reflect these configurations to the XML of the tool in question, separate from the Configuration XML tree, and either one of those XML trees or both of them would be present depending on the nature of the tool chosen by the user (workflow tool, metadata tool or hybrid).

This would also mean that all the metadata tool configurations of a tool could optionally be updated using Interface tools. You could for example either update the input file to be read for both the workflow tool mode and metadata mode of an input tool at once, or specify separate input files using different interface tools. As another example, the amount of records to be read by a Sample tool could be specified by a Numeric Up/Down tool but the metadata tool configuration could be left as First 1 rows, without being able to change it from the App Interface.

Hybrid tool (note how Configuration and MDToolConfig have different RecordLimit settings):

    <Node ToolID="1">
      <GuiSettings Plugin="AlteryxBasePluginsGui.DbFileInput.DbFileInput">
        <Position x="102" y="258" />
      </GuiSettings>
      <Properties>
        <Configuration>
          <Passwords />
          <File OutputFileName="" RecordLimit="" SearchSubDirs="False" FileFormat="25">C:\Users\PC\Desktop\SampleFile.xlsx|||`Sheet1$`</File>
          <FormatSpecificOptions>
            <FirstRowData>False</FirstRowData>
            <ImportLine>1</ImportLine>
          </FormatSpecificOptions>
        </Configuration>
        <MDToolConfig>
          <Passwords />
          <File OutputFileName="" RecordLimit="1" SearchSubDirs="False" FileFormat="25">C:\Users\PC\Desktop\SampleFile.xlsx|||`Sheet1$`</File>
          <FormatSpecificOptions>
            <FirstRowData>False</FirstRowData>
            <ImportLine>1</ImportLine>
          </FormatSpecificOptions>
        </MDToolConfig>
        <Annotation DisplayMode="0">
          <Name />
          <DefaultAnnotationText>SampleFile.xlsx
    Query=`Sheet1$`</DefaultAnnotationText>
          <Left value="False" />
        </Annotation>
        <Dependencies>
          <Implicit />
        </Dependencies>
        <MetaInfo connection="Output">
          <RecordInfo />
        </MetaInfo>
      </Properties>
      <EngineSettings EngineDll="AlteryxBasePluginsEngine.dll" EngineDllEntryPoint="AlteryxDbFileInput" />
    </Node>

Please also note that this idea differs from another idea I posted (link above) named Dynamic Tool Configuration Change While the Workflow is Running in that the configuration is updated while the WF/App is actually running and for example the Text to Columns tool in the second CC is dynamically changed using the output of a tool in the first CC, unlike selecting an input file and clicking Refresh Metadata from the App Interface before the workflow is run.

Attached is a screenshot and an analytic app to better demonstrate the idea.

Thanks for reading.

- AMP Engine
- Category Apps
- New Request

Currently, using AMP Engine will cause any workflow that depends on Proxies to fail. This includes any API workflow or any workflow with Download tool, etc.

They will all fail with DNS Lookup failures.

Many newer features in Alteryx Designer are now dependent on using AMP Engine, making those features (such as Control Containers) totally useless when running inside a corporate network that uses proxies to the outside world.

Please re-examine the difference between how a regular non-AMP workflow processes such traffic vs how AMP does it, because AMP is broken!

- AMP Engine
- Engine

Hello all,

As of today, we can easily copy or duplicate a table with the In-Database tools. This is really useful when you want data from a production environment in a development environment.

But can we really?

Short answer: no, not when the table has:

- partitions
- any constraints, such as primary or foreign keys

And even if these ideas were implemented, that would still mean setting these parameters manually.

So my proposal is simply a "clone table" tool that would clone the table from its SHOW CREATE TABLE statement and just let you specify the destination path (base.table).

Best regards,

Simon

- AMP Engine
- Category In Database
- Data Connectors
- Engine

Hello all,

Like many software products on the market, Alteryx uses third-party components developed by other teams/providers/entities. This is a good thing, since it means standard features at a very low cost. However, these components are upgraded very regularly (usually several times a year) while Alteryx doesn't upgrade its bundled versions. This leads to missing features, performance issues, bugs left uncorrected or, worse, security flaws.

Among these third-party components:

- cURL (behind the Download tool for APIs): Alteryx ships 7.15 (2006) while the current release is 8.0 (2023)
- Active Query Builder (behind the Visual Query Builder): several years behind
- R: Alteryx ships 4.1.3 (March 2022) while the next is 4.3 (April 2023)
- Python: Alteryx ships 3.8.5 (2020) while the current is 3.10 (April 2023)
- etc.

Of course, you can't upgrade every time, but once a year seems like a minimum.

Best regards,

Simon

- AMP Engine
- Engine

Hello all,

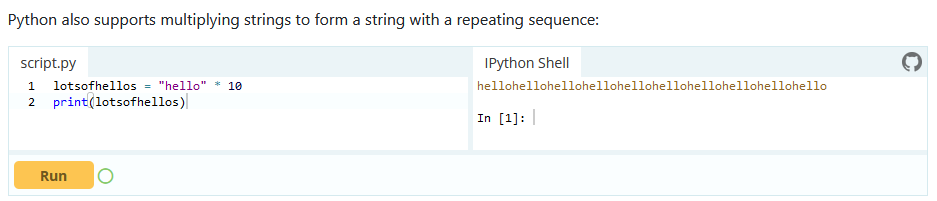

I'm currently learning the Python language and there is this cool feature: you can multiply a string.

Pretty cool, no? I would like the same syntax to work in Alteryx.
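For anyone who has not seen it, this is the Python behaviour being referred to. It is only a small illustration, and the variable names are made up for the example:

```python
# In Python, multiplying a string by an integer repeats the string.
separator = "-" * 10
greeting = "ab" * 3

print(separator)  # ----------
print(greeting)   # ababab
```

The idea is that an equivalent operator in the formula language would avoid nesting a padding or replace function for the same result.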

Best regards,

Simon

- AMP Engine
- Category Preparation
- Desktop Experience
- Engine

Hello,

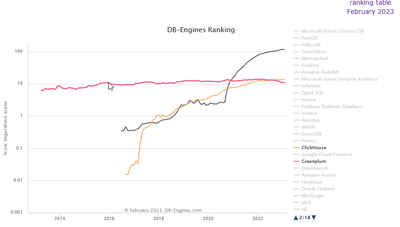

Just like MonetDB or Vertica, ClickHouse is a column-store database, claiming to be the fastest in the world. It's available in the cloud (like Snowflake), on Linux and on macOS (and there for free, since it's open source). It's also very well ranked among analytics databases https://db-engines.com/en/system/ClickHouse and supporting it would be a good differentiator against competitors.

https://clickhouse.com/

It has become more popular than Greenplum, which is supported (black: Snowflake, red: Greenplum, orange: ClickHouse).

Best regards,

Simon

- AMP Engine
- Category In Database
- Data Connectors
- Engine

Hello all,

MonetDB is a very light, fast, open-source database available here :

https://www.monetdb.org/

Really enjoy it, works pretty well with Tableau and it's a good introduction to column-store concepts and analytics with SQL.

It has also gained a lot of popularity in recent years:

https://db-engines.com/en/ranking_trend/system/MonetDB

Sadly, Alteryx does not support it yet.

Best regards

- AMP Engine
- Category In Database
- Data Connectors
- Engine

The Summarize tool returns NULL when performing a Mode operation. This doesn't seem to be documented anywhere in Alteryx documentation nor the community. Please fix this behaviour.

- AMP Engine
- Category Transform
- Desktop Experience
- Engine

Hello all,

Change Data Capture ( https://en.wikipedia.org/wiki/Change_data_capture ) is an effective way to deal with changes in a database, allowing streaming or delta processing. Several techniques, more or less intrusive, can be applied (and combined). Example: reading the database logs.

Qlik : https://www.qlik.com/us/streaming-data/data-streaming-cdc

Talend : https://www.talend.com/resources/change-data-capture/

Best regards,

Simon

- AMP Engine
- Engine

Hey YXDB Bosses,

Let's move forward with our YXDB. Maybe give AMP a real edge over e1. Here are some things that could make YXDB super-powered:

- Metadata
  - Workflow information about what created that poorly named output file.
  - When was the file originally created/updated.
  - SORT order. If there is a sort order for the data, what is it?
- Other stuff
  - INDEX. Currently you get spatial indexes (or you can opt out). If I want to search through a 100+MM record file, it is a sequential read of all of the data. With an index I could grab data without the expense of a Calgary file creation. Don't go crazy on the indexing option, just allow users to set 1+ fields as index (takes more time to write).
  - I'm sure that you've been asked before, but CREATE DIRECTORY if the output directory doesn't exist.
- Old School - Crazy Idea
  - Generation Data Groups (GDG)

    This will likely make @NicoleJ 's eyes roll 🙄 but back in the day, we could write our data to the SAME filename and the old data became 1 version older. You could read the (0) version of the file or read from 1, 2, 3 or more previous versions of the data using the same name (e.g. .\Customers|||3). The write of the output file would do all of the backing up of the data (easy to use) and when the initially defined limit is reached, the oldest data drops off.
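To make the GDG idea concrete, here is a rough sketch in Python of how generation rotation could behave. It is not how YXDB or Alteryx works today: the function names, the `.1`, `.2`, ... suffix scheme and the `keep` limit are all assumptions made up for the illustration, and the "data" is just placeholder bytes rather than a real YXDB file.

```python
from pathlib import Path
import tempfile


def write_generation(path: Path, data: bytes, keep: int = 3) -> None:
    """Write data to `path`, shifting older copies to path.1, path.2, ... up to `keep`."""
    # The oldest generation falls off once the limit is reached.
    oldest = path.with_name(f"{path.name}.{keep}")
    if oldest.exists():
        oldest.unlink()
    # Shift the remaining generations one step older, oldest first.
    for n in range(keep - 1, 0, -1):
        src = path.with_name(f"{path.name}.{n}")
        if src.exists():
            src.rename(path.with_name(f"{path.name}.{n + 1}"))
    # The current file becomes generation 1, then the new data is written.
    if path.exists():
        path.rename(path.with_name(f"{path.name}.1"))
    path.write_bytes(data)


def read_generation(path: Path, generation: int = 0) -> bytes:
    """Read generation 0 (current) or an older generation by number."""
    target = path if generation == 0 else path.with_name(f"{path.name}.{generation}")
    return target.read_bytes()


with tempfile.TemporaryDirectory() as tmp:
    customers = Path(tmp) / "Customers.yxdb"  # placeholder name, not a real YXDB
    for version in (b"v1", b"v2", b"v3", b"v4", b"v5"):
        write_generation(customers, version, keep=3)
    print(read_generation(customers, 0))  # b'v5'
    print(read_generation(customers, 3))  # b'v2'
```

After five writes with a limit of three, the current file plus three older generations remain and the very first version has dropped off, which is the behaviour described above.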

Just a little more craziness from me

cheers

- AMP Engine
- Engine

Hi UX interested parties,

Here are some ideas for you to consider:

1. These lines are BORING and UNINFORMATIVE. I'd like to understand more (pic = 1,000 words) when looking at a workflow.

   - A line could communicate:
     - Qty of Records
     - Size of Data
     - Is the data SORTED
       - What sort order
     - Quality of Data

If you look at lines A, B, C in the picture above. Nothing is communicated. Weight of line, color of line, type of line, beginning line marker/ending line marker, these are all potential ways that we could see a picture of the data without having to get into browse everywhere to see the information. If we hover over the data connection, even more information could appear (e.g. # of records, size of file) without having to toggle the configuration parameters.

2. Wouldn't it be nice to not have to RUN a workflow to know last SAVED metadata (run) of a workflow? I'd like to open a "saved" workflow and know what to expect when I run the workflow. Heck, how long does it take the beast to run is something that we've never seen unless we run it.

3. I'd like to set the metadata to display SORT keys, order. Sort1 Asc, Sort 2 Desc .... This sort information is very helpful for the engine and I'll likely post about that thought. As a preview, when a JOIN tool has sorted data and one of the anchors is at EOF, then why do we need to keep reading from the other anchor? There won't be another matched record (J) anchor. In my example above, we don't ask for the L/R outputs, so why worry about the rest of the join?

4. Have you ever seen a map (online) that didn't display watermark information? I think that the canvas experience should allow for a default logo (like mine above, but transparent) in the lower right corner of the canvas that is visible at all times. Having the workflow name at the top in a tab is nice, but having it display as a watermark is handy.

5. Once the workflow has RUN, all anchors are the same color. How about providing GREY/White or something else on EMPTY anchors instead of the same color? This might help newbies find issues in JOIN configuration too.

6. If the tool has ERRORs you put a RED exclamation mark. I despise warnings, but how about a puke colored question mark? With conversion errors, the lines could be marked to let you know the relative quantity of conversion errors (system messages have a limit)
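The early-exit idea in item 3 can be sketched in Python. This is only an illustration of a merge-style inner join over inputs already sorted by key, not a description of how the Alteryx engine joins data. It assumes unique keys on each side, and `sorted_inner_join` and `tracked` are names invented for the example.

```python
from typing import Iterable, Iterator, List, Tuple

Row = Tuple[int, str]


def sorted_inner_join(left: Iterable[Row], right: Iterable[Row]) -> Iterator[Tuple[int, str, str]]:
    """Inner join of two inputs sorted ascending by key (unique keys assumed).

    The loop ends as soon as either input is exhausted, because no further
    match is possible and only the J output is wanted.
    """
    left_it, right_it = iter(left), iter(right)
    lrow = next(left_it, None)
    rrow = next(right_it, None)
    while lrow is not None and rrow is not None:
        if lrow[0] == rrow[0]:
            yield (lrow[0], lrow[1], rrow[1])
            lrow = next(left_it, None)
            rrow = next(right_it, None)
        elif lrow[0] < rrow[0]:
            lrow = next(left_it, None)
        else:
            rrow = next(right_it, None)


reads: List[int] = []


def tracked(rows: Iterable[Row]) -> Iterator[Row]:
    """Record which keys are actually pulled from the input."""
    for row in rows:
        reads.append(row[0])
        yield row


left = [(1, "a"), (3, "c")]
right = [(1, "x"), (2, "y"), (3, "z"), (4, "p"), (5, "q")]
joined = list(sorted_inner_join(left, tracked(right)))
print(joined)  # [(1, 'a', 'x'), (3, 'c', 'z')]
print(reads)   # [1, 2, 3, 4]
```

The right input is never read past key 4 once the left input hits EOF. If the L/R outputs were requested, the remaining rows would of course still have to be read.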

Just a few top of mind things to consider ....

Cheers,

Mark

- AMP Engine
- Engine

Hello,

More and more databases have complex data types such as array, struct or map. It would be nice if we could use them in Alteryx as input, internally and as output, with calculations available on them.

https://cwiki.apache.org/confluence/display/hive/languagemanual+types#LanguageManualTypes-ComplexTyp...

Best regards,

Simon

- AMP Engine
- Engine

Hello all,

EDIT: silly me, this is an Excel limitation on output, not an Alteryx limitation :( Can you please delete this idea?

I had to convert some strings into dates and I got this error message (both with the Select tool and the DateTime tool):

ConvError: Output Data (10): Invalid date value encountered - earliest date supported is 12/31/1899 error in field: DateMatch record number: 37399

This earliest date is far too recent. Just think of birth dates or geological/archaeological data!

Also, other products such as Tableau support earlier dates!

Hope to see that changed soon.

Best regards,

Simon

- AMP Engine
- Engine

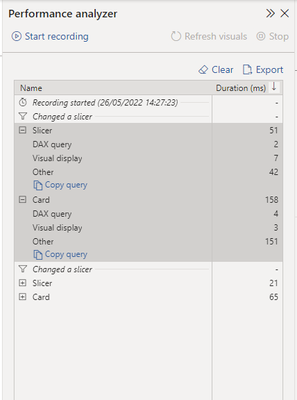

Alteryx is already very quick, but it would be useful to know the computational cost of different approaches to building a workflow that uses a lot of data. This would make it easier to know whether your optimization of the workflow is working as expected, and also which tools in particular are doing the work best. Other software such as Power BI has a performance analysis section which breaks down how each action impacted performance.

It would be great to get a similar breakdown of how long each tool is taking to run in the results window.

- AMP Engine
- Engine

I learnt Alteryx for the first time nearly 5 years ago, and I guess I've been spoilt with implicit sorts after tools like joins, where if I want to find the top 10 after joining two datasets, I know that data coming out of the join will be sorted. However with how AMP works this implicit sort cannot be relied upon. The solution to this at the moment is to turn on compatibility mode, however...

1) It's a hidden option in the runtime settings, and it can't be turned on by default as it's set only at the workflow level

2) I imagine that compatibility mode runs a bit slower, but I don't need implicit sort after every join, cross-tab etc.

So could the affected tools (Engine Compatibility Mode | Alteryx Help) have a tick box within the tool to allow the user to decide at the tool level instead of the canvas level what behaviour they want, and maybe change the name from compatibility mode to "sort my data"?

- AMP Engine
- Engine

I am working with a client to modernize the way data is provided to core systems, in this case Prophet.

The data volume is huge, the logic is complex, and some files are in legacy formats, so the AMP engine is not able to deliver a satisfactory result.

It would be appreciated if the team could enhance the AMP engine to better handle flat files, or other tools, so that the workflow can be truly automated. This would definitely help justify the investment in Server to clients with similar needs.

- AMP Engine
- Engine

In order to run a canvas using either AMP or E1 - the user has to perform at least 5 operations which are not obvious to the user.

a) click on whitespace for the canvas to get to the workflow configuration. If this configuration pane is not docked - then you have to first enable this

b) set focus in this window

c) change to the runtime tab

d) scroll down past all the confusing and technical things that most end users are nervous to touch like "Memory limits" and temporary file location and code page settings - to click on the last option for the AMP engine.

e) and then hit the run button

A better way!

Could we instead simplify this and just put a drop-down on the run button so that you can run with the old engine, or run with the new engine? Or even better, have 2 run buttons - run with old engine, and run with super-fast cool new engine?

- This puts the choice where the user is looking at the time they are looking to run (If I want to run a canvas - I'm thinking about the run button, not a setting at the bottom of the third tab of a workflow configuration)

- It also makes it super easy for users to run with E1 and AMP without having to do 10 clicks to compare - this way they can very easily see the benefit of AMP

- It makes it less scary since you are not wading through configuration changes like Memory or Codepages

- and finally - it exposes the new engine to people who may not even know it exists 'cause it's buried on the bottom of the third tab of a workflow configuration panel, under a bunch of scary-sounding config options.

cc: @TonyaS

- AMP Engine
- Engine

As we begin to adopt the AMP engine - one of the key questions in every user's mind will be "How do I know I'm going to get the same outcome?"

One of the easiest ways to build confidence in AMP - and also to get some examples back to Alteryx where there are differences is to allow users to run both in parallel and compare the differences - and then have an easy process that allows users to submit issues to the team.

For example:

- Instead of the only options being run in AMP or run in E1 - can we have a 3rd option called "Run in comparison mode"?

- This runs the process in both AMP and E1, checks for differences, and points them out to the user in a differences report that comes up after the run.

- Where there's a difference that seems like a bug (not just a sorting difference but something more material) - the user then has a button that they can use to "Submit to Alteryx for further investigation". This will make it much simpler for Alteryx to identify any new issues; and much simpler for users to report these issues (meaning that more people will be likely to do it since it's easier).

The benefit of this is that not only will it make users more comfortable with AMP (since they will see that in most cases there are no differences); it will also give them training on the differences in AMP vs. E1 to make the transition easier; and finally where there are real differences - this will make the process of getting this critical info to Alteryx much easier and more streamlined since the "Submit to Alteryx" process can capture all the info that Alteryx needs, like your machine, version number etc., and do this automatically without taxing the user.
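As a rough illustration of what the differences report could check, here is a small Python sketch that compares two outputs as multisets of rows, so that a pure sorting difference is reported separately from a material one. Nothing here is an existing Alteryx feature: `compare_outputs` and the sample rows are invented for the example.

```python
from collections import Counter
from typing import Dict, List, Tuple

Row = Tuple[int, str]


def compare_outputs(e1_rows: List[Row], amp_rows: List[Row]) -> Dict[str, object]:
    """Compare two result sets, ignoring row order when looking for real differences."""
    e1_counts, amp_counts = Counter(e1_rows), Counter(amp_rows)
    only_in_e1 = sorted((e1_counts - amp_counts).elements())
    only_in_amp = sorted((amp_counts - e1_counts).elements())
    same_content = not only_in_e1 and not only_in_amp
    return {
        "only_in_e1": only_in_e1,
        "only_in_amp": only_in_amp,
        # Same rows in a different order: a sorting difference, not a bug.
        "order_differs": same_content and e1_rows != amp_rows,
    }


e1 = [(1, "a"), (2, "b"), (3, "c")]
amp_reordered = [(3, "c"), (1, "a"), (2, "b")]
amp_changed = [(1, "a"), (2, "B"), (3, "c")]

print(compare_outputs(e1, amp_reordered))
# {'only_in_e1': [], 'only_in_amp': [], 'order_differs': True}
print(compare_outputs(e1, amp_changed))
# {'only_in_e1': [(2, 'b')], 'only_in_amp': [(2, 'B')], 'order_differs': False}
```

Only the second kind of result would justify offering the "Submit to Alteryx for further investigation" button.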

- AMP Engine
- Engine

In cases where there are dynamic tools - you often get a situation where there are zero rows returned - which means that the output of something like a transpose or a JSON parse or a regex may not have the field names expected.

However - any downstream filter tools (or other similar tools) fail even though there are no rows (see screenshot below).

The only way to get around this is to insert fake rows using a union or use the CReW macro for Ensure Fields. However, this is all wasted effort: since there are no rows, there's no point in even evaluating the predicate in the filter tool. Rather than making users work around this - can we please change the engine so that a tool can avoid evaluation if there are zero rows? This would significantly reduce the number of workarounds of this kind that need to be used with any dynamic tools (including any API calls).
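The requested behaviour can be sketched in a few lines of Python. This is a hypothetical stand-in for a filter step, not the Alteryx engine: `filter_rows`, its parameters and the field names are assumptions made up for the illustration.

```python
from typing import Callable, Dict, List, Set


def filter_rows(
    rows: List[Dict[str, object]],
    field: str,
    predicate: Callable[[object], bool],
    available_fields: Set[str],
) -> List[Dict[str, object]]:
    """Filter rows on one field, skipping all validation when there is nothing to filter."""
    if not rows:
        # Zero records: the predicate would never be evaluated, so a missing
        # field is harmless and no error needs to be raised.
        return []
    if field not in available_fields:
        raise KeyError(f"Unknown field: {field}")
    return [row for row in rows if predicate(row[field])]


# An upstream dynamic tool returned no rows and therefore no "status" field.
print(filter_rows([], "status", lambda v: v == "ok", available_fields=set()))  # []

# With data present, the field check still applies as usual.
rows = [{"status": "ok"}, {"status": "failed"}]
print(filter_rows(rows, "status", lambda v: v == "ok", available_fields={"status"}))
# [{'status': 'ok'}]
```

With rows present and the field missing, the sketch still raises an error, so genuine configuration mistakes would not be hidden.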

thank you

Sean

- AMP Engine
- Engine
