Alteryx Designer Desktop Discussions

Advanced Join Question

I ran into a situation while translating some code from SAS/SQL to Alteryx: the original left joined tables on three conditions. The first was a simple join, TableA.x1 = TableB.y1; the second required that TableA.x2 be between, say, TableB.min2 and TableB.max2; and the third required TableA.x3 >= TableB.x3. (Naturally you could imagine other scenarios even more nefarious, but this will suffice for now.)
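For readers who think in SQL, the join being translated can be sketched roughly as follows. This is only an illustration using Python's built-in sqlite3 module; the sample rows and the `payload` column are made up for the example and are not taken from the original SAS/SQL code or the attached workflow.

```python
import sqlite3

# In-memory database with tiny, made-up versions of TableA and TableB.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE TableA (x1 INTEGER, x2 INTEGER, x3 INTEGER);
    CREATE TABLE TableB (y1 INTEGER, min2 INTEGER, max2 INTEGER,
                         x3 INTEGER, payload TEXT);  -- payload is hypothetical
    INSERT INTO TableA VALUES (1, 5, 10), (1, 50, 10), (2, 5, 1), (3, 5, 10);
    INSERT INTO TableB VALUES (1, 0, 9, 7, 'b1'), (2, 0, 9, 4, 'b2');
""")

# Left join on all three conditions: every TableA row is kept,
# and TableB columns are NULL (None) where no row satisfies them.
rows = conn.execute("""
    SELECT a.x1, a.x2, a.x3, b.payload
    FROM TableA AS a
    LEFT JOIN TableB AS b
      ON  a.x1 = b.y1
      AND a.x2 BETWEEN b.min2 AND b.max2
      AND a.x3 >= b.x3
    ORDER BY a.x1, a.x2
""").fetchall()

for row in rows:
    print(row)
```

Only the first TableA row finds a partner here; the other three come back with `None` in the TableB column, which is the behavior the Alteryx version needs to reproduce.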

The Advanced Join macro could almost solve this - I needed to join back to the original TableA in order to ensure the outer join still included everything from TableA. However, my TableA in real life has over 100 columns, and my "join back" would need to join on all of them, which is more pointing and clicking than is reasonable.

I also tried using the R tool (my favorite), and SQLDF, in order to do it, and that works; however in addition to having over 100 columns, I also had over a million rows, so it not only took a while to complete (a few minutes - not that bad really), but also gave me an error that left me a little nervous, even if perhaps it's nothing to worry about: same error as seen here. So it worked, but also not ideal.

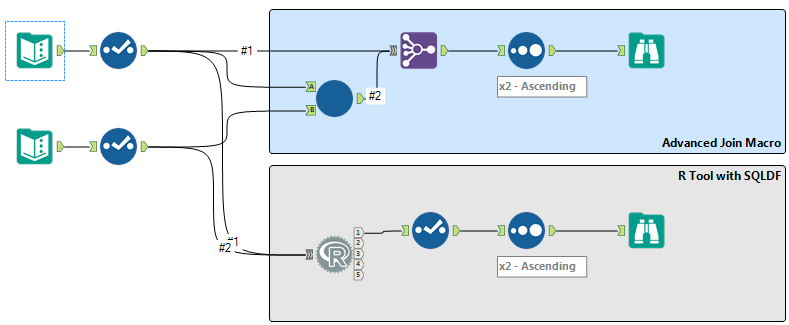

I simplified and generalized all this in the attached workflow, which looks like:

This doesn't have the sheer number of columns or rows, but illustrates the complexity of the join conditions.

So the question is, is there an easier way to do left joins of arbitrary complexity... that also scales well to both many columns and many rows?

I looked at your module and found that NULL values might be inflating your results. You've got 3 records with NULLs for min2 and max2 and y3 that might not qualify according to your rule set.

My K.I.S.S. approach to this would be to JOIN on the EQUALITY of TableA.x1 == TableB.y1 and then filter for the "Between" condition and the "Greater" condition being TRUE.
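A minimal sketch of that join-then-filter idea in plain Python, assuming made-up sample rows and calling TableB's third column `y3`. It is not the Alteryx workflow itself, just the same logic written out.

```python
from collections import defaultdict

# Made-up sample rows, not the data from the attached workflow.
table_a = [
    {"x1": 1, "x2": 5, "x3": 10},
    {"x1": 1, "x2": 50, "x3": 10},
    {"x1": 2, "x2": 5, "x3": 1},
    {"x1": 3, "x2": 5, "x3": 10},
]
table_b = [
    {"y1": 1, "min2": 0, "max2": 9, "y3": 7},
    {"y1": 2, "min2": 0, "max2": 9, "y3": 4},
]


def join_then_filter(a_rows, b_rows):
    """Equality join on x1 == y1, then filter on the two inequality rules."""
    b_by_key = defaultdict(list)
    for b in b_rows:
        b_by_key[b["y1"]].append(b)

    # Step 1: the plain equality join.
    joined = [{**a, **b} for a in a_rows for b in b_by_key.get(a["x1"], [])]

    # Step 2: keep only rows where the "between" and "greater" rules hold.
    return [
        r for r in joined
        if r["min2"] <= r["x2"] <= r["max2"] and r["x3"] >= r["y3"]
    ]


result = join_then_filter(table_a, table_b)
print(result)
```

With this sample data only one row survives, so on its own the approach behaves like an inner join: TableA rows with no qualifying partner are dropped.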

Just a thought,

Mark

@MarqueeCrew (nice current icon by the way),

I believe they want to retain all rows from the first table, so the NULLs are expected; I probably should have added another column in TableB that was "some data we want to have if it's there based on the join conditions."

Although... that does rephrase the problem: essentially, these are just lookup tables with complex lookup conditions... perhaps I can rewrite the whole approach based on that. Thanks for your response!

This feels similar to the VLookup question that comes up in the community all the time, which I think @JohnJPS and I had a go at using the R tool.

Looking inside the Advanced Join macro, it does a Cartesian join, so I can't see it being performant on a massive dataset.

This is one I have been meaning to try with the .NET API, but even there it will be difficult because large datasets need to be cached in memory to do the join. Will hopefully get back to trying it this weekend.

Looking at the specific case, could you generate rows for all the x2 values, then do a standard join on x1 and x2, and then use a filter for the x3 clause?
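To make the generate-rows idea concrete, here is a rough plain-Python sketch. It assumes x2 is an integer and each min2..max2 range is small enough to expand; the sample rows and the `y3` column name are made up.

```python
from collections import defaultdict

# Made-up sample rows, not the data from the attached workflow.
table_a = [
    {"x1": 1, "x2": 5, "x3": 10},
    {"x1": 1, "x2": 50, "x3": 10},
    {"x1": 2, "x2": 5, "x3": 1},
    {"x1": 3, "x2": 5, "x3": 10},
]
table_b = [
    {"y1": 1, "min2": 0, "max2": 9, "y3": 7},
    {"y1": 2, "min2": 0, "max2": 9, "y3": 4},
]


def expand_ranges(b_rows):
    """Emit one copy of each TableB row per integer x2 in [min2, max2]."""
    for b in b_rows:
        for x2 in range(b["min2"], b["max2"] + 1):
            yield {**b, "x2": x2}


def range_join(a_rows, b_rows):
    # Index the expanded rows so the "between" rule becomes an equality lookup.
    index = defaultdict(list)
    for b in expand_ranges(b_rows):
        index[(b["y1"], b["x2"])].append(b)

    matches = []
    for a in a_rows:
        for b in index.get((a["x1"], a["x2"]), []):
            if a["x3"] >= b["y3"]:  # the remaining condition stays a filter
                matches.append({**a, **b})
    return matches


result = range_join(table_a, table_b)
print(result)
```

The expansion turns the range condition into an ordinary two-key join, at the cost of multiplying TableB by the width of each range, so it only suits a small domain of x2 values.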

Again, just some quick thoughts. It feels worth generating a representative-scale dataset so we can all see who can make the best solution :)

This data set (downloaded from Kaggle, from their Homesite Quote Conversion competition) isn't quite big enough to break R, but it did break the Advanced Join macro, or I did something stupid - entirely possible. I first converted it to a .yxdb, and am using that in the attached workflow. Even though it doesn't break R, it should be large enough to at least compare alternative solutions. My "TableB" is still just dummy cooked-up data.

I think we just nailed it! Your version lacks the "left outer join" aspect in that it doesn't retain all rows of the original file. However - and I'm not sure how I missed this previously - all we need to do is generate a record ID on the file (unique on every row), and join back on that key after our complex conditions are met. This has been done in the attached module.
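The record ID trick can be sketched in plain Python as below. This is an illustration of the idea rather than the attached module: the sample rows, the `payload` column, and the `y3` name are made up, and the nested loop in `complex_matches` is just a stand-in for whatever fast matching step is used.

```python
from collections import defaultdict

# Made-up sample rows, not the data from the attached module.
table_a = [
    {"x1": 1, "x2": 5, "x3": 10},
    {"x1": 1, "x2": 50, "x3": 10},
    {"x1": 2, "x2": 5, "x3": 1},
    {"x1": 3, "x2": 5, "x3": 10},
]
table_b = [
    {"y1": 1, "min2": 0, "max2": 9, "y3": 7, "payload": "b1"},
    {"y1": 2, "min2": 0, "max2": 9, "y3": 4, "payload": "b2"},
]
B_COLUMNS = ("y1", "min2", "max2", "y3", "payload")


def complex_matches(a_rows, b_rows):
    """Yield (RecordID, b) for every pair meeting all three conditions."""
    for a in a_rows:
        for b in b_rows:
            if (a["x1"] == b["y1"]
                    and b["min2"] <= a["x2"] <= b["max2"]
                    and a["x3"] >= b["y3"]):
                yield a["RecordID"], b


def left_join_via_record_id(a_rows, b_rows):
    # 1. Tag every TableA row with a unique record ID.
    with_ids = [{"RecordID": i, **a} for i, a in enumerate(a_rows, start=1)]

    # 2. Find the matches, carrying only the ID from the TableA side.
    matched = defaultdict(list)
    for record_id, b in complex_matches(with_ids, b_rows):
        matched[record_id].append(b)

    # 3. Join back on RecordID alone; unmatched rows get empty TableB columns.
    empty_b = dict.fromkeys(B_COLUMNS)
    return [
        {**a, **b}
        for a in with_ids
        for b in matched.get(a["RecordID"], [empty_b])
    ]


result = left_join_via_record_id(table_a, table_b)
for row in result:
    print(row)
```

Because the join back uses a single key, it does not matter how many columns TableA has.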

Pretty exciting: this runs really fast and fully accomplishes a left outer join using arbitrarily complex conditions.

(Aside: the "auto-field" tool will shrink the .yxdb considerably and get everything running lightning quick, too.)

One slight tuning to get that little bit more...

Use a union to get back to the full record set; then there is no need for a record ID.

With the auto-fielded .yxdb, this shaves about 10% off (3s vs. 3.3s on mine).

I still want to build a decent advanced join tool... this trick won't work on a large domain of values.

Using union required also a distinct tool, to pare down the rows returned by the union (unless I missed a setting); in the end for me the RecordID approach was slightly faster (3.7s worst case vs. 4.2s best case with the union after 5 runs of each; on a ho-hum laptop with no SSD).

I don't think you would get dupes, but you will be losing the rows in the filter, so yep, it won't quite work.
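A small plain-Python sketch of why the union falls short, assuming a simple join on x1 = y1 followed by a filter for the other two conditions. The sample rows and the `y3` name are made up, and `equi_join` only mimics the L and J outputs of a Join tool.

```python
from collections import defaultdict

# Made-up sample rows, not the data from the attached workflow.
table_a = [
    {"x1": 1, "x2": 5, "x3": 10},
    {"x1": 1, "x2": 50, "x3": 10},
    {"x1": 2, "x2": 5, "x3": 1},
    {"x1": 3, "x2": 5, "x3": 10},
]
table_b = [
    {"y1": 1, "min2": 0, "max2": 9, "y3": 7},
    {"y1": 2, "min2": 0, "max2": 9, "y3": 4},
]


def equi_join(a_rows, b_rows):
    """Return (left_unjoined, joined) for a join on x1 == y1."""
    b_by_key = defaultdict(list)
    for b in b_rows:
        b_by_key[b["y1"]].append(b)

    left_unjoined, joined = [], []
    for a in a_rows:
        partners = b_by_key.get(a["x1"])
        if partners:
            joined.extend({**a, **b} for b in partners)
        else:
            left_unjoined.append(a)
    return left_unjoined, joined


left_unjoined, joined = equi_join(table_a, table_b)

# Filter the joined rows on the remaining conditions.
kept = [
    r for r in joined
    if r["min2"] <= r["x2"] <= r["max2"] and r["x3"] >= r["y3"]
]

# Union of the unjoined left rows and the filtered matches.
union = left_unjoined + kept

# TableA rows that joined on x1 but failed the filter appear in neither input.
surviving = {(r["x1"], r["x2"], r["x3"]) for r in union}
lost = [a for a in table_a if (a["x1"], a["x2"], a["x3"]) not in surviving]
print(lost)
```

Two of the four TableA rows go missing here: they found a partner on x1, so they never reach the unjoined output, and then the filter removes them from the joined output.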
