Alteryx Designer Desktop Knowledge Base

Definitive answers from Designer Desktop experts.- Community

- :

- Community

- :

- Support

- :

- Knowledge

- :

- Designer Desktop

- :

- How To: Connect to Redshift

How To: Connect to Redshift

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Notify Moderator

02-28-2020 07:53 AM - edited 06-29-2021 08:59 AM

How To: Connect to Redshift

Alteryx supports connecting to Amazon Redshift, a columnar database. Amazon Redshift Spectrum is a supported feature that allows running queries against unstructured data stored in Amazon S3.

Please note that your DBA can be an invaluable resources in configuring the database specific settings.

Prerequisites

- Product - Alteryx Designer

- 3rd party product - Access to Redshift database

- Version1.3.7.1000 or newer

- 3rd party product - Access to Amazon S3 to be able to use Amazon Redshift Bulk Connection.

- Redshift ODCB driver.

Configure an ODBC Connection

To configure an ODBC connection to a Redshift database:

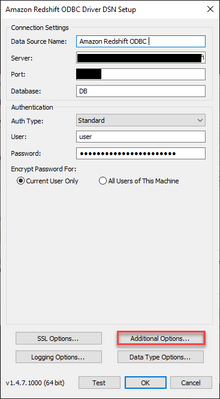

- Navigate to ODBC Data Source Administrator

- Select Redshift driver and click Configure

- Enter you connection settings and credentials

- Navigate to your Redshift Data Source and click "configure",

- Select Additional Options

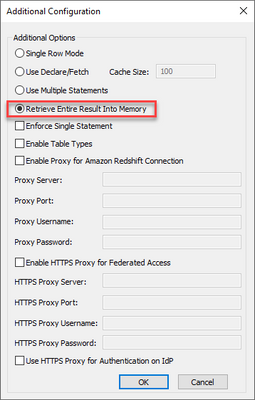

- Check the option "Retrieve Entire Result Into Memory". Please note that this setting will load the entire result set into physical memory while reading the data. If physical memory is low compared to the size of data loaded from Redshift into Designer please work with your DBA for the recommended setting. Alternatives could be "Single Row Mode" or "Use Declare/Fetch" (with specified Cache Size). Please refer to Simba Redshift ODBC driver documentation for details.

- Confirm with OK.

Configure an Amazon Redshift Bulk Connection

Write-only Bulk Load support is available for standard and in-DB workflows for Redshift. For Redshift Spectrum standard workflows writing is only supported through Bulk Load.

Please note that an S3 account is required for the below steps.

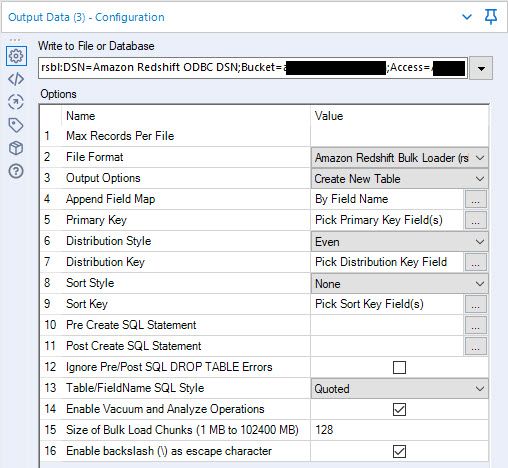

- In Designer draw an Output Data tool on the Canvas,

- Then,

- 2019.1 and earlier or when using Classic Mode for Input Data / Output Data tool. This can be set under Alteryx - Options - User Settings - Edit User Settings - Defaults - "Use classic mode for the Input / Output tool menu options":

- Click the Write to File or Database drop down menu and select Other Databases > Amazon Redshift Bulk

- 2019.2 and later:

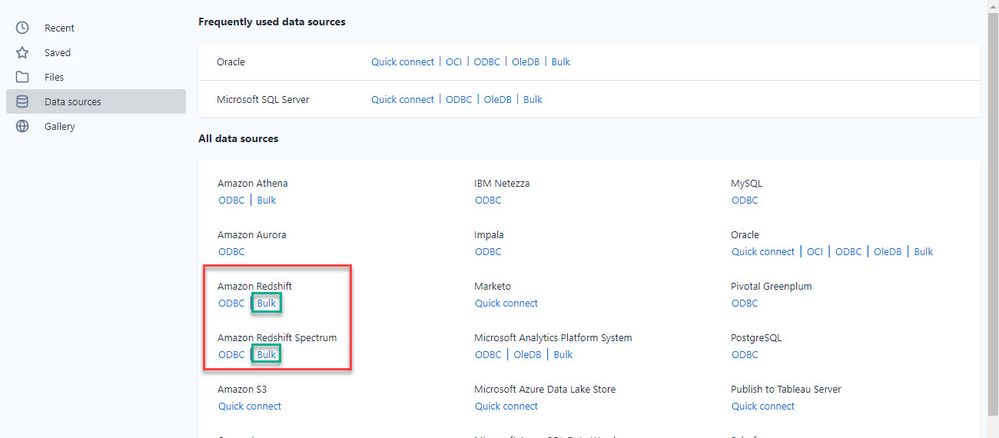

- Alteryx displays the Data connections window. To create new connection click Data Sources and select Amazon Redshift - Bulk or Amazon Redshift Spectrum - Bulk

-

- 2019.1 and earlier or when using Classic Mode for Input Data / Output Data tool. This can be set under Alteryx - Options - User Settings - Edit User Settings - Defaults - "Use classic mode for the Input / Output tool menu options":

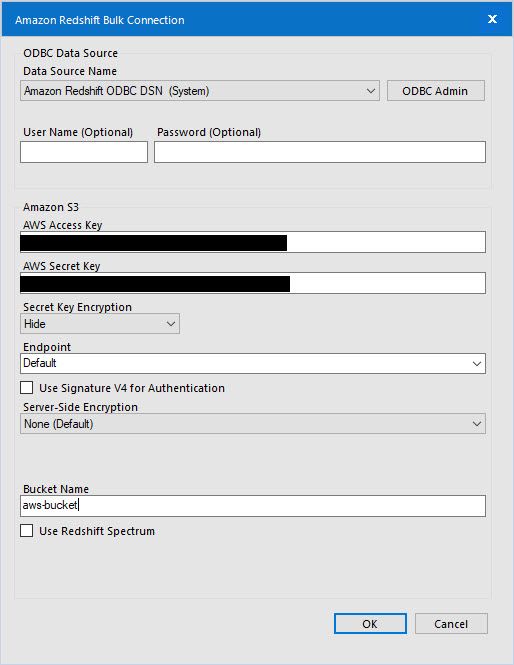

- Select a Data Source Name or create a new one in ODBC Data Source Admin

- Optionally enter User name and password

- Fill in AWS credentials to access data for upload

- In the Secret Key Encryption drop down, select an encryption option:

- Hide: Hide the password using minimal encryption.

- Encrypt for Machine: Any user on the computer will be able to fully use the connection.

- Encrypt for User: The logged in user can use the connection on any computer.

- The endpoint should be set to Default so that Amazon will automatically determine the endpoint to be used. The endpoint should be set manually if a specific endpoint is needed for either private S3 deployments or to select a specific region.

- Optionally, select Use Signature V4 for Authentication to use Signature Version 4 instead of the default Signature Version 2. This will increase security, but connection speeds may be slower. This option is automatically enabled for regions requiring Signature Version 4.

- Select a Server-Side Encryption method for uploading to an encrypted Amazon S3 bucket. For more information on Amazon S3 encryption methods, see the Amazon Simple Storage Service Developer Guide.

- None (Default): No encryption method is used.

- SSE-KMS: Use server-side encryption with AWS KMS-managed keys. Optionally provide a KMS Key ID. When you select this method ,Use Signature V4 for Authentication is enabled by default.

- In Bucket Name, type the name of the AWS bucket in which your data objects are stored.

- Optionally select Use Redshift Spectrum to connect to Redshift Spectrum tables

Please note that the Output Options are described in greater detail in the Amazon Redshift Database Developer Guide.

Common Issues

The following error message occurs:

The bucket you are attempting to access must be addressed using the specified endpoint. Please send all future requests to this endpoint.

The above error message typically occurs if the bucket selected is not in the region the specified endpoint is in.

Select the Default endpoint solve this issue.

Amazon Redshift: Check 'stl_load_errors' system table for error details

When writing to Amazon Redshift, the following error appears:

Data Stream In (x): The COPY failed with error: [Amazon][Amazon Redshift] (30) Error occurred while trying to execute a query: [SQLState XX000] ERROR: Load into table 'ayx1XXX' failed. Check 'stl_load_errors' system table for details.

Please refer to the following KB article for further information -Amazon Redshift: Check 'stl_load_errors' system table for details.

The following error message occurs:

Error SQLExecute: [Amazon][Amazon Redshift] (30) Error occurred while trying to execute a query: [SQLState HY000] out of memory for query result

The above error message typically occurs if the result set read into the client machine is too large for the physical memory. Typical workarounds include methods to reduce physical memory usage on the client machine. Thus options include, but are not limited to refactoring the workflow to use in-DB tools as most in-DB tools work in the memory of the database; use a batch macro to load the data in smaller batches or usage of single row mode or declare / fetch mode, see also Simba Redshift ODBC driver. The DBA will be a helpful resource for the specific driver settings to be used.

As always if you encounter difficulties with the above feel free to reach out to Alteryx Customer Support.

Additional Resources

- Amazon Redshift help documentation

- Alteryx help documentation covering Amazon Redshift

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Notify Moderator

My organization uses Alteryx to read data from AWS. Recently when users open a workflow from our Gallery with an AWS connection the UID and PWD no longer have the credentials and the user has to re enter them before saving the workflow. The workflows run fine on our Gallery but have blank credentials when opened in Designer. Has anyone else experienced this issue?

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Notify Moderator

@MattBenj that is by design (helps protect creds). You may have better luck if you download the file from the gallery instead.

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Notify Moderator

This picture should show the SIMBA Amazon Redshift driver - not the native Amazon Redshift driver - I believe the native driver truncates characters.

-

2018.3

17 -

2018.4

13 -

2019.1

18 -

2019.2

7 -

2019.3

9 -

2019.4

13 -

2020.1

22 -

2020.2

30 -

2020.3

29 -

2020.4

35 -

2021.2

52 -

2021.3

25 -

2021.4

38 -

2022.1

33 -

Alteryx Designer

9 -

Alteryx Gallery

1 -

Alteryx Server

3 -

API

29 -

Apps

40 -

AWS

11 -

Computer Vision

6 -

Configuration

108 -

Connector

136 -

Connectors

1 -

Data Investigation

14 -

Database Connection

196 -

Date Time

30 -

Designer

204 -

Desktop Automation

22 -

Developer

72 -

Documentation

27 -

Dynamic Processing

31 -

Dynamics CRM

5 -

Error

267 -

Excel

52 -

Expression

40 -

FIPS Designer

1 -

FIPS Licensing

1 -

FIPS Supportability

1 -

FTP

4 -

Fuzzy Match

6 -

Gallery Data Connections

5 -

Google

20 -

In-DB

71 -

Input

185 -

Installation

55 -

Interface

25 -

Join

25 -

Licensing

22 -

Logs

4 -

Machine Learning

4 -

Macros

93 -

Oracle

38 -

Output

110 -

Parse

23 -

Power BI

16 -

Predictive

63 -

Preparation

59 -

Prescriptive

6 -

Python

68 -

R

39 -

RegEx

14 -

Reporting

53 -

Run Command

24 -

Salesforce

25 -

Setup & Installation

1 -

Sharepoint

17 -

Spatial

53 -

SQL

48 -

Tableau

25 -

Text Mining

2 -

Tips + Tricks

94 -

Transformation

15 -

Troubleshooting

3 -

Visualytics

1

- « Previous

- Next »