Alteryx Designer Desktop Knowledge Base


Getting Data to Big Query


07-20-2016 11:09 AM - edited 08-03-2021 04:11 PM

NOTE: This article was written by Paul Houghton and originally published here. All credit goes to Paul Houghton and his group at the Information Lab. I thought this was such a great solution that I wanted to make sure it was available on the Alteryx Community as well.

The Problem

I have recently been working with a client who wanted to get their data into a Google Big Query table with Alteryx. It sounded like a simple problem and should have been pretty easy for me to get working.

Unfortunately that was not to be the case. Connecting to a Big Query table with the Simba ODBC driver is easy, but that driver is read-only. Dead end there.

So off to the Big Query API documentation.

I found that uploading a single record was relatively easy but slow; there had to be an easier way to upload thousands of records.

Deeper digging I went. Using the web interface, I could upload a CSV to Google Cloud Storage and then load that file into Big Query. The challenge was to automate the process.
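Automating that manual route starts with getting the records into a CSV file. As a minimal sketch in Python (not the Alteryx workflow itself), the serialization could look like this; the column names are hypothetical, and the header row is written first on the assumption that the later load step will skip it:

```python
import csv
import io


def records_to_csv_bytes(records, fieldnames):
    """Serialize a list of dict records to UTF-8 CSV bytes, header row first."""
    buf = io.StringIO()
    # lineterminator="\n" keeps the output identical on every platform
    writer = csv.DictWriter(buf, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue().encode("utf-8")


if __name__ == "__main__":
    # Hypothetical records, for illustration only.
    rows = [{"id": 1, "name": "alpha"}, {"id": 2, "name": "beta"}]
    print(records_to_csv_bytes(rows, ["id", "name"]).decode("utf-8"))
```

The resulting bytes are what would be uploaded to Cloud Storage in the next step.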

Step 1 - Throw it into the (Google) Cloud

So I was quite lucky that my colleagues Johanthan MacDonald and Craig Bloodworth had already made a working cloud uploader using the cURL program. All I had to do was doctor the URL to work for me. Ideally, though, I wanted to make the entire process native to Alteryx.
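The original cURL uploader isn't reproduced in the article. As a hedged sketch, the URL it targets could be built like this, assuming the Cloud Storage JSON API's single-request "media" upload endpoint; the bucket and object names are hypothetical:

```python
from urllib.parse import quote, urlencode

# Single-request ("media") upload endpoint of the Cloud Storage JSON API.
GCS_UPLOAD_BASE = "https://storage.googleapis.com/upload/storage/v1/b"


def gcs_upload_url(bucket: str, object_name: str) -> str:
    """Build the URL for uploading one file to a Cloud Storage bucket."""
    # The bucket goes in the path; the object name is URL-encoded in the query.
    query = urlencode({"uploadType": "media", "name": object_name})
    return f"{GCS_UPLOAD_BASE}/{quote(bucket, safe='')}/o?{query}"


if __name__ == "__main__":
    # Hypothetical bucket and object names, for illustration only.
    print(gcs_upload_url("my-bucket", "exports/data.csv"))
```

With cURL, this URL would be the target of a POST whose body is the file and which carries an `Authorization: Bearer` header.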

… The next phase of the automation is importing the CSV into Big Query.

Step 2 - Big Query your Data

So the second step was to get the data into Big Query. I found this a bit of a humdinger, and it really stretched my API-fu. So what did I have to do?

What query do I run?

I struggled with this for a while. Then, at the Tableau User Group held at the Google Town Hall in London, I got in touch with Reza Rokni, who pointed me to the example builder at the bottom of the Jobs List page, where you can build an example of the request to send.

The biggest problem with this is getting the JSON file with the table schema right. I got it eventually, but there were a fair few challenges.
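For reference, a load job is created by POSTing a job description to the BigQuery `jobs.insert` endpoint. The sketch below assumes a CSV with a header row, a table that already exists (hence `WRITE_APPEND`), and hypothetical project, dataset, table, and column names. The `schema.fields` list is the part that corresponds to the JSON table schema file:

```python
import json


def jobs_insert_url(project_id: str) -> str:
    """REST endpoint for BigQuery jobs.insert (API v2)."""
    return f"https://bigquery.googleapis.com/bigquery/v2/projects/{project_id}/jobs"


def load_job_body(project_id: str, dataset_id: str, table_id: str,
                  source_uri: str, fields: list) -> dict:
    """Describe a load job that imports a CSV from Cloud Storage into a table."""
    return {
        "configuration": {
            "load": {
                "sourceUris": [source_uri],      # e.g. gs://bucket/object.csv
                "sourceFormat": "CSV",
                "skipLeadingRows": 1,            # ignore the CSV header row
                "destinationTable": {
                    "projectId": project_id,
                    "datasetId": dataset_id,
                    "tableId": table_id,
                },
                "schema": {"fields": fields},    # the table schema JSON
                "writeDisposition": "WRITE_APPEND",  # add rows to the existing table
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical names and columns, for illustration only.
    fields = [
        {"name": "id", "type": "INTEGER", "mode": "REQUIRED"},
        {"name": "name", "type": "STRING", "mode": "NULLABLE"},
    ]
    body = load_job_body("my-project", "my_dataset", "my_table",
                         "gs://my-bucket/exports/data.csv", fields)
    print(jobs_insert_url("my-project"))
    print(json.dumps(body, indent=2))
```

Load jobs run asynchronously, so in practice you would also check the job's status (for example with `jobs.get`) before treating the import as complete.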

Step 3 - Get it to the Cloud with Alteryx

Once I had the whole process working, I wanted to go back to the problem of uploading a file with Alteryx. I was sure it must be possible to upload a file with the Download tool; I just couldn't work out how to get the file into a row to upload.

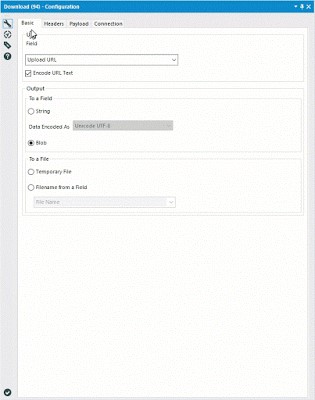

[Image caption: What settings do I use?]

Enter the Blob Family

What made the upload work was finding out what the Blob tools do and how they work. So what is a blob? It's a Binary Large Object: basically the raw contents of a file, without a file name or extension attached.

[Image: Blob Family]

So now, using the Blob Input tool, I can read a file into a single field, which is exactly what I needed. From here it's relatively simple to pass that single cell to the normal Download tool for the upload.
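Outside Alteryx, the equivalent of what the Blob Input tool does here is simply reading a file's raw bytes without parsing them. A minimal Python sketch; the `FileName` and `Blob` field names are an assumption, chosen only to resemble a one-record Blob Input output, not taken from the tool's documentation:

```python
import os
import tempfile
from pathlib import Path


def read_blob_row(path: str) -> dict:
    """Read an entire file into a single bytes field: one row, one blob."""
    data = Path(path).read_bytes()  # raw contents, no decoding or parsing
    # Hypothetical field names, loosely modelled on a Blob Input record.
    return {"FileName": str(path), "Blob": data}


if __name__ == "__main__":
    # Write a small temporary CSV, read it back as a blob, then clean up.
    with tempfile.NamedTemporaryFile("wb", suffix=".csv", delete=False) as f:
        f.write(b"id,name\n1,alpha\n")
    row = read_blob_row(f.name)
    print(len(row["Blob"]))
    os.unlink(f.name)
```

Because the whole file sits in one field, a single request can carry it as its body.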

The Final Furlong

Now to pull this all together the last step is to put in the details needed to do the upload. So what are the settings?

On the 'Basic' tab we simply set the target URL (check the API documentation to work out what it should be) and what to do with the response from the server.

On the 'Headers' tab we need to define the 'Authorization' and 'Content-Type' headers that Google's API requires.

The last set of configuration is on the 'Payload' tab, where we set the HTTP action to POST and choose the column that contains the blob.
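To make the three tabs concrete, here is roughly the same request expressed with Python's standard library, built but not sent. The URL, token, and content type are placeholders: obtaining an OAuth 2.0 access token is outside the scope of the article and is assumed to happen elsewhere, and `text/csv` is assumed for a CSV upload:

```python
import urllib.request


def build_upload_request(url: str, token: str, blob: bytes,
                         content_type: str = "text/csv") -> urllib.request.Request:
    """Build (but do not send) a POST request mirroring the Download tool setup."""
    return urllib.request.Request(
        url,                                  # 'Basic' tab: target URL
        data=blob,                            # 'Payload' tab: the blob column as the body
        method="POST",                        # 'Payload' tab: HTTP action
        headers={
            "Authorization": f"Bearer {token}",   # 'Headers' tab
            "Content-Type": content_type,         # 'Headers' tab
        },
    )


if __name__ == "__main__":
    # Placeholder values, for illustration only.
    req = build_upload_request("https://example.com/upload", "ACCESS_TOKEN", b"id,name\n1,alpha\n")
    print(req.get_method(), req.full_url)
```

Calling `urllib.request.urlopen(req)` would actually send it; the server's reply is what the 'Basic' tab's response setting decides how to handle.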

Success!

And after all that, success! By combining these two processes, the records are uploaded as a CSV and then imported into a predefined Big Query table.

Paul has uploaded this to his Alteryx Gallery page (v10.5) and would love feedback from anyone who uses it in its current configuration, both on how well it works and on how easy it is to configure. He would also welcome ideas on how to improve the tool. He already has some: automating creation of the table schema file, developing separate uploaders for Big Query and Google Cloud, seeing whether changing the upload query makes it more robust, possibly building in compression, and whatever else proves useful. Please post your comments here.


Interesting. Such a comprehensive article. Thanks for providing all the information.
