Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> by at least allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images)

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))

In the new Intelligence Suite tools, it would be extremely useful to have the option to add n-grams (combinations of adjacent words/tokens) in the Topic Modeling Text Mining Tool.

This is important in many NLP topic modeling scenarios.

It would provide more flexibility to build better NLP models.

For details on n-grams, see:

https://en.wikipedia.org/wiki/N-gram
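To make the request concrete, here is a minimal sketch of what combining adjacent tokens into n-grams means. It is plain Python, not anything from the Topic Modeling tool; the function name `ngrams` and the sample sentence are illustrative assumptions.

```python
from typing import List, Tuple


def ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """Return every contiguous window of n tokens (hypothetical helper)."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # A list shorter than n yields no windows, so the result is empty.
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


# Illustrative input: whitespace tokenization of a made-up sentence.
tokens = "customer service was very slow".split()
bigrams = ngrams(tokens, 2)
```

With bigrams, a phrase such as "customer service" can be treated as one unit instead of two unrelated words, which is the flexibility the idea asks for.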

When using the text mining tools, I have found that the behaviour of using a template only applies to documents with the same page number.

So in my use case I've got a PDF file with 100+ claim statements which are all laid out the same (one page per statement). When setting up the template I used one page to set the annotations, and then input this into the T anchor of the Image to Text tool. Into the D anchor of this tool is my PDF document with 100+ pages. However when examining the output I only get results for page 1.

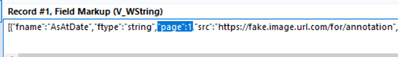

On examining the JSON for the template I can see that there is reference to the template page number:

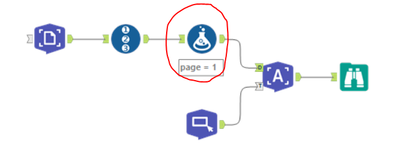

And playing around with a generate rows tool and formula to replace the page number with pages 1 - 100 in the JSON doesn't work. I then discovered that if I change the page number on the image input side then I get the desired results.

As I suspect this is a common use case for the Image to Text tool, a useful improvement would be an option in its configuration to apply the same template to all pages.

I have a PDF of 27 pages and each page is identical. The headers, footers and data are static in positioning on each page. It would be great if I could define the text to parse out on the first page, then that could be used to parse out all of the pages in the PDF. It would make the tool far more useful.

It would be great to have the below functionality in Alteryx.

A workflow is built in Alteryx, and a button click in Alteryx generates SQL code that can be run on a specific database platform, such as SQL Server, from external editors such as SQL Server Management Studio. Thanks.

Alteryx Server was recently updated to allow TLS-mediated connections to the MongoDB persistence layer. This allowed us to switch off of the embedded MongoDB to a highly-available MongoDB Atlas cluster. To our surprise after the switch, when we went to edit our workflows that make use of the persistence layer's data (Server Usage Report, etc.) to hit the new Atlas cluster, we found that the MongoDB Input tool does not support TLS connections. This absolutely needs to be changed. Based on organizational constraints, Atlas is our only option for a HA persistence layer. We absolutely have to have TLS support for the MongoDB Input tool. There is no other way for us to natively query our server persistence layer in Designer. Please bring the MongoDB Input tool into alignment with the MongoDB connections that are supported by Alteryx Server.

I surprisingly couldn't find this anywhere else as I know it's been discussed in person on many occasions.

Basically the Formula tool needs to be smarter in many ways, but this particular post focuses on the Data Type component.

The Formula tool should not always default to V_String as the data type when data or a formula is entered; it should look at the data and estimate the most likely type.

I know there are times where the logical type might not be consistent in all fields, but the Data Preview and the Function of the formula should be used to determine the most likely option.

E.g., if I type a number or a date directly into the Formula tool, then Alteryx should be smart enough to change the data type from the standard V_String to Int, Double or Date.
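A rough sketch of the kind of inference being asked for, written in Python rather than anything Alteryx ships. The function name `guess_type`, the order of the checks, and the single `YYYY-MM-DD` date format are assumptions for illustration; the type labels are the ones used in this post.

```python
from datetime import datetime


def guess_type(literal: str) -> str:
    """Guess a data type label for a typed literal (hypothetical helper)."""
    text = literal.strip()
    try:
        int(text)
        return "Int"
    except ValueError:
        pass
    try:
        float(text)
        return "Double"
    except ValueError:
        pass
    try:
        # Assumption: only ISO-style dates are recognized in this sketch.
        datetime.strptime(text, "%Y-%m-%d")
        return "Date"
    except ValueError:
        # Fall back to the current default when nothing more specific fits.
        return "V_String"
```

A real implementation would also need to consider the Data Preview and the return type of the functions used, as described above.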

This is an extension to the ideas posted here:

Please upgrade the "curl.exe" that is packaged with Designer from 7.15 to 7.55 or greater to allow for the -k flag. Also, please allow the -k functionality in the Alteryx Download tool.

-k, --insecure

(TLS) By default, every SSL connection curl makes is verified to be secure. This option allows curl to proceed and operate even for server connections otherwise considered insecure.

The server connection is verified by making sure the server's certificate contains the right name and verifies successfully using the cert store.

Regards,

John Colgan

When using the output data tool, it would save me and my cluttered organizational skills a lot of effort if the writing workflow was saved as part of the yxdb metadata.

I've often had to search to find the workflow that created a yxdb. I tend to use naming conventions to help me, but it would be easier if the workflow file and/or path could be found easily.

cheers,

mark

I love this tool, but think it would be improved by including an option to create a column per delimiting character. This could be added in the number of columns selector box. In the case where 1 row has more delimiters than another, null columns can be created. Without this option you have to Regex count the delimiters, select the max and then embed the Text to columns tools in a macro and then pass the max columns as a param. Would be nice to resolve all this in the main tool.

Thanks, nick
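The requested behaviour (one column per delimited value, padded with nulls on shorter rows) can be sketched in a few lines of Python. This is only an illustration of the logic, not the Text to Columns tool itself; the function name `split_to_max_columns` is hypothetical.

```python
from typing import List, Optional


def split_to_max_columns(
    rows: List[str], delimiter: str = ","
) -> List[List[Optional[str]]]:
    """Split each row and pad shorter rows with None (hypothetical helper)."""
    parts = [row.split(delimiter) for row in rows]
    # The widest row decides the number of output columns.
    width = max((len(p) for p in parts), default=0)
    return [p + [None] * (width - len(p)) for p in parts]
```

This replaces the Regex count, max selection, and macro parameter described above with a single pass that finds the maximum width and pads the rest.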

Referencing the previous idea: Inputs/Output should have the option to read/write a compressed file (ZIP or GZIP)

This idea has been implemented for inputting .zip files. However, we still need to use the run command workaround for outputs. It's very common for many users to want to output their .csv, .xlsx, .pdf to a .zip. The functionality would also need to extend to Gallery.

See the following links for people that are looking for this type of functionality:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Output-files-to-ZIP/td-p/163502

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Zip-files/td-p/151456

Feel free to merge this idea with the previous one for continuity.
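For comparison, this is roughly what writing a .csv straight into a .zip looks like with Python's standard library. It is a sketch of the desired outcome, not of how the Output Data tool works; the function name `write_csv_to_zip` and its parameters are assumptions.

```python
import csv
import io
import zipfile
from typing import Iterable, Sequence


def write_csv_to_zip(
    zip_path: str,
    member_name: str,
    header: Sequence[str],
    rows: Iterable[Sequence[object]],
) -> None:
    """Write rows as a CSV member inside a new zip archive (hypothetical helper)."""
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer)
    writer.writerow(header)
    writer.writerows(rows)
    # ZIP_DEFLATED compresses the member; the default would only store it.
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(member_name, buffer.getvalue())
```

Calling `write_csv_to_zip("out.zip", "data.csv", ["id", "name"], [[1, "a"]])` would produce an archive with a single `data.csv` member, with no run command workaround involved.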

Only .csv is provided (and .json, etc.), but not .xlsx.

Let us know when this can be added to Alteryx

Thanks

I reported this to the support team but was told it was by design and to post here.

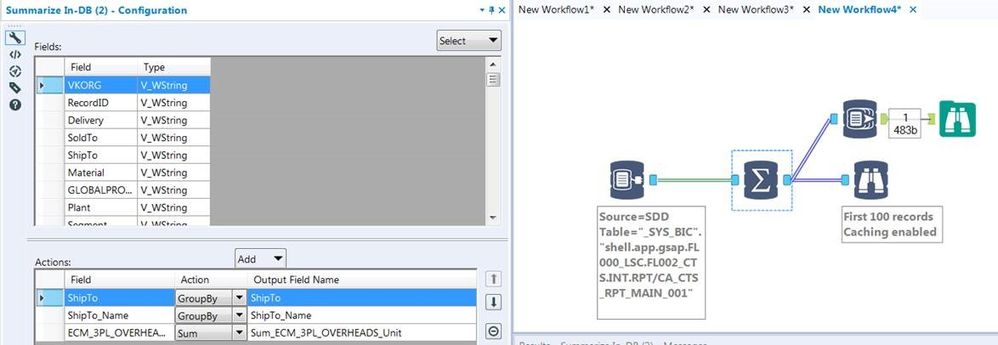

In-DB Inefficient SQL

I would like to report that the In-DB tools are generating horribly inefficient SQL code for simple operations. It seems no matter what tools you use every statement is starting with a nested 'Select * From'.

Example Simple workflow:

This is a simple Select and Group by but the SQL Generated is:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM (SELECT * FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001") AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

This is taking a very long time to execute:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 15.752 seconds (server processing time: 15.699 seconds)

Whereas if I take the same query and remove the nested Select *:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001" AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

It is very quick:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 1.211 seconds (server processing time: 1.157 seconds)

So Alteryx is generating queries up to 13x slower than they should be, thereby defeating the point of using In-DB. As you can imagine, in a workflow where we have multiple Connect In-DB tools this adds up to a really substantial amount of time. The example used above is from an SAP HANA DB and has 1.9m rows and ~90 columns, but we have much bigger tables/views than this.

If you look, you will see it's the same behaviour for all In-DB tools, where each tool creates another nested Select with its particular operator.

MY SUGGESTION:

So my suggestion is that Alteryx should combine the SQL of the first few tools and avoid using SELECT * completely unless no Select tools have been used. So it should combine:

- Connect In-DB + Select

- Connect In-DB + Filter

- Connect In-DB + Summarise

Preferably it should combine/flatten everything up until the first join or union. But Select + Filter are a must!

Note that some DBs seem to cope OK with un-nesting these big nested queries in their query plans for some tables, but normally not for views. Others cannot cope at all, so the In-DB tools cannot even be used to Browse 100 records (due to the Select *).
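The nested and flattened forms are logically equivalent, which is why combining them is safe. The sketch below checks that on a tiny in-memory SQLite table. SQLite is only a stand-in here, so it says nothing about SAP HANA timings; the table name `orders`, the column `Units`, and the added `ORDER BY` (used to make the comparison deterministic) are assumptions for illustration.

```python
import sqlite3

# Same shape as the generated SQL: aggregate over a nested SELECT *.
NESTED = """
SELECT "ShipTo", SUM("Units") AS "Sum_Units"
FROM (SELECT * FROM "orders") AS "a"
GROUP BY "ShipTo"
ORDER BY "ShipTo"
"""

# Flattened form: the aggregate reads the table directly.
FLAT = """
SELECT "ShipTo", SUM("Units") AS "Sum_Units"
FROM "orders" AS "a"
GROUP BY "ShipTo"
ORDER BY "ShipTo"
"""


def run_both():
    """Run both query shapes on sample data and return their results."""
    conn = sqlite3.connect(":memory:")
    conn.execute('CREATE TABLE "orders" ("ShipTo" TEXT, "Units" INTEGER)')
    conn.executemany(
        'INSERT INTO "orders" VALUES (?, ?)',
        [("A", 1), ("A", 2), ("B", 5)],
    )
    nested = conn.execute(NESTED).fetchall()
    flat = conn.execute(FLAT).fetchall()
    conn.close()
    return nested, flat
```

Both queries return the same rows, so the only difference is how much work the database's planner has to do to get there.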

Hi there,

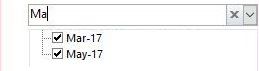

My idea comes from an application I've built, where the user selects a filter value from a drop-down list. However, the list contains thousands of records, so it takes a lot of time to find the desired record.

In Excel and MS Access, when you use a filter you can type several letters and the filter shows the rows that match the input. In Alteryx the user can only type the first letter, which is a huge drawback for my users.

This is how it works in Excel:

Hope you like it!
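The behaviour described above amounts to a case-insensitive "contains" match on whatever the user has typed so far. A minimal Python sketch of that logic follows; the function name `filter_options` and the sample values are hypothetical, and this is not how the Alteryx drop-down is implemented.

```python
from typing import List


def filter_options(options: List[str], typed: str) -> List[str]:
    """Keep options that contain the typed text, ignoring case (hypothetical helper)."""
    needle = typed.casefold()
    return [option for option in options if needle in option.casefold()]


# Illustrative data: typing "ri" narrows the list to matching entries.
matches = filter_options(["Paris", "Prague", "Madrid"], "ri")
```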

A common problem with the R tool is that it outputs "False Errors" like the following: "The R.exe exit code (4294967295) indicted an error"

I call this a false error because data passes out of the R script the same as if there were no error. As such, this error can generally be ignored. In my use case, however, my R tool is embedded within an iterative macro, and the error causes the iterator to stop running.

I was able to create a workaround by moving the R tool to a separate workflow and calling it from the CReW runner macro within my iterator, effectively suppressing the error message, but this solution is a bit clumsy, requires unnecessary read/writes, and uses nonstandard macros.

I propose the solution suggested by @mbarone (https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Boosted-Model-Error/td-p/5509) to only generate an error when the R return code is 1, indicating a true error, and to either ignore these false errors or pass them as warnings. This will allow R scripts and R-based tools to be embedded within iterative macros without breaking.
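As a side note, 4294967295 is what -1 looks like when a 32-bit exit code is read as unsigned. The sketch below shows that conversion and the proposed rule (return code 1 is an error, other non-zero codes become warnings). The function names and the "ok"/"warning"/"error" labels are assumptions for illustration, not Alteryx behaviour.

```python
def to_signed_32(code: int) -> int:
    """Reinterpret an unsigned 32-bit exit code as a signed value."""
    code &= 0xFFFFFFFF
    return code - 0x100000000 if code >= 0x80000000 else code


def classify_exit(code: int) -> str:
    """Apply the proposed rule: only return code 1 is a true error."""
    signed = to_signed_32(code)
    if signed == 0:
        return "ok"
    if signed == 1:
        return "error"
    # Everything else, including the -1 seen above, is downgraded.
    return "warning"
```

Under this rule, the exit code from the message above would be reported as a warning, so an iterative macro would keep running.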

Presently, when mapping an Excel file to an Input tool, the tool only recognizes sheets; it does not recognize named tables (ranges) as possible inputs. When using PowerBI to read Excel inputs I can select either sheets or named ranges as input. The Alteryx Input tool should do the same.

From Wikipedia :

In a database, a view is the result set of a stored query on the data, which the database users can query just as they would in a persistent database collection object. This pre-established query command is kept in the database dictionary. Unlike ordinary base tables in a relational database, a view does not form part of the physical schema: as a result set, it is a virtual table computed or collated dynamically from data in the database when access to that view is requested. Changes applied to the data in a relevant underlying table are reflected in the data shown in subsequent invocations of the view. In some NoSQL databases, views are the only way to query data.

Views can provide advantages over tables:

Views can represent a subset of the data contained in a table. Consequently, a view can limit the degree of exposure of the underlying tables to the outer world: a given user may have permission to query the view, while denied access to the rest of the base table.

Views can join and simplify multiple tables into a single virtual table.

Views can act as aggregated tables, where the database engine aggregates data (sum, average, etc.) and presents the calculated results as part of the data.

Views can hide the complexity of data. For example, a view could appear as Sales2000 or Sales2001, transparently partitioning the actual underlying table.

Views take very little space to store; the database contains only the definition of a view, not a copy of all the data that it presents.

Depending on the SQL engine used, views can provide extra security.

I would like to create a view instead of a table.

Statistics are used by a lot of DBs (Hive, Vertica, etc.) to improve the speed of queries. It may be interesting to have an option on the Write Data In-DB or Data Stream In tool to calculate the statistics. (something like a check box for )

Example on Hive: ANALYZE TABLE {table} COMPUTE STATISTICS; ANALYZE TABLE {table} COMPUTE STATISTICS FOR COLUMNS;

Option to select start and end time per day

e.g. between 8:00 AM and 5:00 PM every 2 hours
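A small sketch of how such a schedule would expand into run times, assuming both the start and end times are inclusive. The function name `run_times` and its parameters are hypothetical and are not part of the Alteryx scheduler.

```python
from datetime import date, datetime, time, timedelta
from typing import List


def run_times(start: time, end: time, every: timedelta) -> List[time]:
    """List run times from start to end (inclusive) at a fixed interval."""
    if every <= timedelta(0):
        raise ValueError("interval must be positive")
    # Any date works here; it only anchors the time arithmetic.
    anchor = date(2000, 1, 1)
    current = datetime.combine(anchor, start)
    last = datetime.combine(anchor, end)
    times = []
    while current <= last:
        times.append(current.time())
        current += every
    return times


# The example from the post: 8:00 AM to 5:00 PM, every 2 hours.
daily_runs = run_times(time(8, 0), time(17, 0), timedelta(hours=2))
```

For the example in the post this gives runs at 8:00, 10:00, 12:00, 14:00 and 16:00, since the next step (18:00) falls outside the window.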

It would be nice to be able to group workflows and their schedules, because the schedule view gets confusing if you have a lot of schedules/workflows.

This is especially true if you have more than one schedule for a workflow.

One way could be to create a folder system, or to manage it through the meta info, as with macros.
