Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by listing the input-dimension constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images);

> and, at the very least, by allowing the tool to accept black-and-white images (I wanted to test it on MNIST, but the tool says it requires RGB images).
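To illustrate the second request, here is a minimal Python sketch of a label-balanced split, assuming the training data is available as a list of `(image_path, label)` pairs. `balanced_split` is a hypothetical helper, not part of the Image Recognition tool: it downsamples every label to the size of the smallest one, then splits each label with the same train fraction so both partitions stay balanced.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=42):
    """Split (image_path, label) pairs so every label has the same count.

    Each label is downsampled to the size of the smallest label, then the
    same fraction of each label goes to training and the rest to validation.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    if not by_label:
        return [], []

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    train, validation = [], []
    for label in sorted(by_label):  # assumes labels are sortable (e.g. strings)
        chosen = rng.sample(by_label[label], per_label)  # no replacement
        train.extend((path, label) for path in chosen[:n_train])
        validation.extend((path, label) for path in chosen[n_train:])
    return train, validation
```

Downsampling discards some images from the larger labels; oversampling the smaller labels would be the alternative design if no data should be thrown away.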

Question: do you plan to let users choose between CPU and GPU processing in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely believe it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello all,

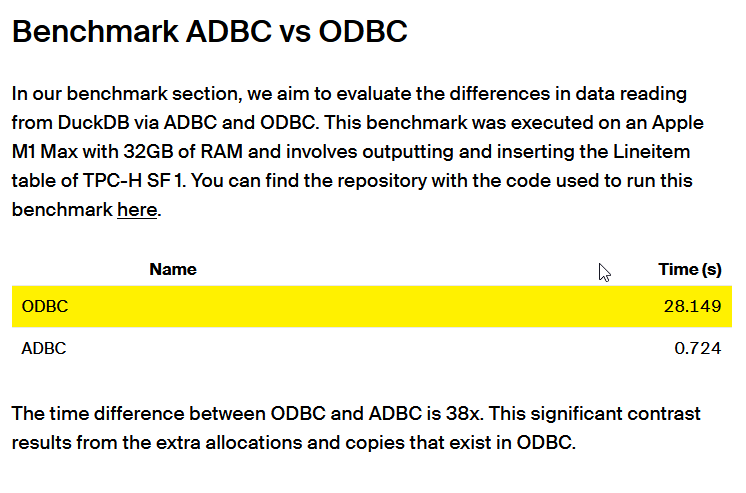

ADBC (Arrow Database Connectivity) is a database connection standard, like ODBC or JDBC, but designed specifically for columnar databases (DuckDB, ClickHouse, MonetDB, Vertica...). This is exactly the kind of thing that could make Alteryx much faster.

More information: https://arrow.apache.org/blog/2023/01/05/introducing-arrow-adbc/

Here is a benchmark by the DuckDB team showing a 38x improvement:

https://duckdb.org/2023/08/04/adbc.html

Best regards,

Simon

Labels: Category In Database, Category Input Output, New Request

Problem statement:

Currently we store our Alteryx data in .yxdb files, and whenever we want to fetch data, the whole dataset is first loaded into memory before we can apply a Filter tool to get the required subset. This is a complete waste of time and resources.

Solution:

My idea is to introduce a YXDB SQL statement tool that can be used directly in a workflow to fetch only the required data from a .yxdb file. I expect this would reduce the overall workflow runtime and memory consumption, and users would get the data they need in record time.

Labels: Category Input Output, Data Connectors

Hello all,

Apache Doris (https://doris.apache.org/) is a modern data warehouse with big ambitions; it may well be the next big thing.

You can read the full documentation at https://doris.apache.org/docs/get-starting/what-is-apache-doris, but to sum it up, it aims to be THE reference solution for OLAP, claiming even better performance than ClickHouse, DuckDB or MonetDB. Even benchmarks from the ClickHouse team seem to agree.

Best regards,

Simon

Labels: AMP Engine, Category In Database, Category Input Output, New Request

Hello all,

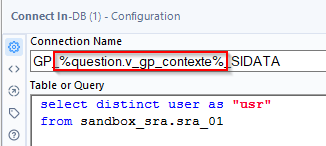

Today, we use good old in-memory aliases to connect to our data sources in-memory. We have several environments, so we use constants to change the name of the in-memory alias at run time.

To illustrate:

Depending on the environment, the constant « v_gp_contexte » takes different values:

- GP_DS08_SIDATA for dev.

- GP_EE_SIDATA for prod.

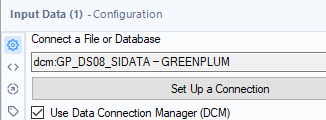

Sounds nice, right? But now we would like to use DCM, and the nightmare begins:

We can't manually change the name.

If we look at the workflow XML, we only find an ID, so editing it is useless.

(For information, DCM connections are stored in a SQLite database in C:\Users\{yourname}\AppData\Local\Alteryx.)

So I would like to be able to use DCM inside in-memory aliases (in-memory aliases are stored and can be edited), just as with in-database connection aliases.

Best regards,

Simon

Labels: Category Input Output, Enhancement, User Settings

For companies that have migrated to OneDrive/Teams for data storage, employees need to be able to dynamically input and output data within their workflows so they can schedule workflows on Alteryx Server without building batch macros.

With many organizations migrating to OneDrive, a Dynamic Input/Output tool for OneDrive and SharePoint is needed.

- The existing Directory and Dynamic Input tools only work with UNC paths and cannot be used with OneDrive or SharePoint.

- The existing OneDrive and SharePoint tools have no dynamic input or output component.

- Users have to build workarounds and custom macros for a common problem.

- Users have to map the OneDrive folders to their machine (and to the Server if the workflow is published to the Gallery).

- This option creates a lot of maintenance, especially on Server, to free up the space consumed by the local copies when outputting data.

The enhancement should have the following components:

OneDrive/SharePoint Directory Tool

- Ability to read a single folder within OneDrive, with the option to include or exclude subfolders

- Ability to retrieve Creation Date

- Ability to retrieve Last Modified Date

- Ability to identify file type (e.g. .xlsx)

- Ability to read the Author

- Ability to read Last Modified By

- Ability to generate the specific web path for the files

OneDrive/SharePoint Dynamic Input Tool

- Receive the input from the OneDrive/SharePoint Directory Tool and retrieve the data.

Dynamic OneDrive/SharePoint Output Tool

- Dynamically write the output from the workflow to individual files in a specific directory

- Dynamically write the output to multiple tabs on the same file within the directory.

- Dynamically write the output to a new folder within the directory

Labels: Category Connectors, Category Input Output, Data Connectors

Hello all,

Currently there are two very distinct kinds of connection:

- in-memory aliases

- in-database aliases

Every single time I use an in-database alias, I have to create the same alias for in-memory, since some operations cannot be performed in-database (such as Pre-SQL statements or interface tools).

What this means for us:

- more complex setup, training and testing

- inefficient workflows that have to deal with two kinds of alias

What I propose:

- a single "connection alias" that can be used for either in-database or in-memory

- one place to configure it

- in-database or in-memory mode determined by the tools you use

Best regards,

Simon

Labels: Category In Database, Category Input Output, New Request, User Settings

Hi everyone,

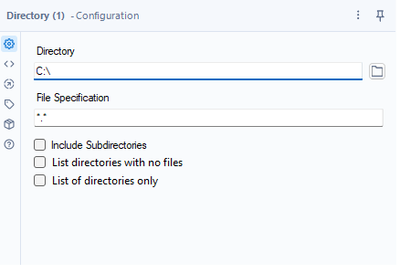

Add two features to the Directory tool, something like this:

Use cases:

1. Since it is not possible to use a folder browse on the Gallery, this could help a basic user create a list of possible folders to select from in a drop-down.

2. Directory analysis for cleaning purposes: currently, getting a list of folders with Alteryx takes forever on big file servers, because Alteryx maps every file.

Today, both can be achieved through regex or a batch (.bat) script.
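For comparison with the script workaround, here is a minimal Python sketch of a folder-only listing. It is an assumption about how such a scan could work outside Alteryx, not a description of the Directory tool's internals: `list_folders` is a hypothetical helper built on the standard `os.scandir`. It still enumerates each folder's entries, but keeps only subfolders, and `DirEntry.is_dir()` can usually answer from the directory scan itself, so files are not individually stat'ed.

```python
import os


def list_folders(root, recursive=False):
    """Return the sorted paths of folders under root, ignoring files.

    Folders that cannot be opened (e.g. no permission) are skipped
    instead of aborting the whole scan.
    """
    folders = []
    pending = [root]
    while pending:
        current = pending.pop()
        try:
            with os.scandir(current) as entries:
                for entry in entries:
                    # follow_symlinks=False avoids looping through linked folders
                    if entry.is_dir(follow_symlinks=False):
                        folders.append(entry.path)
                        if recursive:
                            pending.append(entry.path)
        except PermissionError:
            continue  # unreadable folder: skip it and keep scanning
    return sorted(folders)
```

With `recursive=False` only the top-level folders are returned, which is the kind of list a Gallery drop-down (use case 1) would need; `recursive=True` covers the cleanup analysis (use case 2).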

Thank you,

Fernando Vizcaino

Labels: Category Input Output, Data Connectors

Hello all,

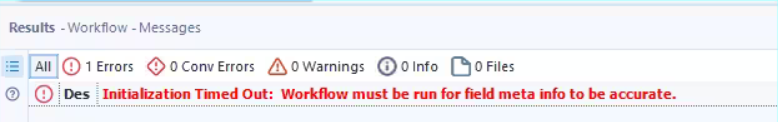

Sometimes, when retrieving your table metadata takes too long, you get this message:

Initialization Timed Out: Workflow must be run for field meta info to be accurate.

From what I understand, the timeout value is driven by Alteryx and the source system. However, in some cases the long retrieval time is "normal", and the timeout really hurts the user experience.

So I would like to be able to change the default timeout value in the settings.

Best regards,

Simon

Labels: Category In Database, Category Input Output, New Request, User Settings

Hi all,

At present, Alteryx does not support DSN-free connections to Snowflake using the Bulk Loader. This is critical functionality for any large company that uses Alteryx, so I'm hoping it can be changed in an upcoming release. As a corollary, every DB connection type has to work without DSNs on any medium or large server instance, so it's worth extending this check to every DB connection type available in Alteryx.

Here are the details:

What is DSN-free?

To run our Alteryx canvasses on a multi-node server, we have to avoid using DSNs, so we generally expand connection strings that look like this:

`odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE`

into a fully described connection string like this:

`odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user`
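A minimal Python sketch of that expansion step, assuming the connection details are kept in an ordered dictionary. `dsn_free_connection` is a hypothetical helper that only assembles the text of a string shaped like the example above (an `odbc:` prefix, `key=value` pairs joined by `;`, and an optional `|||` table suffix as in the DSN example). It does not change how Alteryx or the Snowflake Bulk Loader interprets the string, and it does not implement ODBC brace-quoting, so values containing `;` are rejected.

```python
def dsn_free_connection(driver, params, table=None, prefix="odbc"):
    """Assemble a DSN-free connection string from a driver name and params.

    driver: ODBC driver name, wrapped in braces after DRIVER=.
    params: mapping of key -> value, emitted in insertion order.
    table:  optional table name appended after the '|||' separator.
    """
    parts = ["DRIVER={" + driver + "}"]
    for key, value in params.items():
        if ";" in str(value):
            # brace-quoting of values is not handled in this sketch
            raise ValueError(f"value for {key!r} contains ';' and needs quoting")
        parts.append(f"{key}={value}")
    conn = prefix + ":" + ";".join(parts)
    if table:
        conn += "|||" + table
    return conn
```

Keeping the parameters in one mapping makes it easier to generate the same DSN-free string for every node instead of editing it by hand.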

For the Snowflake Bulk Loader:

Here, the same process does not work, and Alteryx returns the classic error below.

With DSN:

`snowbl:DSN=DSNSnowFlakeTest;UID=Username;pwd=__EncPwd1__;Staging=local;Method=user|||NEWTESTDB.PUBLIC.MYTESTTABLE`

Without DSN:

`snowbl:driver=SnowflakeDSIIDriver;UID=SeanBAdamsJPMC;pwd=__EncPwd1__;SERVER=xnb27844.us-east-1.snowflakecomputing.com;WAREHOUSE=compute_wh;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user|||NEWTESTDB.PUBLIC.MYTESTTABLE`

Many thanks

Sean

Labels: Category Input Output, Data Connectors

The ability to output to Amazon WorkDocs via a dedicated Output tool would be very helpful for anyone looking to use WorkDocs for personal or professional purposes. It would be similar in functionality to the OneDrive connector.

Labels: Category Input Output, New Request

Have you ever had the business deliver an Excel (EEK!) file with a varying number of header rows (because it looks pretty and is convenient) to be passed into Alteryx? Never, you say? Lies!

I suggest adding an option to the Input Data tool to concatenate multiple header rows. This would enable accurate data profiling of the columns on output and eliminate unnecessary conversion errors. Currently, the options allow us to Start Data Input on Line X; however, if a column's header spans multiple rows, the headers have to be entered manually after input, because we can only select the lowest header row to ensure the data is read accurately. The solution would be to specify the number of rows that contain headers, concatenate them into a single row (ignoring nulls and carriage returns), and use that as the header.

With the current functionality, when each column has a variable number of header rows, errors are forced, such as a numeric value being converted to a string in scientific notation.
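The proposed behaviour can be sketched in Python, assuming the sheet has already been read as a list of rows (lists of cell values) with no header applied. `concat_header_rows` is a hypothetical helper, not an existing Input Data option: it merges the first `n_header_rows` rows column by column, skipping null cells and collapsing carriage returns and line feeds, then returns the merged header and the remaining data rows.

```python
def concat_header_rows(rows, n_header_rows, sep=" "):
    """Merge the first n_header_rows rows into a single header row.

    Returns (header, data_rows). Null cells are skipped; whitespace inside
    a cell (including \\r and \\n) is collapsed to single spaces.
    """
    header_rows = rows[:n_header_rows]
    width = max((len(r) for r in rows), default=0)
    header = []
    for col in range(width):
        pieces = []
        for row in header_rows:
            cell = row[col] if col < len(row) else None
            if cell is None:
                continue  # null cell: nothing to contribute
            # split()/join collapses \r, \n, tabs and repeated spaces
            text = " ".join(str(cell).split())
            if text:
                pieces.append(text)
        header.append(sep.join(pieces))
    return header, rows[n_header_rows:]
```

Because the header is built before any data row is typed, numeric columns are no longer forced through a string conversion by a stray header cell.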

Labels: Category Input Output, Data Connectors

If you cancel a workflow while it is writing to a file, the file creation is not rolled back, so a partial file is left behind.

This is problematic for incremental loads that rely on files from previous runs.
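One common way to get the requested rollback behaviour is to write to a temporary file and only swap it in once it is complete. The Python sketch below illustrates that pattern under stated assumptions; it is not how Alteryx writes outputs, and `write_atomically` is a hypothetical helper. `os.replace` is atomic on POSIX when source and target are on the same filesystem, which is why the temporary file is created in the target's own directory.

```python
import os
import tempfile


def write_atomically(path, lines):
    """Write lines to path so a cancelled run never leaves a partial file.

    Data goes to a temporary file in the same directory; only once it is
    fully written and flushed is it renamed over the target. If writing is
    interrupted, the temporary file is deleted and the target is untouched.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8", newline="") as tmp:
            for line in lines:
                tmp.write(line + "\n")
            tmp.flush()
            os.fsync(tmp.fileno())  # make sure the bytes reach the disk
        os.replace(tmp_path, path)  # swap in the complete file in one step
    except BaseException:  # includes KeyboardInterrupt, i.e. a cancel
        os.remove(tmp_path)  # discard the partial temporary file
        raise
```

A file from a previous run therefore stays intact until the new one is fully written, which is what an incremental load needs.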

Labels: Category Input Output, Enhancement

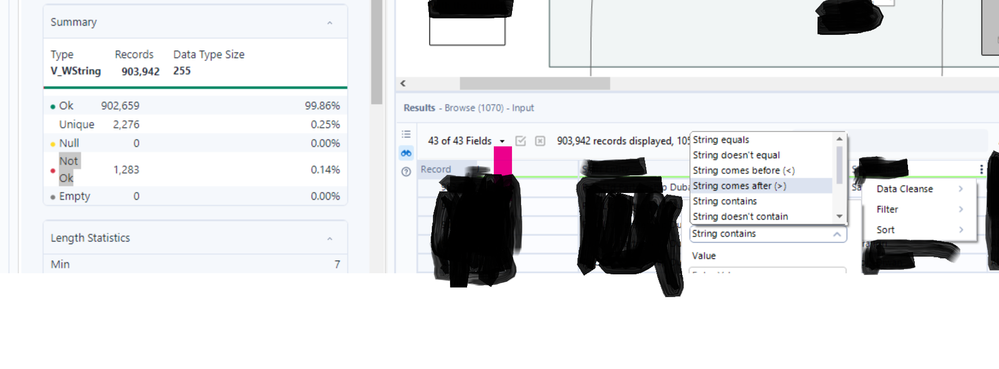

Embed the "Not ok" filter option in the Browse tool.

Labels: Category Input Output, Enhancement, UX

I am a big user of the Browse tool and its filter option. In many cases I filter on multiple columns at the same time, as I'm sure many users do. I suggest the following two enhancements to the filter functionality in the Browse tool:

1. After applying some filters, although I can see the filter icon activate at the top of the tool, it is difficult to know at a glance which columns have filters applied without clicking on every column heading and examining the filter settings. In the event a column is filtered, a filter icon could be provided at the top of the column to easily identify filtered columns, removing the need for users to memorise filtered columns.

2. After applying multiple filters, if a user clicks on another tool within the workflow, or anywhere else on the canvas (even accidentally), all filters are removed and the user has to reapply them. In my view it would make more sense to make the filters persistent, or at least give users that option. This would be a big time saver.

Labels: Category Input Output, Data Connectors

My team relies heavily on Dremio.

It would be great if the Alteryx team added Dremio as a dedicated input data source; it would make configuring and running things much easier for us.

Thanks!

Labels: Category Input Output, Data Connectors

Being able to specify a name for the FileName field in the Input Data tool configuration would help when the input data already contains a field named FileName with a different purpose. Instead of having to use Field Info and other tools to rename the last field to something else (e.g. AYX_FileName), this would be a simpler approach.

Labels: Category Input Output, Enhancement

Please allow users to disable or ignore conversion errors in the SharePoint List Input tool.

In SharePoint List Input, I see the same conversion error about 10 times, and then:

"Conversion Error Limit Reached".

Can you simply show the error once, or allow users to choose to ignore it? (The Union tool allows users to ignore errors.)

I am not using that SharePoint column in my workflow. Meanwhile, I have to show my workflow to a third party within the company, and it is SO annoying to see errors that do not apply to my workflow.

Labels: Category Input Output, Desktop Experience, New Request, User Settings

It would be neat to add a feature to the Output tool to allow grouping rows, with all the data for each group viewable under a drop-down of the selected field.

I've heard this is possible with Power Pivot, but it would be a nice feature to have in Alteryx.

Ex. A listing of all customers in a specific city -> Group by the "Neighborhood" column, the output should be a list of all neighborhoods in the city, with an option to drop down on each neighborhood to see its residents and their relevant data.

Thanks!

Labels: Category Input Output, Data Connectors, Enhancement

Writing to XLSB files using Delete and Append does not behave properly.

Alteryx currently has an issue writing to an XLSB file using the Delete and Append option together with Take File/Table Name From Field.

Issue:

- Old data gets deleted and new data is added, but on the wrong rows.

- New data is added after the location where the old data originally was.

- This output error only occurs for XLSB files when using Take File/Table Name From Field. Static paths are fine.

Workaround:

Create a batch macro to simulate the Take File/Table Name From Field function without actually using it.

Example of the issue:

| Record ID | Original File | Updated File |
|---|---|---|
| 1 | Old Data | |
| 2 | Old Data | |
| 3 | Old Data | |
| … | Old Data | |
| 1200 | Old Data | |
| 1201 | | New Data |
| 1202 | | New Data |
| 1203 | | New Data |
| … | | New Data |

Labels: Category Input Output, Enhancement
