Alteryx Designer Desktop Ideas

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints next to each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels),

> at least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

In some of our larger workflows it's sometimes tedious to run the whole workflow just to see some data after adding something near the beginning. Running it and stopping it as soon as the tool gets a green border is sometimes an option.

It would be convenient to have an option in the context menu to run a workflow only until a specific tool.

In effect, only this specific tool has an output visible for inspection and only the streams necessary for this tool have been run - everything else is ignored and I'm fine to not see data for the other tools.

This would make developing small parts of a larger workflow much faster and more convenient.

Regards

Christopher

PS: Yes, I can put everything else in a container and deactivate it. But a straightforward way without turning containers on and off would be preferable in my opinion. (I think KNIME has something similar.)
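To illustrate the "run only until a specific tool" idea above, here is a minimal sketch of how the set of required tools could be found by walking the connections backwards from the selected tool. It is not how Designer works internally; the function name `tools_needed_for` and the tool names in the sample workflow are hypothetical.

```python
from collections import defaultdict


def tools_needed_for(target, connections):
    """Return the set of tools that must run to produce data at `target`.

    `connections` is a list of (upstream_tool, downstream_tool) pairs.
    """
    # Map each tool to the tools feeding directly into it.
    upstream = defaultdict(set)
    for source, destination in connections:
        upstream[destination].add(source)

    # Walk backwards from the target, collecting every ancestor once.
    needed = set()
    stack = [target]
    while stack:
        tool = stack.pop()
        if tool in needed:
            continue
        needed.add(tool)
        stack.extend(upstream[tool])
    return needed


# Hypothetical workflow: two inputs joined, then two independent branches.
connections = [
    ("Input A", "Join"),
    ("Input B", "Join"),
    ("Join", "Filter"),
    ("Join", "Summarize"),
    ("Summarize", "Output"),
]

print(sorted(tools_needed_for("Filter", connections)))
```

With "Filter" selected, the Summarize and Output branch is not part of the result, so it would not need to run.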

Bring back the Cache checkbox for Input tools. It's cool that we can cache individual tools in 2018.4.

The catch is that for every cache point I have to run the entire workflow. With large workflows that can take a considerable amount of time and hinders development, because I have to run the workflow over and over just to cache all my data.

Add the cache checkbox back for input tools to make the software more user friendly.

Team,

It would be very useful if we could import Excel graphs as images. I've created graphs, tables, and charts using Alteryx tools from raw data (sql queries, etc.), but Excel offers more options. Generating customized emails with Excel graph images in the body instead of Alteryx charts would make this tool all the more powerful.

Idea is to pull in raw data through SQL queries, export data simultaneously to Tableau and Excel, pull back in the Excel graphs that are generated from that data, and create customized emails with links to Tableau workbooks, Excel file attachments, snapshots (graph images), and customized commentary. The Visual Layout tool is very handy for combining different types of data and images for email distribution, and importing Excel graphs as images would make this even better.

Hi Everyone,

Many of the workflows that my colleagues and I work with pull data from big databases. After a few steps downstream and some testing, we normally just add an output and then open that data in a new workflow, to save time re-running the original workflow. This isn't much of a burden, but I am used to copying and pasting tools from workflow A to workflow B, and you can't do that with the output, because in workflow B the output needs to be converted to an input. I just think it would be a cool added feature if possible. Anyone else agree?

Thank you,

Justin

Hi there,

Would it be possible to use the ISO week date system, which everybody uses?

In numerous workflows, I used to define the week number of a date with the formula DateTimeFormat(d, "%W"), and it worked perfectly until the 1st of January 2019.

But from this date, the week numbers defined by Alteryx are not the same as the ones in the calendar we all use!

Alteryx says that from the 1st to the 6th of January 2019, the week number is "00" instead of "01"! And so on until week 52 which, for Alteryx, runs from the 30th to the 31st of December 2019: week 52 has only 2 days!

Because 2018 began on a Monday, there was no problem, but for 2019 the numbering is off, and the same will happen in 2020...

The rule from the ISO week date system is pretty simple: "The ISO 8601 definition for week 01 is the week with the Gregorian year's first Thursday in it."

Please correct that

Simon
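The discrepancy described above can be reproduced outside Alteryx. The sketch below uses Python's standard library, on the assumption that Alteryx's `%W` follows the same C-library convention as Python's `strftime("%W")` (Monday-based weeks, with the days before the first Monday counted as week 00). That assumption matches the behaviour reported in the post but is not confirmed here. The helper name `week_numbers` is hypothetical.

```python
from datetime import date


def week_numbers(d):
    """Return (strftime %W week, ISO year, ISO week) for a date."""
    iso_year, iso_week, _ = d.isocalendar()
    return d.strftime("%W"), iso_year, iso_week


for d in (date(2018, 1, 1), date(2019, 1, 1), date(2019, 12, 30)):
    print(d.isoformat(), week_numbers(d))
```

For 1 January 2019 this gives `%W` week "00" but ISO week 1, and for 30 December 2019 it gives `%W` week "52" but ISO week 1 of ISO year 2020. The ISO year can differ from the calendar year, so an ISO week number is only unambiguous when paired with its ISO year.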

This one may be a slightly niche request, but it has popped up a couple of times in my work so I thought I would submit. When importing multiple sheets from an Excel workbook using either the Dynamic Input or wildcard in the Input Data tool, I sometimes get an error that the schemas don't match. While this initially seems like the headers may be different, it can often occur because Alteryx is casting the columns as different data types (as I understand it, based on a sample of the incoming data). While this is often helpful, in this case it becomes problematic.

This has been asked several times on the discussion board including here and here. To work around this issue, I have gone the batch macro route, but this could be solved more simply for the end user by adding an additional checkbox to the Input Data configuration when an Excel file is selected that imports all fields as strings (similar to the standard CSV input). Then the schema could be specified by the user with a Select tool once all of the data is brought in.

I'm not sure if this would be valuable for other input data types where a schema is more explicitly specified, but for any where Alteryx is guessing the schema, I feel like it could be helpful to give the user a bit more control.
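The "import everything as strings, then set the schema" approach suggested above can be sketched in plain Python. This illustrates the idea only and is not Alteryx behaviour; the sample data and the helper names `read_as_strings` and `to_float_or_none` are hypothetical.

```python
import csv
import io

# Two hypothetical sheets exported as text; column X looks numeric in one
# and textual in the other, which is what trips up type inference.
sheet_1 = "ID,X\n1,10.5\n2,7\n"
sheet_2 = "ID,X\n3,N/A\n4,12\n"


def read_as_strings(text):
    """Read delimited text, keeping every field as a string."""
    return list(csv.DictReader(io.StringIO(text)))


def to_float_or_none(value):
    """Cast a string to float, returning None when it is not numeric."""
    try:
        return float(value)
    except ValueError:
        return None


# Union the sheets first (schemas match because everything is a string) ...
rows = read_as_strings(sheet_1) + read_as_strings(sheet_2)

# ... then apply the intended types in one explicit step.
typed = [{"ID": int(r["ID"]), "X": to_float_or_none(r["X"])} for r in rows]
print(typed)
```

Because typing happens once, after the union, a stray text value in one sheet becomes an explicit null rather than a schema mismatch at read time.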

Hello,

The randomForest package implementation in Alteryx works fine for smaller datasets but becomes very slow for large datasets with many features.

There is the open-source Ranger package https://arxiv.org/pdf/1508.04409.pdf that could help with this.

Along with XGBoost/LightGBM/CatBoost it would be an extremely welcome addition to the predictive package!

The "Manage Data Connections" tool is fantastic to save credentials alongside the connection without having to worry when you save the workflow that you've embedded a password.

Imagine if - there were a similar utility to handle credentials/environment variables.

- I could create an entry, give it a description, a username, and an encrypted password stored in my options, then refer to that for configurations/values throughout my workflows.

- Tableau credentials in the publish to tableau macro

- Sharepoint Credentials in the sharepoint list connector

- When my password changes I only have to change it in one place

- If I hand off the workflow to another user I don't have to worry about scanning the XML to make sure I'm not passing them my password

- When a user opens my workflow that doesn't have a corresponding entry in their credentials manager they would be prompted using my description to add it.

- Entries could be exported and shared as well (with passwords scrubbed)

Example Entry Tableau:

| Field | Value |
|---|---|
| Alias | Tableau Prod |
| Description | Tableau Production Server |
| UserID | JPhillips |
| Password | ********* |

Then when configuring a tool you could put in something like [Tableau Prod].[Password] and it would read in the value.

Or maybe for Sharepoint:

| Field | Value |
|---|---|
| Alias | TeamSP |
| Description | Team sharepoint location |
| UserID | JPhillips |
| Password | ********* |
| URL | http://sharepoint.com/myteam |

Or perhaps for a team file location:

| Field | Value |
|---|---|
| Alias | TeamFiles |
| Description | Root directory for team files |
| Path | \\server.net\myteam\filesgohere |

Any of these values could be referenced in tool configurations, formulas, macro inputs by specifying the Alias and field.
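As a rough illustration of how `[Alias].[Field]` references like the ones above might be resolved, here is a minimal sketch. The in-memory `CREDENTIALS` dictionary, the dummy password, and the `resolve` function are all hypothetical; a real implementation would read from an encrypted store rather than plain text.

```python
import re

# Hypothetical credential store keyed by alias; values here are dummies.
CREDENTIALS = {
    "Tableau Prod": {"UserID": "JPhillips", "Password": "dummy-password"},
    "TeamFiles": {"Path": r"\\server.net\myteam\filesgohere"},
}

# Matches references of the form [Alias].[Field].
REFERENCE = re.compile(r"\[([^\[\]]+)\]\.\[([^\[\]]+)\]")


def resolve(text, store=CREDENTIALS):
    """Replace every [Alias].[Field] reference in `text` with its stored value."""
    def lookup(match):
        alias, field = match.group(1), match.group(2)
        try:
            return store[alias][field]
        except KeyError:
            # A missing entry is where a prompt to the user could be triggered.
            raise KeyError(f"No entry for [{alias}].[{field}]") from None
    return REFERENCE.sub(lookup, text)


print(resolve(r"[TeamFiles].[Path]\report.xlsx"))
```

Raising on a missing alias, rather than silently leaving the reference in place, corresponds to the point in the post where a user without a matching entry would be prompted to add one.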

The current AWS S3 upload and download tools use long-term keys (Access Key and Secret Key), which can be a security risk.

Adding support for short-term keys to the tools - https://docs.aws.amazon.com/IAM/latest/UserGuide/id_credentials_temp_request.html - would allow the use of session keys that expire after a specified duration.

Thanks

Make the Container Caption Font Size Adjustable

I find it helpful to see the entire workflow at once. It would be very helpful for the container caption font size to be adjustable. For example, I am documenting a workflow with many containers and tools. The containers represent segments of my workflow. When I am looking at or printing the entire workflow, the container caption is too small to be read. If the font size were adjustable, it could be increased to be readable and still fit easily into the length of the container.

Thanks to zuojing80 and tcroberts for their comments on 9/10/2018.

So far, Alteryx products are offered in 6 different languages, which is a great thing indeed!

However, there is no toggle option to effortlessly switch the interface to a different language.

As a standard feature, users should be allowed to switch language without re-installing the product (applicable to all Alteryx products).

We are working on building out training content in a story mode and would like to have short snippets playing in a loop for people to see embedded in the workflow. Currently you can add a .gif to a comment background, and it will show a still image on the workflow itself but functions as a gif in the configuration display. The interesting part is that while you are running the workflow the .gif plays, and then it pauses when the workflow has completed!

I often copy chunks of a workflow and paste them into the same workflow (or a different one). The paste always seems to land just diagonally below the upper-leftmost tool. This creates a real mess. I'd like to be able to select a small area within the work area and have the chunk of workflow I'm pasting drop there - instead of on top of the existing build.

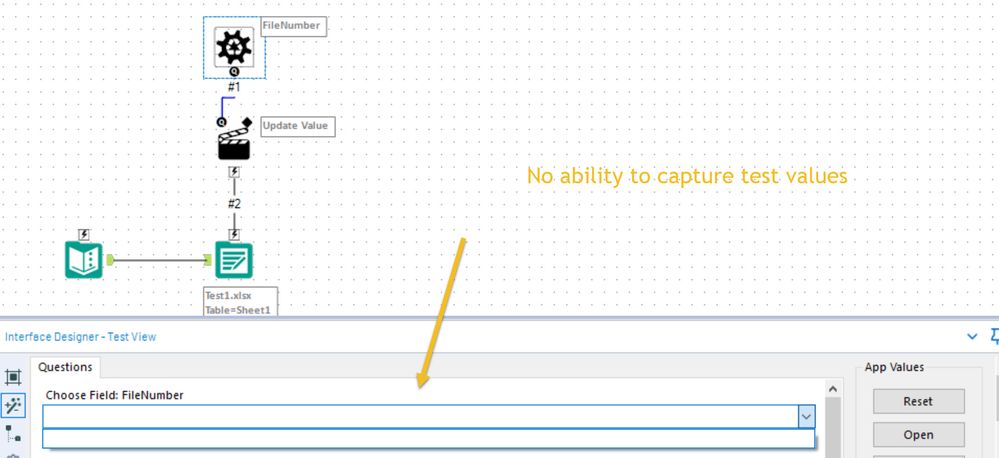

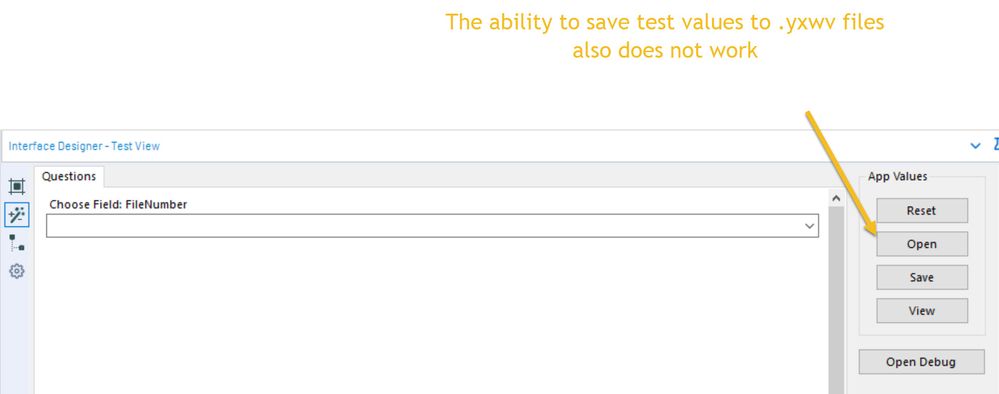

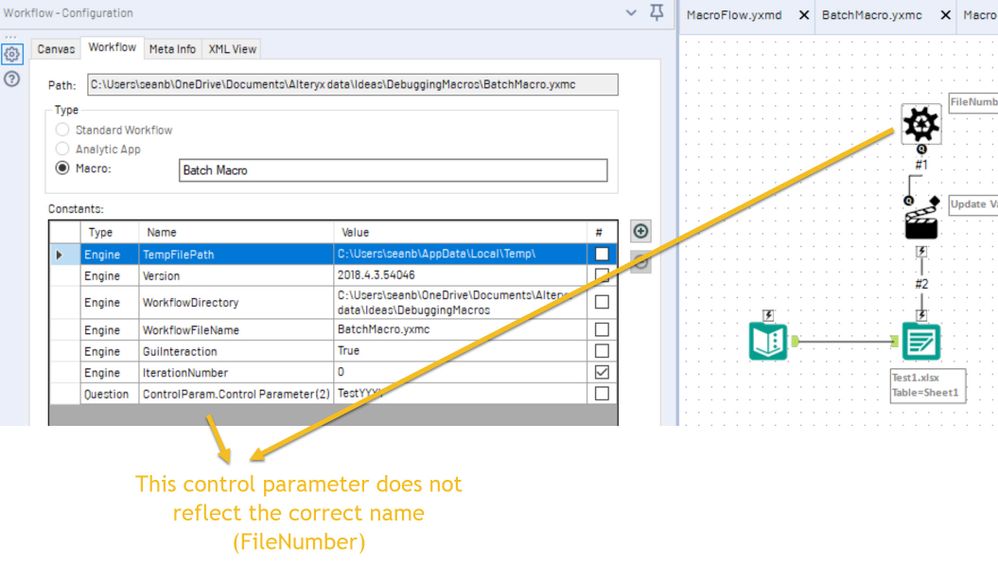

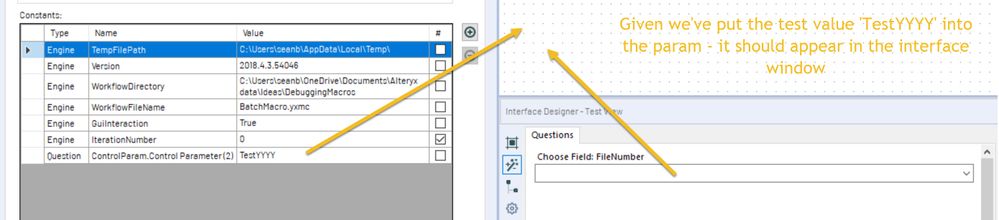

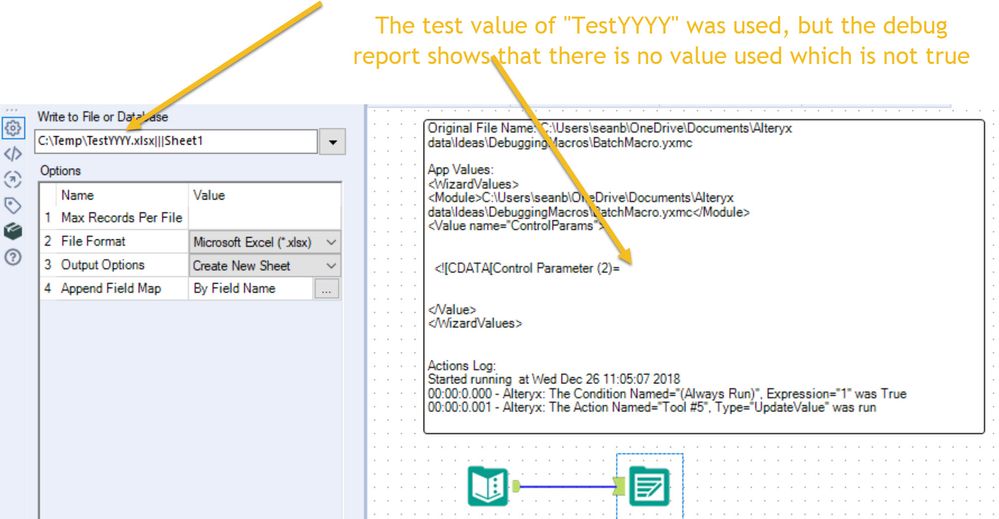

When building and debugging batch macros - it is important to be able to add test values and use these for debugging. However, the input values in the interface tools section do not allow input, and the ability to save or load test values also does not work.

While there is a workaround - setting the values in the workflow variables - this does not work fully (it doesn't reflect in the interface view; and is incorrect in the debug report) and is inconsistent with all other macro types.

Please could you make this consistent with other ways of testing & debugging macros?

All screenshots and examples attached

Screenshot 1: not possible to capture test values

Screenshot 2: saving and loading test values does not work

Screenshot 3: Workaround by using workflow variables

Screenshot 4: Values entered do not reflect properly

Screenshot 5: Debug works partially

Currently, the Dynamic Input tool can read the same field in two different files as two different types, which causes an error while importing the files. For example, a field called X in one file has type 'Double' while the same field in the other file has type 'V_String'. The tool then reports that the schemas are different, even though the field names are the same.

Experts:

The Select Tool is great - except when it comes to reordering a large number of fields for a custom output, load etc. Single clicking every time you need to move a field up or down is time consuming (the ability to highlight multiple fields and move them in unison is great - assuming they are already in the right order).

I suggest two improvements to the Select Tool:

1) The ability to select a field and hold down either the "Up" or "Down" arrow so the field keeps moving without requiring one click per row and

2) The ability to drag and drop fields (skip clicking altogether if desired)

The combination of these 2 functionalities (or even one of them) will make field reordering much more efficient when using the Select Tool.

Thanks!

Hello All,

I received from an AWS adviser the following message:

_____________________________________________

Skip Compression Analysis During COPY

Checks for COPY operations delayed by automatic compression analysis.

Rebuilding uncompressed tables with column encoding would improve the performance of 2,781 recent COPY operations.

This analysis checks for COPY operations delayed by automatic compression analysis. COPY performs a compression analysis phase when loading to empty tables without column compression encodings. You can optimize your table definitions to permanently skip this phase without any negative impacts.

Observation

Between 2018-10-29 00:00:00 UTC and 2018-11-01 23:33:23 UTC, COPY automatically triggered compression analysis an average of 698 times per day. This impacted 44.7% of all COPY operations during that period, causing an average daily overhead of 2.1 hours. In the worst case, this delayed one COPY by as much as 27.5 minutes.

Recommendation

Implement either of the following two options to improve COPY responsiveness by skipping the compression analysis phase:

Use the column ENCODE parameter when creating any tables that will be loaded using COPY.

Disable compression altogether by supplying the COMPUPDATE OFF parameter in the COPY command.

The optimal solution is to use column encoding during table creation since it also maintains the benefit of storing compressed data on disk. Execute the following SQL command as a superuser in order to identify the recent COPY operations that triggered automatic compression analysis:

WITH xids AS (

SELECT xid FROM stl_query WHERE userid>1 AND aborted=0

AND querytxt = 'analyze compression phase 1' GROUP BY xid)

SELECT query, starttime, complyze_sec, copy_sec, copy_sql

FROM (SELECT query, xid, DATE_TRUNC('s',starttime) starttime,

SUBSTRING(querytxt,1,60) copy_sql,

ROUND(DATEDIFF(ms,starttime,endtime)::numeric / 1000.0, 2) copy_sec

FROM stl_query q JOIN xids USING (xid)

WHERE querytxt NOT LIKE 'COPY ANALYZE %'

AND (querytxt ILIKE 'copy %from%' OR querytxt ILIKE '% copy %from%')) a

LEFT JOIN (SELECT xid,

ROUND(SUM(DATEDIFF(ms,starttime,endtime))::NUMERIC / 1000.0,2) complyze_sec

FROM stl_query q JOIN xids USING (xid)

WHERE (querytxt LIKE 'COPY ANALYZE %'

OR querytxt LIKE 'analyze compression phase %') GROUP BY xid ) b USING (xid)

WHERE complyze_sec IS NOT NULL ORDER BY copy_sql, starttime;

Estimate the expected lifetime size of the table being loaded for each of the COPY commands identified by the SQL command. If you are confident that the table will remain under 10,000 rows, disable compression altogether with the COMPUPDATE OFF parameter. Otherwise, create the table with explicit compression prior to loading with COPY.

_____________________________________________

When I ran the suggested query to check the COPY commands executed, I realized that all of them came from the Redshift bulk output from Alteryx.

Is there any way to implement this “Skip Compression Analysis During COPY” in Alteryx to maximize performance as suggested by AWS?

Thank you in advance,

Gabriel
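For reference, the second option in the quoted recommendation amounts to adding `COMPUPDATE OFF` to the COPY statement. The sketch below only builds the statement text and applies the 10,000-row rule of thumb from the quoted message; it does not reflect how the Alteryx Redshift bulk loader generates its COPY commands. The function name, table, bucket, and IAM role are hypothetical.

```python
ROW_THRESHOLD = 10_000  # row count quoted in the recommendation above


def copy_statement(table, s3_path, iam_role, expected_rows):
    """Build a Redshift COPY statement as text.

    Adds COMPUPDATE OFF only when the table is expected to stay small.
    """
    clauses = [f"COPY {table}", f"FROM '{s3_path}'", f"IAM_ROLE '{iam_role}'"]
    if expected_rows < ROW_THRESHOLD:
        clauses.append("COMPUPDATE OFF")
    return "\n".join(clauses) + ";"


# Hypothetical table, bucket, and role names.
print(copy_statement(
    "staging.small_lookup",
    "s3://example-bucket/small_lookup/",
    "arn:aws:iam::123456789012:role/ExampleRedshiftRole",
    expected_rows=2_500,
))
```

For larger tables the statement is left unchanged here, since the quoted advice is to create those tables with explicit column encodings before loading.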

Workflow Dependencies is a great feature. It would be even better if you could save several configurations and switch between them with a single click, saving the effort of copying and pasting. For example:

| Configuration Name | Local | Test | Production | Custom |
|---|---|---|---|---|
| Dependency 1 | Path 1A | Path 1B | Path 1C | (Null) |
| Dependency 2 | Path 2A | Path 2B | Path 2C | (Null) |
| Dependency 3 | Path 3A | Path 3B | Path 3C | (Null) |

Something similar to the Field Configuration (Field Type Files) for Select Tool.

Another option would be to save several dependencies there so that you can choose them from the drop-down list of the Input and Output tools.

This would facilitate sharing and deployment.

It would make life a bit easier and provide a more seamless experience if Gallery admins could create and share In-Database connections from "Data Connections" the same way in-memory connections can be shared.

I'm aware of workarounds (create System DSN on server machine, use a connection file, etc.), but those approaches require additional privileges and/or tech savvy that line-of-business users might not have.

Thanks!

In the Configuration section of the Formula tool, the “Output Column” area is resizable. However, it has a limit that needs to be increased. Several of the column names I work with are not clearly identifiable with the current sizing constraint. I do not think the sizing needs to be constrained.
