Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by displaying the image dimension constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
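A minimal sketch of the balanced split suggested in the second point, assuming the training data is a list of (image path, label) pairs. Every label is down-sampled to the size of the rarest label, then each label is split into train/validation with the same ratio. `balanced_split` and its parameters are hypothetical, not part of the Image Recognition tool.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[str, str]  # (image path, label)


def balanced_split(samples: List[Sample], train_ratio: float = 0.8,
                   seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Down-sample every label to the rarest label's count, then split each
    label into train/validation with the same ratio so both sets stay balanced."""
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)
    per_label = min(len(group) for group in by_label.values())
    n_train = int(per_label * train_ratio)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train: List[Sample] = []
    valid: List[Sample] = []
    for label in sorted(by_label):
        group = rng.sample(by_label[label], per_label)  # random subset, no repeats
        train.extend(group[:n_train])
        valid.extend(group[n_train:])
    return train, valid
```

With 10 "cat" images and 4 "dog" images, both labels are cut to 4, giving 3 + 3 training images and 1 + 1 validation images.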

Question: do you plan, in the future, to let the user choose between CPU and GPU usage?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello gurus -

I think it would be an important safety valve if, at application startup, duplicate macros found in the 'classpath' (i.e., https://help.alteryx.com/current/server/install-custom-tools) generated a warning to the user. I know that if you have the same macro in the same folder you can get a warning at load time, but this doesn't seem to propagate to the different tiers on the macro loading path. As a result, developers can find themselves with difficult-to-diagnose behavior in which the tool seems confused about which macro metadata to use. I can also imagine a developer not using the version of the macro they were expecting unless they go to the Workflow tab for every custom macro on their canvas.

Thank you for attending my TED talk on the upsides of providing warnings at startup of duplicate macros in different folder locations.
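A rough sketch of the proposed startup check, assuming macros are `.yxmc` files found by scanning a list of search folders (the folder list is a stand-in for whatever tiers Designer actually loads from). Files are grouped by case-insensitive name and any name found more than once is reported with every location. The function names are hypothetical.

```python
from collections import defaultdict
from pathlib import Path
from typing import Dict, List


def find_duplicate_macros(search_paths: List[Path]) -> Dict[str, List[Path]]:
    """Map each macro file name that occurs more than once across the search
    folders (including subfolders) to every location where it was found."""
    seen: Dict[str, List[Path]] = defaultdict(list)
    for folder in search_paths:  # folders are scanned in search order
        for macro in sorted(folder.rglob("*.yxmc")):
            seen[macro.name.lower()].append(macro)  # Windows names are case-insensitive
    return {name: paths for name, paths in seen.items() if len(paths) > 1}


def warn_on_duplicates(search_paths: List[Path]) -> List[str]:
    """Build one human-readable warning per duplicated macro name."""
    warnings = []
    for name, paths in sorted(find_duplicate_macros(search_paths).items()):
        locations = ", ".join(str(p) for p in paths)
        warnings.append(f"Duplicate macro '{name}' found in: {locations}")
    return warnings
```

Because locations are listed in search order, the first path in each warning is the one a first-match loader would pick, which is exactly the information needed to diagnose the confusion described above.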

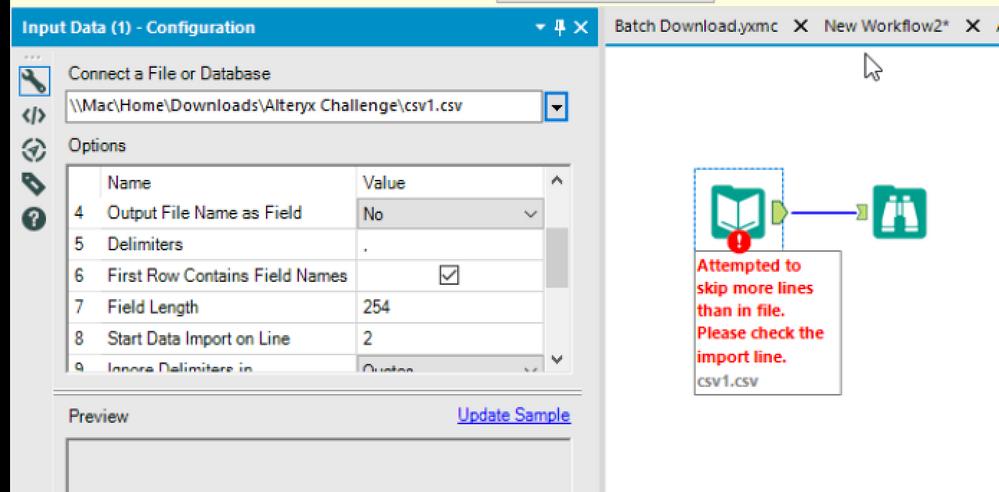

When running Alteryx under Parallels, we were unable to start reading a CSV file at a specific line (greater than 1).

Message: Attempted to skip more lines than in file. Please check the import line.

The filename includes a UNC path. We attempted to change the workflow dependency to be relative (all options were tried). Alteryx could not (or did not) change the path, because under Parallels the directory is always a UNC path.

This problem was posted in the discussions and I'm entering it here in ideas for remediation.

Cheers,

Mark

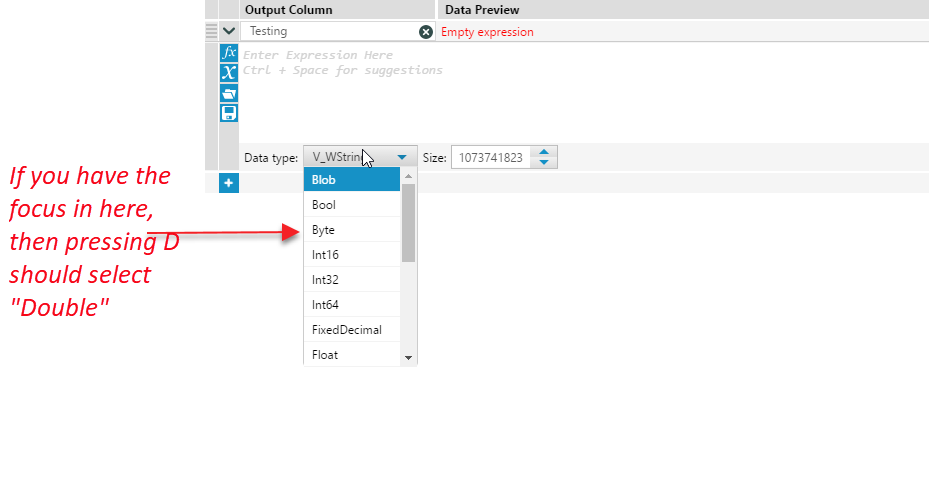

When creating a formula with the formula tool, it would be useful to be able to quickly tab into the data type column, then press D for Double and select this quickly.

Right now, you cannot use the keyboard to type ahead in this field, so every Double field requires you to click the field, open the drop-down, move the mouse to the bottom of the scroll window, scroll down, then move the mouse back up to Double.

(or "S" for Spatial, etc.; this is standard for most drop-down boxes)
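The type-ahead behaviour being requested is the usual drop-down convention: pressing a letter jumps to the next option starting with that letter, wrapping around, so repeated presses cycle through the matches. A small sketch over a list of type names (the list in the usage example is illustrative, not necessarily the Formula tool's exact set or order):

```python
from typing import List, Optional


def type_ahead(options: List[str], current: Optional[int], key: str) -> Optional[int]:
    """Return the index of the next option after `current` (wrapping around)
    whose first letter matches `key`; keep `current` if nothing matches."""
    key = key.lower()
    start = -1 if current is None else current
    n = len(options)
    for step in range(1, n + 1):
        i = (start + step) % n
        if options[i].lower().startswith(key):
            return i
    return current
```

For example, with `["Bool", "Double", "Date", "String", "SpatialObj"]`, pressing D from no selection lands on "Double", pressing D again moves to "Date", and a third press wraps back to "Double".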

In addition to the existing functionality, it would be good if the following functionality could also be provided.

1) Pattern Analysis

This would help profile the data better, confirm that data conforms to a standard or particular pattern, identify outliers, and take the necessary corrective action.

For example, email addresses could be translated from 'abc@gmail.com' to 'nnn@nnnnn.nnn', so the outliers would be values in which '@' or '.' is not present.

Another example might be phone numbers: 12345-678910 translated to 99999-999999, 123-456-78910 translated to 999-999-99999, (123)-(456):78910 translated to (999)-(999):99999, etc.

It would also help to have the Pattern Frequency Distribution alongside.

From the above example we can see that phone numbers exist in 3 different patterns, which might call for relevant standardization rules.
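A minimal sketch of the pattern translation and the pattern frequency distribution, using the convention from the examples above (letters become 'n', digits become '9', punctuation is kept). The function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable


def to_pattern(value: str) -> str:
    """Letters become 'n', digits become '9'; every other character is kept."""
    return "".join("n" if ch.isalpha() else "9" if ch.isdigit() else ch
                   for ch in value)


def pattern_frequency(values: Iterable[str]) -> Dict[str, int]:
    """Pattern frequency distribution, most common pattern first."""
    return dict(Counter(to_pattern(v) for v in values).most_common())
```

Running `pattern_frequency` on the three phone formats above yields three distinct patterns, and an email whose pattern contains no '@' becomes an easy outlier to filter.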

2) More granular control of profiling

It would be good if the profiling options in the tool (like Unique, Histogram, Percentile25, etc.) could be selected differently for each field.

A sub-idea here might also be to check data against external third-party data providers (e.g., USPS ZIP validation), but that would only be meaningful for selected address fields, so granular control to select the type of profiling for individual fields would make sense.

Note: when implementing granular control, we would also need to figure out how to present the final report in a more user-friendly format, as it might not conform to a standard table-like definition.
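A minimal sketch of per-field profiling, assuming rows are dictionaries of string values and the configuration maps each field to the set of metrics wanted for it. The metric names and `profile` are hypothetical; a Histogram or Percentile25 metric would slot into `METRICS` the same way.

```python
from typing import Any, Callable, Dict, List, Optional, Set

Row = Dict[str, Optional[str]]

# Each metric receives all values of one field; blanks and None count as null.
METRICS: Dict[str, Callable[[List[Optional[str]]], Any]] = {
    "unique": lambda vals: len({v for v in vals if v}),
    "nulls": lambda vals: sum(v is None or v == "" for v in vals),
    "max_length": lambda vals: max((len(v) for v in vals if v), default=0),
}


def profile(rows: List[Row], config: Dict[str, Set[str]]) -> Dict[str, Dict[str, Any]]:
    """Compute only the metrics selected for each field; unlisted fields are skipped."""
    report: Dict[str, Dict[str, Any]] = {}
    for field, metric_names in config.items():
        values = [row.get(field) for row in rows]
        report[field] = {name: METRICS[name](values) for name in sorted(metric_names)}
    return report
```

The result is a nested mapping rather than a flat table, since each field can carry a different set of metrics; that is the report-format question raised in the note above.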

3) Uniqueness

Given the ongoing importance of identifying duplicates so that analytic results are valid, more uniqueness profiling could be added.

For example, Soundex, which is based on how similar or different two values sound.

Distance, which is based on how many changes are needed to turn one value into another, etc.

So alongside the Unique counts, we could also have counts where uniqueness factors in Soundex, Distance and other related algorithms.

For example, if the First Name field contains the following data:

Jerry

Jery

Nick

Greg

Gregg

The number of Unique records would be 5, whereas the number of Soundex-unique values would be only 3, which would open up more data exploration opportunities to see whether Jerry/Jery and Greg/Gregg are really the same person/customer, etc.
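A sketch of both uniqueness measures on the First Name example: American Soundex (the classic letter-plus-three-digits code) and, as one common reading of "Distance", Levenshtein edit distance. Neither function is an existing Alteryx feature; they only illustrate the counts described above.

```python
from typing import Iterable

# American Soundex digit for each consonant; vowels, y, h and w have no code.
_CODES = {c: d for d, letters in {"1": "bfpv", "2": "cgjkqsxz", "3": "dt",
                                  "4": "l", "5": "mn", "6": "r"}.items()
          for c in letters}


def soundex(name: str) -> str:
    letters = [c for c in name.lower() if c.isalpha()]
    if not letters:
        return ""
    result = letters[0].upper()
    prev = _CODES.get(letters[0], "")
    for c in letters[1:]:
        code = _CODES.get(c, "")
        if code and code != prev:  # skip repeats of the same code
            result += code
            if len(result) == 4:
                break
        if c not in "hw":  # h/w do not separate equal codes; vowels do
            prev = code
    return result.ljust(4, "0")


def soundex_unique_count(values: Iterable[str]) -> int:
    return len({soundex(v) for v in values})


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                 # deletion
                           cur[j - 1] + 1,              # insertion
                           prev[j - 1] + (ca != cb)))   # substitution
        prev = cur
    return prev[-1]
```

Jerry and Jery both encode to J600 and Greg and Gregg both to G620, so the five names collapse to three Soundex codes; each of those pairs is also only one edit apart.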

4) Custom Rule Conformance

I think it would also be good if functionality similar to the Multi-Row Formula tool could be provided, so that we can check conformance to custom business rules.

For example, it might be more helpful to check how many Age Units (Days/Months/Years) are blank/null where the related Age Number (1, 10, 50, etc.) is populated, rather than having a vanilla count of null and not-null values for individual (but related) columns.
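A minimal sketch of cross-field rule checking, assuming rows are dictionaries and each rule is a named predicate that returns True when a row violates it. The field names `AgeNumber`/`AgeUnit` and the rule wording are hypothetical stand-ins for the example above.

```python
from typing import Callable, Dict, List, Optional

Row = Dict[str, Optional[str]]


def is_blank(value: Optional[str]) -> bool:
    return value is None or value.strip() == ""


# Hypothetical rule set: each predicate returns True when a row breaks the rule.
RULES: Dict[str, Callable[[Row], bool]] = {
    "AgeUnit missing while AgeNumber populated":
        lambda row: not is_blank(row.get("AgeNumber")) and is_blank(row.get("AgeUnit")),
}


def check_rules(rows: List[Row], rules: Dict[str, Callable[[Row], bool]] = RULES) -> Dict[str, int]:
    """Count rule violations instead of plain per-column null counts."""
    return {name: sum(1 for row in rows if violated(row)) for name, violated in rules.items()}
```

On rows like `{"AgeNumber": "50", "AgeUnit": ""}` and `{"AgeNumber": None, "AgeUnit": None}`, only the first counts as a violation, whereas a vanilla null count on AgeUnit would flag both.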

Thanks,

Rohit

This setting is currently in the Options menu under user settings, but I think it would be more intuitive and more consistent with the norm for most software if the check box were directly on the splash screen.

I would like to see functionality that allows the user to select specific tool(s) and run them. It looks like the workflow caches data at each tool, so it should be doable to let me run a specific tool from any point.

I often run my new workflow, realize I have forgotten a Browse somewhere, and then have to add it and run the whole thing again. Instead, it would be ideal to insert my Browse as usual, select the tool before that Browse, and run just that one.

Do you guys think this would also be a useful capability?
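The reasoning above (tools cache their output, so a single tool can run from its cached inputs) can be sketched with a toy model in which each tool is a function of its upstream outputs. The `Workflow` class is hypothetical and says nothing about how Designer's engine actually caches.

```python
from typing import Any, Callable, Dict, List, Sequence


class Workflow:
    """Toy workflow: each tool is a function of its upstream tools' outputs."""

    def __init__(self) -> None:
        self.tools: Dict[str, Callable[..., Any]] = {}
        self.inputs: Dict[str, List[str]] = {}
        self.cache: Dict[str, Any] = {}  # last output of each tool

    def add(self, name: str, fn: Callable[..., Any], inputs: Sequence[str] = ()) -> None:
        self.tools[name] = fn
        self.inputs[name] = list(inputs)

    def run_tool(self, name: str) -> Any:
        """Run only `name`, feeding it cached upstream outputs; an upstream
        tool is executed only if it has no cached output yet."""
        args = [self.cache[up] if up in self.cache else self.run_tool(up)
                for up in self.inputs[name]]
        self.cache[name] = self.tools[name](*args)
        return self.cache[name]

    def run_all(self) -> None:
        """Full run: clear the cache and execute every tool once."""
        self.cache.clear()
        for name in self.tools:
            if name not in self.cache:  # upstream tools are pulled in recursively
                self.run_tool(name)
```

After a full run, adding a Browse and calling `run_tool` on it executes only the Browse. A real implementation would also have to invalidate cached outputs downstream of any tool whose configuration changed.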

It would be great to have a dynamic input tool for the SFDC connector that updates SOQL queries on the fly.
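As a sketch of what such a dynamic input might do with incoming values, assume the query is a template with a single `{ids}` placeholder filled from a list of record IDs. The placeholder convention and `build_soql` are hypothetical; values are quoted as SOQL string literals, with backslashes and single quotes escaped by a backslash.

```python
from typing import Iterable


def soql_literal(value: str) -> str:
    """Quote a value as a SOQL string literal, escaping \\ and '."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def build_soql(template: str, ids: Iterable[str]) -> str:
    """Fill the hypothetical {ids} placeholder with a quoted, comma-separated list."""
    return template.format(ids=", ".join(soql_literal(i) for i in ids))
```

For example, `build_soql("SELECT Id, Name FROM Account WHERE Id IN ({ids})", ["001A", "001B"])` produces `SELECT Id, Name FROM Account WHERE Id IN ('001A', '001B')`.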

I spend too much time repositioning tools by hand to minimize space between tools (especially when trying to parse messy XML documents). It would be great to have a feature that automatically reduces the space between the set of selected tools, such as "Distribute Horizontally and Compact". This would help free up canvas space.
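A possible behaviour for "Distribute Horizontally and Compact", sketched under the assumption that each selected tool has a left x-coordinate and a width: keep the left-to-right order and the leftmost position, then pack the tools with a fixed gap. `Tool` and `compact_horizontally` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Tool:
    name: str
    x: float      # left edge on the canvas
    width: float


def compact_horizontally(tools: List[Tool], gap: float = 20.0) -> None:
    """Keep the selection's order and leftmost position, but place
    consecutive tools exactly `gap` apart (modifies the tools in place)."""
    ordered = sorted(tools, key=lambda t: t.x)
    if not ordered:
        return
    cursor = ordered[0].x
    for tool in ordered:
        tool.x = cursor
        cursor += tool.width + gap
```

Tools at x = 100, 400 and 900 (widths 40, 40, 60) end up at 100, 160 and 220 with a gap of 20.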

I understand that the font types available for Interactive Charts are limited to 3 fonts, whereas tables and other parts of reporting have more options. This makes it difficult to create a consistent report layout (e.g., one that uses a single font type). I would guess it is not too difficult to add all the fonts available in reporting to the Interactive Chart tool?

It would be nice to improve upon the 'Block Until Done' tool.

Additional Features I could see for this tool:

1: Allow any tool (even an Output tool) to be linked as an incoming connection to a 'Block Until Done' tool.

2: Allow multiple tools to be linked to a 'Block Until Done' tool (similar to the 'Union' tool).

The functionality I see for this is to enable Alteryx to set the order of operations for workflows, allowing people to automate processes in the same way they used to do them. I understand there's a workaround using CReW Macros (Runner/Conditional Runner) that can essentially accomplish this; however (and I may be wrong), it feels like a workaround rather than the tool working the way one would expect, and I lose the ability to track/log/troubleshoot my workflow as it progresses (or if it has an issue).

Happy to hear if something like that exists. Just looking for ways to ensure order of operation is followed for a particular workflow I am managing.

Thanks,

Randy
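The ordering described above is a dependency graph: a step may start only after every step it waits on has finished, which is what linking several tools into one 'Block Until Done' would express. Python's standard `graphlib.TopologicalSorter` (3.9+) is enough to sketch it. The step names are hypothetical, and this models the requested behaviour, not how the Block Until Done tool works today.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_in_order(steps: Dict[str, Callable[[], None]],
                 waits_on: Dict[str, Set[str]]) -> List[str]:
    """Run each step only after all steps it waits on have finished, and
    return the execution order so progress can be logged and traced."""
    order: List[str] = []
    sorter = TopologicalSorter({name: waits_on.get(name, set()) for name in steps})
    for name in sorter.static_order():  # raises CycleError if steps wait on each other
        steps[name]()
        order.append(name)
    return order
```

With `write_a` and `write_b` both waiting on `load`, and `email` waiting on both writes, `load` always runs first and `email` last.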

All the other file types have differently coloured icons, which works well to differentiate them in a directory. It's currently impossible to tell workflow and database icons apart.

The trade area tool currently allows for

- Radius (miles)

- Radius (Km)

- Drivetime Minutes

We would like to see:

- Driving Distance

Two benefits of this:

- Mapping of driving distance polygons

- Faster processing of driving distance calculations once the polygon has been created

The Text to Columns tool allows for multiple delimiters, such as comma AND tab. It would be great if that capability were built into the Input tool.
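Splitting on several delimiters at once amounts to splitting on a regular-expression alternation of the escaped delimiters. A minimal sketch (`split_multi` is hypothetical, and unlike a real CSV reader it does not handle quoted fields):

```python
import re
from typing import List, Sequence


def split_multi(line: str, delimiters: Sequence[str]) -> List[str]:
    """Split one line on any of several single- or multi-character delimiters."""
    if not delimiters:
        return [line]
    # Longest delimiters first so that e.g. "||" wins over "|" when both are listed
    pattern = "|".join(re.escape(d) for d in sorted(delimiters, key=len, reverse=True))
    return re.split(pattern, line)
```

For example, `split_multi("a,b\tc", [",", "\t"])` returns `["a", "b", "c"]`.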

Dynamic Input is a fantastic tool when it works. Today I tried to use it to bring in 200 Excel files. The files were all of the same report and they all have the same fields. Still, I got back many errors saying that certain files have "a different schema than the 1st file." I got this error because in some of my files, a whole column was filled with null data. So instead of seeing these columns as V_Strings, Alteryx interpreted these blank columns as having a Double datatype.

It would be nice if Alteryx could detect this case and simply cast the empty column as a V_String to match the previous files. Maybe make it an option, and just have Alteryx give a warning if it has to do this.

An even simpler option would be to add the ability to bring in all columns as strings.

Instead, the current solution (without relying on outside macros) is to tick the checkbox in the Dynamic Input tool that says "First Row Contains Data." This then puts all of the field titles into the data 200 times. This makes it work because all of the columns are now interpreted as strings. Then a Dynamic Rename is used to bring the first row up to rename the columns. A Filter is used to remove the other 199 rows that just contained copies of the field names. Then it's time to clean up all of the fields' datatypes. (And this workaround assumes that all of the field names contain at least one non-numeric character. Otherwise the field gets read as Double and you're back at square one.)
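The proposed option can be sketched as a schema-reconciliation step, assuming each file's schema is known as field name to inferred type, with `None` marking a column that was entirely null. Null columns adopt the type seen in the other files (falling back to V_String), a warning is recorded for each cast, and genuine type conflicts still raise an error. `reconcile` is hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Field name -> inferred type for one file; None marks a column that was entirely null.
Schema = Dict[str, Optional[str]]


def reconcile(schemas: List[Schema], fallback: str = "V_String") -> Tuple[Dict[str, str], List[str]]:
    """Merge per-file schemas, letting all-null columns adopt the type seen
    in other files; return the unified schema and one warning per cast."""
    fields = list(schemas[0])
    unified: Dict[str, str] = {}
    for i, schema in enumerate(schemas):
        if list(schema) != fields:
            raise ValueError(f"file {i + 1}: field names differ from the first file")
        for field, ftype in schema.items():
            if ftype is None:
                continue  # all-null column: decide its type later
            if unified.setdefault(field, ftype) != ftype:
                raise ValueError(f"file {i + 1}: {field} is {ftype}, expected {unified[field]}")
    warnings = []
    for i, schema in enumerate(schemas):
        for field in fields:
            if schema[field] is None:
                target = unified.get(field, fallback)
                warnings.append(f"file {i + 1}: {field} is entirely null; cast to {target}")
    return {f: unified.get(f, fallback) for f in fields}, warnings
```

This keeps the field names from the header row, so the First Row Contains Data, Dynamic Rename and Filter workaround is not needed.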

It would be useful to be able to convert an Auto Field tool to a Select tool, after at least one run, via right-click > Convert to Select.

I keep Connection Progress on "Show" by default. I find that the row count tooltips are crucial for spotting issues during development. Sometimes, the counts are huge and I have to mentally insert commas. Please give the option to add commas/decimals (based on US/EU standards) into the Connection Progress tooltips. Thank you.
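The requested formatting is ordinary thousands grouping. A minimal sketch for integer row counts, assuming "EU" means the common convention of a period as the thousands separator (some European locales use a space instead). `format_count` is hypothetical.

```python
def format_count(n: int, style: str = "US") -> str:
    """Group thousands: 1,234,567 (US) or 1.234.567 (EU)."""
    us = f"{n:,}"  # Python's format spec inserts commas every three digits
    if style == "US":
        return us
    if style == "EU":
        return us.replace(",", ".")
    raise ValueError(f"unknown style: {style}")
```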

Following unexpected behaviour from the Render tool when outputting to a UNC path (see post) in a Gallery application, and on the advice of support, I am raising this idea to introduce consistent behaviour across all tools when using a UNC path.

Hello Alteryx Dev Gurus -

We are migrating and some workflows that used to successfully update a datasource are now giving a useless error message, "An unknown error occurred".

Back in my coding days, we could configure the ORM to be highly verbose during database interaction, to the point where you could tell it to give you every SQL statement it was trying to execute, and this was extremely useful when debugging. Somewhere down the pipe Alteryx is generating a SQL statement to perform the update, so why not have an option on the Runtime tab that says 'Show all SQL statements for Output tools'? Or allow it on an Output-tool-by-Output-tool basis? If this was possible by changing a log4j properties file 15 years ago, I'm pretty sure it can be done today.

Thank you for attending my TED talk on how allowing detailed SQL statements to bubble back up to the user would be a useful feature improvement.
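As an illustration of the statement-level logging being asked for (not of how Alteryx's Output tool works internally), Python's built-in `sqlite3` module exposes `Connection.set_trace_callback`, which passes every statement SQLite executes to a callback. A minimal sketch against an in-memory database; `run_with_sql_log` is hypothetical.

```python
import sqlite3
from typing import List


def run_with_sql_log(statements: List[str]) -> List[str]:
    """Execute statements on an in-memory SQLite database and return every
    SQL statement the engine actually ran, as reported by the trace callback."""
    log: List[str] = []
    conn = sqlite3.connect(":memory:")
    conn.set_trace_callback(log.append)  # called once per executed statement
    try:
        for sql in statements:
            conn.execute(sql)
        conn.commit()
    finally:
        conn.close()
    return log
```

The log also includes statements the driver issues on its own, such as the implicit BEGIN before an INSERT or UPDATE, which is exactly the kind of hidden detail that helps explain an "unknown error".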
