Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
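A minimal sketch of the kind of balanced split asked for in the second point, assuming the training data is available as `(path, label)` pairs. The function name `balanced_split` and the downsample-to-the-rarest-label strategy are illustrative assumptions, not how the Image Recognition tool works.

```python
import random
from collections import defaultdict

def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so every label contributes the same number of images."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # Downsample every label to the size of the rarest one.
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    rng = random.Random(seed)
    train, valid = [], []
    for label in sorted(by_label):
        paths = by_label[label][:]
        rng.shuffle(paths)
        chosen = paths[:per_label]
        train += [(p, label) for p in chosen[:n_train]]
        valid += [(p, label) for p in chosen[n_train:]]
    return train, valid

# Hypothetical file names: 10 cat images, 4 dog images.
samples = [(f"cat_{i}.png", "cat") for i in range(10)]
samples += [(f"dog_{i}.png", "dog") for i in range(4)]
train, valid = balanced_split(samples, train_fraction=0.75)
```

Downsampling throws images away; oversampling the rare labels would be the other obvious choice and keeps all of the data.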

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

Even with browse everywhere I see plenty of workflows dotted with browses. Old habits die hard. Maybe a limit configuration for browses would be a good thing. If you've recently browsed a very large set of data you might agree.

Cheers,

Mark

There is currently no way to export interactive output from the network graph tool. I would like to be able to export a png of the static network graph image, a pdf of the report, and a complete html of the whole (which means including the JSON and vis.js files necessary for creating the report).

The Interactive Chart configure window cannot be resized. I'm using a single, large monitor, and this window occupies the entire monitor so that I am forced to switch between windows to see other applications. Most of the space used by the configure window is wasted white space. Please update this to allow this window to be resized.

I'm using a 32:9 monitor running at 5120x1440, and the attached screenshot shows the size of the configure window on this monitor - 4986x1286.

Hi

The native email tool is great up to a certain volume, but at high volume it makes more sense to use SendGrid to take advantage of features such as deliverability.

It would be great if there was a ready-made connector tool for SendGrid.

Thanks
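Until a connector exists, a rough sketch of what one would have to do is build a request for SendGrid's v3 Mail Send endpoint. The helper name `build_sendgrid_request`, the placeholder API key and the example addresses are assumptions for illustration; the request is built but deliberately not sent.

```python
import json
import urllib.request

SENDGRID_URL = "https://api.sendgrid.com/v3/mail/send"

def build_sendgrid_request(api_key, sender, recipient, subject, body):
    """Build (but do not send) a plain-text mail request for one recipient."""
    payload = {
        "personalizations": [{"to": [{"email": recipient}]}],
        "from": {"email": sender},
        "subject": subject,
        "content": [{"type": "text/plain", "value": body}],
    }
    return urllib.request.Request(
        SENDGRID_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

request = build_sendgrid_request(
    "SG.placeholder",            # hypothetical key, not a real credential
    "reports@example.com",
    "analyst@example.com",
    "Daily report",
    "The workflow finished.",
)
# urllib.request.urlopen(request) would send it; omitted so the sketch has no side effects.
```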

Transferring records through the Python SDK RecordRef seems to be slow when sending large amounts of data to the Alteryx Engine (e.g. discussion here). Although I am unclear on the exact specifics, it seems that there's a copy and convert process in play.

Apache Arrow appears to be addressing this issue, and the roadmap and specs are impressive! It seems (again, I have no understanding of the Alteryx Engine specifics) that something like this would be excellent for expanding SDK use cases as well as for other connectors such as the Apache Spark connector.

And it looks like it'd be fun to build into Alteryx! 🙂

When we rename a field on the canvas, it breaks a lot of formulas and configuration in downstream tools such as the Select, Unique, or Summarize tools. If Designer could automatically update the new field name in all downstream tools, it would save us a lot of time.

Thanks,

Sanju
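For the formula part of this idea, the propagation could look roughly like the sketch below. It assumes field references are written as `[FieldName]` inside expressions and that quoted string literals should be left alone; `rename_field` is a hypothetical helper, not a Designer API.

```python
import re

def rename_field(expression, old_name, new_name):
    """Replace [old_name] references in a formula expression, skipping string literals."""
    # Quoted literals are matched first so bracketed text inside them is left untouched.
    pattern = re.compile(
        r'"[^"]*"|\'[^\']*\'|\[' + re.escape(old_name) + r'\]'
    )

    def swap(match):
        text = match.group(0)
        return f"[{new_name}]" if text.startswith("[") else text

    return pattern.sub(swap, expression)

formula = 'IF [RowCount] > 10 THEN "[RowCount] is large" ELSE ToString([RowCount]) ENDIF'
updated = rename_field(formula, "RowCount", "Index")
print(updated)
# IF [Index] > 10 THEN "[RowCount] is large" ELSE ToString([Index]) ENDIF
```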

Dear Alteryx Team,

The Dynamic Input Tool is a great way to easily import multiple files using file paths as parameters. Having the same kind of tool for outputs would be great for exporting many files to pre-established folders.

Many thanks

Arno
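To make the request concrete, here is a small sketch of the behaviour, assuming each record carries its destination in a field (called `OutputPath` here purely for illustration) and that CSV is the target format.

```python
import csv
import tempfile
from collections import defaultdict
from pathlib import Path

def write_by_path(rows, path_field):
    """Group records by the path stored in path_field and write one CSV per path."""
    groups = defaultdict(list)
    for row in rows:
        record = {k: v for k, v in row.items() if k != path_field}
        groups[row[path_field]].append(record)

    for target, records in groups.items():
        path = Path(target)
        path.parent.mkdir(parents=True, exist_ok=True)
        with path.open("w", newline="", encoding="utf-8") as handle:
            writer = csv.DictWriter(handle, fieldnames=list(records[0].keys()))
            writer.writeheader()
            writer.writerows(records)
    return sorted(groups)

# Example data written to a temporary folder.
out_dir = Path(tempfile.mkdtemp())
rows = [
    {"Region": "North", "Sales": "10", "OutputPath": str(out_dir / "north.csv")},
    {"Region": "North", "Sales": "12", "OutputPath": str(out_dir / "north.csv")},
    {"Region": "South", "Sales": "7", "OutputPath": str(out_dir / "south.csv")},
]
written = write_by_path(rows, "OutputPath")
```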

Hi all,

Based on the thread here https://community.alteryx.com/t5/Data-Preparation-Blending/Support-for-unsigned-int-database-type/m-... - there would be value in natively supporting the Unsigned Int data type in Alteryx.

This idea was raised by @jgreene.

Note: this does appear to be directly supported in the core ODBC library (as long as it's supported by the ODBC driver for the specific database in question), so hopefully this won't be a huge lift:

https://docs.microsoft.com/en-us/sql/odbc/reference/appendixes/sql-data-types
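A short illustration of why this matters, assuming the unsigned values currently have to land in a signed 32-bit or 64-bit integer field. The helper names are hypothetical.

```python
import struct

UINT32_MAX = 2**32 - 1   # 4294967295
INT32_MAX = 2**31 - 1    # 2147483647
INT64_MAX = 2**63 - 1

def reinterpret_as_int32(value):
    """Show what an unsigned 32-bit value becomes if its bytes are read as signed."""
    return struct.unpack("<i", struct.pack("<I", value))[0]

def fits(value, maximum):
    """True when a non-negative value can be stored in a signed type with this maximum."""
    return 0 <= value <= maximum

print(fits(UINT32_MAX, INT32_MAX))       # False: too large for a signed 32-bit field
print(fits(UINT32_MAX, INT64_MAX))       # True: a 64-bit field is a workaround
print(reinterpret_as_int32(UINT32_MAX))  # -1: same bytes, wrong value
```

Widening to 64 bits covers unsigned 32-bit columns, but an unsigned 64-bit column has no wider signed integer to fall back on, which is where native support would help most.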

@jgreene - would you mind adding any further information about the DBMS you are using which supports unsigned int, and any other info that may help the team to develop and test this (e.g. any link you can find to an available ODBC driver for this database)?

Thank you

Sean

Hi there,

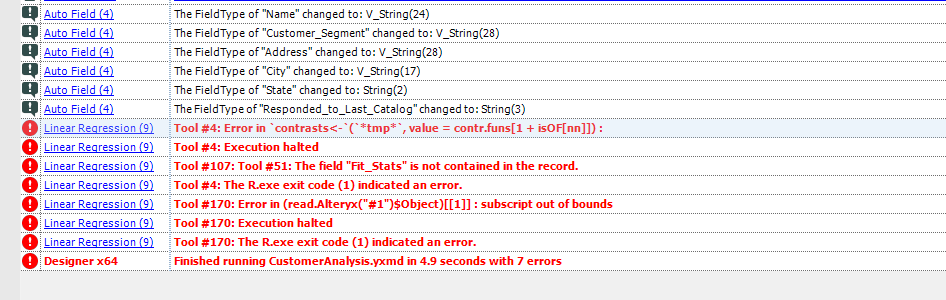

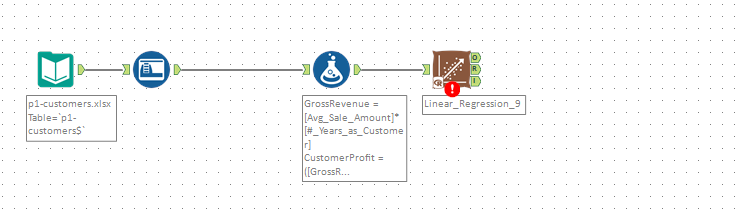

Similar to @aselameab1 - I was having trouble with using the Linear regression tool because it was giving error messages that were not explanatory or self descriptive.

@chadanaber identified the issue - that a specific field only had one unique value which was causing the regression tool to fail - however the error message provided gives no useful or helpful indication that this is the issue. You can see that the error message below is pretty tough to understand.

Could we add an item to the development backlog to add defensive checks to the predictive analytics tools to check for conditions that will cause them to fail, and rework the error messaging?

I've attached the workflow with the sample data that replicates this issue

Many thanks

Sean
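As a sketch of the kind of defensive check meant here, assuming the data arrives as a list of dictionaries and that `find_constant_fields` is a hypothetical pre-flight step run before the model is fitted:

```python
def find_constant_fields(rows, predictors):
    """Return a readable message for every predictor with fewer than two distinct values."""
    problems = []
    for name in predictors:
        distinct = {row[name] for row in rows if row[name] is not None}
        if len(distinct) < 2:
            problems.append(
                f"Field '{name}' has {len(distinct)} distinct non-null value(s); "
                "a regression cannot estimate a coefficient for it."
            )
    return problems

rows = [
    {"Price": 10.0, "Store": "A", "Units": 5},
    {"Price": 12.5, "Store": "A", "Units": 4},
    {"Price": 11.0, "Store": "A", "Units": 6},
]
for message in find_constant_fields(rows, ["Price", "Store"]):
    print(message)
```

A message like this names the field and the cause, which is the part missing from the error shown above.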

It would be nice if, when I change a name from RowCount to Index, everything stayed linked together.

Something like a $FieldName$ which would automatically get substituted.

Also nice in other formula tools, but most useful in places where name needs to be duplicated over and over
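One possible reading of the `$FieldName$` idea, sketched as plain text substitution. The bracketed `[Field]` output and the helper `expand_placeholder` are assumptions made for the example.

```python
def expand_placeholder(expression, field_name, placeholder="$FieldName$"):
    """Substitute every occurrence of the placeholder with a bracketed field reference."""
    return expression.replace(placeholder, f"[{field_name}]")

template = "IF $FieldName$ < 10 THEN $FieldName$ + 1 ELSE $FieldName$ ENDIF"
print(expand_placeholder(template, "RowCount"))
print(expand_placeholder(template, "Index"))
```

The expression is written once against the placeholder, so renaming the field means changing one value instead of every occurrence.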

When I perform an average aggregation the data type changes to increase the precision. While I could see this is useful sometimes, it is not when I have a currency based field and I want to keep the precision fixed at two decimal places. The result is I have to either add another select tool to update the data type or find an embedded select in a tool that exists post summarizing.

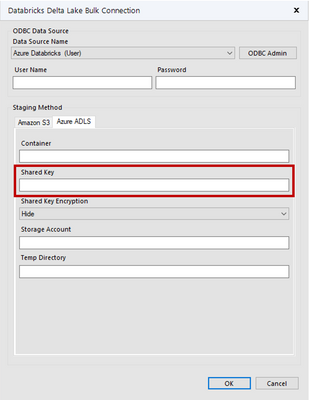
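The behaviour being asked for can be sketched with fixed-point arithmetic: average the values, then round back to two decimal places. The function name and the half-up rounding mode are assumptions for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP

def average_currency(values):
    """Average currency amounts and keep the result at two decimal places."""
    amounts = [Decimal(v) for v in values]
    mean = sum(amounts) / len(amounts)
    return mean.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

print(average_currency(["10.00", "10.01", "10.03"]))  # 10.01
```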

It is great to see the ability to stage data for bulk loading into Databricks in s3 and ADLS. Previously this only appeared to allow staging in Databricks DBFS.

However, the current connector included in Designer 2022.1 has a key gap in functionality with ADLS Gen 2.

The only authentication method provided for the ADLS storage is through a shared key.

Shared keys provide access that is

- Too broad and allows access to the entire storage

- Limited or no auditability and traceability to who is using it

We do not provide users the shared key for the ADLS storage, thus our users cannot take advantage of this new feature.

The preferred method of authentication to ADLS would be

- RBAC - Role Based Access Control

- ACL - Access Control lists

Either of these options can be provided through a service principal, with a tenant id, client id and client secret as inputs to the bulk load tool.

This request would specifically be to allow ACL authentication. ACL would help empower our self service data analysts and data scientists, who could have access to a specific container.

For example

storageAccount/Container/directory

ACL access in this tool would allow the Alteryx tool to follow the same access patterns, where fine grained access is provided at the directory level and not at the storage account or container level. This would allow self service analysts and data scientists to use Alteryx as they need within their directory without being given higher level access.

Access control model for Azure Data Lake Storage Gen2 | Microsoft Docs
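For reference, this is roughly what service principal access to a single directory looks like with the Azure SDK for Python. It is a sketch that assumes the `azure-identity` and `azure-storage-file-datalake` packages are installed; the helper names are illustrative and this is not how the Designer connector is implemented.

```python
def account_url(storage_account):
    """Build the ADLS Gen2 (dfs) endpoint for a storage account name."""
    return f"https://{storage_account}.dfs.core.windows.net"

def open_directory(storage_account, container, directory,
                   tenant_id, client_id, client_secret):
    """Return a directory client authenticated as a service principal."""
    # Imported lazily so the sketch can be loaded without the Azure SDK installed.
    from azure.identity import ClientSecretCredential
    from azure.storage.filedatalake import DataLakeServiceClient

    credential = ClientSecretCredential(
        tenant_id=tenant_id, client_id=client_id, client_secret=client_secret
    )
    service = DataLakeServiceClient(
        account_url=account_url(storage_account), credential=credential
    )
    # What the principal can do here is governed by the RBAC roles and ACLs set on this path.
    return service.get_file_system_client(container).get_directory_client(directory)

print(account_url("mystorageaccount"))
```

No shared key appears anywhere: the tenant id, client id and client secret are the only inputs, matching the three inputs proposed for the bulk load tool.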

It would be really helpful if Alteryx Server could connect directly to files on cloud file storage such as Dropbox, Box and OneDrive. For example, a workflow could access specific source files, or a folder with multiple files, stored on Dropbox, run against those files, and then write the output to another folder on Dropbox. We are making less and less use of internal file servers, so accessing files directly from the cloud would allow for additional deployment scenarios and flexibility.

The single feature I miss the most in Alteryx is the possibility to restart the workflow from wherever I want by using built-in cache functionality. I have used the 'Cache Dataset V2' macro, but it really is too inflexible and doesn't make me a happy Alteryx user. I would like to see a more flexible, quicker way of working with cached data.

On a single tool in the workflow I want to be able to set the option to:

- Enable cache: this would enable me to always use cached data from this node when possible.

- Run to this node: run from the start OR from a node with enabled cache to this node.

There should be lots of workflow options regarding the creation/deletion of cached data. Examples:

- Enable data cache on all nodes: this would enable functionality to always use cached data on all nodes in the workflow.

- Enable data cache on end nodes: this would enable functionality to cache data on all 'Run to this node'-nodes.

...and so on. These are just a few examples, but there should be lots of options and shortcut keys revolving around the cached data functionality in the workflow.
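A minimal sketch of per-node caching along these lines, assuming a node's output can be pickled and that a cache entry is valid as long as the node's configuration and its upstream cache keys are unchanged. All names here are hypothetical.

```python
import hashlib
import json
import pickle
import tempfile
from pathlib import Path

CACHE_DIR = Path(tempfile.gettempdir()) / "workflow_cache_sketch"

def cache_key(node_id, config, upstream_keys):
    """Derive a key that changes whenever the node or anything upstream changes."""
    blob = json.dumps(
        {"node": node_id, "config": config, "upstream": upstream_keys},
        sort_keys=True,
    )
    return hashlib.sha256(blob.encode("utf-8")).hexdigest()

def run_node(node_id, config, upstream_keys, compute, cache_enabled=True):
    """Return (key, data), reading from disk when a matching cache file exists."""
    key = cache_key(node_id, config, upstream_keys)
    path = CACHE_DIR / f"{key}.pkl"
    if cache_enabled and path.exists():
        return key, pickle.loads(path.read_bytes())
    data = compute()
    if cache_enabled:
        CACHE_DIR.mkdir(parents=True, exist_ok=True)
        path.write_bytes(pickle.dumps(data))
    return key, data
```

Passing each node's key to its downstream nodes as `upstream_keys` means an edit early in the workflow invalidates everything after it, while untouched branches keep their cached data.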

It is important to be able to test for heteroscedasticity, so a tool for this test would be much appreciated.

In addition, I strongly believe the ability to calculate robust standard errors should be included as an option in existing regression tools, where applicable. This is a standard feature in most statistical analysis software packages.

Many thanks!
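To show what is being requested, here is a small pure-Python sketch for a regression with a single predictor. It uses Koenker's studentized form of the Breusch-Pagan test (LM = n times the R-squared of regressing squared residuals on the predictor) and White's HC0 robust standard error for the slope. The function names are illustrative, and the test statistic is undefined if all squared residuals are identical.

```python
import math

def ols(x, y):
    """Ordinary least squares with one predictor: intercept, slope, residuals."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    return intercept, slope, residuals

def breusch_pagan(x, y):
    """Studentized Breusch-Pagan test; returns (LM statistic, p-value)."""
    n = len(x)
    _, _, e = ols(x, y)
    e2 = [ei ** 2 for ei in e]
    _, _, aux_resid = ols(x, e2)          # auxiliary regression of e^2 on x
    e2_bar = sum(e2) / n
    ss_tot = sum((v - e2_bar) ** 2 for v in e2)
    ss_res = sum(r ** 2 for r in aux_resid)
    lm = max(n * (1 - ss_res / ss_tot), 0.0)
    # Chi-square survival function with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(lm / 2))
    return lm, p_value

def robust_slope_se(x, y):
    """White (HC0) heteroscedasticity-robust standard error of the slope."""
    n = len(x)
    x_bar = sum(x) / n
    _, _, e = ols(x, y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    meat = sum(((xi - x_bar) ** 2) * ei ** 2 for xi, ei in zip(x, e))
    return math.sqrt(meat) / sxx

# Synthetic data whose error spread grows with x.
x = list(range(1, 21))
y = [2 * xi + ((-1) ** xi) * 0.3 * xi for xi in x]
lm, p_value = breusch_pagan(x, y)
print(lm, p_value, robust_slope_se(x, y))
```

A small p-value points to heteroscedasticity, which is the case where reporting the robust standard error alongside the usual one is most useful.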

Often in larger workflows, I will copy data from partway down the stream into a new workflow to troubleshoot a small section, so that I avoid running the whole workflow over and over again, which can take a while. I'm aware of (and thankful for) caching, but sometimes, if there are many parallel streams, I'd rather just copy the data from the data preview built into the tool so I don't have to take the time to run the workflow again. I'm also aware I could output a yxdb file and use that, but again that takes longer than I would like.

The issue I run into is if I copy the data and paste in a text input tool, all the field types change to what they would default to. This is fine with new data, but for data that has specific fields throughout the workflow, this can be a hassle. If copying data could also copy the field type and size that would be great.
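One way this could work is for the copied text to carry a schema header alongside the values. The sketch below assumes a tab-delimited layout with a `Name:Type:Size` header cell per field; the layout and the function names are made up for illustration, and values containing tabs or newlines are not handled.

```python
def to_clipboard_text(fields, rows):
    """fields: list of (name, type, size) tuples; rows: lists of string values."""
    header = "\t".join(f"{name}:{ftype}:{size}" for name, ftype, size in fields)
    lines = ["\t".join(row) for row in rows]
    return "\n".join([header] + lines)

def from_clipboard_text(text):
    """Recover the field definitions and rows written by to_clipboard_text."""
    header, *lines = text.split("\n")
    fields = []
    for cell in header.split("\t"):
        # rsplit keeps any colons that are part of the field name itself.
        name, ftype, size = cell.rsplit(":", 2)
        fields.append((name, ftype, int(size)))
    rows = [line.split("\t") for line in lines]
    return fields, rows

fields = [("CustomerID", "Int32", 4), ("Name", "V_String", 50)]
rows = [["1001", "Acme"], ["1002", "Globex"]]
text = to_clipboard_text(fields, rows)
restored_fields, restored_rows = from_clipboard_text(text)
```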

It would be helpful to have the Read Uncommitted listed as a global runtime setting.

Most of the workflows I design need this set, so rather than risk forgetting to click this option on one of my inputs it would be beneficial as a global setting.

For example: the user would be able to set specific inputs according to their need and the check box on the global runtime setting would remain unchecked.

However, if the user checked the box on the global runtime setting for Read Uncommitted, then the whole workflow would automatically use an uncommitted read on all of the inputs.

When the user unchecks the global runtime setting for Read Uncommitted, then only the inputs that were set up with this option will remain set up with the read uncommitted.
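The three cases above reduce to a simple rule: the global setting switches every input on, and otherwise each input keeps its own setting. A sketch with hypothetical names:

```python
def effective_read_uncommitted(global_setting, input_settings):
    """Resolve the Read Uncommitted flag for each input.

    global_setting: bool, the proposed workflow-level checkbox.
    input_settings: dict of input name -> bool, the existing per-input checkbox.
    """
    return {
        name: global_setting or per_input
        for name, per_input in input_settings.items()
    }

inputs = {"Orders": True, "Customers": False}
print(effective_read_uncommitted(False, inputs))  # {'Orders': True, 'Customers': False}
print(effective_read_uncommitted(True, inputs))   # {'Orders': True, 'Customers': True}
```

Because the per-input values are never overwritten, unchecking the global box restores exactly the behaviour each input was set up with.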

I would LOVE the quick win of selecting some cells in the browse tool results pane and having a label somewhere show me the sum, average, count, distinct count, etc. I hate to use the line but ... “a bit like Excel does”. They even allow a user to choose what metrics they want to show in the label by right clicking. 🙂

This improvement would save me having to export to Excel, check they add up just by selecting, and jumping back, or even worse getting my calculator out and punching the numbers in.

Jay
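The label could be fed by something as small as the sketch below, which assumes the selection is handed over as a flat list of cell values and that non-numeric cells count towards the counts but not towards the sum or average.

```python
from statistics import mean

def summarize_selection(cells):
    """Compute the metrics a status label could show for the selected cells."""
    numbers = [
        c for c in cells
        if isinstance(c, (int, float)) and not isinstance(c, bool)
    ]
    return {
        "count": len(cells),
        "distinct_count": len(set(cells)),
        "sum": sum(numbers),
        "average": mean(numbers) if numbers else None,
    }

summary = summarize_selection([10, 20, 20, 50])
print(summary)
```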
