Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by displaying the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool says it requires RGB images).
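A minimal Python sketch of the two data-preparation steps requested above, assuming the training set is a list of (image path, label) pairs and that an image is a plain nested list of pixel values. `balanced_split` and `gray_to_rgb` are hypothetical helpers, not part of the Image Recognition tool, and balancing by undersampling to the rarest label is only one possible strategy (oversampling or class weights are alternatives).

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[str, str]  # (image_path, label)


# Hypothetical helper: balanced train/validation split by undersampling.
def balanced_split(samples: Sequence[Sample], train_fraction: float = 0.8,
                   seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Undersample every label to the size of the rarest label, then split
    each label's images into training and validation sets in the same ratio."""
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)
    if not by_label:
        return [], []
    per_label = min(len(group) for group in by_label.values())
    n_train = int(per_label * train_fraction)
    rng = random.Random(seed)  # fixed seed -> reproducible split
    train: List[Sample] = []
    valid: List[Sample] = []
    for label in sorted(by_label):
        group = list(by_label[label])
        rng.shuffle(group)
        group = group[:per_label]  # same number of images for every label
        train.extend(group[:n_train])
        valid.extend(group[n_train:])
    return train, valid


# Hypothetical helper: make a grayscale image (e.g. MNIST) acceptable to an RGB-only model.
def gray_to_rgb(pixels: List[List[int]]) -> List[List[Tuple[int, int, int]]]:
    """Copy a single grayscale channel into three identical RGB channels."""
    return [[(v, v, v) for v in row] for row in pixels]
```

With 10 "cat" and 5 "dog" images and an 80/20 split, each label contributes 4 training and 1 validation image.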

Question: will you allow users to choose between CPU and GPU processing in the future?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


For companies that have migrated to OneDrive/Teams for data storage, employees need to be able to dynamically input and output data within their workflows so that they can schedule those workflows on Alteryx Server without building batch macros.

With many organizations migrating to OneDrive, a Dynamic Input/Output tool for OneDrive and SharePoint is needed.

- The existing Directory and Dynamic Input tools only work with UNC paths and cannot be used for OneDrive or SharePoint.

- The existing OneDrive and SharePoint tools do not have a dynamic input or output component to them.

- Users have to build workarounds and custom macros for a common problem.

- Users have to map the OneDrive folders to their machine (and server if published to the Gallery)

- This option creates a lot of maintenance, especially on Server, to free up the space consumed by local copies when outputting data.

The enhancement should have the following components:

OneDrive/SharePoint Directory Tool

- Ability to read a single folder within OneDrive, with the option to include or exclude its subfolders

- Ability to retrieve Creation Date

- Ability to retrieve Last Modified Date

- Ability to identify file type (e.g. .xlsx)

- Ability to read Author

- Ability to read last modified by

- Ability to generate the specific web path for the files
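To make the Directory Tool requirements concrete, here is a Python sketch that flattens Microsoft Graph `driveItem` objects (the shape returned when listing a folder's children) into rows with the fields listed above. The `fetch_children` callback and `list_directory` function are hypothetical; authentication and paging via `@odata.nextLink` are deliberately left out.

```python
import os
from typing import Callable, Dict, List, Optional

# Hypothetical callback: given a folder item id, return its children as a list of
# Graph driveItem dicts (e.g. the "value" array of a .../children response).
FetchChildren = Callable[[str], List[dict]]


def _display_name(identity_set: Optional[dict]) -> Optional[str]:
    # createdBy / lastModifiedBy are identitySet objects; "user" may be absent.
    return ((identity_set or {}).get("user") or {}).get("displayName")


# Hypothetical sketch of a OneDrive/SharePoint Directory Tool.
def list_directory(folder_id: str, fetch_children: FetchChildren,
                   include_subfolders: bool = False) -> List[Dict[str, Optional[str]]]:
    rows: List[Dict[str, Optional[str]]] = []
    for item in fetch_children(folder_id):
        if "folder" in item:  # folders are recursed into (optionally), not listed
            if include_subfolders:
                rows.extend(list_directory(item["id"], fetch_children, True))
            continue
        name = item.get("name") or ""
        rows.append({
            "Name": name,
            "FileType": os.path.splitext(name)[1].lower() or None,  # e.g. ".xlsx"
            "CreationDate": item.get("createdDateTime"),
            "LastModifiedDate": item.get("lastModifiedDateTime"),
            "Author": _display_name(item.get("createdBy")),
            "LastModifiedBy": _display_name(item.get("lastModifiedBy")),
            "WebPath": item.get("webUrl"),
        })
    return rows
```

Rows from subfolders are emitted in the order the folders are encountered, so the output can feed a dynamic input step directly.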

OneDrive/SharePoint Dynamic Input Tool

- Receive the input from the OneDrive/SharePoint Directory Tool and retrieve the data.

Dynamic OneDrive/SharePoint Output Tool

- Dynamically write the workflow output as individual files to a specific directory

- Dynamically write the output to multiple tabs on the same file within the directory.

- Dynamically write the output to a new folder within the directory

My organization uses the SharePoint Files Input and SharePoint Files Output tools (v2.1.0) and connects with a Client ID, Client Secret, and Tenant ID. After a workflow is saved and scheduled on the server, users receive the error "Failed to connect to SharePoint AADSTS700082: The refresh token has expired due to inactivity" every 90 days. My organization is not able to extend the 90-day limit or create non-expiring tokens.

It would be great if the SharePoint connectors could refresh the token automatically, so that users don't have to open the workflow and refresh it manually.
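A sketch of what automatic refresh could look like, assuming the connector holds a delegated refresh token and calls the Microsoft identity platform v2.0 token endpoint with `grant_type=refresh_token`. `TokenCache` and the injected `post_form` transport are hypothetical. Because a refresh token that has already expired from inactivity cannot be redeemed, this only helps if the connector refreshes regularly and stores the newest refresh token it receives.

```python
import time
import urllib.parse
from dataclasses import dataclass
from typing import Callable, Dict, Optional

TOKEN_URL = "https://login.microsoftonline.com/{tenant}/oauth2/v2.0/token"

# Hypothetical transport: POSTs a form-encoded body and returns the parsed JSON.
# Injected so the refresh logic can be exercised without network access.
PostForm = Callable[[str, bytes], Dict]


@dataclass
class TokenCache:  # hypothetical connector-side cache
    tenant_id: str
    client_id: str
    client_secret: str
    refresh_token: str
    scope: str
    post_form: PostForm
    access_token: Optional[str] = None
    expires_at: float = 0.0
    skew_seconds: int = 300  # refresh a few minutes before the access token expires
    clock: Callable[[], float] = time.time

    def get_access_token(self) -> str:
        if self.access_token is None or self.clock() >= self.expires_at - self.skew_seconds:
            self._refresh()
        return self.access_token

    def _refresh(self) -> None:
        body = urllib.parse.urlencode({
            "grant_type": "refresh_token",
            "client_id": self.client_id,
            "client_secret": self.client_secret,
            "refresh_token": self.refresh_token,
            "scope": self.scope,
        }).encode()
        resp = self.post_form(TOKEN_URL.format(tenant=self.tenant_id), body)
        self.access_token = resp["access_token"]
        self.expires_at = self.clock() + int(resp["expires_in"])
        # Keep the newest refresh token so regular use keeps the session alive.
        self.refresh_token = resp.get("refresh_token", self.refresh_token)
```

An alternative worth evaluating is the client credentials grant, which does not use refresh tokens at all, if the organization's app registration permits it.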

No existing tool outputs all records that are duplicates (those sharing the selected values with at least one other record) and, separately, the records that are not duplicates (those not sharing the selected values with any other record).

The Unique tool is not sufficient. Its first output contains only the first record of each duplicate group along with any non-duplicates, and its second output contains only the additional records of each duplicate group. Sometimes you only care about the duplicates and want to quickly see what differs within each group.

For example, if there are 4 records with the City Austin and I am looking for duplicates on City, I want to see all 4 Austin records in the output so I can quickly compare the other fields to see what might differ, or whether they are all truly duplicates.
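A minimal sketch of the requested behaviour in Python, assuming records are dictionaries and `keys` are the selected fields; `split_duplicates` is a hypothetical name. It counts each key combination first, then routes every record whose combination occurs more than once to the duplicates output, which is roughly what users rebuild today with a Summarize (Count), a Join, and a Filter.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Record = Dict[str, object]


# Hypothetical helper: "all duplicates" vs "non-duplicates" outputs.
def split_duplicates(records: Sequence[Record],
                     keys: Sequence[str]) -> Tuple[List[Record], List[Record]]:
    """Return (all duplicate records, non-duplicate records), preserving input order.
    A record is a duplicate if at least one other record shares its key values."""
    def key_of(record: Record) -> tuple:
        return tuple(record.get(k) for k in keys)

    counts = Counter(key_of(r) for r in records)
    duplicates = [r for r in records if counts[key_of(r)] > 1]
    non_duplicates = [r for r in records if counts[key_of(r)] == 1]
    return duplicates, non_duplicates
```

With four Austin records and one Dallas record keyed on City, all four Austin records land in the first output.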

Hello all,

As of today, Alteryx offers the Intelligence Suite, with amazing tools rarely seen in a data tool, such as OCR and image analysis: https://www.alteryx.com/fr/products/intelligence-suite

But these wonderful tools are part of a paid add-on, and this is what is problematic:

-Alteryx is already an expensive tool: it delivers huge value, but honestly, it is expensive.

-The Intelligence Suite tools are not everyday data tools; you won't use them often, and paying for tools you use once or twice a month is hard to justify.

So I suggest incorporating the Intelligence Suite into the core product. The benefit for Alteryx users is obvious, so let's look at the benefits for Alteryx:

-more user satisfaction

-a simpler catalog

-adding a lot of value to Designer, with the ability to communicate widely on the topic.

-almost no cost: most customers won't buy the Intelligence Suite anyway.

Best regards,

Simon

I am aware that an Auto-Documenter tool is available in the Gallery, but that has not been maintained since 2020.

It would be great if Alteryx could add this as a built-in Designer feature for end users.

The breakdown can be done by parsing the workflow XML as follows:

<Nodes>: the tools and their configuration

<Connections>: the connections between tools

<Properties>: Workflow properties
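A sketch of this XML breakdown in Python using the standard library's `xml.etree.ElementTree`. The element and attribute names (`Node/@ToolID`, `GuiSettings/@Plugin`, `Connection/Origin`, `Connection/Destination`, `Properties/MetaInfo/Name`) are assumed from typical .yxmd files and should be checked against real workflows; `document_workflow` is a hypothetical name, and tools nested inside containers are not handled.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


# Hypothetical auto-documenter core: summarize tools, connections, and workflow name.
def document_workflow(xml_text: str) -> Dict[str, object]:
    root = ET.fromstring(xml_text)

    tools: List[Dict[str, str]] = []
    for node in root.iterfind("./Nodes/Node"):  # top-level tools only
        gui = node.find("GuiSettings")
        tools.append({
            "ToolID": node.get("ToolID", ""),
            "Plugin": gui.get("Plugin", "") if gui is not None else "",
            # Tool annotation text, empty if the tool has none.
            "Annotation": node.findtext(
                "./Properties/Annotation/DefaultAnnotationText", default="").strip(),
        })

    connections: List[Dict[str, str]] = []
    for conn in root.iterfind("./Connections/Connection"):
        origin, dest = conn.find("Origin"), conn.find("Destination")
        if origin is None or dest is None:
            continue
        connections.append({
            "From": origin.get("ToolID", ""), "FromAnchor": origin.get("Connection", ""),
            "To": dest.get("ToolID", ""), "ToAnchor": dest.get("Connection", ""),
        })

    return {
        "name": root.findtext("./Properties/MetaInfo/Name", default=""),
        "tools": tools,
        "connections": connections,
    }
```

The returned lists map directly onto a "tools" table and a "connections" table, which is the usual starting point for generated documentation.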

Right now, the workaround is for users to export their XML, and the internal Alteryx development team has to build another workflow that reads the XML and parses it into the required format.

It would be better for Alteryx to build something more robust, perhaps even including some elements of AiDIN, which it is promoting now.

Many tools create new columns from their inputs, e.g., Generate Rows. It would be very nice if the new columns were highlighted in the output. This would make developing workflows a lot easier.
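Detecting which columns to highlight could be as simple as comparing a tool's output schema with its input schema. A hypothetical Python sketch, assuming field lists are available as names; note that a renamed column would also be reported as new.

```python
from typing import List, Sequence


# Hypothetical helper: fields present in the output but not in the input.
def new_columns(input_fields: Sequence[str], output_fields: Sequence[str]) -> List[str]:
    """Return the output fields that do not exist in the input, in output order."""
    seen = set(input_fields)
    return [field for field in output_fields if field not in seen]
```

For a Generate Rows tool that adds a `Date` field to `Id, Start, End`, only `Date` would be highlighted.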

Connecting to Smartsheet using Alteryx Desktop (and by extension, Alteryx Server) is extremely cumbersome. If a user wants to read data from Smartsheet, they have to get an API token (preferred) or use a username/password.

They then have to do one of the following to read data from Smartsheet:

1. a. Install an ODBC driver

b. Configure a DSN connection for ODBC

c. Use the Input Data tool with a generic ODBC connection

or

2. Use Python

To write data to Smartsheet, a user can use Python or upload the data with an API call; both are very hard for end users, especially if they're not Python developers.

Regardless, all of these options are problematic. On the server I manage, I have over 15 ODBC connections to Smartsheet, and they make upgrading the server hardware very hard. A native connector for reading and writing Smartsheet data would eliminate the headache of managing ODBC connections and make it simple for Alteryx Desktop users to read and write data.
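To illustrate what a native connector would do under the hood, here is a Python sketch against the Smartsheet REST API 2.0 (`GET /sheets/{sheetId}` with a bearer token), which returns column definitions plus rows of cells keyed by `columnId`. The function names are hypothetical, and error handling, paging, and writing are omitted.

```python
import json
import urllib.request
from typing import Dict, List, Optional

API_BASE = "https://api.smartsheet.com/2.0"


# Hypothetical helper: build the "get sheet" request with a bearer API token.
def build_get_sheet_request(sheet_id: int, token: str) -> urllib.request.Request:
    return urllib.request.Request(
        f"{API_BASE}/sheets/{sheet_id}",
        headers={"Authorization": f"Bearer {token}"},
    )


# Hypothetical helper: perform the call (network access required).
def fetch_sheet(sheet_id: int, token: str) -> Dict:
    with urllib.request.urlopen(build_get_sheet_request(sheet_id, token)) as resp:
        return json.load(resp)


# Hypothetical helper: turn the sheet JSON into one record per row.
def sheet_to_records(sheet: Dict) -> List[Dict[str, Optional[object]]]:
    titles = {col["id"]: col["title"] for col in sheet.get("columns", [])}
    records = []
    for row in sheet.get("rows", []):
        record: Dict[str, Optional[object]] = {title: None for title in titles.values()}
        for cell in row.get("cells", []):
            title = titles.get(cell.get("columnId"))
            if title is not None:
                record[title] = cell.get("value")  # empty cells have no "value" key
        records.append(record)
    return records
```

Wrapping this kind of logic in a connector with stored credentials is what would remove the need for per-user ODBC DSNs.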

The JOIN tool could use some love. Let's consider merging the JOIN and UNION functions into a single tool. Instead of strictly L, J, and R outputs, we could have an option to allow for all standard SQL joins:

- Cross Join (Warning!!!)

- Inner Join (boring)

- Left Outer Join (saves time configuring Union)

- Right Outer Join (saves time ...)

- Full Outer Join (saves time ...)
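The join types above can be sketched in Python over lists of dictionaries, as a reference for the expected semantics rather than a proposal for the tool's internals. `sql_join` is a hypothetical helper; in today's Designer terms, a left outer join corresponds to the J output unioned with the L output.

```python
from collections import defaultdict
from typing import Dict, List, Sequence

Row = Dict[str, object]
JOIN_TYPES = {"inner", "left", "right", "full", "cross"}


# Hypothetical helper illustrating standard SQL join semantics.
def sql_join(left: Sequence[Row], right: Sequence[Row], key: str,
             how: str = "inner") -> List[Row]:
    """Join two lists of records on `key`. Unmatched fields are filled with None;
    on a field-name clash the right value wins."""
    if how not in JOIN_TYPES:
        raise ValueError(f"unknown join type: {how}")
    if how == "cross":  # every left row with every right row: len(left) * len(right) rows!
        return [{**lrow, **rrow} for lrow in left for rrow in right]

    left_cols = {col for row in left for col in row}
    right_cols = {col for row in right for col in row}
    index: Dict[object, List[int]] = defaultdict(list)
    for i, rrow in enumerate(right):
        index[rrow[key]].append(i)

    out: List[Row] = []
    matched_right = set()
    for lrow in left:
        matches = index.get(lrow[key], [])
        for i in matches:
            out.append({**lrow, **right[i]})
            matched_right.add(i)
        if not matches and how in ("left", "full"):
            out.append({**dict.fromkeys(right_cols), **lrow})  # right side all None
    if how in ("right", "full"):
        for i, rrow in enumerate(right):
            if i not in matched_right:
                out.append({**dict.fromkeys(left_cols), **rrow})  # left side all None
    return out
```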

Being able to JOIN on case-insensitive values is a big bonus (resisted urge to BOLD and change font size).

Being able to JOIN on date-range is often requested.

Being able to JOIN on numeric-range is often requested.
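A range join can be sketched as a comparison against each range's bounds, assuming inclusive bounds on both ends. `range_join` is a hypothetical helper; the naive loop is O(n*m), so a real implementation would likely sort the ranges or use an interval index.

```python
from typing import Dict, List, Sequence

Row = Dict[str, object]


# Hypothetical helper: join records to every range that contains their value.
def range_join(values: Sequence[Row], ranges: Sequence[Row], value_field: str,
               start_field: str, end_field: str) -> List[Row]:
    """Pair each record with every range where start <= value <= end (inclusive).
    Works for any comparable type: numbers, datetime.date, or ISO-8601 date strings."""
    out: List[Row] = []
    for row in values:
        v = row[value_field]
        for rng in ranges:
            if rng[start_field] <= v <= rng[end_field]:
                out.append({**rng, **row})
    return out
```

A date-range join and a numeric-range join are then the same operation with different field types.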

If we are combining tools, getting UNIQUE on the L or R input (or both) would also save time. Most JOIN errors occur because the incoming (R) data contains duplicate keys.

cheers,

Mark

Hi @NicoleJ

The Find Replace tool has a checkbox to do a case insensitive find. It would be fabulous if the Join and Join Multiple tools had a similar checkbox.

I frequently have to create a new field in each data stream, convert the data I want to join on to upper case, perform the join, and remove the extra "helper" fields. The helper field is needed in my case to preserve the original capitalization (e.g., acronyms within the string).
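A sketch of a case-insensitive join that needs no helper fields, assuming Python's `str.casefold()` as the comparison rule (Designer might choose different rules). Keys are only normalised for matching, so the original capitalization survives in the output. `case_insensitive_join` is a hypothetical name.

```python
from collections import defaultdict
from typing import Dict, List, Sequence

Row = Dict[str, object]


# Hypothetical helper: inner join that compares keys case-insensitively.
def case_insensitive_join(left: Sequence[Row], right: Sequence[Row],
                          left_key: str, right_key: str) -> List[Row]:
    """Keys are casefolded only for matching; output keeps the original values."""
    index: Dict[str, List[Row]] = defaultdict(list)
    for rrow in right:
        if rrow[right_key] is not None:  # nulls never match
            index[str(rrow[right_key]).casefold()].append(rrow)
    out: List[Row] = []
    for lrow in left:
        if lrow[left_key] is None:
            continue
        for rrow in index.get(str(lrow[left_key]).casefold(), []):
            out.append({**rrow, **lrow})  # left values win on a field-name clash
    return out
```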

Hello all,

I really appreciate being able to test tools in the Laboratory category.

However, these nice tools should leave the Laboratory and become supported after a few months or quarters. Right now, without Alteryx support, we cannot use them in production workflows.

Examples:

Visual Layout Tool introduced in 2017

https://community.alteryx.com/t5/Alteryx-Designer-Knowledge-Base/Tool-Mastery-Visual-Layout/ta-p/835...

Make Columns Tool, also introduced in 2017

https://community.alteryx.com/t5/Alteryx-Designer-Knowledge-Base/Make-Columns-Tool/ta-p/67108

Transpose In-DB, introduced in 10.6 (2016)

https://help.alteryx.com/10.6/LockInTranspose.htm

etc.

Best regards,

Simon

Currently it's not possible to "switch off" interface tools the same way we can with other tools. This limits functionality, especially within chained apps. If we could switch these tools off, it would be much easier to tailor the experience by activating selections through logic, rather than simply through the data.

Please Alteryx Gods. I beseech thee!

*lights candles*

Hello all,

Like much software on the market, Alteryx uses third-party components developed by other teams, providers, or entities. This is a good thing, since it means standard features at a very low cost. However, these components are upgraded very regularly (usually several times a year) while Alteryx doesn't upgrade them... This leads to missing features, performance issues, uncorrected bugs or, worse, security vulnerabilities.

Among these third-party components:

- cURL (behind the Download tool for APIs): version 7.15 in Alteryx (2006), while the current release is 8.0 (2023)

- Active Query Builder (behind the Visual Query Builder): several years behind

- R: version 4.1.3 in Alteryx (March 2022), while the latest is 4.3 (April 2023)

- Python: version 3.8.5 in Alteryx (2020), while the current is 3.10 (April 2023)

- etc.

Of course, you can't upgrade every time, but once a year seems like a minimum...

Best regards,

Simon

Currently there is a function in Alteryx called FindString() that finds the first occurrence of your target in a string. However, sometimes we want to find the nth occurrence of our target in a string.

FindString("Hello World", "o") returns 4 as the 0-indexed count of characters until the first "o" in the string. But what if we want to find the location of the second "o" in the text? This gets messy with nested find statements and unworkable beyond looking for the second or third instance of something.

I would like a function added such that

FindNth("Hello World", "o", 2) would return 7 as the 0-indexed count of characters until the second instance of "o" in my string.
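A reference sketch of the proposed `FindNth` semantics in Python, assuming it mirrors FindString's 0-based result and returns -1 when the nth occurrence does not exist. The name `find_nth` is hypothetical, and whether occurrences may overlap is a design choice; this version allows overlaps by resuming the search one character after each match.

```python
# Hypothetical reference implementation of FindNth.
def find_nth(text: str, target: str, n: int) -> int:
    """0-based index of the nth (1-based) occurrence of target in text, or -1."""
    if n < 1 or not target:
        return -1
    index = -1
    for _ in range(n):
        index = text.find(target, index + 1)  # resume just after the previous match
        if index == -1:
            return -1
    return index
```

With this definition, `find_nth("Hello World", "o", 1)` is 4 and `find_nth("Hello World", "o", 2)` is 7, matching the examples above.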

Coalesce: a very useful and common function

https://www.w3schools.com/sql/func_sqlserver_coalesce.asp

It returns the first non-null value in a list.

It exists in SQL, Qlik Sense, etc.
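The semantics are simple enough to pin down in a few lines of Python, treating `None` as Alteryx's null. `coalesce` is a hypothetical name here; today the same result needs nested expressions such as `IIF(IsNull([A]), [B], [A])`.

```python
from typing import Optional, TypeVar

T = TypeVar("T")


# Hypothetical reference implementation of a Coalesce() function.
def coalesce(*values: Optional[T]) -> Optional[T]:
    """Return the first argument that is not null (None), or None if all are null."""
    return next((v for v in values if v is not None), None)
```

Note that empty strings and zero are not null, so `coalesce(None, 0)` returns 0.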

Best regards,

Simon

Hello all,

As of now, there are two very distinct kinds of connection:

-in memory alias

-in database alias

Every single time I use an in-database alias, I have to create the same alias for in-memory, since some operations cannot be performed in-database (such as Pre-SQL or interface tools).

What this means for us:

-more complex setup, training, and testing

-inefficient workflows that have to deal with two kinds of alias

What I propose:

-a single "connection alias" that can be used for either in-DB or in-memory,

-one place to configure

-in-DB or in-memory processing determined by the tools you use

Best regards,

Simon

It would be great if you could include a new Parse tool in the next version of Alteryx to process data set descriptions (metadata) formatted with the W3C DCAT standard.

DCAT is a standard for the description of data sets. It provides a comprehensive set of metadata that can be used to describe the content, structure, and lineage of a data set.

We believe that supporting DCAT in Alteryx would be a valuable addition to the product. It would allow us to:

- Improve the interoperability of our data sets with other systems (M2M)

- Make it easier to share and reuse our data sets

- Provide a more consistent way to describe our data sets

- Bring down the costs of describing and developing interfaces with other Government Entities

- Work toward making our data Findable, Accessible, Interoperable, and Reusable (FAIR)

We understand that implementing support for this standard requires some development effort (possibly done in stages, from minimal viable support to full support). However, we believe that the benefits to the Alteryx Community worldwide and to Alteryx as a top-quality data preparation tool outweigh the cost.

I also expect the effort to be manageable (perhaps a macro would do as a start), since DCAT uses standard RDF syntax, which can be serialized as JSON (JSON-LD).
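As a rough idea of what a first-stage parser (or macro) would extract, here is a Python sketch that flattens a DCAT catalog into one row per dataset distribution. It assumes compact JSON-LD using the `dcat:` and `dct:` prefixes; real catalogs may use full IRIs, `@graph`, or Turtle/RDF-XML, for which an RDF library such as rdflib would be more robust. `parse_dcat_catalog` is a hypothetical name.

```python
import json
from typing import Dict, List


def _as_list(value) -> list:
    # JSON-LD allows a single value or an array for the same property.
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def _text(value) -> str:
    # Literals may be plain strings or {"@value": ...} objects; links may be {"@id": ...}.
    if isinstance(value, dict):
        return str(value.get("@value", value.get("@id", "")))
    return "" if value is None else str(value)


# Hypothetical first-stage DCAT parser (compact JSON-LD only).
def parse_dcat_catalog(jsonld: str) -> List[Dict[str, str]]:
    """One row per (dataset, distribution) pair from a dcat:Catalog document."""
    catalog = json.loads(jsonld)
    rows: List[Dict[str, str]] = []
    for ds in _as_list(catalog.get("dcat:dataset")):
        titles = _as_list(ds.get("dct:title"))
        title = _text(titles[0]) if titles else ""  # first title only (may be multilingual)
        keywords = ", ".join(_text(k) for k in _as_list(ds.get("dcat:keyword")))
        for dist in _as_list(ds.get("dcat:distribution")) or [{}]:
            rows.append({
                "dataset": _text(ds.get("@id")),
                "title": title,
                "keywords": keywords,
                "format": _text(dist.get("dct:format")),
                "downloadURL": _text(dist.get("dcat:downloadURL")),
            })
    return rows
```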

DCAT, which stands for Data Catalog Vocabulary, is a W3C Recommendation for describing data catalogs in RDF. It provides a set of classes and properties for describing datasets, their distributions, and their relationships to other datasets and data catalogs. This allows data catalogs to be discovered and searched more easily, and it also makes it possible to integrate data catalogs with other Semantic Web applications.

DCAT is designed to be flexible and extensible, so it can be used to describe a wide variety of data catalogs and data sets. It is also designed to be interoperable, so it can be combined with other vocabularies to create rich and interconnected descriptions of data and knowledge.

Here are some of the benefits of using DCAT:

- Improved discoverability: DCAT makes it easier to discover and use data sets, as it provides a standard way of describing their attributes.

- Increased interoperability: DCAT allows data catalogs to be integrated with other Semantic Web applications, making it possible to create more powerful and interoperable applications.

- Enhanced semantic richness: DCAT provides a way to add semantic richness to data set descriptions, making it possible to describe data sets in a more detailed and nuanced way.

Here are some examples of how DCAT is being used:

- The DataCite metadata standard uses DCAT to describe data catalogs.

- The European Data Portal uses DCAT to discover and search for data sets.

- The Dutch Government made it a mandatory standard for all Dutch Government Agencies.

As the Semantic Web continues to grow, DCAT is likely to become even more widely used.

DCAT

- Reference Page: https://www.w3.org/TR/vocab-dcat/

- Dutch (NL) Standard: https://forumstandaardisatie.nl/open-standaarden/dcat-ap-donl

- Wikipedia on DCAT: https://en.wikipedia.org/wiki/Data_Catalog_Vocabulary

RDF

- Reference Page: https://www.w3.org/TR/REC-rdf-syntax/

- Dutch (NL) Standard: https://forumstandaardisatie.nl/open-standaarden/rdf

- Wikipedia on RDF: https://en.wikipedia.org/wiki/Resource_Description_Framework

Hello all,

As of today, we can easily copy or duplicate a table with the In-Database tools. This is really useful when you want data from the production environment in a development environment.

But can we, really?

Short answer: no, we can't in these cases:

-partitions

-any constraints, such as primary and foreign keys

And even if support for these were implemented, it would mean setting these parameters manually.

So my proposal is simply a "Clone Table" tool that would clone the table from its SHOW CREATE TABLE statement and only ask you to specify the destination (database.table).
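A minimal sketch of the core step in Python, assuming the source DDL has already been retrieved with SHOW CREATE TABLE (other databases expose DDL differently). `clone_ddl` is hypothetical; it only rewrites the table name after `CREATE [OR REPLACE] [TEMPORARY|EXTERNAL|...] TABLE [IF NOT EXISTS]`, so partitions and key constraints carry over unchanged, but clauses that embed the source location (for example a `LOCATION` path) would still need handling.

```python
import re

# One identifier part: `quoted`, "quoted", or a bare word.
_IDENT = r'(?:`[^`]+`|"[^"]+"|[\w$]+)'
_CREATE_TABLE = re.compile(
    r'^(\s*CREATE\s+(?:OR\s+REPLACE\s+)?(?:(?:TEMPORARY|TEMP|EXTERNAL|TRANSIENT)\s+)?'
    r'TABLE\s+(?:IF\s+NOT\s+EXISTS\s+)?)'
    + _IDENT + r'(?:\.' + _IDENT + r')*',  # possibly qualified source table name
    re.IGNORECASE,
)


# Hypothetical "Clone Table" core: point the source DDL at a new destination.
def clone_ddl(ddl: str, destination: str) -> str:
    cloned, count = _CREATE_TABLE.subn(lambda m: m.group(1) + destination, ddl, count=1)
    if count == 0:
        raise ValueError("not a CREATE TABLE statement")
    return cloned
```

Running the rewritten statement on the development connection would then recreate the table with the same structure, after which the data could be copied as today.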

Best regards,

Simon

When working on a complex, branching workflow, I sometimes go down paths that do not give the correct result, but I want to keep them because they help me determine the correct path. I do not want these branches to run, as they slow down the workflow or may produce errors/warnings that muddy debugging. These paths can be several tools long and are not easily put in a container and disabled. Similar to the Cache and Run Workflow feature, which prevents upstream tools from refreshing, I am suggesting a Disable All Downstream Tools feature. In the workflow below, all the tools in the container could be disabled by right-clicking the first Sample tool in the container.

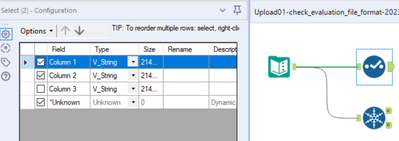

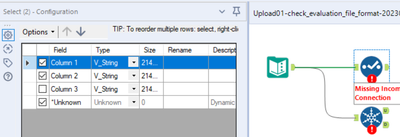
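Finding the tools to disable is a graph traversal: starting from the clicked tool, follow connections forward and collect everything reachable. A Python sketch, assuming connections are available as (origin ToolID, destination ToolID) pairs; `downstream_tools` is a hypothetical name. A tool fed by both the disabled branch and another branch (e.g., a Join) would also be collected.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Set, Tuple


# Hypothetical helper: breadth-first search over the workflow's connections.
def downstream_tools(start: int, connections: Iterable[Tuple[int, int]]) -> Set[int]:
    """All tools reachable from `start` (inclusive) by following connections forward."""
    children: Dict[int, List[int]] = defaultdict(list)
    for origin, destination in connections:
        children[origin].append(destination)
    seen = {start}
    queue = deque([start])
    while queue:
        tool = queue.popleft()
        for nxt in children[tool]:
            if nxt not in seen:  # each tool is visited once, even with diamonds
                seen.add(nxt)
                queue.append(nxt)
    return seen
```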

In some cases, a tool's information about its incoming columns is (temporarily) lost, e.g., if Autoconfig is switched off, if the incoming connection is temporarily missing, or if column names are generated dynamically and the workflow has not been run yet.

Many tools handle that situation well, e.g., Select, Formula, or Summarize. In these cases, the tools tell the user that they cannot find the incoming columns, but they preserve the configuration so that the user can still (at least partially) work on them and no important configuration information is lost:

Example Select Tool

- First step: Connections present, configuration typed in:

- Second step: Connection cut, configuration opened. The configuration looks broken but implicitly contains all settings:

- Third step: Connection re-connected. The configuration is as before:

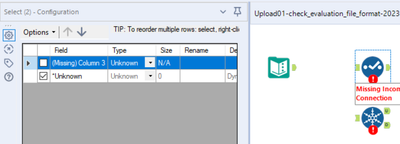

Other tools behave the opposite way, for example Unique or Macro Input (and surely many other tools). If the incoming columns are currently unknown to Designer and you click once on the tool's icon, the tool's entire configuration is lost. You might try to get the configuration back by pressing Undo, but in most cases this does not work. Or, even worse, you find out what happened later, when it's too late to undo. In that case, you either need an old version of the workflow to look up the configuration, or you have to re-develop it. Either way, this is unnecessary and time-consuming software behaviour.

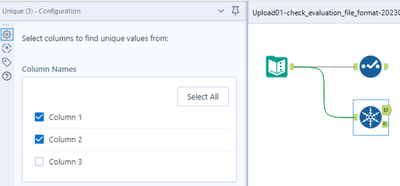

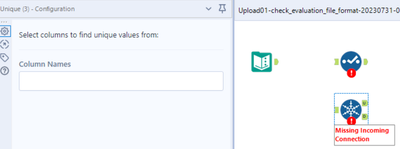

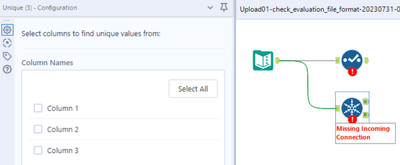

Example Unique Tool

- Step 1: Connections present, configuration typed in:

- Step 2: Connection cut, configuration opened. The configuration is empty:

- Step 3: Connection re-connected: The entire configuration is permanently lost:

I wasn't sure whether to report this as a bug or a feature enhancement; it is somewhere in between. Two aspects tell me that this should be changed:

- Inconsistent behaviour of different tools for no reason,

- Easy loss of programming work, resulting in time-consuming bug fixing.

Please make sure that all tools preserve their configuration also if information on incoming columns is temporarily lost.
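The requested behaviour can be expressed as a merge rule: saved per-field settings are never dropped, fields that are currently absent are only flagged, and new fields get defaults. A hypothetical Python sketch, assuming a tool's configuration is a mapping from field name to settings; `reconcile` is not an Alteryx API.

```python
from typing import Dict, List, Optional, Sequence, Tuple

FieldConfig = Dict[str, Dict[str, object]]  # field name -> that field's settings


# Hypothetical merge rule for tool configurations.
def reconcile(saved: FieldConfig, incoming: Optional[Sequence[str]],
              default: Dict[str, object]) -> Tuple[FieldConfig, List[str]]:
    """Merge saved per-field settings with the incoming fields without ever dropping
    saved settings. Returns (config, saved fields not currently present).
    `incoming=None` means the incoming columns are currently unknown."""
    config = {name: dict(settings) for name, settings in saved.items()}
    if incoming is None:
        return config, []  # unknown schema: keep everything, flag nothing
    present = set(incoming)
    for name in incoming:
        config.setdefault(name, dict(default))  # new fields get default settings
    missing = [name for name in saved if name not in present]
    return config, missing
```

When the connection is restored, the flagged fields simply match again and their original settings are still there.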

Hello all,

As of today, you must set which database (e.g., Snowflake, Vertica) you connect to in your In-DB connection alias. This is fine, but I think we should also be able to define the version (release) of the database. Databases keep adding features that Alteryx could use to improve user experience, performance, and security (e.g., Hive 3.0 has a catalog that could be used in the Visual Query Builder instead of slowly querying each schema).

I have in mind a menu with the following choices:

-default (legacy), stating which database version Alteryx assumes by default

-autodetect (with a query launched every time you run the workflow, when possible). If the detected version is higher than the last supported version, show a warning and run with the settings of the last supported version.

-manual selection of a release (to avoid launching the version query every time). The choices would be every version supported by Alteryx.
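A sketch of the three menu options in Python, assuming each supported database version is represented as a version tuple and autodetect receives the version string returned by the database. `parse_version` and `choose_profile` are hypothetical; autodetect picks the highest supported version that is not newer than the detected one, and only warns when the detected version is beyond the last supported release.

```python
import re
import warnings
from typing import Optional, Sequence, Tuple

Version = Tuple[int, ...]


# Hypothetical helper: "3.1.2-abc" -> (3, 1, 2)
def parse_version(text: str) -> Version:
    match = re.match(r"\s*(\d+(?:\.\d+)*)", text)
    if match is None:
        raise ValueError(f"cannot parse version: {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


# Hypothetical selector for the default / autodetect / manual menu choices.
def choose_profile(mode: str, supported: Sequence[Version], default: Version,
                   detected: Optional[str] = None,
                   manual: Optional[Version] = None) -> Version:
    profiles = sorted(supported)
    if mode == "default":
        return default
    if mode == "manual":
        if manual not in profiles:
            raise ValueError(f"unsupported version: {manual}")
        return manual
    if mode == "autodetect":
        if detected is None:
            raise ValueError("autodetect needs the version string returned by the database")
        version = parse_version(detected)
        last = profiles[-1]
        if version[:len(last)] > last:  # e.g. 4.0 vs last supported 3.1 (3.1.2 is fine)
            warnings.warn(f"database version {detected} is newer than {last}; "
                          f"using the settings for {last}")
        eligible = [p for p in profiles if p <= version]
        return eligible[-1] if eligible else default
    raise ValueError(f"unknown mode: {mode}")
```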

Best regards,

Simon
