Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new Image Recognition tool for a few days, I think it could be improved:

- by showing the image dimension constraints next to each of the pre-trained models;

- by adding a proper tool to split the training data correctly (so that each label has an equal number of images);

- and, finally, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool says it requires RGB images).
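A rough Python sketch of the balanced split suggested in the second point follows. It is illustrative only: the function name `balanced_split`, the `(path, label)` input shape and the policy of downsampling every label to the size of the smallest one are assumptions, not how the Image Recognition tool works.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so every label contributes equally.

    Each label is downsampled to the size of the smallest label, then the
    kept images are divided into training and validation sets.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)
    rng = random.Random(seed)  # fixed seed makes the split reproducible

    train, valid = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, per_label)  # random subset, no repeats
        train += [(path, label) for path in chosen[:n_train]]
        valid += [(path, label) for path in chosen[n_train:]]
    return train, valid
```

With 10 "cat" images and 6 "dog" images, each label keeps 6 images: 4 go to training and 2 to validation.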

Question: do you plan to let users choose between CPU and GPU processing in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help many more people understand the use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


It would be ever so helpful, and save a couple of extra steps, if a Count Distinct option could be added to the Cross Tab tool. Seems like a slam dunk since plain ole Count is already a choice.
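To make the request concrete, here is a minimal Python sketch of what a Count Distinct cross tab would compute, assuming rows arrive as dictionaries. The function `crosstab_count_distinct` and the field names are hypothetical.

```python
from collections import defaultdict


def crosstab_count_distinct(rows, group_field, header_field, value_field):
    """Pivot rows so each header_field value becomes a column holding the
    number of distinct value_field values for each group_field value."""
    seen = defaultdict(lambda: defaultdict(set))
    for row in rows:
        seen[row[group_field]][row[header_field]].add(row[value_field])
    return {
        group: {header: len(values) for header, values in headers.items()}
        for group, headers in seen.items()
    }


rows = [
    {"Region": "East", "Month": "Jan", "Customer": "A"},
    {"Region": "East", "Month": "Jan", "Customer": "A"},
    {"Region": "East", "Month": "Jan", "Customer": "B"},
    {"Region": "West", "Month": "Jan", "Customer": "C"},
    {"Region": "West", "Month": "Feb", "Customer": "C"},
]
print(crosstab_count_distinct(rows, "Region", "Month", "Customer"))
```

For East/Jan, plain Count would give 3, while Count Distinct gives 2 because customer A appears twice. The sketch simply omits group/header combinations that have no rows.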

When I'm organizing my workflow, sometimes I want to move a whole tool container on the canvas. Currently, the only way to do this is to first find the header then select and drag this. When the ends of the container is off screen, it can be hard to know how much I wanted to move my container to get it where I wanted relative to the other tools around it. I feel like it would be nice to be able to select anywhere on the tool container and drag it around (possibly holding right click and dragging so that current tool selection capabilities aren't hindered).

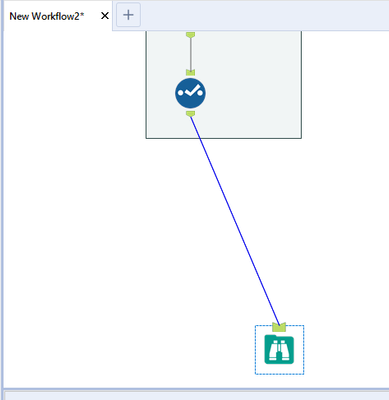

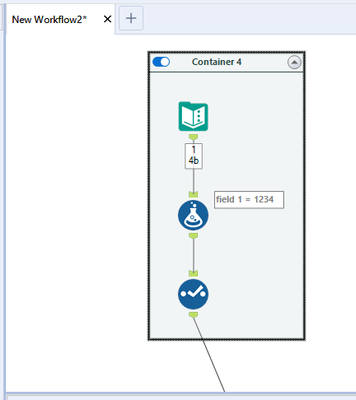

In the (simplified) images below, you'll see that I want my tool container to vertically align just above the browse tool:

I can't currently see the top of the tool container to move it, though, so I must first navigate to that part of the workflow to select the header.

When creating a connection using DCM (for example, ODBC for SQL), the process requires an ODBC Data Source Name (see screenshot 1 below).

However, the alias manager (another way to make database connections) does allow DSN-free connections, which are essential for large enterprises (see screenshot 2 below).

NOTE: the connection manager screens do have another option, Quick Connect, which seems to allow DSN-free connections. However, it is non-intuitive: you're asked to type in the name of the driver yourself, which seems an obvious failure point (especially since the list of all installed drivers can be read straight from the registry).

Please could we change DCM to use the same interfaces and concepts as the alias screens, so that all DCM connections can easily be created without requiring an ODBC DSN, and so that DSN-free connections are the default mode of operation?

Screenshot 1: DCM connection

Screenshot 2: alias manager connection

cc: @wesley-siu @_PavelP @ToddTarney
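For reference, the difference between a DSN-based and a DSN-free ODBC connection comes down to the connection string. The sketch below builds both forms. `DSN=` and `DRIVER={...}` are standard ODBC connection-string keywords; the helper name and the server, database and DSN names are hypothetical placeholders, and real strings may need driver-specific attributes or brace-quoting of values that contain semicolons.

```python
def odbc_connection_string(dsn=None, driver=None, **attributes):
    """Build an ODBC connection string from either a DSN or a driver name.

    A DSN-based string depends on a data source configured on each machine;
    a driver-based (DSN-free) string carries everything inline.
    """
    if (dsn is None) == (driver is None):
        raise ValueError("pass exactly one of dsn or driver")
    parts = [f"DSN={dsn}"] if dsn is not None else [f"DRIVER={{{driver}}}"]
    parts += [f"{key}={value}" for key, value in attributes.items()]
    return ";".join(parts) + ";"


# DSN-based: only works where a DSN called "SalesDB" has been set up.
print(odbc_connection_string(dsn="SalesDB"))  # DSN=SalesDB;
# DSN-free: names the installed driver directly.
print(odbc_connection_string(
    driver="ODBC Driver 17 for SQL Server",
    SERVER="sqlhost",
    DATABASE="sales",
    Trusted_Connection="yes",
))  # DRIVER={ODBC Driver 17 for SQL Server};SERVER=sqlhost;DATABASE=sales;Trusted_Connection=yes;
```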

Hello all, just another little QoL suggestion!

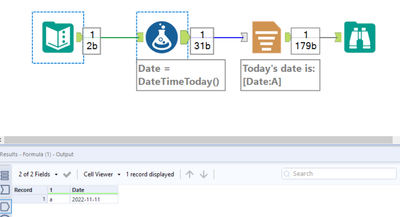

There have been a few occasions recently where I've been adding some Report Text to a rendered output and have needed to reference the current date. However, when building a quick formula to do this, I've first needed to add a dummy field in a Text Input tool so that the Formula tool doesn't error for lack of an incoming connection.

I know I can create a branch off the main dataset and just use that, but for something simple like this, I find it cleaner to isolate and generate it this way. So it'd be great if, for situations like this, the Formula tool's input anchor were optional (obviously only when using it to create new fields).

There are likely many other examples where you may want to build a simple workflow (or branch of one), starting with a quick field generated within the Formula tool itself. However, just thought I'd raise this with a scenario I've encountered a couple of times recently.

Cheers!

From what I can tell using ProcMon, when the Directory tool lists files (including subdirectories), the Alteryx Engine currently runs a single-threaded process.

When you're trying to find files by checking recursively in large network paths, this can take hours to run.

It would be great if the tool split up the list of directories (for example, by listing two or three levels down first) and then ran each of those recursive paths in parallel.

While it is possible to do this using a custom Python script or a cmd-to-PowerShell command, it would be great if this were a native part of the application.
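The splitting strategy described above can be sketched in Python: list the top level once, then walk each subdirectory on its own thread. This is a sketch under assumptions, not how the Directory tool works internally: it splits only one level down (the post suggests two or three), uses threads because listing a network share is mostly I/O wait, and the function names are hypothetical.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def walk_files(root):
    """Recursively list every file under root on the current thread."""
    files = []
    for dirpath, _dirnames, filenames in os.walk(root):
        files.extend(os.path.join(dirpath, name) for name in filenames)
    return files


def list_files_parallel(root, max_workers=8):
    """List all files under root, walking each top-level subdirectory in parallel."""
    files, subdirs = [], []
    with os.scandir(root) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                subdirs.append(entry.path)   # walked later, one task per subtree
            elif entry.is_file():
                files.append(entry.path)     # files directly under root
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for subtree in pool.map(walk_files, subdirs):
            files.extend(subtree)
    return files
```

Splitting deeper (for example, collecting second-level directories before submitting tasks) gives more, smaller tasks, which helps when one top-level folder dominates the tree.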

My understanding is that each .yxdb file contains hidden metadata. The following use case is common:

As an Alteryx Developer/Designer, I want to know the source of a .yxdb file.

Ideally, I would know as much about the workflow (name, path, workflow version, AYX version, user ID) as possible.

It would be awesome to be able to build a workflow that lets me quickly search the metadata of the .yxdb files on my client computers.

Cheers,

Mark

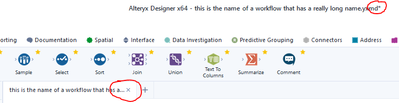

It looks like as of 2022.3, workflow tabs get shortened to a specific width. This is fine; however, the asterisk that shows whether my workflow has unsaved changes no longer appears in the tab, so I have to look at the top of the screen to see it. I know this isn't a huge deal, but it would be nice to see the asterisk in the tab again, so that I can tell which workflows have been saved even while I'm looking at a different open workflow. One solution may be to move the asterisk to the front of the workflow name.
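A minimal sketch of the "asterisk first" suggestion, assuming a hypothetical `tab_label` helper and a fixed character budget (Designer truncates by pixel width, which this does not model): because the marker is added before the name is shortened, it survives however long the name is.

```python
def tab_label(workflow_name, unsaved, max_chars=20):
    """Build a tab label that keeps the unsaved-changes marker visible."""
    prefix = "*" if unsaved else ""
    budget = max_chars - len(prefix)  # characters left for the name itself
    if len(workflow_name) > budget:
        workflow_name = workflow_name[: budget - 3] + "..."
    return prefix + workflow_name


print(tab_label("Monthly Sales Reconciliation.yxmd", unsaved=True))  # *Monthly Sales Re...
```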

Also, would users want a setting to allow them to keep full workflow names versus shortening them?

Thanks!

Apologies if this has been suggested already - did a search and didn't see anything similar.

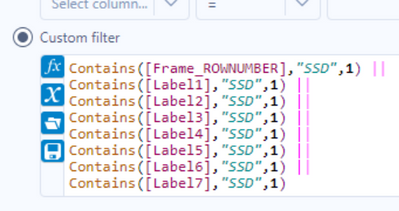

This is a quality of life/UX idea. The search functionality in the results pane essentially does a 'contains' search on all of the columns (see below screenshots for the filter inserted by the 'apply data manipulations button). As I build workflows and profile the data, it'd be helpful if I could click one or more columns and limit the search bar to just those fields.

Right now, depending on the dataset I could get rows returned by the search due to the search term appearing in columns that aren't relevant. To workaround this I could add select tools to limit the columns or do more robust filters in a filter tool, but having it built in would be very helpful.
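The difference between the current all-column "contains" search and a column-limited one can be sketched as follows. The function and field names are hypothetical, and case-insensitive matching is an assumption about the Results window's behaviour.

```python
def search_rows(rows, term, columns=None):
    """Return rows where term appears (case-insensitively) in a searched column.

    columns=None searches every column, like the current Results search;
    passing a list of column names limits the "contains" match to those fields.
    """
    needle = term.lower()
    matches = []
    for row in rows:
        fields = row.keys() if columns is None else columns
        if any(needle in str(row[field]).lower() for field in fields):
            matches.append(row)
    return matches


rows = [
    {"Name": "Paris Store", "City": "Lyon"},
    {"Name": "Main Store", "City": "Paris"},
]
print(len(search_rows(rows, "paris")))                # 2: "Paris" matches in Name or City
print(search_rows(rows, "paris", columns=["City"]))   # only the row whose City is Paris
```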

When I use the Comment tool, it's difficult to select the tools inside it. When I use the Tool Container instead, the container text doesn't support font sizes or multiple lines of text, so I end up moving the Comment into the Container, but I still have problems selecting a group of tools.

So a combined Comment and Container tool would be wonderful!

Bonus: it would be great if the Comment tool supported multiple font sizes.

Alteryx hosting CRAN

Installing R packages in Alteryx has been a tricky issue with many posts over the years and it fundamentally boils down to the way the install.packages() function is used; I've made a detailed post on the subject. There is a way that Alteryx can help remedy the compatibility challenge between their updates of Predictive Tools and the ever-changing landscape that is open-source development. That way is for Alteryx to host their own CRAN!

The current version of Alteryx runs R 4.1.3, which is considered an 'old release', and there are over 18,000 packages on CRAN for this version of R. By the time you read this post, there is likely a newer version of one of these packages that the package author has submitted to the R Foundation's CRAN. There is also a good chance that package isn't compatible with any Alteryx tool that uses R. What if you need that package for a macro you've downloaded? How do you get the old version, the one that is compatible? This is where an Alteryx-hosted CRAN comes in.

Alteryx can host their own CRAN, one whose packages are not updated by individual package authors over time, so they remain unchanged and compatible with the released version of Predictive Tools. All we need to do as Alteryx users is point install.packages() at the Alteryx CRAN to get new packages, like so:

```r
install.packages(pkg_name, repos = "https://cran.alteryx.com") # pkg_name: the package you need
```

There is an R package for creating a CRAN-like repository, so Alteryx can get R to do the legwork for them. Here is one way of using the miniCRAN package:

```r
library(miniCRAN) # makeRepo() builds a CRAN-like repository
library(tools)    # write_PACKAGES() writes the repository index files

path2CRAN <- "/local/path/to/CRAN"
dir.create(path2CRAN, recursive = TRUE, showWarnings = FALSE) # makeRepo() expects the folder to exist

ver <- paste(R.version$major, strsplit(R.version$minor, "\\.")[[1]][1], sep = ".") # e.g. "4.1" under R 4.1.3

repo <- "https://cran.r-project.org" # R Foundation's CRAN

m <- available.packages(repos = repo) # a matrix of all packages and their metadata from repo

pkgs4CRAN <- m[, "Package"] # character vector of all package names from repo

makeRepo(pkgs = pkgs4CRAN, path = path2CRAN, type = c("win.binary", "source"), repos = repo) # makes the local repo

write_PACKAGES(paste(path2CRAN, "bin/windows/contrib", ver, sep = "/"), type = "win.binary") # (re)creates the PACKAGES files for package binaries

write_PACKAGES(paste(path2CRAN, "src/contrib", sep = "/"), type = "source") # (re)creates the PACKAGES files for package sources
```

This creates a directory structure that mirrors the R Foundation's CRAN, but only for the R version that Alteryx uses (4.1/).
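As a cross-check of that layout: R's `contrib.url()` derives a repository's Windows binary folder from the R major.minor version and uses a single `src/contrib` folder for sources. The hypothetical Python helper below mimics that mapping for these two package types only; `https://cran.alteryx.com` is the address proposed in this post, not an existing service.

```python
def contrib_url(repo, r_version, pkg_type):
    """Mimic where R's contrib.url() looks for packages inside a repository."""
    base = repo.rstrip("/")
    if pkg_type == "win.binary":
        major, minor = r_version.split(".")[:2]  # "4.1.3" -> "4", "1"
        return f"{base}/bin/windows/contrib/{major}.{minor}"
    if pkg_type == "source":
        return f"{base}/src/contrib"  # shared by all R versions
    raise ValueError(f"unsupported package type: {pkg_type}")


for version in ("4.1.3", "4.2.2"):
    print(contrib_url("https://cran.alteryx.com", version, "win.binary"))
# https://cran.alteryx.com/bin/windows/contrib/4.1
# https://cran.alteryx.com/bin/windows/contrib/4.2
```

This is why a new R minor version only needs a new binary folder alongside the existing ones.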

Alteryx can create the CRAN, host it somewhere meaningful (like https://cran.alteryx.com), update Predictive Tools to use the packages downloaded with the script above, and then release the new version of Predictive Tools and announce the CRAN. Users like you and me just need to tell the R tool (for example) to install from the Alteryx repo rather than any other, which may have package dependency conflicts.

This is future-proof too. Let's say Alteryx decide to release a new version of Designer and Predictive Tools based on R 4.2.2. What do they do? Download R 4.2.2 and run the above script, which creates a new directory called 4.2/; update Predictive Tools to work with R 4.2.2 and the packages in their CRAN; host the 4.2/ directory on their CRAN; and then release the new version of Designer and Predictive Tools.

Simple!

If a tool fails, there should be a way to customise the error message. Currently, one way to do it is to log all messages to a file, read that file with another workflow, and then customise the messages (Alteryx workflow error handling - Alteryx Community). However, there should be a more convenient solution. We should be able to:

- Find/replace parts of a message.

- Specify which tools' messages to modify.

- Change the message type.

- Change the order of the messages in the Results window, to prioritise the critical ones.

- Pick which messages cannot be hidden by "xxx more errors not displayed".

This would especially help for macros, as sometimes a specific tool fails within a macro and produces a message that is not user-friendly.
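Until something like this exists, the log-file workaround still needs a post-processing step. Below is a hypothetical Python sketch of the rules listed above (find/replace, per-tool targeting, type changes and errors-first ordering). The `Message` and `Rule` shapes and the severity order are assumptions; Alteryx does not expose messages in this form.

```python
import re
from dataclasses import dataclass
from typing import Optional, Set

# Lower number = shown earlier in the results.
SEVERITY = {"Error": 0, "Warning": 1, "Info": 2}


@dataclass
class Message:
    tool_id: int
    kind: str  # "Error", "Warning" or "Info"
    text: str


@dataclass
class Rule:
    tool_ids: Set[int]              # which tools' messages the rule applies to
    pattern: str                    # regular expression to find
    replacement: str                # replacement text (may use group references)
    new_kind: Optional[str] = None  # optionally change the message type


def customise(messages, rules):
    """Apply every matching rule to each message, then list errors first."""
    result = []
    for msg in messages:
        kind, text = msg.kind, msg.text
        for rule in rules:
            if msg.tool_id in rule.tool_ids and re.search(rule.pattern, text):
                text = re.sub(rule.pattern, rule.replacement, text)
                kind = rule.new_kind or kind
        result.append(Message(msg.tool_id, kind, text))
    # sorted() is stable, so messages of equal severity keep their original order.
    return sorted(result, key=lambda m: SEVERITY.get(m.kind, len(SEVERITY)))
```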

When you get a reminder or notification, there needs to be an option to permanently ignore the update.

Some updates only let you ignore or postpone the reminder for as little as 7 days. There should absolutely be options for longer time frames, including a permanent "Do not remind me about this update again".

Another reminder is fine when there is a newer update, but don't repeatedly show a reminder for the same update (system, version, data set, etc.).

There are times companies don't provide updates for a year or more. You shouldn't have to keep dismissing update reminders/notices when you don't intend to update until maybe the next version or a year from now.

Remove the constant update notification.

I would love to be able to see the actual curl statement that the Download tool executes. Maybe a debug switch could be added that produces one extra output field containing the curl statement itself? This would greatly improve the ability to debug when the Download tool isn't working as expected.
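As an illustration of what such a debug field could contain, the sketch below renders a request as an equivalent curl command line. `-X`, `-H` and `--data` are real curl options; the `as_curl` helper, the example URL and the assumption that the Download tool's settings map onto a method, headers and a body are illustrative only. `shlex.quote` applies POSIX shell quoting, so the output suits bash rather than cmd.exe.

```python
import shlex


def as_curl(method, url, headers=None, body=None):
    """Render an HTTP request as an equivalent curl command line for debugging."""
    parts = ["curl", "-X", method.upper()]
    for name, value in (headers or {}).items():
        parts += ["-H", f"{name}: {value}"]
    if body is not None:
        parts += ["--data", body]
    parts.append(url)
    return " ".join(shlex.quote(part) for part in parts)


print(as_curl("post", "https://api.example.com/items",
              {"Content-Type": "application/json"}, '{"id": 1}'))
# curl -X POST -H 'Content-Type: application/json' --data '{"id": 1}' https://api.example.com/items
```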

At the moment, at least for Postgres and ODBC connections, DCM only supports a named DSN that must be installed on each machine running Designer or Server. However, access to the ODBC administrator is restricted to admins in my company, which makes DCM more trouble than it is worth to use.

Connection strings work well in workflows, have been implemented on the Gallery before, and do not require access to the ODBC administrator. Could DCM please be improved to support native connection strings?

Hi

The wording of the tooltip displayed in Results window cells with long strings is misleading. The current wording is "This cell has truncated characters".

New users tend to infer that this means that the data value has been truncated somewhere upstream. See here, here and here. Changing this message to something like "Only a portion of long strings is displayed" will help reduce the confusion immensely.

Dan

I constantly find myself using Pre-SQL and Post-SQL commands in the Output tool to run SQL when I don't actually have any data to output.

One example is when I load data into S3 and then want to load it into Redshift. I have SQL code to run but no data to output, so I end up writing a dummy row into a temp table.

So can we have an SQL tool that simply acts like a Pre-SQL command, without the associated data output? Once the command has run, the workflow should be able to continue, so the tool should have optional input and output anchors, like the Run Command tool.

There is an extensive need from customers to be able to create emails but not send them (right away at least).

I'm in the banking sector, and I have seen many banks using Alteryx and Alteryx Server in their routines. When it comes to sending automatic e-mails in this sector, it's very risky: we need a "four eyes check" when dealing with client information. Currently, there is no workaround that can be applied to the Email tool when it is used on Alteryx Server either.

My idea is simply to add a "Save as draft" button to the Email tool that outputs an .eml file. Outlook can read .eml files, so this would create a draft.

This should also be taken into account for Alteryx Server, so that any user can run the workflow and get the desired draft.

Thanks
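One common way to produce an .eml file that Outlook opens as an unsent draft is the `X-Unsent: 1` header, which Outlook is widely reported to honour; behaviour may differ between Outlook versions and other mail clients. A minimal Python sketch using the standard `email` package, with a hypothetical helper name and example addresses:

```python
from email.message import EmailMessage


def draft_eml(sender, to, subject, body):
    """Return the bytes of an .eml file intended to open as an Outlook draft."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = to
    msg["Subject"] = subject
    # Asks Outlook to open the message in compose mode instead of as a received mail.
    msg["X-Unsent"] = "1"
    msg.set_content(body)  # plain-text body
    return msg.as_bytes()


eml = draft_eml("reports@bank.example", "client@example.com",
                "Monthly statement", "Please review before sending.")
```

Writing the returned bytes to a file ending in `.eml` would give the second reviewer a draft to check and send.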

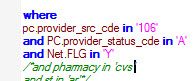

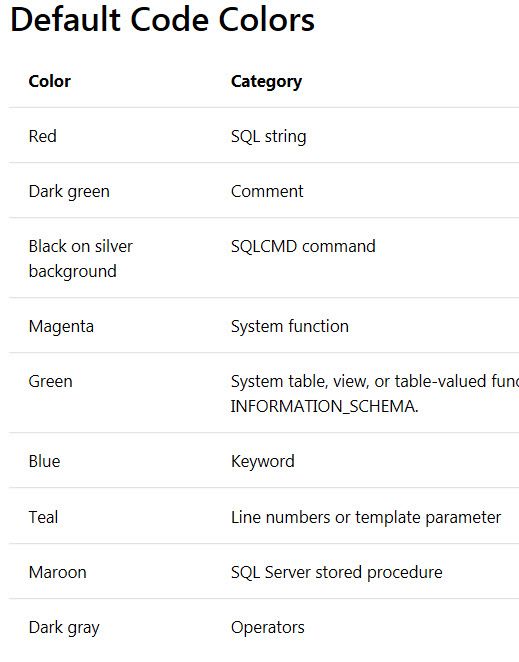

We need color coding in the SQL Editor window for Input tools. We always have to pull our code out of there and paste it into a Teradata window so it is easier to read and troubleshoot. This would save us time and hassle and would improve the Alteryx user experience. (I think you've used a couple of my ideas already. This one is a good one too.)

When writing a good amount of code, it is easy to get lost in a sea of parentheses. Just when you think you're all done, you get an error that can force you to scour through your code to find the missing, extra, or misplaced parenthesis.

A common feature today is to highlight a parenthesis when its partner is clicked on. This instantly lets you know if you have the wrong number of them and where.

I didn't think this was that important early on in Alteryx, at least for me. Formulas were meant to be short and easily readable at a glance. Now, as I dig deeper, there are R, Python, SQL and other text-heavy inputs.

I don't need a full-fledged text editor in Alteryx, but I would love some quality of life features like parentheses matching.
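Parenthesis matching is usually done with a stack: push each opening bracket's position, pop it when a closing bracket arrives, and record the pair. A minimal Python sketch follows; the function name is hypothetical, and treating quoted text as opaque (without escape handling) is a simplification that a real editor would refine per language.

```python
PAIRS = {")": "(", "]": "[", "}": "{"}


def match_brackets(code):
    """Map each bracket's index to its partner's index and list unmatched ones.

    Returns (pairs, unmatched). Brackets inside single- or double-quoted
    strings are ignored; escape sequences are not handled.
    """
    pairs, unmatched, stack = {}, [], []
    quote = None
    for i, ch in enumerate(code):
        if quote:
            if ch == quote:
                quote = None          # closing quote ends the string
        elif ch in "'\"":
            quote = ch                # opening quote starts a string
        elif ch in "([{":
            stack.append(i)
        elif ch in PAIRS:
            if stack and code[stack[-1]] == PAIRS[ch]:
                j = stack.pop()
                pairs[i], pairs[j] = j, i
            else:
                unmatched.append(i)   # extra or mismatched closing bracket
    unmatched.extend(stack)           # openers that were never closed
    return pairs, sorted(unmatched)


print(match_brackets("f(x))"))  # ({3: 1, 1: 3}, [4])
```

An editor would look up the clicked position in `pairs` to highlight its partner and flag every index in `unmatched`.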

From Wikipedia:

In a database, a view is the result set of a stored query on the data, which the database users can query just as they would in a persistent database collection object. This pre-established query command is kept in the database dictionary. Unlike ordinary base tables in a relational database, a view does not form part of the physical schema: as a result set, it is a virtual table computed or collated dynamically from data in the database when access to that view is requested. Changes applied to the data in a relevant underlying table are reflected in the data shown in subsequent invocations of the view. In some NoSQL databases, views are the only way to query data.

Views can provide advantages over tables:

Views can represent a subset of the data contained in a table. Consequently, a view can limit the degree of exposure of the underlying tables to the outer world: a given user may have permission to query the view, while denied access to the rest of the base table.

Views can join and simplify multiple tables into a single virtual table.

Views can act as aggregated tables, where the database engine aggregates data (sum, average, etc.) and presents the calculated results as part of the data.

Views can hide the complexity of data. For example, a view could appear as Sales2000 or Sales2001, transparently partitioning the actual underlying table.

Views take very little space to store; the database contains only the definition of a view, not a copy of all the data that it presents.

Depending on the SQL engine used, views can provide extra security.

I would like to create a view instead of a table.
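The Sales2000 example and the point that changes to the underlying table are reflected in the view can be seen with SQLite, used here only as a convenient stand-in; `CREATE VIEW` syntax and permissions vary by database, and the table and view names are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, year INTEGER, amount REAL);
    INSERT INTO sales VALUES ('East', 2000, 100), ('West', 2000, 50), ('East', 2001, 70);
    -- Only this query definition is stored, not a copy of the rows.
    CREATE VIEW sales2000 AS
        SELECT region, SUM(amount) AS total
        FROM sales WHERE year = 2000
        GROUP BY region;
""")


def sales2000_totals():
    """Query the view; SQLite recomputes it from the base table each time."""
    return conn.execute("SELECT region, total FROM sales2000 ORDER BY region").fetchall()


print(sales2000_totals())  # [('East', 100.0), ('West', 50.0)]
conn.execute("INSERT INTO sales VALUES ('West', 2000, 25)")
print(sales2000_totals())  # [('East', 100.0), ('West', 75.0)]
```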
