Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
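The second suggestion (an equivalent number of images per label) can be sketched in a few lines. This is a minimal Python sketch, not how the Image Recognition tool works: it assumes the training set is a list of (image path, label) pairs, equalizes the labels by randomly downsampling each one to the size of the smallest, and then splits every label with the same train/validation ratio. `balanced_split` and its parameters are hypothetical names.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so that every label keeps the same number
    of images, and every label is split train/validation in the same ratio."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # Equalize the labels: downsample each one to the size of the smallest.
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, validation = [], []
    for label in sorted(by_label):
        chosen = rng.sample(by_label[label], per_label)
        train += [(path, label) for path in chosen[:n_train]]
        validation += [(path, label) for path in chosen[n_train:]]
    return train, validation


samples = [(f"cat_{i}.png", "cat") for i in range(10)] + [
    (f"dog_{i}.png", "dog") for i in range(6)
]
train, validation = balanced_split(samples)
print(len(train), len(validation))  # 8 4
```

Downsampling throws images away; oversampling the smaller labels would be the alternative when data is scarce.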

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


I've seen this question before and have run into it myself. I'd like to see a new tool that would allow a developer (of a workflow) to choose a path of logic based upon criteria known only during the execution of a module.

IF LEFT INPUT record count < 10,000 THEN Path 1 (e.g. use a Calgary Join)

ELSE Path 2 (e.g. use a standard Join)

ENDIF
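The requested behavior amounts to a branch evaluated while the workflow runs. A minimal Python sketch, where `calgary_join` and `standard_join` are hypothetical placeholders for the two paths and the 10,000-record threshold comes from the example above:

```python
def calgary_join(left, right):
    # Hypothetical placeholder for "Path 1" (e.g. a Calgary Join).
    return "Path 1"


def standard_join(left, right):
    # Hypothetical placeholder for "Path 2" (e.g. a standard Join).
    return "Path 2"


def run_conditional_join(left, right, threshold=10_000):
    # The branch is chosen at run time, from a value (the left input's
    # record count) that is not known at design time.
    path = calgary_join if len(left) < threshold else standard_join
    return path(left, right)


print(run_conditional_join(left=[{"id": 1}] * 500, right=[]))  # Path 1
```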

Thanks,

Mark


We have discussed, on several occasions and in different forums, the importance of giving Alteryx control over the order of execution, conditional execution, design patterns and even orchestration.

I presented this idea some time ago, but someone asked me whether it had been posted, and since it had not, I’m putting it here so you can give some feedback on it.

The basic concept behind this idea is to allow us (users) to have:

- Design Patterns

- Repetitive patterns to be reusable.

- Select after an Input tool

- Drop Nulls

- Get not matching records from join

- Conditional execution

- Tell Alteryx to execute some logic if something happens.

- Record count

- Errors

- Any other condition

- Order of execution

- Need to tell Alteryx what to run first, what to run next, and so on…

- Run this first

- Execute this portion after previous finished

- Wait until “X” finishes to execute “Y”

- Orchestration

- Putting it all together

This approach relies on functionality that is already in the product (such as filtering logic, loading & saving, caching and blocking, among others), exposed through a Tool Container with enhanced attributes, like this example:

The approach is to extend the Tool Container’s attributes.

This proposal uses existing functionality we already have in Designer.

So, basically, the Tool Container gets ‘superpowers’ through the addition of capabilities such as: accepting input data, saving the contents of the container (to create a design pattern, or a very commonly used sequence of tools chained together), outputting data, running the tools included in the container, etc., plus a configuration screen like:

- Refers to the current interface of the Tool Container.

- Provides the ability to disable a Container (and all tools within it) once it runs.

- Based on current behavior: enabling or disabling a Tool Container from an Interface tool.

- Input and output data for the container’s logic will make it possible to pick up and/or save files from a particular container, to be used in later containers or to persist data as a partial result of the entire workflow’s logic (for example, updating a dimension table).

- Based on current behavior: Input & Output Data, Cache, Run Command tools, and some macros like Prepare Attachment.

- Order of Execution: can be Absolute or Relative. With an Absolute run, the containers are taken in order and their contents executed. With a Relative run, we can configure which container should run before and after, block until the previous container finishes, or wait until this container finishes before executing the next container in the list.

- Based on current behavior: Block Until Done, Cache, Find Replace, some Interface Designer capabilities (for chained apps, for example), and the basic behavior of macros.

- Conditional Execution: to conditionally execute other containers, conditions must be evaluated. Here, the idea is to evaluate conditions on the data, on Interface tools, or on the occurrence of Errors/Warnings.

- Based on current behavior: Filter tool, some Interface tools, Test tool, Cache, Select.

- Notes: Documentation text that will appear automatically inside the container, with options to place it on top or below the tools, or hide it.
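The Relative "Order of Execution" and "Conditional Execution" settings can be modeled as a dependency graph plus a per-container condition. A minimal Python sketch, assuming containers are plain callables sharing a context dictionary; `run_containers`, the dependency mapping and the condition predicates are hypothetical, and it uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) so that each container runs only after the containers it waits on:

```python
from graphlib import TopologicalSorter


def run_containers(containers, depends_on, conditions, context):
    """containers: name -> callable(context)
    depends_on: name -> names that must finish first (relative order)
    conditions: name -> predicate(context); a container runs only if it returns True
    """
    graph = {name: depends_on.get(name, set()) for name in containers}
    executed = []
    for name in TopologicalSorter(graph).static_order():
        should_run = conditions.get(name, lambda ctx: True)
        if not should_run(context):
            continue  # conditional execution: skip, but let dependents proceed
        containers[name](context)
        executed.append(name)
    return executed


context = {"records": []}
containers = {
    "load": lambda ctx: ctx["records"].extend([1, 2, 3]),
    "clean": lambda ctx: ctx.update(records=[r for r in ctx["records"] if r != 2]),
    "report": lambda ctx: ctx.update(report=len(ctx["records"])),
    "alert": lambda ctx: ctx.update(alert="no records left"),
}
depends_on = {"clean": {"load"}, "report": {"clean"}, "alert": {"clean"}}
conditions = {"alert": lambda ctx: not ctx["records"]}
print(run_containers(containers, depends_on, conditions, context))  # ['load', 'clean', 'report']
```

In this sketch a skipped container counts as finished for the containers that wait on it; skipping its dependents as well would be an equally valid design choice.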

This concludes a brief introduction to the idea. Taken a little further, it would even allow something like an Orchestration layout, where users can drag and drop containers or patterns and orchestrate them into a solution, as we can with the Visual Layout tool or the Interactive Chart tool:

I'm looking forward to hearing what you think.

Best

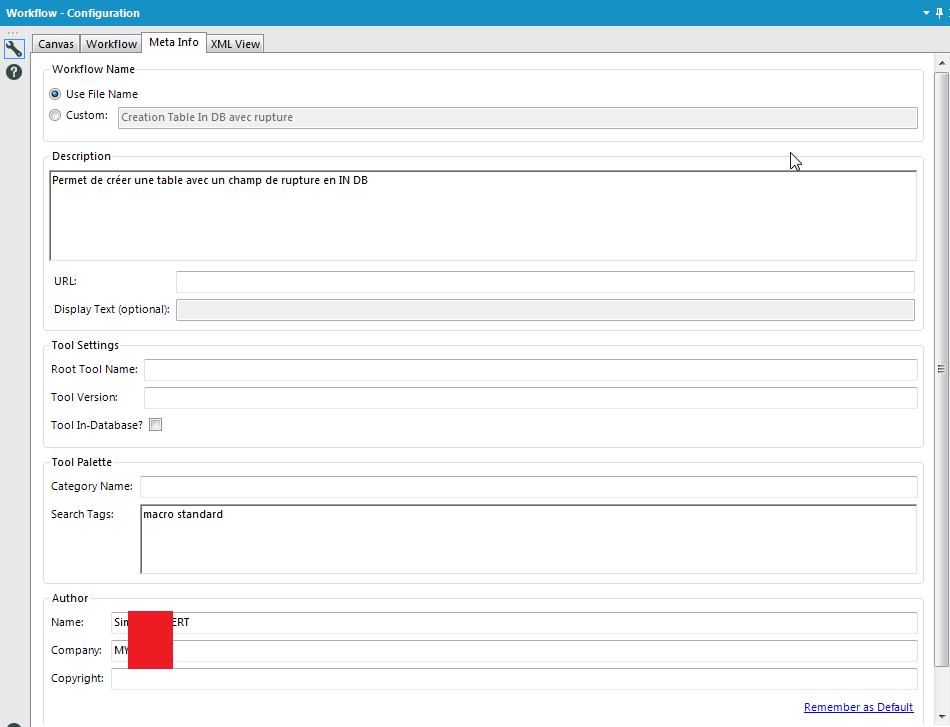

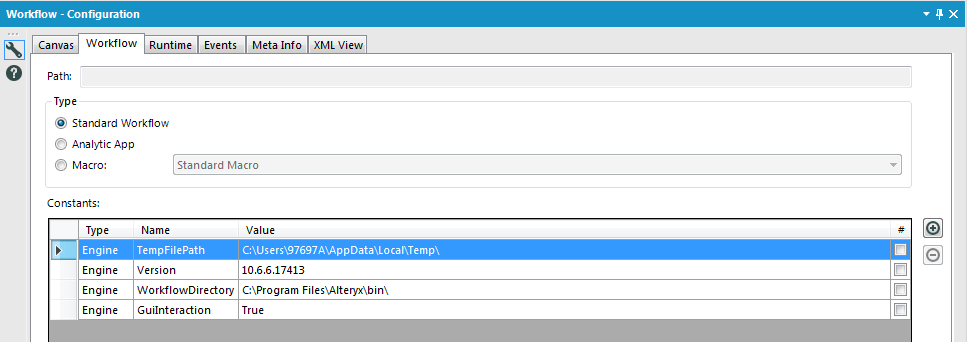

I love Workflow Meta Info, especially the ability to set the Author, the search tags, the version, the description, etc.

But why can't we use these values as Engine Constants? It doesn't seem very hard to implement, and it would make development much easier.
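A .yxmd workflow is an XML file, so the meta info is already there to be read. As a hedged Python sketch, assuming the values are stored under `Properties/MetaInfo` with child elements such as `Name`, `Description`, `Author` and `SearchTags` (an assumption to check against your own files), this reads them into a dictionary keyed the way hypothetical engine constants might be named:

```python
import xml.etree.ElementTree as ET

META_FIELDS = ("Name", "Description", "Author", "SearchTags")


def read_meta_info(yxmd_text):
    """Return workflow meta info under hypothetical names such as "MetaInfo.Author".
    ASSUMPTION: values live under <Properties><MetaInfo> in the .yxmd XML."""
    root = ET.fromstring(yxmd_text)
    meta = root.find("./Properties/MetaInfo")
    if meta is None:
        return {}
    # findtext returns None for a missing element and "" for an empty one.
    return {f"MetaInfo.{field}": meta.findtext(field) or "" for field in META_FIELDS}


sample = """<AlteryxDocument>
  <Properties>
    <MetaInfo>
      <Name>Sales Load</Name>
      <Author>Jane Doe</Author>
      <SearchTags>sales, etl</SearchTags>
    </MetaInfo>
  </Properties>
</AlteryxDocument>"""
print(read_meta_info(sample)["MetaInfo.Author"])  # Jane Doe
```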


The Limit Conversion Warnings setting allows a minimum of 1 message. Can we set the minimum to 0 to ignore these messages completely?

Perhaps warning messages could get a setting similar to the one for ERROR messages, letting the designer choose Ignore, Warn or Cancel?

ConvError: Imputation (441): Tool #104: No demand: 0.200000000000031 had more precision than a double. Some precision was lost.

ConvError: Summarize (456): Data: 0.360000000004675 had more precision than a double. Some precision was lost.

End: Designer x64: Finished running FP Model - Marquee Crew v3.yxmd in 32.3 seconds with 16 field conversion errors and 4 warnings
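The Ignore/Warn/Cancel proposal, with a limit that may be 0, can be expressed as a small filter over engine messages. A minimal Python sketch: `apply_conv_error_policy` and `WorkflowCancelled` are hypothetical, and it assumes conversion messages can be recognized by their `ConvError:` prefix, as in the log above:

```python
class WorkflowCancelled(Exception):
    """Raised when the policy is "cancel" and a conversion error occurs."""


def apply_conv_error_policy(messages, policy="warn", limit=10):
    """Filter engine messages under a hypothetical Ignore/Warn/Cancel policy.
    Unlike today's setting, `limit` may be 0 (show no conversion messages)."""
    if policy not in ("ignore", "warn", "cancel"):
        raise ValueError(f"unknown policy: {policy}")
    shown, conv_shown = [], 0
    for message in messages:
        if not message.startswith("ConvError:"):
            shown.append(message)  # other messages pass through unchanged
        elif policy == "cancel":
            raise WorkflowCancelled(message)
        elif policy == "warn" and conv_shown < limit:
            shown.append(message)
            conv_shown += 1
        # "ignore", or "warn" once the limit is reached: drop the message
    return shown


log = [
    "ConvError: Imputation (441): Tool #104: No demand: 0.200000000000031 had more precision than a double. Some precision was lost.",
    "ConvError: Summarize (456): Data: 0.360000000004675 had more precision than a double. Some precision was lost.",
    "End: Designer x64: Finished running FP Model - Marquee Crew v3.yxmd in 32.3 seconds with 16 field conversion errors and 4 warnings",
]
print(len(apply_conv_error_policy(log, "warn", limit=0)))  # 1
```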

Thanks,

Mark


Please add official support for newer versions of Microsoft SQL Server and the related drivers.

According to the data sources article for Microsoft SQL Server (https://help.alteryx.com/current/DataSources/SQLServer.htm), and validation via a support ticket, only the following products have been tested and validated with Alteryx Designer/Server:

Microsoft SQL Server

Validated On: 2008, 2012, 2014, and 2016.

- No R2 releases are mentioned (2008 R2, for instance)

- SQL Server 2017, which was released in October of 2017, is notably missing from the list.

- SQL Server 2019, while fairly new (~6 months old), is also missing

This is one of the most popular data sources, and the lack of support for newer versions (especially a 2+ year old product like SQL Server 2017) is hard to fathom.

ODBC Driver for SQL Server/SQL Server Native Client

Validated on ODBC Driver: 11, 13, 13.1

Validated on SQL Server Native Client: 10, 11

- ODBC Driver 17+ is not mentioned, even though it was released in February of 2018. https://docs.microsoft.com/en-us/sql/connect/odbc/windows/release-notes-odbc-sql-server-windows?view...

- SQL Server Native Client is deprecated. It is being replaced by Microsoft OLE DB Driver for SQL Server. However, there is no mention of Microsoft OLE DB Driver for SQL Server. The latest version of this is 18.3.0. https://docs.microsoft.com/en-us/sql/connect/oledb/release-notes-for-oledb-driver-for-sql-server?vie...

Hello,

SQLite is:

- free

- open source

- easy to use

- widely used

https://en.wikipedia.org/wiki/SQLite

It also works well with the Alteryx Input and Output tools. 🙂

However, I think In-Database support for SQLite would be great, especially for learning purposes: you don't have to install anything, so it's really easy to set up.
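Python's standard library illustrates the "nothing to install" point: its `sqlite3` module embeds SQLite. A minimal sketch of the In-Database pattern, where the aggregation runs inside the database and only the summary rows come back; the `sales` table and its columns are made up for illustration:

```python
import sqlite3


def total_by_region(conn):
    # The GROUP BY runs inside the database; only the aggregated rows
    # are returned to the client, which is the idea behind In-Database tools.
    return conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    ).fetchall()


conn = sqlite3.connect(":memory:")  # in-process database: nothing to install
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("east", 10.0), ("west", 5.0), ("east", 2.5)],
)
print(total_by_region(conn))  # [('east', 12.5), ('west', 5.0)]
```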

Best regards,

Simon

Hello all,

Like much software on the market, Alteryx uses third-party components developed by other teams, providers or entities. This is a good thing, since it means standard features at a very low price. However, these components are upgraded very regularly (usually several times a year) while Alteryx doesn't upgrade them... This leads to missing features, performance issues, bugs left uncorrected or, worse, security vulnerabilities.

Among these third-party components:

- cURL (behind the Download tool for APIs): 7.15 (2006) in Alteryx, while the current release is 8.0 (2023)

- Active Query Builder (behind the Visual Query Builder): several years behind

- R: 4.1.3 (March 2022) in Alteryx, while the latest is 4.3 (April 2023)

- Python: 3.8.5 (2020) in Alteryx, while the current one is 3.10 (April 2023)

- etc.

Of course, you can't upgrade every time, but once a year seems like a minimum...

Best regards,

Simon


When developing and/or troubleshooting workflows, I frequently disable the outputs using the checkbox in the Runtime configuration settings to speed up the workflow and prevent sending emails and/or overwriting data in the output sources... however, 9/10 times I forget to turn off this checkbox when I save my workflow back up to the Gallery. This results in countless emails from users to the tune of "I ran the workflow successfully, but there was no output?" 🙂

Would love love love to see some sort of warning notification (similar to the ones already shown for data sources, etc.) when saving to the Gallery if the "Disable All Tools that Write Output" option is selected in the Runtime settings.
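Until such a warning exists, a pre-publish check could inspect the saved workflow. A hedged Python sketch: it assumes the Runtime option is saved in the .yxmd XML as a `DisableAllOutput` element with a `value` attribute under `Properties` (an assumption; verify it against a workflow saved with the option on), and `outputs_disabled` / `pre_publish_warnings` are hypothetical names:

```python
import xml.etree.ElementTree as ET


def outputs_disabled(yxmd_text):
    # ASSUMPTION: the Runtime option is saved as
    # <Properties><DisableAllOutput value="True" /></Properties>.
    node = ET.fromstring(yxmd_text).find("./Properties/DisableAllOutput")
    return node is not None and node.get("value", "").lower() == "true"


def pre_publish_warnings(yxmd_text):
    """Hypothetical check to run before saving a workflow to the Gallery."""
    warnings = []
    if outputs_disabled(yxmd_text):
        warnings.append(
            '"Disable All Tools that Write Output" is enabled: '
            "the published workflow will not write any output."
        )
    return warnings


saved = '<AlteryxDocument><Properties><DisableAllOutput value="True" /></Properties></AlteryxDocument>'
for warning in pre_publish_warnings(saved):
    print("WARNING:", warning)
```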

Thank you!!

NJ

Hello all,

As of today, we can easily copy or duplicate a table with the In-Database tools. This is really useful when you want data from the production environment in the development environment.

But can we really?

Short answer: no, we can't in these cases:

- partitions

- any constraints, such as primary/foreign keys

And even if support for these were implemented, it would still mean setting these parameters manually.

So my proposal is simply a "Clone Table" tool that would clone the table from its SHOW CREATE TABLE statement and just let you specify the destination (base.table).
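The "clone from the DDL" idea can be sketched with SQLite, whose `sqlite_master` table keeps the original `CREATE TABLE` text and so plays the role of `SHOW CREATE TABLE` in MySQL or Hive. A minimal Python sketch, not an Alteryx feature: `clone_table` is hypothetical, the name swap assumes an unquoted or double-quoted table name, SQLite has no partitions, and indexes are stored as separate objects that would need the same treatment:

```python
import re
import sqlite3


def clone_table(conn, source, destination, copy_rows=True):
    """Recreate `source` as `destination` from its stored CREATE TABLE text,
    so primary keys, foreign keys and CHECK constraints come along."""
    row = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?", (source,)
    ).fetchone()
    if row is None:
        raise ValueError(f"no such table: {source}")
    # Replace only the table name right after CREATE TABLE.
    ddl = re.sub(r'^CREATE TABLE "?\w+"?', f'CREATE TABLE "{destination}"', row[0], count=1)
    conn.execute(ddl)
    if copy_rows:
        conn.execute(f'INSERT INTO "{destination}" SELECT * FROM "{source}"')


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, "
    "customer_id INTEGER REFERENCES customers(id), amount REAL CHECK (amount >= 0))"
)
conn.execute("INSERT INTO customers VALUES (1, 'Ada')")
conn.execute("INSERT INTO orders VALUES (10, 1, 9.5)")
clone_table(conn, "orders", "orders_dev")
print(conn.execute("SELECT * FROM orders_dev").fetchall())  # [(10, 1, 9.5)]
```

The destination name is inserted into SQL text directly, so it must come from a trusted source.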

Best regards,

Simon

Hi,

I would like to see Global Variables made available in Alteryx. I have seen that Global Constants are available under the Workflow "User" configuration, but these are constants and must be defined at design time.

How about a Process ID that is auto-generated at run time and available across all the Formula tools used within the workflow?
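A minimal Python sketch of a run-level variable: an ID generated once when a run starts and read by every step, instead of a constant fixed at design time. `new_run_context`, `formula_step` and the `ProcessID` field name are hypothetical:

```python
import uuid
from datetime import datetime, timezone


def new_run_context():
    # Generated once, when a run starts; every step of that run reads the same values.
    return {
        "ProcessID": str(uuid.uuid4()),
        "RunStart": datetime.now(timezone.utc).isoformat(),
    }


def formula_step(records, run_ctx, field="ProcessID"):
    # Stand-in for a Formula tool that writes the run-level variable into each record.
    return [{**record, field: run_ctx[field]} for record in records]


run = new_run_context()
first = formula_step([{"order": 1}], run)
second = formula_step([{"customer": "A"}], run)
print(first[0]["ProcessID"] == second[0]["ProcessID"])  # True
```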


Idea:

I know cache-related ideas have already been posted (cache macros; cache tools), but I would like it if cache were simply built into every tool, similar to the way it is on the Input Tool.

Reasoning:

During workflow development, I'll run the workflow repeatedly, and especially if there is sizeable data or an R tool involved, it can get really time consuming.

Implementation ideas:

I can see where managing cache could be tricky: in a large workflow processing a lot of data, nobody would want to maintain dozens of copies of that data. But there may be ways of just monitoring changes to the workflow in order to know whether something needs to be rebuilt: e.g. suppose I cache a Predictive tool, and then make no changes to any tool preceding it in the workflow... the next time I run, the engine should be able to look at "cache flags" and/or "modified tool flags" to determine where it should start: basically, start at the "furthest-along cache" that has no "modified tools" preceding it.
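The "furthest-along cache" rule described above can be written as a backwards walk from the tool being run. A minimal Python sketch, assuming the workflow is known as a mapping from each tool to its input tools, plus the set of tools that have a cache and the set of tools modified since that cache was written; `plan_run` is hypothetical:

```python
def plan_run(target, parents, cached, modified):
    """Return (tools to execute, tools whose cached output is reused) for `target`.

    parents:  tool -> list of tools feeding it
    cached:   tools that have a cache from a previous run
    modified: tools changed since that cache was written
    """
    memo = {}

    def changed_upstream(tool):
        # True if the tool itself or anything before it was modified.
        if tool not in memo:
            memo[tool] = tool in modified or any(
                changed_upstream(p) for p in parents.get(tool, ())
            )
        return memo[tool]

    to_run, from_cache = set(), set()
    stack = [target]
    while stack:
        tool = stack.pop()
        if tool in to_run or tool in from_cache:
            continue
        if tool in cached and not changed_upstream(tool):
            from_cache.add(tool)  # furthest-along valid cache on this branch: stop here
        else:
            to_run.add(tool)
            stack.extend(parents.get(tool, ()))
    return to_run, from_cache


parents = {"clean": ["input"], "predict": ["clean"], "formula": ["predict"], "output": ["formula"]}
print(plan_run("output", parents, cached={"input", "predict"}, modified={"formula"}))
```

Here only `output` and `formula` re-run and the cached `predict` result is reused; if `clean` had been modified instead, the walk would continue back to the cached `input`.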

Anyway, just a thought.


I can't even count how often I have looked at an Excel, CSV or even YXDB file that I KNEW was generated by Alteryx, but I couldn't remember the workflow. Currently, I have to go through all the workflows I have ever built and see if I can find it.

Theoretically, I could run a text search across all workflows for the output names. The problem: most of my output filenames are generated dynamically at run time.

It would be amazing if Alteryx could simply write the workflow name (maybe even the path) into the metadata of a file.

(Screenshot from Google, as my OS is set to German)

How about we write "This file was created by Create Controlling Reports.yxmd on 2023-02-06 with Alteryx Designer 2021.4.298434" in the 'Comments' field?

This would make it extremely easy to find which workflow generated the file. We could also discuss using the file path instead of the file name, but the path could include the local machine name, which might be GDPR-relevant information.
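A minimal Python sketch of the proposed comment text. It keeps only the workflow file name, not the full path, to sidestep the machine-name concern; `provenance_comment` is hypothetical, and actually writing the string into a file's metadata depends on the format (Excel, CSV and YXDB differ) and is not shown:

```python
from datetime import date
from pathlib import PureWindowsPath


def provenance_comment(workflow_path, designer_version, run_date):
    # Keep only the file name: a full (e.g. UNC) path may reveal the machine name.
    workflow_name = PureWindowsPath(workflow_path).name
    return (
        f'This file was created by "{workflow_name}" on {run_date.isoformat()} '
        f"with Alteryx Designer {designer_version}"
    )


print(provenance_comment(
    r"\\HOST-042\share\reports\Create Controlling Reports.yxmd",
    "2021.4.298434",
    date(2023, 2, 6),
))
# This file was created by "Create Controlling Reports.yxmd" on 2023-02-06 with Alteryx Designer 2021.4.298434
```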

@Community: Is there any additional information that you'd like to see in the metadata?

Best

Alex


In order to perform audit-trail logging, it would be valuable to have 2 new capabilities:

a) environment variables that show the workflow name, file path, version, run start date and time, etc. For any workflows we build, we need a solid audit trail to be SOX compliant, so having this detail available as a data field to write and manipulate is essential

b) A logging component. What would be great is a component that you can drop on a workflow, not connected to anything, which is able to capture the start, end, runtime, version, etc. of a workflow and commit this to any output data format (CSV, ODBC, etc.). This logging tool would need to capture the full runtime, so it would need to be the last thing that runs (which means it may need to exist in parallel to the main workflow in some way). This is not currently possible with a complex workflow with outputs, because there is no way to identify when the entire workflow ended, or its runtime (since Output tools don't have an outgoing connection to pass the flow of control on and catch the final end time).

Again, both of these are necessary to meet audit requirements for workflows and production-quality ETLs for BI data warehouses.
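The logging component in (b) behaves like a wrapper: it records the start, waits for everything inside it to finish, then writes one audit row. A minimal Python sketch using a context manager; `audit_log` and the CSV layout are hypothetical, and the workflow name and version would come from the environment variables requested in (a):

```python
import csv
import time
from contextlib import contextmanager
from datetime import datetime, timezone


@contextmanager
def audit_log(csv_path, workflow_name, version):
    """Append one audit row (name, version, start, end, runtime, status) per run."""
    started_at = datetime.now(timezone.utc)
    start = time.perf_counter()
    status = "Success"
    try:
        yield
    except Exception:
        status = "Error"
        raise
    finally:
        # Runs only after everything inside the `with` block has finished,
        # outputs included, so the end time covers the whole run.
        runtime = time.perf_counter() - start
        with open(csv_path, "a", newline="") as f:
            csv.writer(f).writerow([
                workflow_name, version, started_at.isoformat(),
                datetime.now(timezone.utc).isoformat(), f"{runtime:.3f}", status,
            ])


with audit_log("audit_log.csv", "Daily Sales Load", "1.2"):
    total = sum(range(1_000_000))  # stand-in for the workflow's work
```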


Hello all,

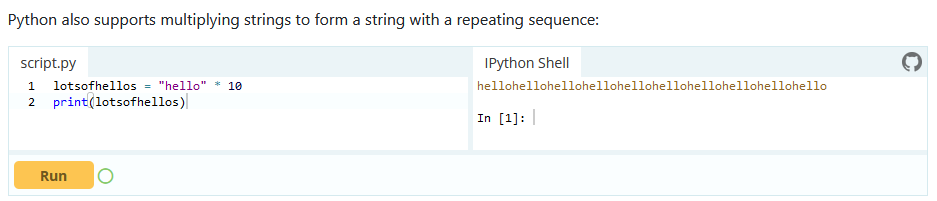

I'm currently learning the Python language, and there is this cool feature: you can multiply a string.

Pretty cool, no? I would like the same syntax to work in Alteryx.
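For reference, the Python behavior being described, where `*` repeats a string:

```python
# Multiplying a string by an integer n repeats it n times.
print("ab" * 3)        # ababab
print("-" * 10)        # ----------
print(repr("ab" * 0))  # '' (a count of 0 or less gives an empty string)
```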

Best regards,

Simon

In some of our larger workflows, it's sometimes tedious to run the whole workflow just to see some data after adding something at the beginning of it. Running it and stopping it as soon as the tool gets a green border is sometimes an option.

It would be convenient to have an option in the context menu to run a workflow only up to a specific tool.

In effect, only this specific tool has an output visible for inspection, and only the streams necessary for this tool have been run. Everything else is ignored, and I'm fine with not seeing data for the other tools.

This would make developing small parts of a larger workflow much faster and more convenient.

Regards

Christopher

PS: Yes, I can put everything else in a container and deactivate it. But a straightforward way that doesn't involve turning containers on and off would be preferable, in my opinion. (I think KNIME has something similar.)
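"Run until this tool" comes down to running only the target tool's ancestors, in dependency order. A minimal Python sketch, assuming the workflow is given as a mapping from each tool to its input tools; `run_until` and `run_tool` are hypothetical, and `graphlib` requires Python 3.9+:

```python
from graphlib import TopologicalSorter


def run_until(target, parents, run_tool):
    """Run `target` and only the tools it depends on, in dependency order."""
    needed, stack = set(), [target]
    while stack:  # collect every ancestor of the target
        tool = stack.pop()
        if tool not in needed:
            needed.add(tool)
            stack.extend(parents.get(tool, ()))
    graph = {tool: parents.get(tool, ()) for tool in needed}
    executed = []
    for tool in TopologicalSorter(graph).static_order():
        run_tool(tool)
        executed.append(tool)
    return executed


parents = {
    "join": ["input_a", "input_b"],
    "formula": ["join"],
    "output": ["formula"],
    "summary": ["input_b"],
}
print(run_until("join", parents, run_tool=lambda tool: None))
```

Downstream tools (`formula`, `output`) and side branches (`summary`) are never touched.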


Hello,

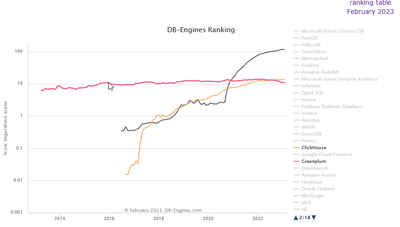

Like MonetDB or Vertica, ClickHouse is a column-store database, and it claims to be the fastest in the world. It's available in the cloud (like Snowflake) and on Linux and macOS (where it's free and open-source). It's also very well ranked among analytics databases (https://db-engines.com/en/system/ClickHouse), and supporting it would be a good differentiator from competitors.

https://clickhouse.com/

It has become more popular than Greenplum, which is supported (in the chart: black = Snowflake, red = Greenplum, orange = ClickHouse).

Best regards,

Simon

We see canvases every day where dozens of fields are brought into a canvas or a macro but never used, and this just creates slowness for no benefit.

Given that one of the selling features of Alteryx is the speed of processing - could we look at three improvements to the Alteryx engine & designer:

- easiest: Keep track of every field brought in / created - and if they are not used in an output, then throw a warning at the end of the execution process

- For example - you bring in fields a,b,c - you create field d and e during the flow in formula tools

- Field d is never used as an input to any filters or formulae - and it doesn't appear on any output - so it's just waste

- Field a and b are part of the output, so they are fine

- Field c is never used at all - so that's just waste.

- Field e is used to filter the records before output - so this one is fine.

- So we've immediately found 2 fields that we can eliminate and make this canvas faster

- Medium: Ignore the unused fields in the execution engine

- Hardest: Tell users in Alteryx Designer that a field is unused by doing a lineage analysis of the tools, just like software environments such as Visual Studio do. This may require changes to the engine and to Designer, because each tool would need to capture the full detail of the fields referenced in its configuration in order to do this trace.
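The "easiest" and "medium" options rest on the same check: start from the fields that are output or used by a filter, and walk back through the formulas that produce them. A minimal Python sketch of that backwards pass on the example above; `find_unused_fields` is hypothetical, and it assumes (for illustration only) that d is computed from a and e from b, and that no formula overwrites an existing field:

```python
def find_unused_fields(input_fields, formulas, filters, output_fields):
    """formulas: created field -> fields its expression reads
    filters:  list of field collections that filter conditions read
    Returns the fields that never reach an output or a filter."""
    needed = set(output_fields)
    for used in filters:
        needed |= set(used)
    # Walk backwards: whatever a needed field is computed from is needed too.
    pending = list(needed)
    while pending:
        field = pending.pop()
        for source in formulas.get(field, ()):
            if source not in needed:
                needed.add(source)
                pending.append(source)
    all_fields = list(input_fields) + list(formulas)
    return [field for field in all_fields if field not in needed]


print(find_unused_fields(
    input_fields=["a", "b", "c"],
    formulas={"d": ["a"], "e": ["b"]},  # hypothetical expressions
    filters=[["e"]],
    output_fields=["a", "b"],
))  # ['c', 'd']
```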


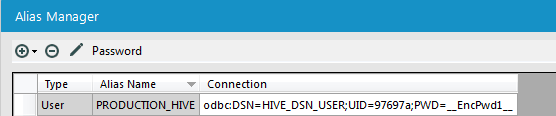

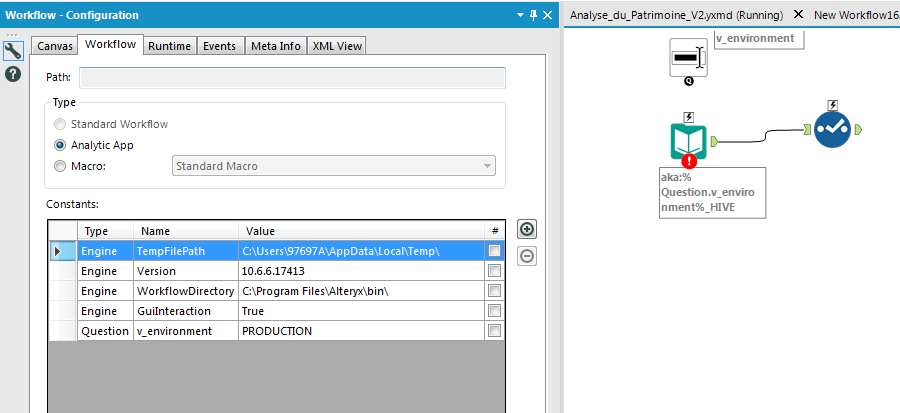

Hello,

We have several environments in our organization: dev, recept, production.

To make switching between them safe, we intend to create several connections (standard aliases), such as

PRODUCTION_HIVE

DEV_HIVE

RECEPT_HIVE

In our workflows, we want to use aka:%Question.v_environment%HIVE

Sadly, this solution does not work, even though a default value is set.
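The intent is to pick the connection alias at run time. A minimal Python sketch of that resolution step, outside Alteryx: the alias names and the "aka:" prefix come from the post, while `resolve_alias` and the `ALTERYX_ENVIRONMENT` environment variable are hypothetical:

```python
import os

# Alias names from the post; as in the post, an alias is referenced with "aka:".
ALIASES = {"PRODUCTION_HIVE", "DEV_HIVE", "RECEPT_HIVE"}


def resolve_alias(environment, suffix="_HIVE"):
    """Build the alias name for an environment and fail loudly if it does not exist."""
    alias = f"{environment.strip().upper()}{suffix}"
    if alias not in ALIASES:
        raise KeyError(f"no connection alias named {alias}")
    return f"aka:{alias}"


if __name__ == "__main__":
    # Hypothetical: the environment comes from a variable set per machine or per run.
    print(resolve_alias(os.environ.get("ALTERYX_ENVIRONMENT", "dev")))
```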


Hi UX interested parties,

Here are some ideas for you to consider:

1. These lines are BORING and UNINFORMATIVE. I'd like to understand (pic = 1,000 words) more when looking at a workflow.

- A line could communicate:

- Qty of Records

- Size of Data

- Is the data SORTED

- What sort order

- Quality of Data

If you look at lines A, B and C in the picture above, nothing is communicated. Line weight, line color, line type, start and end markers: these are all potential ways we could see a picture of the data without having to add Browse tools everywhere. If we hover over a data connection, even more information could appear (e.g. # of records, size of file) without having to toggle the configuration parameters.

2. Wouldn't it be nice not to have to RUN a workflow to know its last SAVED metadata (run)? I'd like to open a "saved" workflow and know what to expect when I run it. Heck, how long the beast takes to run is something we never see unless we run it.

3. I'd like to set the metadata to display SORT keys and order (Sort 1 Asc, Sort 2 Desc, ...). This sort information is very helpful for the engine, and I'll likely post about that thought. As a preview: when a JOIN tool has sorted data and one of the anchors is at EOF, why do we need to keep reading from the other anchor? There won't be another matched record on the J anchor. In my example above, we don't ask for the L/R outputs, so why worry about the rest of the join?

4. Have you ever seen a map (online) that didn't display watermark information? I think that the canvas experience should allow for a default logo (like mine above, but transparent) in the lower right corner of the canvas that is visible at all times. Having the workflow name at the top in a tab is nice, but having it display as a watermark is handy.

5. Once the workflow has RUN, all anchors are the same color. How about providing GREY/White or something else on EMPTY anchors instead of the same color? This might help newbies find issues in JOIN configuration too.

6. If the tool has ERRORs, you put a RED exclamation mark on it. I despise warnings, but how about a puke-colored question mark? With conversion errors, the lines could be marked to show the relative quantity of conversion errors (system messages have a limit).
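The early exit in point 3 is a classic property of a sort-merge join. A minimal Python sketch, assuming both inputs are already sorted ascending on the join key and only the J (inner) output is wanted; `merge_join_inner` is hypothetical and returns how many rows of each side it consumed, to show that reading stops once one side is exhausted:

```python
def merge_join_inner(left, right, key):
    """Inner (J-only) join of two lists already sorted ascending on key(row).
    Returns (joined pairs, rows consumed from left, rows consumed from right)."""
    i = j = 0
    joined = []
    # Stop as soon as either side reaches EOF: no further match is possible.
    while i < len(left) and j < len(right):
        lk, rk = key(left[i]), key(right[j])
        if lk < rk:
            i += 1
        elif lk > rk:
            j += 1
        else:
            # Find the run of equal keys on the right, then pair it with
            # every left row that has the same key.
            j_end = j
            while j_end < len(right) and key(right[j_end]) == lk:
                j_end += 1
            while i < len(left) and key(left[i]) == lk:
                joined.extend((left[i], r) for r in right[j:j_end])
                i += 1
            j = j_end
    return joined, i, j


left = [(1, "a"), (2, "b")]
right = [(1, "x"), (1, "y"), (3, "z"), (4, "w"), (5, "v")]
joined, used_left, used_right = merge_join_inner(left, right, key=lambda row: row[0])
print(len(joined), used_left, used_right)  # 2 2 2
```

With streamed inputs, the right-hand rows after the point where the left side ran out (keys 4 and 5 here) would never be read.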

Just a few top of mind things to consider ....

Cheers,

Mark


Here's a reason to get excited about AMP! Create a runtime setting that gets Alteryx working even faster.

When you configure a file input, you see 100 records. Imagine the delight if, after you run your workflows, all Input tools were automatically cached. You would run so much faster.

Now think of the absolute delight if, even before you run the workflow, a configured Input tool triggered a background read of the input data. Whether it is a new workflow or an existing one you just opened, that read could start before you press the Run button.

What do you think 🤔?
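A minimal Python sketch of the "read ahead" idea: the read starts in a background thread as soon as an input is configured, and the run only waits if that read has not finished yet. `PrefetchingInput` is hypothetical and reads a CSV file; a real implementation would also have to invalidate the data when the file or the configuration changes:

```python
import csv
from concurrent.futures import ThreadPoolExecutor


class PrefetchingInput:
    """Hypothetical input that starts reading as soon as it is configured."""

    _pool = ThreadPoolExecutor(max_workers=2)  # shared background readers

    def __init__(self, path):
        self.path = path
        # The read starts now, in the background, before anything is run.
        self._future = self._pool.submit(self._read)

    def _read(self):
        with open(self.path, newline="") as f:
            return list(csv.DictReader(f))

    def records(self):
        # At run time: returns at once if the read has finished, otherwise waits for it.
        return self._future.result()


with open("prefetch_demo.csv", "w", newline="") as f:
    f.write("id,name\n1,a\n2,b\n")

source = PrefetchingInput("prefetch_demo.csv")  # "configuring" the input
# ... the user keeps building the workflow here ...
print(source.records())  # [{'id': '1', 'name': 'a'}, {'id': '2', 'name': 'b'}]
```

Because the future keeps its result, later runs reuse the same records, which also covers the "automatically cached" part of the idea.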
