Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equal number of images);

> and finally, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
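The second and third suggestions can be illustrated with a minimal Python sketch. It is not how the Image Recognition tool works internally, and `balanced_split` and `gray_to_rgb` are hypothetical helpers. The split first downsamples every label to the size of the rarest label and then divides the images into training and test sets. The grayscale conversion simply copies the single channel into R, G and B, which is a common workaround when a pre-trained model expects three channels.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (item, label) pairs so that every label contributes the same
    number of items to training and to test. Each label is downsampled to
    the size of the rarest label first."""
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    per_label = min(len(items) for items in by_label.values())
    n_train = int(per_label * train_fraction)
    rng = random.Random(seed)  # fixed seed -> reproducible split

    train, test = [], []
    for label, items in sorted(by_label.items()):
        chosen = rng.sample(items, per_label)
        train.extend((item, label) for item in chosen[:n_train])
        test.extend((item, label) for item in chosen[n_train:])
    return train, test


def gray_to_rgb(pixels):
    """Turn a 2-D grayscale image (rows of 0-255 ints) into RGB triples by
    repeating the single channel three times."""
    return [[(v, v, v) for v in row] for row in pixels]


# 10 "cat" images and 4 "dog" images: both labels are cut down to 4.
samples = [(f"cat_{i}.png", "cat") for i in range(10)] + [(f"dog_{i}.png", "dog") for i in range(4)]
train, test = balanced_split(samples)
print(len(train), len(test))  # 3 per label in train, 1 per label in test
```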

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Access to only MD5 hashes via MD5_ASCII(String) and MD5_UNICODE(String) found under string functions is limiting. Is there a way to access other hashing algorithms, ideally via the crypto algorithms from OpenSSL or the .NET framework?

- https://msdn.microsoft.com/en-us/library/system.security.cryptography.hashalgorithm(v=vs.110).aspx

- https://wiki.openssl.org/index.php/Command_Line_Utilities#Signing_.2F_Digest

Hash functions are very useful tools. There are many different types of hashes, and each has tradeoffs that suit different uses, ranging from error checking, privacy shielding, password protection, forensic analysis and message authentication (HMAC) to much more. See: http://stackoverflow.com/questions/800685/which-cryptographic-hash-function-should-i-choose

- For workflows with data containing existing hashes, being able to consistently create hashes from non-hashed data for comparison is useful.

- Hashes are also useful because they are the same outside the Alteryx environment. They can be used to confirm correct operation of a production system or a third party's external process.

Access to only MD5 hashes via MD5_ASCII(String) and MD5_UNICODE(String) found under string functions in the formula tool is a start, but quite limiting.

Non-cryptographic hashes and checksums, such as MurmurHash or CRC, would also be useful. https://en.wikipedia.org/wiki/List_of_hash_functions

Having the implementation benefit from hardware acceleration (AES-NI / CUDA) would be a great plus for high volume applications.

For reference, these are some hash algorithms that could be useful in workflows:

- SHA-1
- SHA-256
- Whirlpool
- xxHash
- MurmurHash
- SpookyHash
- CityHash
- Checksum
- CRC-16
- CRC-32
- CRC-32 MPEG-2
- CRC-64
- BLAKE-256
- BLAKE-512
- BLAKE2s
- BLAKE2b
- ECOH
- FSB
- GOST
- Grøstl
- HAS-160
- HAVAL
- JH
- MD2
- MD4
- MD6
- RadioGatún
- RIPEMD
- RIPEMD-128
- RIPEMD-160
- RIPEMD-320
- SHA-224
- SHA-384
- SHA-512
- SHA-3 (originally known as Keccak)
- Skein
- Snefru
- Spectral Hash
- Streebog
- SWIFFT
- Tiger
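The following short Python sketch makes the request concrete. It uses only the standard library (`hashlib` and `zlib`) and covers several algorithms from the list above. It is an illustration outside Alteryx, not an existing Alteryx function, and `digest` is a hypothetical helper. The text is encoded as UTF-8 here, which is an assumption. MD5_ASCII and MD5_UNICODE may hash different byte encodings, so results for non-ASCII strings can differ.

```python
import hashlib
import zlib

ALGORITHMS = ["md5", "sha1", "sha224", "sha256", "sha384", "sha512",
              "sha3_256", "blake2b", "blake2s", "crc32"]


def digest(text: str, algorithm: str) -> str:
    """Return the hex digest of `text` (encoded as UTF-8) for one algorithm."""
    data = text.encode("utf-8")
    if algorithm == "crc32":
        # zlib.crc32 returns an unsigned int; format it as 8 hex digits.
        return format(zlib.crc32(data), "08x")
    return hashlib.new(algorithm, data).hexdigest()


for name in ALGORITHMS:
    print(f"{name:>8}: {digest('Alteryx', name)}")
```

The same input always yields the same digest in any tool that implements the algorithm. This is what makes comparison against a production system or a third party's external process possible.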

Labels: Category Data Investigation, Category Parse, Category Transform, Desktop Experience

Another seemingly minor request that would make life a little easier and clean up workflows. I often rename the Name/Value fields output by a Transpose tool to something more descriptive. Currently this requires a Select tool after each Transpose. It would be nice to have a couple of text boxes at the bottom of the Transpose configuration to rename Name and Value directly in the tool itself.
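Here is a minimal Python sketch of the requested behavior: a wide-to-long transpose whose output column names are parameters instead of the fixed Name/Value. `transpose`, `name_field` and `value_field` are hypothetical names chosen for illustration. They are not Alteryx configuration options.

```python
def transpose(rows, key_fields, name_field="Name", value_field="Value"):
    """Wide-to-long reshape: one output row per (record, data field).

    key_fields are carried through unchanged; every other field becomes a
    row whose field name goes into `name_field` and whose value goes into
    `value_field`, so no separate rename step is needed."""
    out = []
    for row in rows:
        keys = {k: row[k] for k in key_fields}
        for field, value in row.items():
            if field not in key_fields:
                out.append({**keys, name_field: field, value_field: value})
    return out


sales = [{"Store": "A", "Jan": 10, "Feb": 12}]
for r in transpose(sales, ["Store"], name_field="Month", value_field="Sales"):
    print(r)
# {'Store': 'A', 'Month': 'Jan', 'Sales': 10}
# {'Store': 'A', 'Month': 'Feb', 'Sales': 12}
```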

Labels: Category Transform, Desktop Experience

PLEASE add a count function to Formula/Multi-Row Formula/Multi-Field Formula!

I have searched for alternatives, but I am confused about how to store the total row count from Summarize or Count Records in a variable that can then be used within a Formula tool. It should not be that difficult to add equivalents of R's nrow() and ncol().
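The requested row and column counts are cheap to compute once the whole data set is available. The sketch below uses hypothetical `nrow`, `ncol` and `with_row_count` helpers that mirror R's functions. `with_row_count` attaches the total to every record so that a per-row formula can use it. This is roughly what a Count Records tool followed by an Append Fields tool achieves in a workflow today.

```python
def nrow(rows):
    """Number of records, like R's nrow()."""
    return len(rows)


def ncol(rows):
    """Number of fields, like R's ncol(); 0 for an empty data set."""
    return len(rows[0]) if rows else 0


def with_row_count(rows, field_name="RowCount"):
    """Copy each record and add the total record count as a new field."""
    total = nrow(rows)
    return [{**row, field_name: total} for row in rows]


data = [{"Region": "East", "Sales": 30},
        {"Region": "West", "Sales": 50},
        {"Region": "North", "Sales": 20}]
counted = with_row_count(data)
print(nrow(data), ncol(data))  # 3 2
print(counted[0])              # {'Region': 'East', 'Sales': 30, 'RowCount': 3}
```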

Labels: Category Input Output, Category Transform, Data Connectors, Desktop Experience


We discovered that the 'Select' tool does not raise a warning when data is truncated, whereas the 'Formula' tool does raise the relevant warning. It would be good to have consistent logging of warnings/errors across all tools, or at least across tools used for the same purpose. For example, when Alteryx is used as an ETL tool, the 'Select' and 'Formula' tools are both commonplace.

Without this, it is difficult to rely on Alteryx to flag truncation errors/warnings consistently in a workflow that moves data from source to target. It also adds the overhead of building custom logic to capture such data issues, and that logic differs from tool to tool. For example, when data passes through the 'Formula' tool, no custom truncation logging is needed, but when the same data passes through a 'Select' tool, truncation has to be captured manually.
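The consistency being asked for can be sketched in Python as a Select-style resize that logs a warning for every value it truncates instead of truncating silently. `select_resize` and its message format are hypothetical. The sketch only illustrates the desired behavior, not how Alteryx actually logs messages.

```python
def select_resize(rows, sizes):
    """Resize string fields to the given maximum lengths.

    Returns the resized records plus a list of warning messages, one per
    truncated value, so truncation is reported instead of happening silently."""
    resized, messages = [], []
    for record_no, row in enumerate(rows, start=1):
        new_row = dict(row)
        for field, size in sizes.items():
            value = row.get(field)
            if isinstance(value, str) and len(value) > size:
                new_row[field] = value[:size]
                messages.append(
                    f"Record #{record_no}: {field} truncated from "
                    f"{len(value)} to {size} characters")
        resized.append(new_row)
    return resized, messages


rows = [{"City": "Nice"}, {"City": "Saint-Étienne"}]
out, log = select_resize(rows, {"City": 8})
print(out)  # [{'City': 'Nice'}, {'City': 'Saint-Ét'}]
print(log)  # ['Record #2: City truncated from 13 to 8 characters']
```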

Labels: Category Data Investigation, Category Preparation, Category Transform, Desktop Experience

I'd like to see a tool that takes an input and sends it in different directions (similar to the Formula tool), but with many options, based on filters and/or formulas and/or fields.

Sometimes I need to perform actions on parts of my data, or perform different actions depending on whether the data matches certain criteria, and then union it back together later.

Right now, the Filter tool only allows True or False outputs. With more customization, we could optimize our workflows instead of chaining Filter tools together like nested if/then statements.

So either the Filter tool could offer more options than True/False, with an unlimited number of outputs, or the Join Multiple tool could be flipped, as shown below.

I envision something that says:

Split workflow:

- By Field: Field Name (perhaps with summarize functions such as min/max, etc.)
- By Formula (same configuration as current)
- By Filter
  - Field
  - Operator
  - Variable
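The envisioned Split tool maps naturally onto a routing function. This minimal Python sketch assumes first-match semantics: each record goes to the first output whose condition it meets, like an if/elseif chain, and a catch-all output receives the rest. `split_by_condition` and `split_by_field` are hypothetical names.

```python
def split_by_condition(rows, routes, default="Other"):
    """Route each record to the first named output whose predicate is true.

    routes is an ordered list of (output_name, predicate) pairs; records
    that match nothing go to the `default` output."""
    outputs = {name: [] for name, _ in routes}
    outputs[default] = []
    for row in rows:
        for name, predicate in routes:
            if predicate(row):
                outputs[name].append(row)
                break
        else:  # no predicate matched
            outputs[default].append(row)
    return outputs


def split_by_field(rows, field):
    """One output per distinct value of `field` (the "By Field" option)."""
    outputs = {}
    for row in rows:
        outputs.setdefault(row[field], []).append(row)
    return outputs


orders = [{"Region": "East", "Amount": 5},
          {"Region": "West", "Amount": 50},
          {"Region": "North", "Amount": 1}]
by_rule = split_by_condition(orders, [
    ("East", lambda r: r["Region"] == "East"),
    ("Large", lambda r: r["Amount"] >= 10),
])
print({name: len(rs) for name, rs in by_rule.items()})  # {'East': 1, 'Large': 1, 'Other': 1}
print(sorted(split_by_field(orders, "Region")))          # ['East', 'North', 'West']
```

An "all matching outputs" variant is equally possible. First-match was chosen here because it mirrors the nested if/then chains of Filter tools described above.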

Labels: Category Interface, Category Transform, Desktop Experience

It would be great if Alteryx could introduce a tool implementing a Decision Model and Notation (DMN)-style Decision Table as an option to remove business logic from a workflow.

Arbitrary business rules are frequently implemented against datasets in Alteryx workflows before actual processing occurs. The implementation of complex business logic frequently results in a spider web of join, filter, formula, and union tools.
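A decision table keeps the rules as data instead of as a web of tools. This minimal Python sketch assumes DMN's "first" hit policy, where rules are checked top to bottom and the first matching rule wins. The rules, fields and `decide` helper are hypothetical examples. In practice, the table could be loaded from a file maintained by business users, so the workflow itself would not change when the rules do.

```python
def decide(table, record):
    """Return the outputs of the first rule whose conditions all hold.

    Each rule is (conditions, outputs): conditions maps an input field to a
    test function; a rule with no conditions always matches."""
    for conditions, outputs in table:
        if all(test(record[field]) for field, test in conditions.items()):
            return outputs
    return None  # no rule matched


discount_table = [
    ({"Tier": lambda t: t == "Gold", "Amount": lambda a: a >= 1000}, {"Discount": 0.15}),
    ({"Tier": lambda t: t == "Gold"}, {"Discount": 0.10}),
    ({"Amount": lambda a: a >= 1000}, {"Discount": 0.05}),
    ({}, {"Discount": 0.0}),  # catch-all default rule
]

orders = [{"Tier": "Gold", "Amount": 1500},
          {"Tier": "Gold", "Amount": 200},
          {"Tier": "Silver", "Amount": 1200},
          {"Tier": "Silver", "Amount": 50}]
for order in orders:
    print(order, decide(discount_table, order))
```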

Labels: Category Input Output, Category Transform, Data Connectors, Desktop Experience

Can you add the flexibility to access fields by position or index, like a[1], a[2], ... a[n], where a[1] is the first column? An option where max[a] gives the last column and min[a] gives the first column would also help. This way, we could easily subset the dataset. In most cases we handle survey data with thousands of columns, and when we need to select certain columns, we have to tick each column's checkbox manually, which is painful for hundreds of columns. It would be nice to have an option to select by ID or index. This would also be useful with the Multi-Field Formula tool when many fields are involved, because there is currently no way to write formulas based on the field name.

Regards,

Jeeva.
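Position-based selection can be sketched in Python with 1-based positions, as in the request: `a[1]` is the first column, and the first and last positions play the role of min[a] and max[a]. `select_by_position` and `columns_between` are hypothetical helpers, and field order is taken from the first record.

```python
def field_names(rows):
    """Field names in their original order, taken from the first record."""
    return list(rows[0].keys()) if rows else []


def columns_between(rows, start, end):
    """Names of fields start..end (1-based, inclusive), i.e. a[start]..a[end]."""
    return field_names(rows)[start - 1:end]


def select_by_position(rows, positions):
    """Keep only the fields at the given 1-based positions."""
    names = field_names(rows)
    chosen = [names[p - 1] for p in positions]
    return [{name: row[name] for name in chosen} for row in rows]


survey = [{"RespondentID": 1, "Q1": "Yes", "Q2": "No", "Q3": "Yes"},
          {"RespondentID": 2, "Q1": "No", "Q2": "No", "Q3": "Yes"}]
n = len(field_names(survey))               # position of the last field (max[a])
print(columns_between(survey, 2, n))       # ['Q1', 'Q2', 'Q3']
print(select_by_position(survey, [1, n]))  # first and last field only
```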

Labels: Category Transform, Desktop Experience

I usually build some checks into my workflows; the simplest are row counts at various points. I use Count Records tools, rename the outputs using a Select tool, Union them, and use a Message tool to calculate and show deltas. I want to be able to control the output field name of the Count Records tool the same way I can control the output field name of a Record ID tool.
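The check described above (counts at several points, then deltas) can be sketched in Python with the count field named by the caller, which is the control being requested. `row_count_report`, the checkpoint names and `count_field` are hypothetical.

```python
def row_count_report(checkpoints, count_field="RecordCount"):
    """checkpoints: ordered list of (name, rows) pairs taken at points in a
    workflow. Returns one record per checkpoint with its count and the
    change from the previous checkpoint (None for the first one)."""
    report, previous = [], None
    for name, rows in checkpoints:
        count = len(rows)
        delta = None if previous is None else count - previous
        report.append({"Checkpoint": name, count_field: count, "Delta": delta})
        previous = count
    return report


raw = list(range(100))
filtered = [x for x in raw if x % 4]   # drops multiples of 4 -> 75 records
joined = filtered[:70]                 # pretend 5 records failed to join
for line in row_count_report([("Input", raw), ("After Filter", filtered),
                              ("After Join", joined)], count_field="Rows"):
    print(line)
```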

Labels: Category Transform, Desktop Experience

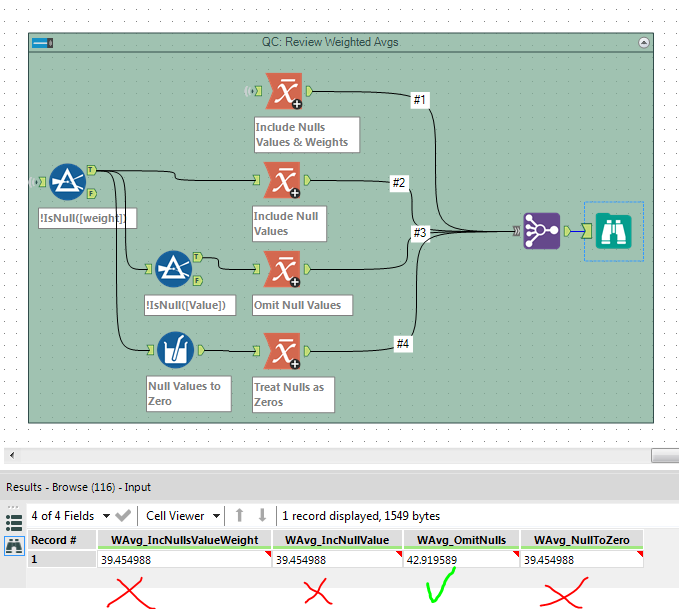

Improve the Help documentation or in-tool options for handling null values in statistical tools like Weighted Average or Linear Regression. For instance, add a checkbox to remove records with null values, or at least warn users.

In the process of learning to perform linear regression in RStudio and Alteryx, I came across differing outputs depending on how null values were handled. Take the Weighted Average tool, for example.

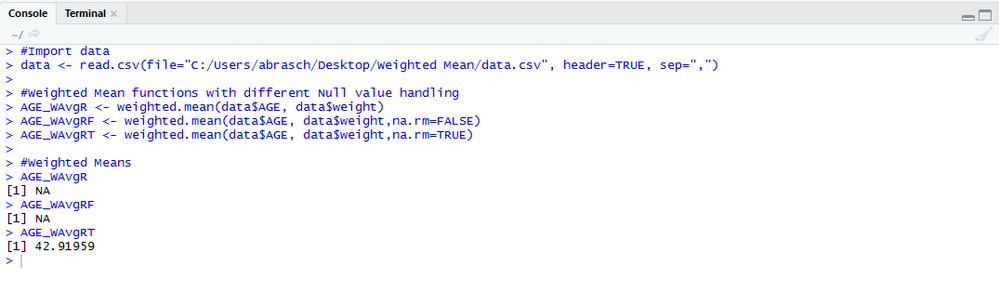

In R, the weighted.mean function with na.rm = TRUE treats null values in the variable of interest as if they were not there, and their weights are dropped as well. If the user does not specify na.rm = TRUE and null values exist, the result is NA. If any null values exist in the weight field, the result is NA.

Since I am more familiar with Alteryx, I originally did the data preparation, including calculating the weighted means, in Alteryx. When comparing these weighted means with those generated in R, I found that Alteryx treats the null values as zeros (i.e. includes them in the calculation). The user would have to know this is incorrect and filter out the null values first. See the screenshot examples.

This is also the case with the Linear Regression tool: if null values are not removed before the regression, the results are wildly different. Perhaps this is known to more experienced users and statisticians, but this incorrect usage would have gone unnoticed had I not cross-checked with RStudio.
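The difference described above can be reproduced with a short Python sketch. `weighted_mean` is a hypothetical helper. With `drop_nulls=True`, it skips records whose value is null, like R's `weighted.mean(..., na.rm = TRUE)`. With `drop_nulls=False`, it substitutes zero for null values while keeping their weights, which models the behavior reported above for Alteryx. Null weights are not handled in this sketch.

```python
def weighted_mean(values, weights, drop_nulls=True):
    """Weighted mean where None marks a null value.

    drop_nulls=True : records with a null value are ignored (weight too).
    drop_nulls=False: null values count as 0 but keep their weight."""
    if drop_nulls:
        pairs = [(v, w) for v, w in zip(values, weights) if v is not None]
    else:
        pairs = [(0 if v is None else v, w) for v, w in zip(values, weights)]
    total_weight = sum(w for _, w in pairs)
    if total_weight == 0:
        return None  # weights sum to zero: the mean is undefined
    return sum(v * w for v, w in pairs) / total_weight


values = [10, None, 30]
weights = [1, 1, 2]
print(weighted_mean(values, weights))                    # (10*1 + 30*2) / 3 = 23.33...
print(weighted_mean(values, weights, drop_nulls=False))  # (10 + 0 + 60) / 4 = 17.5
```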

Labels: Category Predictive, Category Transform, Desktop Experience

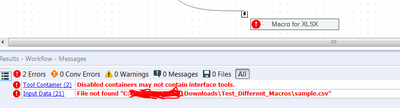

A disabled container throws errors if it contains any Interface tools. It should not throw any error, because the user has intentionally disabled the container.

Labels: Category Apps, Category Input Output, Category Transform, Data Connectors

Would it be possible to add some additional options to the Running Total tool, in particular average (max and standard deviation may also be useful)? Alternatively, could you create a rolling average tool where you can group by multiple fields and choose a rolling window of the last x rows? I know this can be done with the Multi-Row Formula tool and with the Moving Summarize tool (http://www.chaosreignswithin.com/2014/12/moving-summarize.html). However, the former is capped at 10,000 rows, and Alteryx crashes on my Mac when I click on the Multi-Row Formula tool with 6,000 rows. It also takes several hours to analyze one file, and I would like a solution that I can run daily. I also need to be able to compute a rolling average over the last 11,999 rows, as this would be the last 20 minutes of data from a 10 Hz GPS unit. I think this would be a great tool.
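A grouped rolling average does not need to revisit the whole window for each row. Keeping a running total per group and subtracting the value that falls out of the window makes each row O(1). Below is a minimal Python sketch; `rolling_average` and the output field name are hypothetical. At 10 Hz, 20 minutes is 20 × 60 × 10 = 12,000 rows, i.e. the current row plus the previous 11,999, so `window=12_000` would match the case above.

```python
from collections import defaultdict, deque


def rolling_average(rows, value_field, group_fields, window, out_field="RollingAvg"):
    """Append the mean of the last `window` values (current row included)
    within each group, processing rows in their existing order."""
    buffers = defaultdict(deque)   # group key -> values currently in the window
    totals = defaultdict(float)    # group key -> sum of those values
    out = []
    for row in rows:
        key = tuple(row[f] for f in group_fields)
        value = row[value_field]
        buffers[key].append(value)
        totals[key] += value
        if len(buffers[key]) > window:
            totals[key] -= buffers[key].popleft()  # value leaving the window
        out.append({**row, out_field: totals[key] / len(buffers[key])})
    return out


readings = [{"Unit": "A", "Speed": 1.0}, {"Unit": "A", "Speed": 2.0},
            {"Unit": "B", "Speed": 10.0}, {"Unit": "A", "Speed": 3.0},
            {"Unit": "A", "Speed": 4.0}]
for r in rolling_average(readings, "Speed", ["Unit"], window=2):
    print(r["Unit"], r["RollingAvg"])
# A 1.0 / A 1.5 / B 10.0 / A 2.5 / A 3.5
```

Over very long runs, the running floating-point total can accumulate rounding error. Recomputing each group's sum periodically is a simple safeguard.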

Labels: Category Transform, Desktop Experience

Reduce mouse movement required by bringing up the "Add" menu at the point of the cursor when right-clicking on a field in the Fields window.

Labels: Category Transform, Desktop Experience

Idea:

Some well-known scoring methods use optimally binned variables for added robustness. Let's add this capability to Alteryx.

Rationale:

Here's a basic link explaining why: http://documents.software.dell.com/statistics/textbook/optimal-binning

Current status in Alteryx, as far as I'm aware:

The Tile tool (or the Multi-Field Binning tool, which performs the same task as the Tile tool on multiple fields) splits variables using 5 methods:

- Equal Records or Intervals or Sums
- Smart Tile
- Unique Value
- Manual

Unfortunately, "equal something" binnings are a bad idea, because the values are categorized "blindly", irrespective of the effect on the predictive power of the models.

What to do:

What's needed is to bin both numerical and categorical variables optimally, so that the Weight of Evidence (WoE) values follow a monotonically increasing or decreasing pattern, or at most a V- or U-shaped "convex" structure.
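The monotonicity criterion can be checked with a short Python sketch. It assumes the common convention WoE = ln(share of goods in the bin / share of bads in the bin); some texts use the inverse ratio, which only flips the sign. The bins and counts are made up for illustration, and `woe_by_bin` and `is_monotone` are hypothetical helpers. A bin with zero goods or zero bads has no finite WoE, so the sketch raises an error for it. In practice, such bins are merged or their counts are smoothed.

```python
import math


def woe_by_bin(bins):
    """bins: ordered list of (label, goods, bads). Returns [(label, WoE)]."""
    total_goods = sum(g for _, g, _ in bins)
    total_bads = sum(b for _, _, b in bins)
    result = []
    for label, goods, bads in bins:
        if goods == 0 or bads == 0:
            raise ValueError(f"bin {label!r} has no goods or no bads; merge or smooth it")
        woe = math.log((goods / total_goods) / (bads / total_bads))
        result.append((label, woe))
    return result


def is_monotone(values):
    """True if the sequence never decreases or never increases."""
    pairs = list(zip(values, values[1:]))
    return all(a <= b for a, b in pairs) or all(a >= b for a, b in pairs)


income_bins = [("<20k", 100, 50), ("20k-50k", 200, 40), (">50k", 300, 10)]
woe = woe_by_bin(income_bins)
for label, value in woe:
    print(f"{label:>8}: {value:+.3f}")
print("monotone:", is_monotone([v for _, v in woe]))  # monotone: True
```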

Quick win:

Without constraining ourselves to monotonic or convex cases, the easiest practice would be to run a C4.5 or CHAID tree algorithm on a single variable, with the target as the dependent variable. These algorithms produce multiway splits rather than the binary splits of CART, and all the resulting nodes are the bins we are looking for. Doing this for multiple variables at once is the key feature of the proposed tool.

Clients:

This capability is sought by risk management departments building robust, stable, Basel-compliant models in the financial industry, especially by banks.

Labels: Category Predictive, Category Preparation, Category Transform, Desktop Experience

It would be great if Alteryx offered an option to keep the results of data transformations and additions already run through the module. After new tools are added, the module would keep all of the data transformed or added up to that point and would only spend time running the data through the newly added tools.

It would save the analyst a lot of time when developing big and complex modules.
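The idea resembles memoizing each step of a pipeline. This minimal Python sketch assumes each step is identified by its name, its configuration and the cache key of its input. Re-running the pipeline after appending a new step then computes only that step. `StepCache` is hypothetical. As a simplification, the key does not include the step's code, so editing a function without changing its name or configuration would wrongly reuse the old result.

```python
import hashlib
import json


class StepCache:
    """Remember each step's output, keyed by step name, configuration and
    the key of the upstream step's output."""

    def __init__(self):
        self.results = {}
        self.computed = 0  # how many steps actually ran

    def run(self, name, func, config, upstream=None):
        """upstream is the (key, data) pair returned by an earlier run()."""
        up_key, up_data = upstream if upstream else ("", None)
        payload = json.dumps([name, config, up_key], sort_keys=True)
        key = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        if key not in self.results:
            self.computed += 1
            self.results[key] = func(up_data, config)
        return key, self.results[key]


def load(_, cfg):
    return list(range(cfg["n"]))


def keep_at_least(rows, cfg):
    return [r for r in rows if r >= cfg["min"]]


cache = StepCache()
# First run: two steps are computed.
src = cache.run("input", load, {"n": 5})
flt = cache.run("filter", keep_at_least, {"min": 2}, src)
# Second run after adding a "sum" step: input and filter come from the cache.
src = cache.run("input", load, {"n": 5})
flt = cache.run("filter", keep_at_least, {"min": 2}, src)
total = cache.run("sum", lambda rows, _: sum(rows), {}, flt)
print(total[1], cache.computed)  # 9 3
```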

Labels: Category Transform, Desktop Experience

Hi,

This feature isn't a must - but would definitely be a nice to have.

Similar to how Excel displays key figures such as average, count and sum:

It would be a really good idea to have something similar within Alteryx, just to get a quick glance at key figures/functions (example attached; apologies for the bad paint job, but it would definitely look good in the Alteryx colour scheme).

Thanks

Labels: Category Data Investigation, Category Input Output, Category Reporting, Category Transform

When CrossTab is used, string data in fields is converted to field names. If the data in the data field has a hyphen in it, this is automatically converted to an underscore when it becomes a field name.

Hyphens are legal in field names, so can we make CrossTab keep the string as is without changing it? If that is a breaking change, could a checkbox be added that lets users make CrossTab try to use the text as is and raise an error if the string is illegal as a field name?

Hyphens are required in the field name when using the Download tool, as some header names like "Content-Type" have hyphens in them.
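The proposed checkbox can be sketched in Python as a flag on a cross-tab (pivot) function. `crosstab` and `keep_names_as_is` are hypothetical names. The sanitizing rule, which replaces anything other than letters, digits and underscores with `_`, is an assumption that generalizes the hyphen case. Likewise, treating only empty strings as illegal names is a placeholder for whatever rule Alteryx actually applies.

```python
import re


def sanitize(name):
    # Assumed rule: any character other than a letter, digit or "_" becomes "_".
    return re.sub(r"[^0-9A-Za-z_]", "_", name)


def crosstab(rows, group_field, header_field, value_field, keep_names_as_is=False):
    """Pivot rows so each distinct header_field value becomes a field name."""
    out = {}
    for row in rows:
        header = row[header_field]
        if keep_names_as_is:
            if not header:  # placeholder legality check
                raise ValueError(f"{header!r} is not a legal field name")
        else:
            header = sanitize(header)
        group = row[group_field]
        out.setdefault(group, {group_field: group})[header] = row[value_field]
    return list(out.values())


headers = [{"Request": 1, "Header": "Content-Type", "Value": "application/json"},
           {"Request": 1, "Header": "Accept", "Value": "*/*"}]
print(crosstab(headers, "Request", "Header", "Value"))
# [{'Request': 1, 'Content_Type': 'application/json', 'Accept': '*/*'}]
print(crosstab(headers, "Request", "Header", "Value", keep_names_as_is=True))
# [{'Request': 1, 'Content-Type': 'application/json', 'Accept': '*/*'}]
```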

Labels: Category Transform, Desktop Experience

Since Alteryx 11 came out, the way Dates and DateTimes are handled and computed has changed from v10, and formulas that used to work no longer do. The biggest culprit I see is that Alteryx 11 no longer seems able to compare Date and DateTime values intelligently. This is annoying, because it forces me to run a DateTime function on all my Date fields before comparing them.

For example, I have a formula that I use to calculate if a date is the beginning of the month. That formula is:

IF DateTimeTrim([Snapshot Date],"month") = [Snapshot Date] THEN 1 ELSE 0 ENDIF

Here, Snapshot Date is a Date field with incoming data in a format like "2017-01-01".

In Alteryx 10, this formula returned true, as expected. However, in Alteryx 11 it returns false. When I dug into this a bit more, I noticed that DateTimeTrim always returns a DateTime, so the formula compares "2017-01-01 00:00:00" to "2017-01-01". For some reason, Alteryx now evaluates this comparison as false.

To address this, I now have to do:

IF DateTimeTrim([Snapshot Date],"month") = DateTimeTrim([Snapshot Date], "day") THEN 1 ELSE 0 ENDIF

My suggestion: Let comparisons between Date and DateTime formats work with the assumption that any Date field is as of midnight that day. In the example above, Alteryx would implicitly assume that "2017-01-01" is "2017-01-01 00:00:00" for any comparisons to DateTime, like it did in the past.
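The suggested rule is to promote a Date to midnight before comparing it with a DateTime. It can be sketched in Python with the standard `datetime` module. `as_datetime`, `same_moment` and `trim_to_month` are hypothetical helpers; the last one loosely mirrors `DateTimeTrim(..., "month")`. The sketch makes the promotion explicit instead of relying on any language's built-in mixed Date/DateTime comparison.

```python
from datetime import date, datetime, time


def as_datetime(value):
    """Promote a date to midnight of that day; pass datetimes through."""
    if isinstance(value, datetime):  # check first: datetime is a subclass of date
        return value
    if isinstance(value, date):
        return datetime.combine(value, time.min)
    raise TypeError(f"expected date or datetime, got {type(value).__name__}")


def same_moment(a, b):
    """Compare Date/DateTime values, treating a Date as 00:00:00 that day."""
    return as_datetime(a) == as_datetime(b)


def trim_to_month(value):
    """First instant of the month, always returned as a datetime."""
    return as_datetime(value).replace(day=1, hour=0, minute=0, second=0, microsecond=0)


snapshot = date(2017, 1, 1)
print(same_moment(trim_to_month(snapshot), snapshot))                    # True
print(same_moment(trim_to_month(date(2017, 1, 15)), date(2017, 1, 15)))  # False
```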

Labels: Category Preparation, Category Transform, Desktop Experience

As my Alteryx workflows become more complex and integrate and conform more and more data sources, it is increasingly important to communicate what the output fields mean and how they were created (i.e. the transformation rules) as output for end-user consumption, particularly for the target-state output file.

It would be great if Alteryx could do the following:

1. Produce a simple data dictionary from the Select tool and the Output tool. The Select tool more or less contains everything that is important to the business user. It would be awesome to have a way to export this along with the actual data produced by the Output tool (hopefully this is something I've overlooked and is already offered).

Examples:

- Using Excel, the output data set would go in one sheet and the data dictionary for all of its attributes in a second sheet.

- For an ODBC output, you could load the data set to the database and have the option to create the data dictionary either as a database table or as a CSV file (you'd also want to offer the ability to append that data to an existing dictionary file or table).

2. This one is more complex, but would be awesome: exporting the workflow into a spreadsheet Source to Target (S2T) format, along with supporting metadata / data dictionary for every step of the ETL process. This is necessary when I need to communicate my ETL processes to someone who cannot afford an Alteryx licence but is required to review and approve the ETL process I have built. I'd be happy to provide examples of how someone would likely want to see that formatted.
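Point 1 could be approximated outside Alteryx with a small Python sketch that turns a Select-style field list into a data dictionary table. The sketch assumes the Select configuration has already been captured as a list of records. Their keys mirror the columns of the Select tool's grid: Selected, Field, Type, Size, Rename and Description. `data_dictionary` and `to_csv` are hypothetical helpers. The resulting CSV text could go into a second sheet or a dictionary table, as described above.

```python
import csv
import io


def data_dictionary(select_fields):
    """One dictionary row per field that the Select step keeps."""
    rows = []
    for f in select_fields:
        if not f.get("Selected", True):
            continue  # deselected fields do not reach the output
        rows.append({
            "Output Field": f.get("Rename") or f["Field"],
            "Source Field": f["Field"],
            "Type": f["Type"],
            "Size": f["Size"],
            "Description": f.get("Description", ""),
        })
    return rows


def to_csv(rows):
    """Serialize dictionary rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


select_config = [
    {"Field": "cust_id", "Type": "Int32", "Size": 4, "Rename": "CustomerID",
     "Description": "Unique customer key"},
    {"Field": "tmp_flag", "Type": "V_String", "Size": 1, "Selected": False},
    {"Field": "Region", "Type": "V_String", "Size": 20,
     "Description": "Sales region of the customer"},
]
print(to_csv(data_dictionary(select_config)))
```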

Labels: Category Transform, Desktop Experience
