Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the image dimension constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each of the labels),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it necessarily needs RGB images).
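Until such a tool exists, a balanced split can be prepared outside the Image Recognition tool. Below is a minimal Python sketch, assuming the training images are listed as (path, label) pairs; the function name `balanced_split` and the 80/20 default are illustrative. It uses downsampling to the size of the smallest label, which is only one option (oversampling or class weights are alternatives).

```python
import random
from collections import defaultdict

def balanced_split(samples, train_fraction=0.8, seed=0):
    """Downsample every label to the size of the smallest one, then split train/validation.

    samples: list of (image_path, label) pairs (hypothetical input format).
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    n = min(len(paths) for paths in by_label.values())  # size of the smallest label
    cut = int(n * train_fraction)                        # images per label kept for training
    rng = random.Random(seed)                            # fixed seed -> reproducible split

    train, val = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, n)  # random subset of exactly n images for this label
        train += [(p, label) for p in chosen[:cut]]
        val += [(p, label) for p in chosen[cut:]]
    return train, val

# Usage with made-up file names: 10 "cat" images and 4 "dog" images.
samples = [(f"cat_{i}.png", "cat") for i in range(10)] + [(f"dog_{i}.png", "dog") for i in range(4)]
train, val = balanced_split(samples, train_fraction=0.75)
print(len(train), len(val))  # 6 2
```

Every label ends up with the same number of training and validation images, at the cost of discarding surplus images from the larger labels.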

Question: do you plan to allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


I’ve been using the Regex tool more and more. I have a use case that parses text only if the text matches a certain pattern. Sometimes it returns no results, and that is by design.

Having the warnings pop up so many times is not helpful when a miss is genuine and expected.

The Union tool and the Dynamic Rename tool both have the ability to ignore warnings; can we have the same option for all Parse tools?

That’s the idea in a nutshell.

- Category Parse

- Desktop Experience

- New Request

It would be great if you could include a new Parse tool in the next version of Alteryx to process dataset descriptions (metadata) formatted using the W3C DCAT standard.

DCAT is a standard for the description of data sets. It provides a comprehensive set of metadata that can be used to describe the content, structure, and lineage of a data set.

We believe that supporting DCAT in Alteryx would be a valuable addition to the product. It would allow us to:

- Improve the interoperability of our data sets with other systems (M2M)

- Make it easier to share and reuse our data sets

- Provide a more consistent way to describe our data sets

- Bring down the costs of describing and developing interfaces with other Government Entities

- Work toward making our data Findable, Accessible, Interoperable, and Reusable (FAIR)

We understand that implementing support for this standard requires some development effort (possibly done in stages, building from minimal viable support to full-blown support). However, we believe that the benefits to the Alteryx Community worldwide, and to Alteryx as a top-quality data preparation tool, outweigh the cost.

I also expect the effort to be manageable (perhaps a macro will do as a start), given that the standard RDF syntax is used and RDF can be serialized as JSON (JSON-LD).

DCAT, which stands for Data Catalog Vocabulary, is a W3C Recommendation for describing data catalogs in RDF. It provides a set of classes and properties for describing datasets, their distributions, and their relationships to other datasets and data catalogs. This allows data catalogs to be discovered and searched more easily, and it also makes it possible to integrate data catalogs with other Semantic Web applications.
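To illustrate why a macro could be a reasonable starting point, here is a minimal Python sketch (not existing Alteryx functionality) that flattens a DCAT catalog serialized as JSON-LD into one row per dataset distribution. The sample catalog, the example.org URLs, and the function names are made up; the sketch assumes compact JSON-LD with literal `dcat:`/`dct:` prefixes and performs no real JSON-LD expansion, nor does it handle Turtle or RDF/XML.

```python
import json

# Hypothetical DCAT catalog in compact JSON-LD; keys use the dcat:/dct: prefixes literally.
SAMPLE = """
{
  "@context": {"dcat": "http://www.w3.org/ns/dcat#", "dct": "http://purl.org/dc/terms/"},
  "@type": "dcat:Catalog",
  "dcat:dataset": [
    {
      "@id": "https://example.org/dataset/1",
      "dct:title": "Road traffic counts",
      "dcat:keyword": ["traffic", "roads"],
      "dcat:distribution": [
        {"dcat:downloadURL": "https://example.org/1.csv", "dcat:mediaType": "text/csv"},
        {"dcat:downloadURL": "https://example.org/1.json", "dcat:mediaType": "application/json"}
      ]
    }
  ]
}
"""

def as_list(value):
    """JSON-LD allows either a single value or an array; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]

def flatten_catalog(doc):
    """Return one row per (dataset, distribution) pair, like a Parse tool output."""
    rows = []
    for ds in as_list(doc.get("dcat:dataset")):
        base = {
            "dataset_id": ds.get("@id"),
            "title": ds.get("dct:title"),
            "keywords": ";".join(as_list(ds.get("dcat:keyword"))),
        }
        # A dataset without distributions still yields one row.
        for dist in as_list(ds.get("dcat:distribution")) or [{}]:
            rows.append({
                **base,
                "download_url": dist.get("dcat:downloadURL"),
                "media_type": dist.get("dcat:mediaType"),
            })
    return rows

rows = flatten_catalog(json.loads(SAMPLE))
for row in rows:
    print(row)
```

A production-grade tool would rely on a proper RDF/JSON-LD library to cover every serialization; the sketch only shows that DCAT maps naturally onto rows and columns.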

DCAT is designed to be flexible and extensible, so it can be used to describe a wide variety of data catalogs. It is also designed to be interoperable with other vocabularies such as SKOS, so they can be used together to create rich and interconnected descriptions of data and knowledge.

Here are some of the benefits of using DCAT:

- Improved discoverability: DCAT makes it easier to discover and use datasets, as it provides a standard way of describing their attributes.

- Increased interoperability: DCAT allows data catalogs to be integrated with other Semantic Web applications, making it possible to create more powerful and interoperable applications.

- Enhanced semantic richness: DCAT provides a way to add semantic richness to dataset descriptions, making it possible to describe datasets in a more detailed and nuanced way.

Here are some examples of how DCAT is being used:

- The DataCite metadata standard uses DCAT to describe data catalogs.

- The European Data Portal uses DCAT to discover and search for data sets.

- The Dutch Government has made DCAT (as DCAT-AP-DONL) a mandatory standard for all Dutch government agencies.

As the Semantic Web continues to grow, DCAT is likely to become even more widely used.

DCAT

- Reference Page: https://www.w3.org/TR/vocab-dcat/

- Dutch (NL) Standard: https://forumstandaardisatie.nl/open-standaarden/dcat-ap-donl

- Wikipedia on DCAT: https://en.wikipedia.org/wiki/Data_Catalog_Vocabulary

RDF

- Reference Page: https://www.w3.org/TR/REC-rdf-syntax/

- Dutch (NL) Standard: https://forumstandaardisatie.nl/open-standaarden/rdf

- Wikipedia on RDF: https://en.wikipedia.org/wiki/Resource_Description_Framework

- Category Parse

- New Request

It would be great if you could include a new Parse tool in the next version of Alteryx to process Business Glossary concepts formatted using the W3C SKOS standard.

SKOS is a widely used standard for the representation of concept and term relationships. It provides a consistent way to define and organize concepts (including versioning), which is essential for the interoperability of these data.

We believe that supporting SKOS in Alteryx would be a valuable addition to the product. It would allow us to:

- Improve the interoperability of our data sets with other systems (M2M)

- Make it easier to share and reuse our data sets

- Provide a more consistent way to describe our data sets

- Bring down the costs of describing and developing interfaces with other Government Entities

- Work toward making our data Findable, Accessible, Interoperable, and Reusable (FAIR)

We understand that implementing support for this standard requires some development effort (possibly done in stages, building from minimal viable support to full-blown support). However, we believe that the benefits to the Alteryx Community worldwide, and to Alteryx as a top-quality data preparation tool, outweigh the cost.

I also expect the effort to be manageable (perhaps a macro will do as a start), given that the standard RDF syntax is used and RDF can be serialized as JSON (JSON-LD).

SKOS, which stands for Simple Knowledge Organization System, is a W3C Recommendation for representing controlled vocabularies in RDF. It provides a set of classes and properties for describing concepts, their relationships, and their labels. This allows knowledge organization systems (KOS) to be shared and exchanged more easily, and it also makes it possible to use KOS data in Semantic Web applications.
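As a rough illustration of what such a Parse tool might output, here is a minimal Python sketch that turns a SKOS glossary serialized as JSON-LD into one row per concept, with a hierarchy path built from `skos:broader` links. The sample glossary, the `ex:` identifiers, and the function names are made up; the sketch assumes literal `skos:` prefixes (no real JSON-LD expansion) and follows only the first broader concept.

```python
import json

# Hypothetical business glossary in compact JSON-LD with literal "skos:" keys.
SAMPLE = """
{
  "@context": {"skos": "http://www.w3.org/2004/02/skos/core#",
               "ex": "https://example.org/glossary/"},
  "@graph": [
    {"@id": "ex:customer", "@type": "skos:Concept",
     "skos:prefLabel": [{"@value": "Customer", "@language": "en"},
                        {"@value": "Klant", "@language": "nl"}],
     "skos:definition": "A party that buys goods or services."},
    {"@id": "ex:vip", "@type": "skos:Concept",
     "skos:prefLabel": {"@value": "VIP customer", "@language": "en"},
     "skos:broader": {"@id": "ex:customer"}}
  ]
}
"""

def as_list(value):
    """JSON-LD allows either a single value or an array; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]

def pref_label(concept, lang):
    """Return the skos:prefLabel in the requested language, or None."""
    for literal in as_list(concept.get("skos:prefLabel")):
        if isinstance(literal, dict) and literal.get("@language") == lang:
            return literal.get("@value")
    return None

def glossary_rows(doc, lang="en"):
    """One row per skos:Concept, with a 'path' built from skos:broader links."""
    concepts = {c["@id"]: c for c in as_list(doc.get("@graph"))
                if c.get("@type") == "skos:Concept"}
    rows = []
    for cid, concept in concepts.items():
        path, seen, current = [], set(), concept
        # Follow only the first skos:broader parent (SKOS allows several); `seen` guards against cycles.
        while current is not None and current["@id"] not in seen:
            seen.add(current["@id"])
            path.append(pref_label(current, lang) or current["@id"])
            parents = as_list(current.get("skos:broader"))
            current = concepts.get(parents[0]["@id"]) if parents else None
        rows.append({"id": cid,
                     "label": pref_label(concept, lang),
                     "definition": concept.get("skos:definition"),
                     "path": " > ".join(reversed(path))})
    return rows

rows = glossary_rows(json.loads(SAMPLE))
for row in rows:
    print(row)
```

The `lang` parameter reflects that SKOS labels are language-tagged, which matters for multilingual glossaries such as Dutch/English ones.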

SKOS is designed to be flexible and extensible, so it can be used to describe a wide variety of knowledge organization systems, such as thesauri, taxonomies, and classification schemes. It is also designed to be interoperable with other vocabularies such as DCAT, so they can be used together to create rich and interconnected descriptions of data and knowledge.

Here are some of the benefits of using SKOS:

- Improved discoverability: SKOS makes it easier to discover and use KOS, as it provides a standard way of describing their attributes.

- Increased interoperability: SKOS allows KOS to be integrated with other Semantic Web applications, making it possible to create more powerful and interoperable applications.

- Enhanced semantic richness: SKOS provides a way to add semantic richness to KOS, making it possible to describe them in a more detailed and nuanced way.

Here are some examples of how SKOS is being used:

- The Library of Congress uses SKOS to describe its controlled vocabularies.

- The Dutch Government has made SKOS a mandatory standard for all Dutch government agencies.

As the Semantic Web continues to grow, SKOS is likely to become even more widely used.

SKOS

- Reference Page: https://www.w3.org/TR/skos-reference/

- Dutch (NL) Standard: https://forumstandaardisatie.nl/open-standaarden/skos

- Wikipedia on SKOS: https://en.wikipedia.org/wiki/Simple_Knowledge_Organization_System

- Video: https://youtu.be/pbTRDDQ43Sw?si=RG3f-jPBsx2OK8IQ

- Tools: https://csiro-enviro-informatics.github.io/info-engineering/semantic-tools.html

RDF

- Reference Page: https://www.w3.org/TR/REC-rdf-syntax/

- Dutch (NL) Standard: https://forumstandaardisatie.nl/open-standaarden/rdf

- Wikipedia on RDF: https://en.wikipedia.org/wiki/Resource_Description_Framework

- Category Parse

- New Request

- Text to Columns tool: checkbox for “Output/No-Output” next to “Output Root Name”

Most of the time I don't want or need the column that I parsed. Please provide a checkbox to choose whether the root column is output.

- Category Parse

- Desktop Experience

Idea removed, regex will do the job.

- Category Parse

- Desktop Experience

Many of today's APIs, like MS Graph, won't or can't return more than a few hundred rows of JSON data per request. Usually, the returned metadata includes a complete URL for the NEXT set of data.

Example: https://graph.microsoft.com/v1.0/devices?$count=true&$top=999&$filter=(startswith(operatingSystem,'W...') or startswith(operatingSystem,'Mac')) and (approximateLastSignInDateTime ge 2022-09-25T12:00:00Z)

This requires the "Encode URL" checkbox in the Download tool to be checked, and the "nextLevel" metadata output will contain the same URL plus a $skiptoken=xxxxx value. That "nextLevel" URL is what you need to get the next set of rows.

The only way to do this effectively is with an Iterative Macro.

Now, your Download tool has "Encode URL" checked, BUT the next URL in the metadata is already URL-encoded . . . so it will break, badly, when the nextLevel metadata value is used as the iterative item.

So, long story short, we need to DECODE the URL in the nextLevel metadata before it reaches the Iterative Output point . . . but no such tool exists.

I've made a little macro to decode a URL, but I am no expert. Running the URL through a Find Replace tool against a table of ASCII replacements pulled from w3schools.com probably isn't a good answer.
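For reference, standard libraries already ship a percent-decoder, which is roughly what such a tool would wrap. A minimal Python sketch using `urllib.parse.unquote` (not an Alteryx function); the URL and skiptoken value below are made-up placeholders.

```python
from urllib.parse import unquote

# Made-up example of an already percent-encoded "next page" URL
# (the skiptoken value is a placeholder, not a real Graph token).
next_url = ("https://graph.microsoft.com/v1.0/devices?%24count=true&%24top=999"
            "&%24skiptoken=abc%3D%3D")

# unquote turns %XX escapes back into characters (%24 -> $, %3D -> =).
# It leaves '+' alone; unquote_plus would also turn '+' into a space.
decoded = unquote(next_url)
print(decoded)  # https://graph.microsoft.com/v1.0/devices?$count=true&$top=999&$skiptoken=abc==
```

One caveat: if a parameter value itself contains an encoded `&` or `=`, decoding the whole URL and then re-encoding it can change its meaning, so passing the already-encoded URL through without re-encoding is worth testing as an alternative.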

We need a proper tool from Alteryx!

Someone suggested I use the Formula tool's UrlEncode function . . .

Unfortunately, the Formula UrlEncode does NOT work. It encodes characters based on a straight ASCII conversion table, and therefore it encodes characters like ? and $ when it should not. Whoever is responsible for that code in the Formula tool needs to revisit it.
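One reading of "encode only what should be encoded" is to leave the URL's own delimiters alone and percent-encode just the query parameter names and values. A minimal Python sketch under that assumption (the function name is hypothetical and this is not how Alteryx's UrlEncode works); note that `parse_qsl` treats `+` as a space, so values containing a literal `+` would need extra care.

```python
from urllib.parse import parse_qsl, quote, urlsplit, urlunsplit

def encode_query_values(url: str) -> str:
    """Percent-encode query parameter names and values, but keep the URL's own
    delimiters (?, &, =) and the leading $ of OData-style parameter names intact."""
    parts = urlsplit(url)
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    query = "&".join(f"{quote(name, safe='$')}={quote(value, safe='')}"
                     for name, value in pairs)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))

url = ("https://graph.microsoft.com/v1.0/devices?$top=999"
       "&$filter=startswith(operatingSystem,'Mac')")
print(encode_query_values(url))
# https://graph.microsoft.com/v1.0/devices?$top=999&$filter=startswith%28operatingSystem%2C%27Mac%27%29
```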

Base URL: https://graph.microsoft.com/v1.0/devices?$count=true&$top=999&$filter=(startswith(operatingSystem,'W...') or startswith(operatingSystem,'Mac')) and (approximateLastSignInDateTime ge 2022-09-25T12:00:00Z)

Correct Encoding:

- Category Parse

- Desktop Experience

Add the ability to name the output columns in the Text to Columns tool.

- Category Parse

- Desktop Experience

Although this could also affect ANY formula, the RegEx tool does not support a multiline flag. Incoming data often contains multiple lines, and the user must replace newlines and carriage returns with a space or another delimiter in order to apply a regular expression to all of the data.

Regular expressions commonly support a multiline flag (for Alteryx it would be a checkbox) that makes ^ and $ match at the start and end of each line, so each line is handled separately.

domain.com

test.com

site.com

If these are individual records, then \w+\.com$ works wonderfully; if they are all contained in a single Excel cell, then you need to post on the Community to figure out what to do.
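Python's `re` module shows the behavior being requested; this illustrates the multiline flag in general, not Alteryx's regex engine.

```python
import re

# One "cell" that contains three lines, as in the example above.
cell = "domain.com\ntest.com\nsite.com"

single = re.findall(r"\w+\.com$", cell)                # $ = end of the whole string
multi = re.findall(r"\w+\.com$", cell, re.MULTILINE)   # $ = end of each line
print(single)  # ['site.com']
print(multi)   # ['domain.com', 'test.com', 'site.com']
```

In Python the same flag can also be written inline at the start of the pattern as `(?m)`.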

Cheers,

Mark

- Category Parse

- Desktop Experience

Add a checkbox to ‘not’ output the original column in the Text to Columns tool.

- Category Parse

- Desktop Experience

Can an option be added to the Text to Columns tool that allows selecting "split on entire entry" (treating the entry as a whole) for the delimiters field?

Background:

Currently, if we type " vs. " in the delimiters field, the tool treats each character as a separate delimiter, including the spaces.

The recommendation in the tool help is to use RegEx for splitting on whole words, but for some, RegEx is quite intimidating and adding this function would be a big help for new users.

Proposed Change:

Two radio buttons added to the Text to Columns tool:

- Split by Each Entry
  - Current functionality
  - Should be the default
  - Splits on every letter, space, punctuation mark, etc. separately
- Split by Entire Entry
  - Allows splitting on the entire entry in the field
  - Still includes spaces, letters, and punctuation, but treats the entry as a "whole word"

Example of function:

- Radio button set to "split by entire entry"

- Delimiter field has: vs.

- Tool sees ______ vs. ______ in a column in the data

- Tool splits the two ______ values into new columns, leaving out the entire vs. including the spaces entered around it
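To make the difference concrete, here is a small Python sketch of both behaviors as the post describes them. `split_each` and `split_entire` are hypothetical names, the sample value is made up, and the sketch keeps empty pieces so the effect of character-by-character splitting is visible.

```python
def split_each(value, delimiters):
    """Current behavior as described: every character in `delimiters` is its own delimiter."""
    parts, current = [], ""
    for ch in value:
        if ch in delimiters:
            parts.append(current)
            current = ""
        else:
            current += ch
    parts.append(current)
    return parts

def split_entire(value, delimiter):
    """Proposed "Split by Entire Entry": the whole string is a single delimiter."""
    return value.split(delimiter)

value = "Lakers vs. Celtics"  # made-up sample value
print(split_each(value, " vs. "))    # ['Laker', '', '', '', '', '', 'Celtic', '']
print(split_entire(value, " vs. "))  # ['Lakers', 'Celtics']
```

Note how the "s" at the end of each name is also consumed in the first case, because "s" is one of the delimiter characters.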

Thank you!

- Category Parse

- Desktop Experience

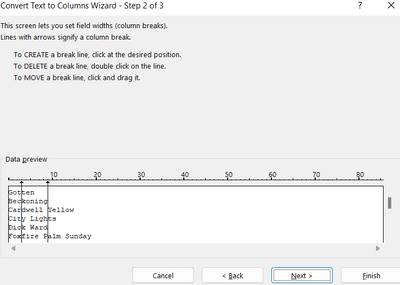

SOOOOoooooo many times it'd be great to just dictate the character length/count (fixed width) for the parse (just like you can in Excel), instead of being constrained by a delimiter or being obligated to go create (potentially complex) REGEX. Ideally, you could go into the column and insert a <break> (multiple times if needed) after the character where you'd like the parse to occur. Anything past the last <break> would all be included in the final parsed field.

You could also make it a little less visual and just type the character count you want for each column. If you really want to enhance this idea, you could also include the ability to name the fields and prescribe the data type. Those would just be gravy on the meat of the idea, however, which is: provide the ability to parse by fixed-length fields.
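The requested behavior, including the optional field names, fits in a few lines. A minimal Python sketch with a hypothetical function name and a made-up record layout:

```python
def parse_fixed_width(value, widths, names=None):
    """Cut `value` after fixed character counts; anything past the last break
    goes into one final field. Optional `names` label the resulting fields."""
    fields, pos = [], 0
    for width in widths:
        fields.append(value[pos:pos + width])
        pos += width
    fields.append(value[pos:])  # remainder after the last <break>
    if names is not None:
        return dict(zip(names, fields))
    return fields

print(parse_fixed_width("20240115ABC123rest of line", [4, 2, 2, 6]))
# ['2024', '01', '15', 'ABC123', 'rest of line']
print(parse_fixed_width("20240115", [4, 2], ["year", "month", "day"]))
# {'year': '2024', 'month': '01', 'day': '15'}
```

Assigning data types would be one more step on top of this, e.g. converting selected fields to integers or dates after the cut.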

- Category Parse

- Desktop Experience

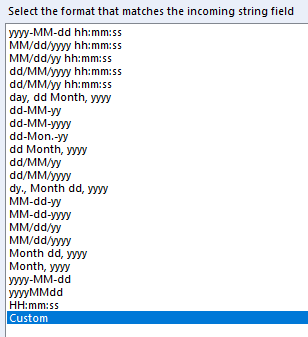

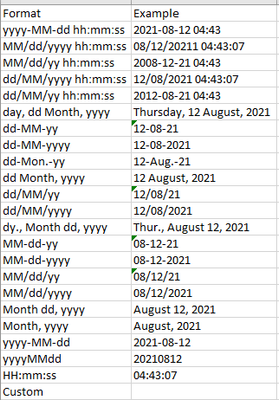

In Japan, people usually use the date format "yyyy/mm/dd", but there is no preset for it in the DateTime tool. So I usually use a custom setting, but it is a waste of time.

So please add the yyyy/mm/dd format to the presets in the DateTime tool configuration for Japanese users.

- Category Parse

- Desktop Experience

The XML Parse tool has a checkbox to ignore errors and continue. This idea applies to every tool with an option to ignore errors. It would be great if XML Parse had two outputs: one for successful records and another for errored records. This would make it much easier to identify and, if necessary, update errored records.

In my view, this would make it more similar to other tools like Filter or Spatial Match, where records that don't fit your criteria follow a different flow.
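A minimal Python sketch of the proposed two-output behavior, using the standard library's `xml.etree.ElementTree`. The record IDs, the `<name>` element, and the function name are made up for illustration; failed records go to a second list together with the parser message instead of being dropped.

```python
import xml.etree.ElementTree as ET

def split_xml_records(records):
    """Route each (record_id, xml_text) pair to a 'parsed' or an 'errors' output."""
    parsed, errors = [], []
    for record_id, text in records:
        try:
            root = ET.fromstring(text)
        except ET.ParseError as exc:
            errors.append((record_id, str(exc)))  # keep the message for troubleshooting
            continue
        parsed.append((record_id, root.tag, root.findtext("name")))
    return parsed, errors

records = [
    (1, "<item><name>A</name></item>"),
    (2, "<item><name>B</name>"),  # missing closing tag
    (3, "<item/>"),               # valid, but has no <name>
]
parsed, errors = split_xml_records(records)
print(parsed)                  # [(1, 'item', 'A'), (3, 'item', None)]
print([e[0] for e in errors])  # [2]
```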

Thanks for considering

- Category Parse

- Desktop Experience

We've got your metadata. Suppose Alteryx considers the "SELECT COLUMN TO SPLIT" question and this happens:

- A field is selected because it contains similar \W (regex for non-word characters) patterns in the data (e.g. the count of delimiters per record is roughly equal).

- The delimiter (MODE delimiter or BEST) is defaulted

- Number of columns to split into = MODE count + 1

If a user updates the Field/Delimiter, the metadata could suggest the right number of columns based on the delimiter.
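The heuristic above can be sketched in a few lines of Python. This is one possible reading of it, not how Alteryx works: candidate delimiters are the characters matched by `\W`, the winner is the candidate whose per-record count agrees across the most records, and the suggested column count is that mode + 1. The function name and the tie-breaking rule (first candidate in sorted order wins) are assumptions.

```python
import re
from collections import Counter

def suggest_split(values):
    """Return (delimiter, number_of_columns) suggested from sample values, or None."""
    candidates = sorted(set(re.findall(r"\W", "".join(values))))  # non-word characters seen
    best = None  # (records_agreeing, delimiter, mode_count)
    for delim in candidates:
        counts = Counter(v.count(delim) for v in values)
        mode_count, records_agreeing = counts.most_common(1)[0]
        if mode_count == 0:
            continue  # most records don't contain this character at all
        if best is None or records_agreeing > best[0]:
            best = (records_agreeing, delim, mode_count)
    if best is None:
        return None
    _, delim, mode_count = best
    return delim, mode_count + 1  # columns = MODE count + 1

print(suggest_split(["a,b,c", "d,e,f", "g,h,i j"]))  # (',', 3)
```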

This all belongs in the automagically idea bank. It saves me the time of counting delimiters or finding the right count by trial and error.

Cheers,

Mark

- Category Parse

- Desktop Experience

Good afternoon, everyone. I hope you are all well.

I believe this suggestion could be applied to both the Input Data tool and the Text to Columns tool.

Some columns contain free-text fields that are filled in by internal departments here at the company. We have already tried to agree that these characters, which are often used as delimiters, should not be used in these fields. However, I thought it better to look for a solution on the tool side, to avoid any errors of this kind.

The proposal is to make it possible to isolate the column that contains these special characters, so that Alteryx does not interpret them as delimiters, which causes columns to shift and misaligns the entire report.
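One possible workaround in the meantime, assuming exactly one free-text column and a known number of expected columns: split each line as usual, then join any surplus pieces back into the free-text column. The function name and parameters are hypothetical. Where the source system can be changed, quoting the free-text field (as in RFC 4180 CSV) is the more standard fix.

```python
def realign(fields, expected, free_text_index, delimiter=","):
    """Rejoin surplus pieces into the free-text column so later columns stay aligned.

    fields: the line already split on `delimiter`
    expected: the number of columns the file should have
    free_text_index: position of the (single) free-text column
    """
    extra = len(fields) - expected
    if extra <= 0:
        return fields  # nothing was split by accident
    end = free_text_index + extra + 1
    merged = delimiter.join(fields[free_text_index:end])
    return fields[:free_text_index] + [merged] + fields[end:]

line = "42,Note with, a stray comma,2024-01-15"  # made-up example row
print(realign(line.split(","), expected=3, free_text_index=1))
# ['42', 'Note with, a stray comma', '2024-01-15']
```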

Thanks, and best regards,

Thiago Tanaka

- Category Parse

- Desktop Experience

Good afternoon, everyone. I hope you are well.

My idea/suggestion is an improvement to the "Text to Columns" tool (Parse), which lets us split columns using delimiter characters.

Currently, the split is not applied to the header, so other means are needed: either treating the header as a regular row and then turning it back into the header, or handling the header separately.

It would be useful if the Text to Columns tool itself offered the option to split the header in the same way.

Thanks, and best regards,

Thiago Tanaka

- Category Parse

- Desktop Experience

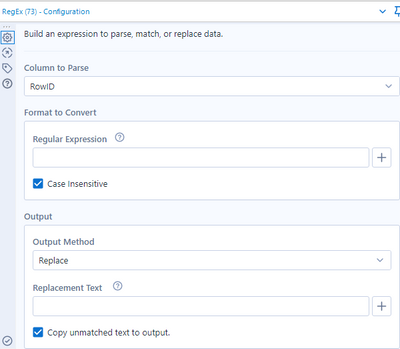

It would be absolutely marvellous if the ability to use a field as the replace value could be incorporated into the Regex tool. Currently the "Replacement Text" field is a hardcoded text value, and so to make that dynamic you have to wrap the tool in a batch and feed in the value as a Control Parameter. If we could just select a field to use as the replacement value, that would be spiffy.
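For comparison, this is what a per-record replacement value looks like in Python's `re` module (an analogy, not the Regex tool's configuration); the field names `text` and `new_id` and the sample records are made up.

```python
import re

# Made-up records: each row carries its own replacement value in another field.
rows = [
    {"text": "Order 123 shipped", "new_id": "A-9"},
    {"text": "Order 456 delayed", "new_id": "B-2"},
]

pattern = re.compile(r"\d+")
for row in rows:
    # Passing a function as the replacement inserts the field value literally,
    # so backslashes or group references in the data are not interpreted.
    row["result"] = pattern.sub(lambda m: row["new_id"], row["text"])

print([row["result"] for row in rows])  # ['Order A-9 shipped', 'Order B-2 delayed']
```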

M.

- Category Parse

- Desktop Experience

Currently I find myself always wanting to replace the DateTime field with a string, or vice versa.

It would be nice to have a radio button to choose whether to append the parsed field or replace the current field with the parsed field.

I understand that all you need is a Select tool afterwards, but this would be a nice QoL change, especially where the field may be dynamically updated.

- Category Parse

- Desktop Experience

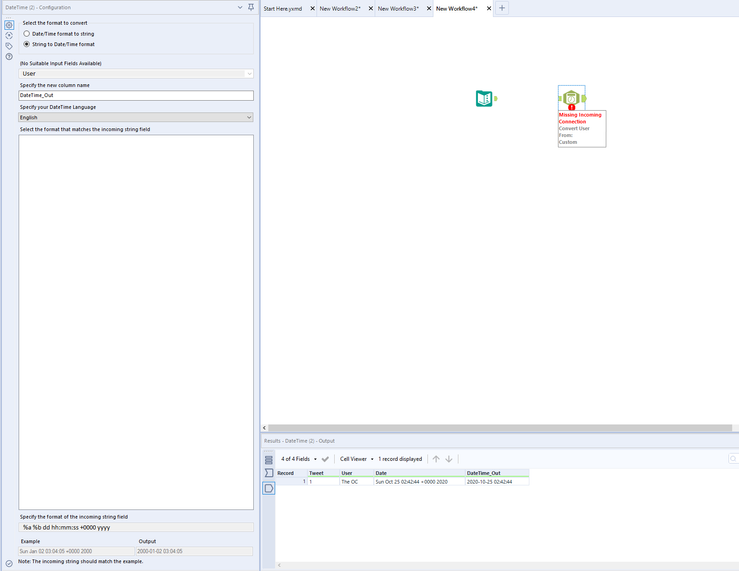

The top screenshot shows the DateTime tool and the incoming string formats, but it does not show examples. Please show examples like those in the bottom screenshot. Thank you.

- Category Parse

- Desktop Experience

When copying and pasting the DateTime tool, or disconnecting its input, it loses its configuration. This can be really frustrating if it has an unusual formula, and can result in looking up the DateTime functions again (https://help.alteryx.com/current/designer/datetime-functions) and working out how to reconfigure the tool.

Steps to reproduce this issue:

1. Set up the DateTime tool.

2. Delete the input connection.

3. Reconnect, and notice that the formula is missing and the tool has been reset to a completely fresh DateTime tool.

Proposed solution:

The DateTime tool should remember its previous configuration and restore it when the input is deleted or the tool is copied and pasted, so that it can simply be reconnected.

Steps in proposed solution:

1. Set up the DateTime tool.

2. Delete the input connection (note that the DateTime configuration and annotation remain, although they are not editable).

3. Reconnect the tool; it is still configured for the data.

- Category Parse

- Desktop Experience
