Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

- by showing the image dimension constraints next to each of the pre-trained models,

- by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

- and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
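Until the tool handles these cases itself, both preprocessing steps can be done before the images reach it. A minimal sketch, assuming each image is a list of rows of 0-255 pixel values and each label is a plain string; `gray_to_rgb` and `balanced_split` are hypothetical helpers, not part of Alteryx. In practice an image library usually does the channel copy, for example Pillow's `Image.convert("RGB")`.

```python
import random
from collections import defaultdict


def gray_to_rgb(pixels):
    """Turn a grayscale image (rows of 0-255 ints) into RGB by copying
    the single channel into R, G and B."""
    return [[(v, v, v) for v in row] for row in pixels]


def balanced_split(samples, train_per_label, seed=0):
    """Split (image, label) pairs so every label contributes exactly
    `train_per_label` training images; the leftovers go to validation."""
    by_label = defaultdict(list)
    for image, label in samples:
        by_label[label].append((image, label))

    rng = random.Random(seed)  # fixed seed, so the split is reproducible
    train, valid = [], []
    for label in sorted(by_label):
        items = by_label[label]
        if len(items) < train_per_label:
            raise ValueError(f"label {label!r} has only {len(items)} images")
        rng.shuffle(items)
        train.extend(items[:train_per_label])
        valid.extend(items[train_per_label:])
    return train, valid
```

With 5 images labelled "a" and 3 labelled "b", `balanced_split(samples, 2)` puts two of each label in the training set and the remaining four images in validation. Capping every label at the size of the chosen `train_per_label` is the simplest way to equalise classes; oversampling the smaller labels would be an alternative.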

Question: will you allow users to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


The current (version 7.0.x) Google Analytics connector in the Analytics Gallery is not very robust. I had issues maintaining credentials and was forced to frequently reconnect and reconfigure the GA tools in my flow. I ended up resorting to other means of downloading the data from GA.

It would be helpful if Alteryx created a "first-class" data connector as exists for other cloud-based data sources such as Salesforce.

- Category Input Output
- Data Connectors

Being able to connect to views in BigQuery (BQ) would let us skip the dependency on scheduling table refreshes within BQ and keep all of the dependencies in Alteryx.

- Category Connectors
- Category Input Output
- Data Connectors

When using the SQL output "delete and append" option, I noticed that it is not transactional, i.e., it deletes the data first and then inserts the new data. If an issue prevents the new data from being inserted, you have lost all the data in the table and it hasn't been replaced by anything new.

I tested this by revoking insert permissions from the login I was using - the insert failed, but the delete had already occurred. This could also occur if there was a network error or connection drop in the middle of the execution.

I use delete and append because the replace if new option is unutterably slow (it takes about 5 minutes to complete on ~3,000 rows, instead of 0.5 seconds).

I think the delete and append option should either be enclosed in an explicit transaction, or a combination of temp tables and copying should be employed. This behaviour could maybe be offered as an option in case of extremely large datasets.
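To illustrate the transactional behaviour being requested, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in database. It is not how the Output tool works internally, and the table `target` with its `id`/`name` columns is hypothetical. Because the DELETE and the INSERT run inside one transaction, a failed insert also rolls back the delete:

```python
import sqlite3


def delete_and_append(conn, rows):
    """Replace every row of the hypothetical table `target` in one transaction.

    If any INSERT fails, the DELETE is rolled back as well, so the table
    keeps its previous contents instead of ending up empty."""
    with conn:  # commits on success, rolls back if an exception escapes
        conn.execute("DELETE FROM target")
        conn.executemany("INSERT INTO target (id, name) VALUES (?, ?)", rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE target (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
delete_and_append(conn, [(1, "a"), (2, "b")])

try:
    # The second row violates NOT NULL, so the whole batch fails...
    delete_and_append(conn, [(3, "c"), (4, None)])
except sqlite3.IntegrityError:
    pass

# ...and the original rows are still there.
print(conn.execute("SELECT id FROM target ORDER BY id").fetchall())
```

The same principle applies to other transactional databases, provided the connection is not running in autocommit mode.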

- Category Input Output
- Data Connectors

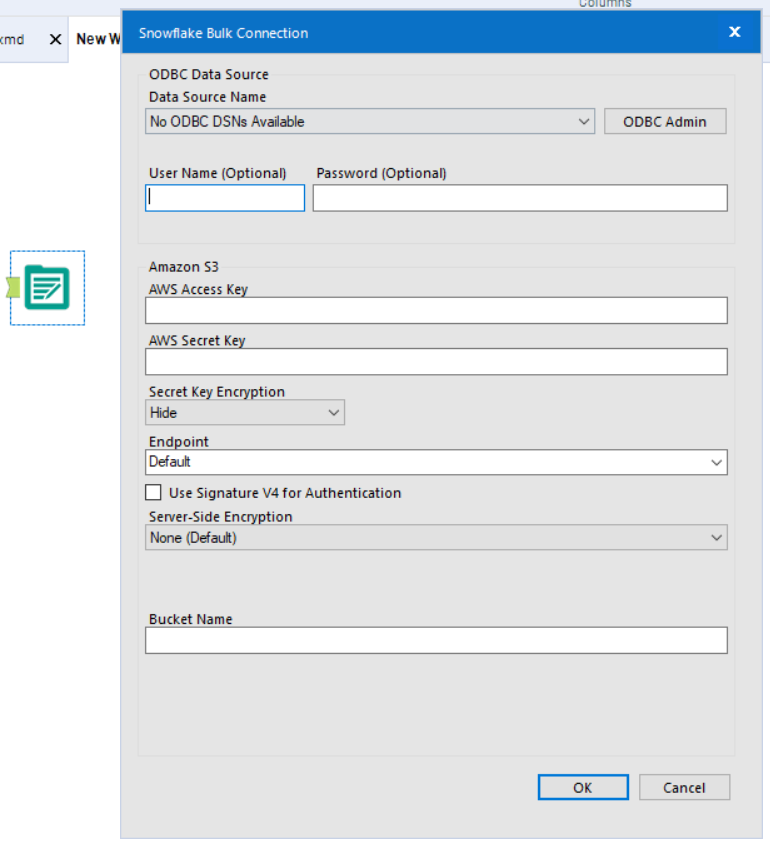

Request to enhance the Snowflake bulk loader upload tool so that output can be saved to S3 bucket subfolders, not only to the root folder.

- Category Input Output
- Data Connectors

When connecting to a database (Oracle or other) with an Input tool, Alteryx lets you select a table from a list of available tables in the database. The Output tool, however, has you type the table name in. What if you want to write to an existing table but mistype the name? You could unintentionally write to a table that doesn't exist, or to the wrong table. I think it would be valuable for the Output and Write Data In-DB tools to have the option to pick from a list of available tables in the database, just like the Input tool.

Extension: It would be great if Alteryx could compare the metadata of the data stream coming into the Output tool with the metadata of the database table itself, to see whether the data about to be written misaligns with the configuration of the table. In other words, to prevent the following from occurring: I was replacing the .yxdb output of a large process with output to an existing Oracle table. Alteryx successfully deleted the entire contents of the Oracle table, but when it began to write records, it errored because the field lengths of the Oracle table were not long enough. This wouldn't be a big deal, except that the workflow takes 20 minutes to run and the database table was wiped before the error was fully realized! I've heard that other ETL tools warn you about data that may violate the configuration of the database table you're trying to write to.
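The pre-write check described above could look roughly like this. A sketch only: `find_overlong_values` is a hypothetical helper, the records are assumed to be dicts, and the column limits are assumed to have been read from the database catalog beforehand (for Oracle, for example, from the `ALL_TAB_COLUMNS` data dictionary view). The key point is that it runs before anything is deleted:

```python
def find_overlong_values(records, column_limits):
    """Return (row_index, column, length, limit) for every string value that
    is longer than the target column allows.

    records: list of dicts (the incoming data stream)
    column_limits: {column_name: max_length}, read from the database catalog
    before any rows are deleted."""
    problems = []
    for i, record in enumerate(records):
        for column, limit in column_limits.items():
            value = record.get(column)
            # Lengths are counted in characters; a column with byte-length
            # semantics would need the encoded byte length instead.
            if isinstance(value, str) and len(value) > limit:
                problems.append((i, column, len(value), limit))
    return problems


records = [{"code": "ABC"}, {"code": "ABCDEFGHIJKL"}]
issues = find_overlong_values(records, {"code": 10})
if issues:
    print("Not writing; fields too long:", issues)
```

If the list is non-empty, the workflow can stop with an error while the target table is still intact.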

Thank you for listening!

- Category Input Output
- Data Connectors

Hi,

I'm not sure if it's just my computers (I've tried on a desktop and a laptop), but Alteryx doesn't seem to support triple-click "select all" in the input/output windows. That is, if there is already text in the output window, the user should be able to click once to place the cursor, double-click to select a whole word, and triple-click to select the whole line. Currently, it only does the first two.

Since I usually have long file paths, I can't see the whole path, so instead of just triple-clicking to select everything, I have to click once to place the cursor, press Home, then hold Shift and press End, and then copy/paste. I think having the basic triple-click select-all function would be very useful. Have a great day!

- Category Input Output
- Category Interface
- Data Connectors
- Desktop Experience

It'd be nice if there were a way to add an autofill function. Say I was using a text tool and got tired of always typing the full name in (lazy, I know) or just didn't know the correct spelling. It'd be nice if there were a check box where the app creator could enable autofill or autoselect. As the user typed, a drop-down list would update until the user saw what they intended to type, and they could then select that text from the list. It would be a nice little feature.
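The matching behind such a drop-down is usually simple prefix filtering that reruns on every keystroke. A minimal sketch, where `suggest` is a hypothetical helper and case-insensitive prefix matching is an assumed design choice:

```python
def suggest(options, typed, limit=10):
    """Return up to `limit` options that start with what the user has typed,
    ignoring case and keeping the options' original order."""
    prefix = typed.casefold()
    return [o for o in options if o.casefold().startswith(prefix)][:limit]


names = ["Alice Martin", "Albert Dupont", "Bob Smith"]
print(suggest(names, "al"))  # rerun after each keystroke to refresh the list
```

Matching on any substring instead of the prefix would help more with uncertain spellings, at the cost of noisier suggestions.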

- Category Input Output
- Category Preparation
- Data Connectors
- Desktop Experience

Hello - does anyone know if it's possible to place text both above and below a table in the Report Text tool? (In this instance I'm using it to feed an automated email.)

I have some text that forms the body of the email, but I also want to add some text with an unsubscribe hyperlink below a table. As far as I can tell, there's no way to place the table in between text, only above/below/left/right of it.

Thanks in advance for the help,

Harry

- Category Input Output
- Category Reporting
- Data Connectors
- Desktop Experience

Hi,

Recently I was helping a client design a workflow to do a transformation. In the middle of the work, I felt a bit lost handling so many fields and thought it would be great if there were a feature that let me track the actions applied to each field along the workflow. It could be something like a canvas setting that users activate only when they want to.

And when it is activated, the workflow could become:

So it is easier to find the path of certain field along the whole workflow.

Or is there any method to achieve this at the moment?

Thanks.

Kenneth

- Category Input Output
- Category Transform
- Data Connectors
- Desktop Experience

Allow the Input Data tool to accept a variable length (i.e., a variable number of fields) per record. I have a file with waypoints of auto trips; each record has a variable number of points, e.g., lat1, lon1, lat2, lon2, etc. Right now I have to use another product to pad out all the records to the maximum number of fields in order to bring the file into Alteryx.
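As a stopgap, the padding step described above can be done with Python's standard `csv` module instead of a separate product. A sketch, assuming the file is comma-delimited with no header row; `pad_ragged_csv` is a hypothetical helper:

```python
import csv
import io


def pad_ragged_csv(text, fill=""):
    """Read comma-delimited text whose rows have different field counts and
    pad every row with `fill` up to the longest row's width."""
    rows = list(csv.reader(io.StringIO(text)))
    width = max((len(r) for r in rows), default=0)
    return [r + [fill] * (width - len(r)) for r in rows]


trips = "48.85,2.35\n48.85,2.35,48.86,2.34\n"
for row in pad_ragged_csv(trips):
    print(row)
```

The padded rows can then be written back out with `csv.writer` and read by any tool that expects a fixed number of fields.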

- Category Input Output
- Data Connectors

I have large denormalized tables as input, and each time I need to scroll through approximately 700+ fields to get a complete view of which fields are selected (even if I have selected only 10 out of 700 fields).

It would be helpful if, along with sorting on field name and field type, I could also sort on selected/deselected fields. Additionally, being able to sort by more than one option, i.e., sort within an already sorted list, would help too: for example, selected fields first, and within those, by field name.

I can get an idea of the selected fields from any tool further down the line (following the source transformation), but I would like a complete view of both selected and unselected fields so that I can pick or remove fields as the business requires.
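The requested ordering is a two-key sort: selection state first, field name second. A sketch in Python with a hypothetical `Field` record (not an Alteryx object):

```python
from dataclasses import dataclass


@dataclass
class Field:
    name: str
    type: str
    selected: bool


def sort_fields(fields):
    """Selected fields first, then alphabetically by name within each group.

    False sorts before True, so `not f.selected` puts selected fields first."""
    return sorted(fields, key=lambda f: (not f.selected, f.name.casefold()))


fields = [
    Field("region", "V_String", False),
    Field("sales", "Double", True),
    Field("customer_id", "Int64", True),
]
print([f.name for f in sort_fields(fields)])
```

Because the key is a tuple, adding a third criterion (for example field type) is just one more tuple element.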

- Category Input Output
- Category Preparation
- Data Connectors
- Desktop Experience

Can you add .tsv files as a file format in input/output tools in Alteryx? Can it also be recognized as an 'All Data Files' format?

Thanks!
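A .tsv file is delimited text in which fields are separated by tab characters instead of commas, so a CSV reader configured with a tab delimiter can parse it. For illustration, in Python (`read_tsv` is a hypothetical helper):

```python
import csv
import io


def read_tsv(text):
    """Parse tab-separated text with a header row into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text), delimiter="\t"))


print(read_tsv("id\tname\n1\tAsia\n2\tEMEA\n"))
```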

- Category Input Output
- Data Connectors


I have a module that queries a large amount of data from Redshift (~40 GB). It appears that the results are held in memory until the query completes; consequently, my machine, which has 30 GB of memory, crashes. This is a shame, because Alteryx is otherwise good at balancing memory and disk usage.

Idea: Create a way to offload the query results onto the HDD as they are received.
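The idea of spilling results to disk as they arrive can be sketched with the Python DB-API `fetchmany` method, which pulls a bounded batch of rows at a time. `export_in_batches` is a hypothetical helper, and `sqlite3` appears in the usage only as a stand-in database. One driver-specific caveat: with psycopg2 (often used for Redshift), an ordinary cursor downloads the whole result during `execute`, so a named, server-side cursor (`conn.cursor(name=...)`) is needed for batching to actually limit memory.

```python
import csv
import sqlite3


def export_in_batches(conn, query, out_path, batch_size=10_000):
    """Stream query results to a CSV file `batch_size` rows at a time,
    so at most one batch is held in memory. Returns the row count."""
    cur = conn.cursor()
    cur.execute(query)
    header = [d[0] for d in cur.description]
    total = 0
    with open(out_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        while True:
            batch = cur.fetchmany(batch_size)
            if not batch:
                break
            writer.writerows(batch)
            total += len(batch)
    return total


if __name__ == "__main__":
    demo = sqlite3.connect(":memory:")
    demo.execute("CREATE TABLE t (x INTEGER)")
    demo.executemany("INSERT INTO t VALUES (?)", [(i,) for i in range(25)])
    print(export_in_batches(demo, "SELECT x FROM t", "out.csv", batch_size=10))
```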

- Category Input Output
- Data Connectors

I want my save setting to stick in the Browse tool when I save output. I almost always save to Excel, but the default is to save the output as an Alteryx .yxdb. That is useless unless I am going to further slice and dice the data in Alteryx, which I'm not; that is why it is being output to Excel for the end user. Once a file type is chosen, let that file type stick for future exports, as most people save their output in the same file type each time. I find myself inadvertently saving as .yxdb and then needing to resave as .xlsx.

- Category Input Output
- Data Connectors

Let's say we have data for 3 different regions coming from an input file/DB: Asia, LA and EMEA region data are all in one file.

After the necessary transformations and checks, I now want to output my results per region.

Because of user access restrictions on the data, we have set up 3 different folders, one for each region.

So, Asia region output should go to the ASIA folder, and similarly for the rest of the regions.

Currently, there is no option to dynamically select and change the output folder path in the Output tool.

It would be great to be able to choose the output folder based on a field value.
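The requested behaviour amounts to grouping records by a field and routing each group to its own folder. A sketch in Python; `write_by_field`, the `Region` field, the folder names and `output.csv` are all hypothetical. An explicit value-to-folder map is used so that an unexpected region raises an error instead of silently creating a new folder:

```python
import csv
from collections import defaultdict
from pathlib import Path


def write_by_field(records, field, folder_map, base_dir, filename="output.csv"):
    """Write one CSV per distinct value of `field` into the folder mapped to it.

    folder_map: {field_value: folder_name}, e.g. {"Asia": "ASIA"}.
    A value missing from folder_map raises KeyError rather than guessing."""
    groups = defaultdict(list)
    for record in records:
        groups[record[field]].append(record)

    written = []
    for value, rows in groups.items():
        folder = Path(base_dir) / folder_map[value]
        folder.mkdir(parents=True, exist_ok=True)
        path = folder / filename
        with path.open("w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=list(rows[0]))
            writer.writeheader()
            writer.writerows(rows)
        written.append(path)
    return written
```

For example, `write_by_field(records, "Region", {"Asia": "ASIA", "LA": "LA", "EMEA": "EMEA"}, "/shared/output")` would write the Asia rows to `/shared/output/ASIA/output.csv`, and so on for the other regions.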

- Category Input Output
- Data Connectors

I had a requirement to read files with the same structure from different paths and different sheets, so I worked out a way to achieve it.

Step 1: List the files that need to be read from the different paths, and create an Excel file with each file name and its path.

Step 2: Create a batch macro, set its control parameter to the file path, and specify the correct template.

Step 3: Create a workflow, add a source input, and map the source file, i.e., the list of files with their paths.

Finally, all the data is merged together, and you can write it to your output as a single file. You can add further transformations if you want to apply additional business logic before writing the output.

Note: To process additional files, just add their file names and source paths to the list.
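Outside Alteryx, the same batch pattern (a control list of files driving one reader, with the outputs unioned) looks roughly like this. A minimal Python sketch in which CSV files stand in for the Excel sheets; `union_files`, `read_csv_rows` and the added `SourceFile` column are hypothetical:

```python
import csv


def read_csv_rows(path):
    """Read one file with a header row into a list of dicts."""
    with open(path, newline="") as f:
        return list(csv.DictReader(f))


def union_files(file_list, read_one):
    """Run `read_one` on every entry of the control list and stack the results,
    tagging each row with the file it came from."""
    combined = []
    for path in file_list:
        for row in read_one(path):
            row = dict(row)
            row["SourceFile"] = str(path)
            combined.append(row)
    return combined
```

As in the note above, processing another file only means appending its path to `file_list`; reading a specific sheet would need a different `read_one` that accepts, say, a (path, sheet) pair.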

- Category Input Output
- Data Connectors

For example, the Input Data tool can output a list of sheet names from Excel files.

Our client would like the Google Sheets Input tool to output a list of sheet names in the same way it can be done for Excel files.

- Category Input Output
- Data Connectors

In addition to the File Geodatabase, Esri has an enterprise version for database servers, in our case Oracle. We would like the option to output to an Oracle GDB just as we can to a normal Oracle DB. This would greatly assist our process flows.

- Category Input Output
- Data Connectors

Hi,

I was working on a project that uses the "Download" tool. I needed to measure the response time for each record precisely, so I set up a "timestamp" value using the DateTimeNow() function before the actual download. After the download was complete, I tried to measure the response time using the DateTimeDiff() function. However, with this method I was not able to get a precise (millisecond-level) performance reading, since the DateTime value gets rounded to the second.

It would be great to have a way to precisely measure the time each record takes to go through a tool or a set of tools, and to have that value included in the output file.
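For comparison, in a script a monotonic high-resolution clock such as Python's `time.perf_counter()` gives sub-millisecond per-record timings. A sketch in which `timed_map` is a hypothetical helper and `func` stands in for the download call:

```python
import time


def timed_map(func, items):
    """Call `func` on each item and record the elapsed wall-clock time in
    milliseconds alongside the result, ready to be written to an output file."""
    results = []
    for item in items:
        start = time.perf_counter()
        value = func(item)
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        results.append({"input": item, "output": value, "elapsed_ms": elapsed_ms})
    return results


for row in timed_map(lambda url: len(url), ["https://example.com/a", "https://example.com/b"]):
    print(f"{row['input']}: {row['elapsed_ms']:.3f} ms")
```

`perf_counter` is used rather than a wall-clock timestamp because it cannot jump backwards and has the highest resolution available on the platform; its absolute value is meaningless, so only differences are recorded.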

- Category Input Output
- Category Time Series
- Data Connectors
- Desktop Experience
