Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints (expected input image size) next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it necessarily needs RGB images).
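For the second and third points, here is a minimal Python sketch (standard library only) of what such a step could do. `balanced_split` down-samples every label to the size of the smallest one, then applies the same train/test ratio to each label; `gray_to_rgb` shows the usual workaround for single-channel images such as MNIST, which is to copy the gray value into all three channels. Both function names and the `(path, label)` and list-of-rows input formats are assumptions for illustration, not part of the Image Recognition tool.

```python
import random
from collections import defaultdict


def balanced_split(samples, test_ratio=0.2, seed=0):
    """Split (path, label) pairs so that every label keeps the same number of images.

    Each label is down-sampled to the size of the smallest label, then split
    into train/test with the same ratio.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    per_label = min(len(paths) for paths in by_label.values())
    n_test = int(per_label * test_ratio)

    train, test = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, per_label)  # random subset of equal size
        test.extend((p, label) for p in chosen[:n_test])
        train.extend((p, label) for p in chosen[n_test:])
    return train, test


def gray_to_rgb(pixels):
    """Copy each gray value into identical R, G and B channels."""
    return [[(v, v, v) for v in row] for row in pixels]
```

Down-sampling throws images away; oversampling the smaller labels or weighting the classes during training are common alternatives.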

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello all,

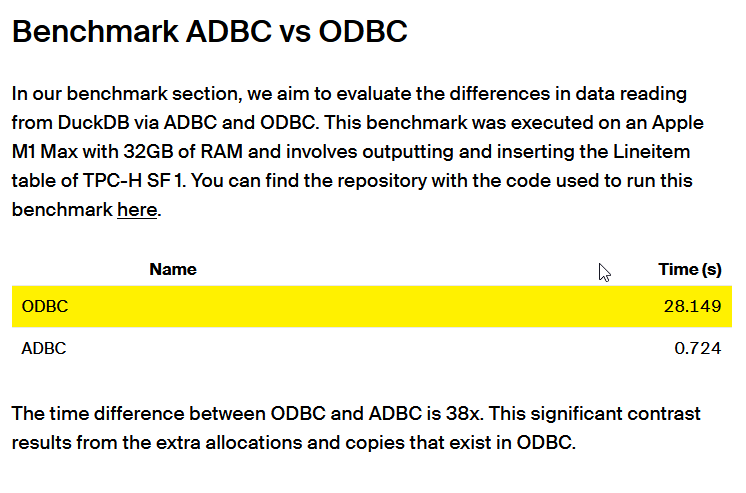

ADBC (Arrow Database Connectivity) is a database connection standard (like ODBC or JDBC), but designed specifically for columnar data (based on Apache Arrow), which suits column-store databases like DuckDB, ClickHouse, MonetDB, Vertica... This is exactly the kind of thing that could make Alteryx much faster.

More info: https://arrow.apache.org/blog/2023/01/05/introducing-arrow-adbc/

Here is a benchmark by the DuckDB team showing a 38x improvement:

https://duckdb.org/2023/08/04/adbc.html
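To illustrate what ADBC removes: with a row-oriented API (ODBC, JDBC, or Python's DB-API), results arrive as one tuple per row, so a columnar engine has to transpose them value by value. The minimal Python sketch below uses the standard-library `sqlite3` module only to show that transposition step; it does not use ADBC, and the `sales` table is hypothetical. An ADBC driver hands back Apache Arrow columnar data instead, so this loop is not needed.

```python
import sqlite3


def fetch_columnar(conn, sql):
    """Fetch a row-oriented result and transpose it into one list per column.

    With a row-oriented driver this loop touches every value of every row;
    a columnar (Arrow-based) driver would return the columns directly.
    """
    cur = conn.execute(sql)
    names = [d[0] for d in cur.description]
    columns = {name: [] for name in names}
    for row in cur:  # rows arrive one tuple at a time
        for name, value in zip(names, row):
            columns[name].append(value)
    return columns


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("north", 10), ("south", 20)])
cols = fetch_columnar(conn, "SELECT region, amount FROM sales ORDER BY region")
```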

Best regards,

Simon

- Category In Database

- Category Input Output

- New Request

Hello all,

It's really frustrating to see an "Alteryx field type" in the In-Database Select tool. It doesn't even make sense, since we are only manipulating data in a SQL database, where those types do not exist. What we should see is the SQL field type.

Best regards,

Simon

- Category In Database

- Enhancement

Hello all,

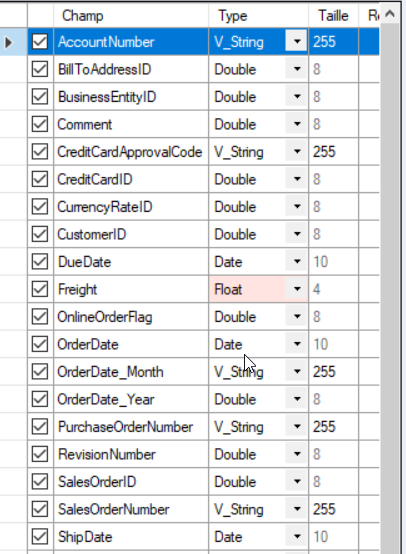

When using In-Database tools, all you get in Select or Formula are the Alteryx field types (V_String, etc.).

However, since you usually end up writing to the database, the Alteryx field types are at some point converted to real SQL field types (like VARCHAR). But how is it done? As of today, it's a total black box. Some documentation would be appreciated.
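Until such documentation exists, one workaround is to read the database catalog after an In-DB write to see which SQL types were actually created. The sketch below uses SQLite's `PRAGMA table_info` as a stand-in (on many other databases the equivalent is `INFORMATION_SCHEMA.COLUMNS`); the `customers` table is hypothetical, and this only shows the result of the conversion, not the rules Alteryx applies.

```python
import sqlite3


def column_types(conn, table):
    """Return {column_name: declared SQL type} as recorded in the catalog."""
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
    return {row[1]: row[2] for row in conn.execute(f'PRAGMA table_info("{table}")')}


conn = sqlite3.connect(":memory:")
# Hypothetical target table, standing in for one written by an In-DB workflow.
conn.execute("CREATE TABLE customers (id INTEGER, name VARCHAR(254), balance DOUBLE)")
print(column_types(conn, "customers"))
```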

Best regards,

Simon

- Category In Database

- Documentation

- New Request

Hello all,

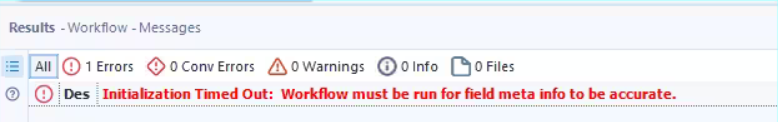

Sometimes, when retrieving your tables' metadata takes too long, you get this message:

Initialization Timed Out: Workflow must be run for field meta info to be accurate.

From what I understand, the timeout value is driven by Alteryx and the source system. However, in some of my cases the long retrieval time is "normal", and the timeout really hurts the user experience.

So, I would like the ability to change the default timeout value in the settings.
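To make the request concrete, here is a minimal Python sketch of a metadata fetch whose timeout comes from a user setting instead of a fixed default. `METADATA_TIMEOUT_SECONDS`, `fetch_metadata` and `slow_catalog_query` are hypothetical names; this is not how Designer is implemented, only an illustration of the behavior being asked for.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

# Hypothetical user setting: Designer does not expose this value today.
METADATA_TIMEOUT_SECONDS = 30.0


def fetch_metadata(fetch, timeout_s=METADATA_TIMEOUT_SECONDS):
    """Run `fetch` (a metadata query) and give up after `timeout_s` seconds.

    Returns the query result, or None when the timeout is hit (the case in
    which a "timed out" message would be shown).
    """
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(fetch)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        return None
    finally:
        # Do not block the caller on a query that is still running.
        pool.shutdown(wait=False)


def slow_catalog_query():
    """Stand-in for a catalog query that is slow but legitimate."""
    time.sleep(0.5)
    return ["id", "name", "created_at"]
```

With a short timeout the slow query is abandoned (`fetch_metadata(slow_catalog_query, timeout_s=0.1)` returns `None`); raising the setting lets the same query complete. Note that `shutdown(wait=False)` only stops the caller from waiting: the abandoned query thread still runs to completion in the background.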

Best regards,

Simon

- Category In Database

- Category Input Output

- New Request

- User Settings

Hello all,

Apache Doris (https://doris.apache.org/) is a modern, ambitious data warehouse. It's probably the next big thing.

You can read the full documentation here: https://doris.apache.org/docs/get-starting/what-is-apache-doris. To sum it up, it aims to be THE reference solution for OLAP, claiming even better performance than ClickHouse, DuckDB or MonetDB. Even benchmarks from the ClickHouse team seem to agree.

Best regards,

Simon

- AMP Engine

- Category In Database

- Category Input Output

- New Request

Our company needs to connect to a new data source in Athena. We have been able to establish a connection using the standard input functionality; however, the connection is so slow that it is unusable. We need Alteryx to build an In-Database option for Athena so that we can link our data lake to Alteryx.

- Category In Database

- Data Connectors

Hello all,

A few weeks ago, Alteryx announced In-DB support for Google BigQuery (GBQ). This is an awesome idea; however, to make it work you have to use OAuth2 authentication, which means the GBQ API must be enabled. As of now, it is possible to connect to GBQ with the Simba ODBC driver. My idea is to support In-DB with the same connection/authentication method we have today with the Simba ODBC driver for Google BigQuery. Enabling the API is not easy for IT in big organizations, given the number of GBQ projects and the need to enable the API for each application. Extending the functionality to ODBC would make this an awesome solution.

Thank you for voting

Albert

- Category In Database

- Enhancement

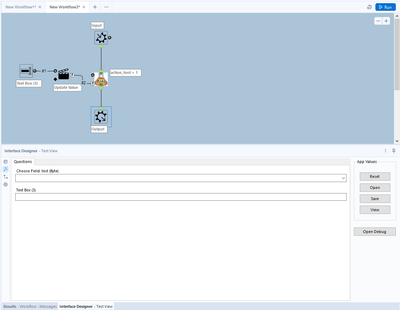

When building any type of macro, it's important to test its functionality with a debug. This works with normal tools; however, a bug prevents the user from debugging In-DB macros that use either of the following standard Alteryx tools:

- Macro Input In-DB

- Macro Output In-DB

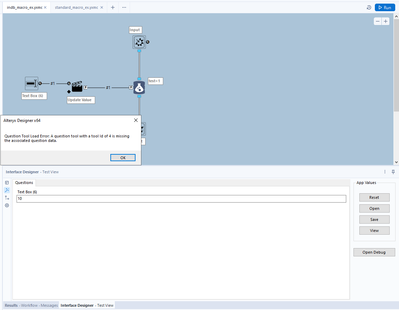

If either of these tools is included in the macro you are building, an error message appears and the debug cannot be opened.

Error message: Question Tool Load Error: A question tool with a tool id of XXX is missing the associated question data.

Of course, the Macro Input and Macro Output tools do not require any associated Action or Question tool, so this is a bug. A user pointed out the XML issue almost 3 years ago here:

In summary: "It appears that the tool itself inserts a hidden Question attribute into the XML which can also be seen in Workflow Configuration"

Source:

Examples:

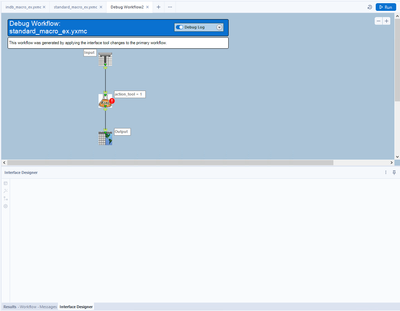

A normal macro, using standard tools:

After debugging a standard macro, the Macro Input/Output tools correctly change to a Text Input and a Browse tool. This allows the macro author to test the macro.

However, when trying the same thing with In-DB tools in a macro, an error message appears:

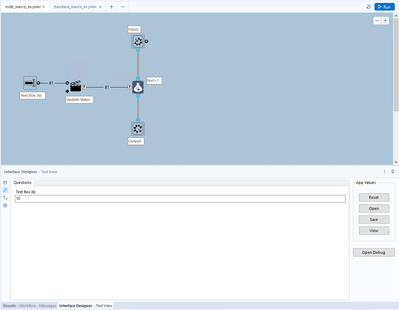

In-DB macro 1:

In-DB Macro error message (after clicking "Open Debug"):

- Category In Database

- Enhancement

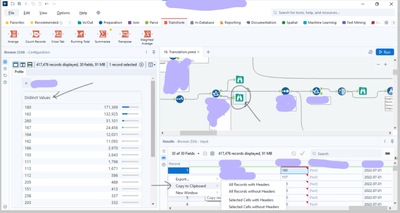

It would be beneficial to add the actions available in the Results message window to the Browse tool for distinct values, for example the copy options.

- Category In Database

- Enhancement

- UX

Hello all,

According to Wikipedia (https://en.wikipedia.org/wiki/Materialized_view):

In computing, a materialized view is a database object that contains the results of a query. For example, it may be a local copy of data located remotely, or may be a subset of the rows and/or columns of a table or join result, or may be a summary using an aggregate function.

The process of setting up a materialized view is sometimes called materialization.[1] This is a form of caching the results of a query, similar to memoization of the value of a function in functional languages, and it is sometimes described as a form of precomputation.[2][3] As with other forms of precomputation, database users typically use materialized views for performance reasons, i.e. as a form of optimization.

So, I would like to be able to create materialized views in Alteryx, for obvious performance reasons in some use cases.

This is not a duplicate of https://community.alteryx.com/t5/Alteryx-Designer-Desktop-Ideas/In-DB-Create-View/idi-p/157886
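SQLite has no native materialized views, so the minimal Python sketch below emulates one with `CREATE TABLE ... AS SELECT` plus a full refresh, just to show the caching behavior described above: the stored result stays stale until it is refreshed. Engines that support them natively (PostgreSQL, for instance, with `CREATE MATERIALIZED VIEW` and `REFRESH MATERIALIZED VIEW`) handle this themselves. The table and function names are hypothetical.

```python
import sqlite3


def create_materialized_view(conn, name, query):
    """Materialize `query` into a physical table called `name`."""
    conn.execute(f'DROP TABLE IF EXISTS "{name}"')
    conn.execute(f'CREATE TABLE "{name}" AS {query}')


def refresh_materialized_view(conn, name, query):
    """Full refresh: recompute the stored result from the base tables."""
    create_materialized_view(conn, name, query)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("north", 10.0), ("north", 5.0), ("south", 7.5)],
)

SUMMARY_SQL = "SELECT region, SUM(amount) AS total FROM orders GROUP BY region"
create_materialized_view(conn, "mv_sales_by_region", SUMMARY_SQL)

# New data arrives: the materialized copy is stale until it is refreshed.
conn.execute("INSERT INTO orders VALUES ('south', 2.5)")
stale = conn.execute(
    "SELECT total FROM mv_sales_by_region WHERE region = 'south'"
).fetchone()[0]
refresh_materialized_view(conn, "mv_sales_by_region", SUMMARY_SQL)
fresh = conn.execute(
    "SELECT total FROM mv_sales_by_region WHERE region = 'south'"
).fetchone()[0]
```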

Best regards,

Simon

- Category In Database

- New Request

Hello all,

According to Wikipedia (https://en.wikipedia.org/wiki/Embedded_database):

An embedded database system is a database management system (DBMS) which is tightly integrated with an application software; it is embedded in the application.

It's often a single file/DLL that an application uses internally, without the user having to connect to it (or at least configure it): everything is handled inside the application. This makes it highly portable.

Why does it matter?

As of today, there is not a single example of an In-Database workflow, because all the supported databases require the user to:

1/ install an ODBC driver (most of the time, he won't have the rights to do so)

2/ configure an ODBC connection (sometimes, he doesn't have the rights to do that either)

3/ configure a connection in Alteryx (OK, this one he can do)

So it requires IT action, which can take a long time (in many organizations, it takes several weeks!). And even with all of that, users must be granted privileges to access the database, and the customer needs to develop its own examples and write its own specific documentation.

Well, this is not efficient.

What I suggest is that Alteryx use an embedded database for training material and One Tool Examples. SQLite seems good, but a more analytics-oriented one (like DuckDB) might be more efficient.

The requirements are, I think, the following:

- Open source and free

- Fast

- SQL compliant

- Bulk load capability
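Python's built-in `sqlite3` module is a convenient illustration of the point: the SQLite engine ships with the standard library, so an example workflow could create, bulk-load and query a database without any driver, DSN or IT ticket. The table and data below are made up for the example, and this says nothing about how Alteryx would package such a database.

```python
import sqlite3

# No ODBC driver, no DSN, no server: the engine runs inside the process.
conn = sqlite3.connect(":memory:")  # or a single .db file shipped with an example
conn.execute(
    "CREATE TABLE sample_sales (store_id INTEGER, month TEXT, revenue REAL)"
)

rows = [
    (1, "2024-01", 1200.0),
    (1, "2024-02", 1350.0),
    (2, "2024-01", 980.0),
]
with conn:  # the context manager commits the whole bulk insert at once
    conn.executemany("INSERT INTO sample_sales VALUES (?, ?, ?)", rows)

top_stores = conn.execute(
    "SELECT store_id, SUM(revenue) FROM sample_sales "
    "GROUP BY store_id ORDER BY 2 DESC"
).fetchall()
```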

Best regards,

Simon

- Category In Database

- New Request

Hello all,

As of now, there are two very distinct kinds of connections:

- in-memory alias

- in-database alias

Every single time I use an in-database alias, I have to create the same one for in-memory, since some operations cannot be performed in-database (such as Pre-SQL statements or Interface tools).

What this means for us:

- more complex setup operations, training and tests

- inefficient workflows that have to deal with two kinds of aliases.

What I propose:

- a single "connection alias" that can be used for either in-db or in-memory,

- one place to configure it,

- in-db or in-memory mode depending on the tools you use.
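A minimal sketch of the proposed model, with every name hypothetical (none of this is an existing Alteryx setting or API): one alias holds the connection details, and the tool that uses it decides whether it runs in-database or in-memory. The set of In-DB tools lists a few real In-DB tool names purely for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionAlias:
    """A single alias, configured in one place (hypothetical model)."""

    name: str
    connection_string: str


# A few In-DB tool names, for illustration only; a real implementation would
# ask each tool which mode it needs instead of keeping a hard-coded list.
IN_DB_TOOLS = {"Connect In-DB", "Filter In-DB", "Summarize In-DB", "Write Data In-DB"}


def resolve(alias: ConnectionAlias, tool: str) -> tuple[str, str]:
    """Return (mode, connection string): the tool, not the alias, picks the mode."""
    mode = "in-db" if tool in IN_DB_TOOLS else "in-memory"
    return mode, alias.connection_string


prod = ConnectionAlias(name="dwh_prod", connection_string="DSN=dwh_prod")
```

With this design, `resolve(prod, "Filter In-DB")` and `resolve(prod, "Drop Down")` share the same alias and only differ in mode.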

Best regards,

Simon

- Category In Database

- Category Input Output

- New Request

- User Settings

Hello all,

As of today, we can easily copy or duplicate a table with the In-Database tools. This is really useful when you want production data in a development environment.

But can we really?

Short answer: no, not in these cases:

- partitions

- any constraints, such as primary/foreign keys

And even if these ideas were implemented, it would still mean setting these parameters manually.

So my proposal is simply a "Clone Table" tool that would clone the table from its SHOW CREATE TABLE statement and just let you specify the destination path (database.table).
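SQLite has no SHOW CREATE TABLE, but it stores each table's original CREATE statement in `sqlite_master`, so it can illustrate the idea: rebuild the destination from the source's own DDL so that constraints come along automatically. `clone_table` and the sample tables are hypothetical; partitions don't exist in SQLite, and indexes and triggers are separate objects that this sketch does not copy. On MySQL or ClickHouse, the DDL would come from `SHOW CREATE TABLE` instead.

```python
import re
import sqlite3


def clone_table(conn, source, destination, copy_rows=True):
    """Recreate `source` as `destination` from its stored DDL, optionally copying rows."""
    row = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?", (source,)
    ).fetchone()
    if row is None:
        raise ValueError(f"table not found: {source}")
    # Replace only the table name; column definitions and constraints are kept.
    # Simple names only: quoted names with spaces or IF NOT EXISTS are not handled.
    new_ddl = re.sub(
        r'^CREATE TABLE\s+"?\w+"?',
        f'CREATE TABLE "{destination}"',
        row[0],
        count=1,
        flags=re.IGNORECASE,
    )
    conn.execute(new_ddl)
    if copy_rows:
        conn.execute(f'INSERT INTO "{destination}" SELECT * FROM "{source}"')


conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, "
    "customer_id INTEGER REFERENCES customers(id), amount REAL CHECK (amount >= 0))"
)
conn.execute("INSERT INTO customers VALUES (1, 'Acme')")
conn.execute("INSERT INTO orders VALUES (10, 1, 99.5)")
clone_table(conn, "orders", "orders_dev")
```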

Best regards,

Simon

- AMP Engine

- Category In Database

- Data Connectors

- Engine

With data sharing, you can share live data with relative security and ease across Amazon Redshift clusters, AWS accounts, or AWS Regions for read purposes.

Data sharing can improve the agility of your organization. It does this by giving you instant, granular, and high-performance access to data across Amazon Redshift clusters without the need to copy or move it manually.

AWS data sharing does not work in Alteryx version 2020.4.

We would like to know whether Alteryx supports Redshift data sharing.

It uses the same Redshift ODBC or Simba ODBC drivers; the only difference is that the data is shared across clusters. Earlier, we saw a limitation on the Alteryx side (it could not read datashare objects), so we wanted to check whether this has been resolved in newer versions of Alteryx. Reference link:

https://docs.aws.amazon.com/redshift/latest/dg/datashare-overview.html

- Category In Database

- Data Connectors

There should be an option for Alteryx to intelligently convert an existing SQL query or complex logic into a high-level Alteryx workflow, with suggested tools that developers can then modify.

For example, Salesforce Einstein Analytics has an option to convert an existing dataflow (the traditional way of performing data prep) into a recipe (a premium version of a dataflow with advanced features) in a single click. The user can then make additional modifications/enhancements on top of it.
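As a very rough illustration of the first step such a feature would need, the Python sketch below scans a query for SQL clauses and suggests a standard Alteryx tool for each, in SQL's logical evaluation order. The clause-to-tool mapping is an assumption made for the example, and keyword matching is far from a real SQL parser (it ignores subqueries, CTEs and aliases).

```python
import re

# Illustrative mapping from SQL clauses to standard Alteryx tools, listed in
# SQL's logical evaluation order (FROM, JOIN, WHERE, GROUP BY, SELECT, ORDER BY).
CLAUSE_TO_TOOL = [
    (r"\bFROM\b", "Input Data"),
    (r"\bJOIN\b", "Join"),
    (r"\bWHERE\b", "Filter"),
    (r"\bGROUP\s+BY\b", "Summarize"),
    (r"\bSELECT\b", "Select"),
    (r"\bORDER\s+BY\b", "Sort"),
]


def suggest_tools(sql):
    """Return the tools suggested for `sql`, in the order they would be chained."""
    return [
        tool
        for pattern, tool in CLAUSE_TO_TOOL
        if re.search(pattern, sql, flags=re.IGNORECASE)
    ]
```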

- Category In Database

- Data Connectors

Introduce CTEs (common table expressions) and temp tables when reading from SQL databases into Alteryx. I have faced use cases where I need to bring in a table built from multiple source tables based on a certain delta condition. However, since these SQL queries tend to be complex, I want an option to wrap the logic in a CTE and then use that CTE as the input for In-DB processing in Alteryx workflows.
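A minimal sketch of the pattern, using SQLite (which supports `WITH` clauses) as a stand-in: the CTE collects the IDs that changed since the last load across two source tables, and the final `SELECT` is what an In-DB workflow would consume. Table names, columns and the `:since` parameter are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER, name TEXT, updated_at TEXT);
    CREATE TABLE orders (customer_id INTEGER, amount REAL, updated_at TEXT);
    INSERT INTO customers VALUES (1, 'Acme', '2024-01-01'), (2, 'Globex', '2024-03-05');
    INSERT INTO orders VALUES (1, 50.0, '2024-03-02'), (2, 20.0, '2024-01-10');
""")

# The CTE gathers the delta from several source tables; the final SELECT is
# the single result set handed downstream.
DELTA_QUERY = """
WITH changed_ids AS (
    SELECT id AS customer_id FROM customers WHERE updated_at > :since
    UNION
    SELECT customer_id FROM orders WHERE updated_at > :since
)
SELECT c.id, c.name
FROM customers AS c
JOIN changed_ids AS d ON d.customer_id = c.id
ORDER BY c.id
"""


def changed_customers(conn, since):
    """Return (id, name) for customers touched since `since` (ISO date string)."""
    return conn.execute(DELTA_QUERY, {"since": since}).fetchall()
```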

- Category In Database

- Data Connectors

Hello,

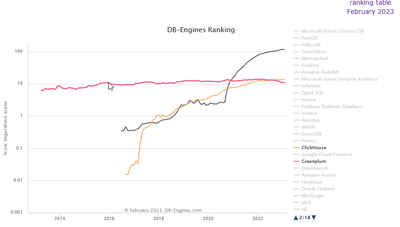

Just like MonetDB or Vertica, ClickHouse is a column-store database, claiming to be the fastest in the world. It's available in the cloud (like Snowflake) and on Linux and macOS (where it's free and open source). It's also very well ranked among analytics databases (https://db-engines.com/en/system/ClickHouse), and supporting it would be a good differentiator from competitors.

https://clickhouse.com/

It has become more popular than Greenplum, which is already supported (black: Snowflake, red: Greenplum, orange: ClickHouse).

Best regards,

Simon

- AMP Engine

- Category In Database

- Data Connectors

- Engine

Hello all,

MonetDB is a very lightweight, fast, open-source database, available here:

https://www.monetdb.org/

I really enjoy it: it works pretty well with Tableau, and it's a good introduction to column-store concepts and analytics with SQL.

It has also gained a lot of popularity in recent years:

https://db-engines.com/en/ranking_trend/system/MonetDB

Sadly, Alteryx does not support it yet.

Best regards

- AMP Engine

- Category In Database

- Data Connectors

- Engine

Since we can use Snowflake UDFs in Alteryx, when do you think Snowflake stored procedures will be available?

- Category In Database

- Data Connectors

Hello all,

As of today, you can populate the Drop Down tool (Interface category) with a query run over an in-memory connection. I would really appreciate the ability to use an in-db connection instead.

Why?

Today, it means managing two connections instead of one, finding ways to manage both of them on Server, etc. Simplicity is key.
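Whatever the connection type, the values behind such a drop-down typically come from a `SELECT DISTINCT` over one column; the request is simply to send that query through the In-DB connection rather than a separate in-memory one. In the minimal sketch below, SQLite stands in for the database and `dropdown_choices` and the `stores` table are hypothetical; identifiers are interpolated directly, so only trusted table and column names should be passed.

```python
import sqlite3


def dropdown_choices(conn, table, column):
    """Return the sorted, distinct, non-null values of `column` for a drop-down list."""
    sql = (
        f'SELECT DISTINCT "{column}" FROM "{table}" '
        f'WHERE "{column}" IS NOT NULL ORDER BY 1'
    )
    return [value for (value,) in conn.execute(sql)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stores (store_id INTEGER, region TEXT)")
conn.executemany(
    "INSERT INTO stores VALUES (?, ?)",
    [(1, "West"), (2, "East"), (3, "West"), (4, None)],
)
choices = dropdown_choices(conn, "stores", "region")
```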

Best regards,

Simon

- Category In Database

- Category Interface

- Data Connectors

- Desktop Experience

- New Idea 206

- Accepting Votes 1,838

- Comments Requested 25

- Under Review 149

- Accepted 55

- Ongoing 7

- Coming Soon 8

- Implemented 473

- Not Planned 123

- Revisit 68

- Partner Dependent 4

- Inactive 674

- Admin Settings 19

- AMP Engine 27

- API 11

- API SDK 217

- Category Address 13

- Category Apps 111

- Category Behavior Analysis 5

- Category Calgary 21

- Category Connectors 239

- Category Data Investigation 75

- Category Demographic Analysis 2

- Category Developer 206

- Category Documentation 77

- Category In Database 212

- Category Input Output 631

- Category Interface 236

- Category Join 101

- Category Machine Learning 3

- Category Macros 153

- Category Parse 74

- Category Predictive 76

- Category Preparation 384

- Category Prescriptive 1

- Category Reporting 198

- Category Spatial 80

- Category Text Mining 23

- Category Time Series 22

- Category Transform 87

- Configuration 1

- Data Connectors 948

- Desktop Experience 1,492

- Documentation 64

- Engine 121

- Enhancement 274

- Feature Request 212

- General 307

- General Suggestion 4

- Insights Dataset 2

- Installation 24

- Licenses and Activation 15

- Licensing 10

- Localization 8

- Location Intelligence 79

- Machine Learning 13

- New Request 176

- New Tool 32

- Permissions 1

- Runtime 28

- Scheduler 21

- SDK 10

- Setup & Configuration 58

- Tool Improvement 210

- User Experience Design 165

- User Settings 73

- UX 220

- XML 7


- vijayguru on: YXDB SQL Tool to fetch the required data

- Fabrice_P on: Hide/Unhide password button

- cjaneczko on: Adjustable Delay for Control Containers

- Watermark on: Dynamic Input: Check box to include a field with D...

- aatalai on: cross tab special characters

- KamenRider on: Expand Character Limit of Email Fields to >254

- TimN on: When activate license key, display more informatio...

- simonaubert_bd on: Supporting QVDs

- simonaubert_bd on: In database : documentation for SQL field types ve...

- guth05 on: Search for Tool ID within a workflow
