Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the input dimension constraints next to each of the pre-trained models,

> by adding a real tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
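A balanced split like the one requested could work roughly as follows. This is only a minimal Python sketch, not part of the Image Recognition tool. It assumes the training data is available as hypothetical `(path, label)` pairs, downsamples every label to the size of the smallest one, and then divides each label's images between training and validation.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Hypothetical helper: split (path, label) pairs so that every label
    contributes the same number of images to training and to validation."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # Downsample every label to the size of the smallest label.
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, valid = [], []
    for label in sorted(by_label):
        paths = list(by_label[label])
        rng.shuffle(paths)
        chosen = paths[:per_label]
        train.extend((p, label) for p in chosen[:n_train])
        valid.extend((p, label) for p in chosen[n_train:])
    return train, valid


# Usage: 10 "cat" and 4 "dog" images become 3 + 3 for training and 1 + 1 for validation.
samples = [(f"cat_{i}.png", "cat") for i in range(10)] + [(f"dog_{i}.png", "dog") for i in range(4)]
train, valid = balanced_split(samples)
```

Downsampling throws away some images from the larger classes; oversampling the smaller classes would be the other common choice.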

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


It would be nice if Alteryx could run a Teradata stored procedure and/or macro that accepts input parameters. This ability appears to exist for MS SQL Server. It seems odd that I can issue a SQL statement to the database via a pre- or post-processing command on an Input or Output tool, but can't call a stored procedure or execute a macro. The only way we have found to call a stored procedure is to create a Teradata BTEQ script and execute it with the Run Command tool. It works, but it's a bit messy and doesn't quite fit the no-code theme of Alteryx.

- API SDK
- Category Developer
- Category In Database
- Data Connectors

While In-DB tools are very helpful and cut down the time needed to write complex SQL, some steps are faster to do by writing SQL directly, such as window functions (OVER (PARTITION BY ...)). In Alteryx, we need to create multiple joins and summaries to perform a window function. It would be immensely helpful to have a SQL editor tool for In-DB workflows where we can edit the SQL code at any point in the workflow or, even better, an "edit" function on every In-DB tool that lets us customize the generated SQL code before sending it to the next tool.

This will cut down the time immensely and streamline the workflow to make Alteryx a true contender for the ETL solution space.
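To illustrate the point, the sketch below uses Python's built-in `sqlite3` module (window functions need the bundled SQLite to be 3.25 or later) and a hypothetical `sales` table. A single `SUM(...) OVER (PARTITION BY ...)` returns the same rows as the summarize-then-join-back pattern that an In-DB workflow currently has to build.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical example table.
conn.executescript("""
CREATE TABLE sales (region TEXT, amount INTEGER);
INSERT INTO sales VALUES ('north', 10), ('north', 30), ('south', 5);
""")

# One pass: a window function adds the per-region total to every row.
window_sql = """
SELECT region, amount,
       SUM(amount) OVER (PARTITION BY region) AS region_total
FROM sales
ORDER BY region, amount
"""

# The In-DB tool equivalent today: Summarize, then Join the totals back.
join_sql = """
SELECT s.region, s.amount, t.region_total
FROM sales AS s
JOIN (SELECT region, SUM(amount) AS region_total
      FROM sales
      GROUP BY region) AS t
  ON s.region = t.region
ORDER BY s.region, s.amount
"""

window_rows = conn.execute(window_sql).fetchall()
join_rows = conn.execute(join_sql).fetchall()
```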

- API SDK
- Category Developer
- Category In Database
- Category Transform

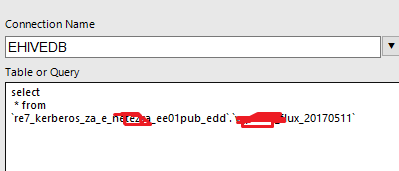

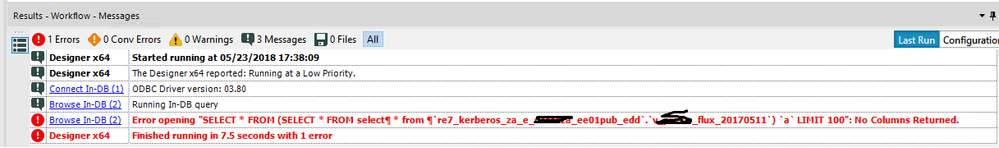

Use case: many of our users copy and paste a formatted query into Alteryx tools such as Connect In-DB or Input Data (especially to reduce the volume of data retrieved).

However, some of the formatting, such as carriage returns, does not work. For example:

select * from `re7_kerberos_za_e_*******_ee01pub_edd`.`v_*******_flux_20170511`
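A possible stopgap until line breaks are handled is to collapse the pasted query onto one line first. This is a minimal Python sketch of a hypothetical helper, not an Alteryx feature; it assumes there are no line breaks inside string literals and refuses queries containing `--`, because a line comment would swallow the rest of a flattened query.

```python
import re


def flatten_query(sql: str) -> str:
    """Hypothetical helper: join a multi-line query into a single line.

    Assumes no line breaks inside string literals. Rejects any '--'
    (conservatively, even inside literals) rather than risk turning the
    rest of the query into a comment.
    """
    if "--" in sql:
        raise ValueError("query contains '--'; convert line comments to /* */ first")
    # Replace each line break (CRLF, CR or LF) and the indentation around it with one space.
    return re.sub(r"[ \t]*(?:\r\n|\r|\n)[ \t]*", " ", sql).strip()


# Usage: a query copied from an editor with Windows line endings.
flat = flatten_query("select *\r\nfrom t\r\n  where x = 1\n")
```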

- Category In Database
- Category Input Output
- Category Preparation
- Data Connectors

The "Browse HDFS" dialog for the Spark Direct connection does not allow specifying "ORC" as the File Format. Could you please add this feature?

- Category In Database
- Category Input Output
- Data Connectors

The SQL compiler within the Input tool inserts a space inside some operators, causing a SQL syntax error.

E.g.:

SELECT * FROM [DataSource] WHERE [Dimension] != 'abc'

Becomes

SELECT * FROM [DataSource] WHERE [Dimension] ! = 'abc'

The compiler should not add a space in this instance as it violates the syntax rules and triggers an error.
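Until the compiler is fixed, one workaround is to write the ANSI-standard inequality operator `<>` instead of `!=`. Since `<>` contains no `!`, it presumably avoids the problem, though that is an assumption worth testing on your own setup. The Python sketch below (a hypothetical helper, not part of Alteryx) performs the rewrite while leaving single-quoted string literals untouched.

```python
def use_ansi_not_equal(sql: str) -> str:
    """Hypothetical helper: rewrite '!=' as '<>' outside single-quoted literals."""
    out = []
    in_string = False
    i = 0
    while i < len(sql):
        ch = sql[i]
        if ch == "'":
            # A doubled '' escape toggles the state twice, so it stays correct.
            in_string = not in_string
            out.append(ch)
            i += 1
        elif not in_string and sql.startswith("!=", i):
            out.append("<>")
            i += 2
        else:
            out.append(ch)
            i += 1
    return "".join(out)


fixed = use_ansi_not_equal("SELECT * FROM [DataSource] WHERE [Dimension] != 'abc'")
```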

- Category In Database
- Data Connectors
- Enhancement

Hi there,

When you connect to SQL Server in Alteryx 11 using the native SQL connection (thank you for adding this, by the way; it's very helpful), the database list is unsorted. This makes it difficult to find the right database on servers hosting dozens (or hundreds) of separate databases.

Could you sort this list alphabetically?

- Category In Database
- Data Connectors

Please create an In-DB version of the Dynamic Rename tool, or at least suggest an alternative.

Renaming 45+ columns manually is just insane.

In-DB tools are very fast and there isn't any need to stream out or stream in, so I hope to do this ETL in-DB.
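As a possible interim alternative: Connect In-DB accepts a custom query, so a rename can be expressed as column aliases, and that query can be generated rather than typed. The Python sketch below is hypothetical; it assumes the database accepts ANSI double-quoted identifiers (SQL Server does when `QUOTED_IDENTIFIER` is ON) and that the table name is trusted.

```python
def build_rename_query(table, renames):
    """Hypothetical helper: build a SELECT that renames columns via aliases.

    `renames` maps existing column names to new names. Identifiers are quoted
    with ANSI double quotes, with embedded double quotes doubled.
    """
    def quote(name):
        return '"' + name.replace('"', '""') + '"'

    select_list = ",\n       ".join(
        f"{quote(old)} AS {quote(new)}" for old, new in renames.items()
    )
    return f"SELECT {select_list}\nFROM {table}"


# Usage: derive the new names with a rule instead of typing 45+ of them by hand.
columns = ["cust_id", "order_amt", "order_dt"]
renames = {c: c.replace("_", " ").title() for c in columns}
query = build_rename_query("sales", renames)
```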

- Category In Database
- Data Connectors

Preface: I have only used the in-DB tools with Teradata so I am unsure if this applies to other supported databases.

When building a fairly sophisticated workflow with In-DB tools, the workflow may sometimes fail because the underlying queries run up against CPU/memory limits. This is most common when doing several joins back to back, as Alteryx sends these as one big query with various nested subqueries. When working with datasets in the hundreds of millions or billions of records, running everything as one huge query can be extremely taxing for the database. (It is possible to get around this by using In-DB write-out to a temporary table as an intermediate step in the workflow.)

When a routine hits an In-DB resource limit and the database kills the query, Alteryx immediately fails the workflow run. Any "temporary" tables Alteryx creates are in reality permanent tables that Alteryx normally drops at the end of a successful run. If the run does not end successfully because it hit a resource limit, these "temporary" (permanent) tables are not dropped. I only noticed this after building out a workflow and running up against a few resource limits: I then started getting database out-of-space errors. On investigating, I found that all the previously created "temporary" tables were still there, taking up many TBs of space.

My proposed solution is for Alteryx's In-DB tools to drop any "temporary" tables they have created when a run ends, regardless of whether the entire module finished successfully.
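The proposed behaviour amounts to a try/finally around the run. Below is a minimal Python sketch with the built-in `sqlite3` module standing in for the database; the function and table names are hypothetical, and this is not how Alteryx actually manages In-DB temporary tables.

```python
import sqlite3


def run_with_cleanup(conn, steps):
    """Hypothetical helper: materialize each (table_name, select_sql) step as an
    intermediate table and drop every table created, even if a step fails.
    Table names are interpolated directly, so they must be trusted."""
    created = []
    try:
        for name, select_sql in steps:
            conn.execute(f"CREATE TABLE {name} AS {select_sql}")
            created.append(name)
        final_table = steps[-1][0]
        return conn.execute(f"SELECT * FROM {final_table}").fetchall()
    finally:
        # Runs on success and on failure alike.
        for name in reversed(created):
            conn.execute(f"DROP TABLE IF EXISTS {name}")


# Usage: one successful run, then one whose second step fails.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src (x INTEGER)")
conn.executemany("INSERT INTO src VALUES (?)", [(1,), (2,), (3,)])
total = run_with_cleanup(conn, [("tmp_a", "SELECT x FROM src WHERE x > 1"),
                                ("tmp_b", "SELECT SUM(x) AS s FROM tmp_a")])
try:
    run_with_cleanup(conn, [("tmp_c", "SELECT x FROM src"),
                            ("tmp_d", "SELECT no_such_col FROM src")])
except sqlite3.OperationalError:
    pass  # the error still reaches the caller; tmp_c was already dropped
leftover = sorted(row[0] for row in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table'"))
```

The error is still reported to the caller, so a failed run is not hidden; only the intermediate tables are cleaned up.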

Thanks,

Ryan

- Category Connectors
- Category In Database
- Data Connectors
- Engine

Hi Alteryx Team!

Think an easy/useful tool enhancement would be to add a search bar on the "Tables" tab in the "Choose Table or Specify Query" popup when connecting to an In-DB source.

Currently, you have to scroll through all of your tables to find the one you're looking for. It would be a HUGE help and time saver if I could just search for a keyword I know is in my table name.

Thanks!

- Category Connectors
- Category In Database
- Data Connectors

Hi Team,

Can we use In-DB tools to connect to Sybase IQ to optimize data extraction and transformation?

- Category In Database
- Data Connectors

Hi,

We use the In-DB tools heavily to join and filter our database tables before extracting (which seems logical). To do this dynamically, we have to use Dynamic Input In-DB, which accepts a kind of parameter for dates, calculated locally and easily, or even based on a parameter table in Excel or elsewhere. It would be great to be able to dynamically connect a standard (non-In-DB) tool to supply parameters for filters or for Connect In-DB. The problem with Dynamic Input In-DB is that you lose the code-free aspect, and it can be harder to maintain for non-SQL users who are used to writing only simple queries.

You could say that an analytic application could do the trick, or that we could develop a macro to do so, but that would be complicated with hundreds of tables.
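What such a tool would do behind the scenes can be sketched as turning rows of a parameter table into a filtered query with bound parameters. This is a hypothetical Python helper, not an Alteryx API; `sqlite3` stands in for the database, and the `?` placeholder style is assumed (it is what `sqlite3` and ODBC use). Values are bound separately rather than spliced into the SQL, and operators are restricted to a whitelist.

```python
import sqlite3

ALLOWED_OPERATORS = {"=", "<>", "<", "<=", ">", ">="}


def build_filtered_query(table, filters):
    """Hypothetical helper: turn (column, operator, value) rows into a SELECT
    with bound parameters. The table name is assumed to be trusted."""
    clauses, values = [], []
    for column, op, value in filters:
        if op not in ALLOWED_OPERATORS:
            raise ValueError(f"unsupported operator: {op!r}")
        quoted = '"' + column.replace('"', '""') + '"'
        clauses.append(f"{quoted} {op} ?")
        values.append(value)
    sql = f"SELECT * FROM {table}"
    if clauses:
        sql += " WHERE " + " AND ".join(clauses)
    return sql, values


# Usage with an in-memory database standing in for the real one.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_date TEXT, amount INTEGER)")
conn.executemany("INSERT INTO orders VALUES (?, ?)",
                 [("2023-01-05", 10), ("2023-02-10", 20), ("2023-03-15", 30)])
params = [("order_date", ">=", "2023-02-01"), ("amount", "<", 30)]
sql, values = build_filtered_query("orders", params)
rows = conn.execute(sql, values).fetchall()
```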

Hope it will be interesting for others!

- Category In Database
- Data Connectors

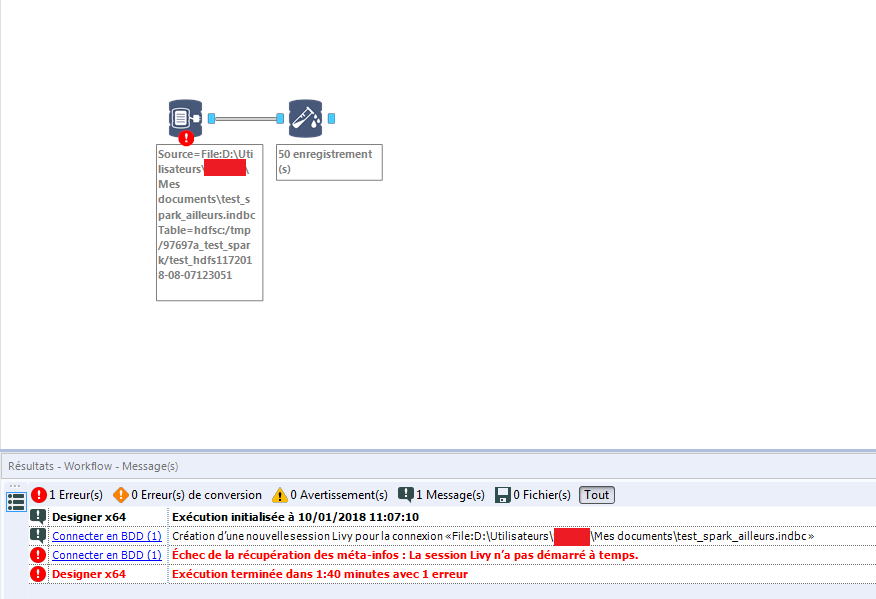

Alteryx creates a Livy Session when connecting to Spark Direct

I would simply like to be able to identify the session easily.

- Category In Database
- Data Connectors

We've been researching Snowflake and are eager to try this new cloud database, but are holding off until Alteryx supports In-DB tools for that environment. I know it's a fairly new service and there probably aren't tons of users yet, but it seems like a perfect fit since it's fully SQL compliant and a truly cloud-native SaaS tool. It's built from scratch for AWS and claims to be faster and cheaper.

Snowflake for data storage, Alteryx for loading and processing, Tableau for visualization - the perfect trio, no?

Has anyone had experience with or feedback on Snowflake? I know it supports ODBC, so we could make basic connections with Alteryx, but the real key would obviously be enabling In-DB functionality so we could take advantage of Snowflake's computing power.

Anyway, I just wanted to mention the topic and find out if it's in the plans or not.

Thanks,

Daniel

- Category Connectors
- Category In Database
- Data Connectors

Hello all,

In addition to the "create index" idea, I think an equivalent for Vertica may also be useful.

In Vertica, data is stored in projections, which play a role roughly equivalent to indexes in other databases, and a table is linked to its projections. When you query a table, the engine chooses the most efficient projection to query.

What I suggest: instead of a "create index" box, a "create index/projection" box.

Best regards,

Simon

- AMP Engine
- Category In Database
- Data Connectors
- Engine

Please add Mode as an option in the In-DB Summarize tool. It would be helpful to summarize by mode before streaming out, to reduce the number of records.
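Until such an option exists, the mode can be computed in the database with a window function, for example in a custom In-DB query. Below is a sketch using Python's `sqlite3` (SQLite 3.25+ for `ROW_NUMBER()`) and a hypothetical table `t(grp, val)`; other databases may need small dialect changes, and the tie-breaking rule (smallest value wins) is an arbitrary choice.

```python
import sqlite3

# Mode per group: count each value, rank values by count within the group,
# keep the top one. Ties go to the smallest value.
MODE_SQL = """
WITH counts AS (
    SELECT grp, val, COUNT(*) AS n
    FROM t
    GROUP BY grp, val
),
ranked AS (
    SELECT grp, val,
           ROW_NUMBER() OVER (PARTITION BY grp ORDER BY n DESC, val) AS rn
    FROM counts
)
SELECT grp, val AS mode_val
FROM ranked
WHERE rn = 1
ORDER BY grp
"""

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (grp TEXT, val INTEGER)")  # hypothetical table
conn.executemany("INSERT INTO t VALUES (?, ?)",
                 [("a", 1), ("a", 2), ("a", 2), ("b", 5), ("b", 7)])
modes = conn.execute(MODE_SQL).fetchall()
```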

- Category In Database
- Data Connectors

When dealing with very large tables (100M rows plus), it's not always practical to bring the entire table back to the designer to profile and understand the data.

It would be very useful if the power of the field summary tool (frequency analysis; evaluating % nulls; min & max values; length of strings; evaluating if the type is appropriate or could be compressed; whether there is whitespace before or after) could be brought to large DB tables without having to bring the whole table back to the client.

Given that each of these profiling tasks can be done as a discrete SQL query, I would expect this to be MASSIVELY faster than doing it client-side, though it would be a bit of a pain to write this tool.
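Most of these checks do reduce to a couple of aggregate queries that return only a handful of rows to the client. Below is a minimal sketch in Python with `sqlite3` standing in for the large database. The function names are hypothetical, table names are assumed to be trusted, and `LENGTH`/`TRIM` are the SQLite spellings (SQL Server, for instance, uses `LEN`).

```python
import sqlite3


def _quote(name):
    return '"' + name.replace('"', '""') + '"'


def profile_column(conn, table, column):
    """Hypothetical helper: profile one column with a single aggregate query."""
    col = _quote(column)
    sql = f"""
        SELECT COUNT(*),
               SUM(CASE WHEN {col} IS NULL THEN 1 ELSE 0 END),
               MIN({col}),
               MAX({col}),
               MAX(LENGTH({col})),
               SUM(CASE WHEN {col} <> TRIM({col}) THEN 1 ELSE 0 END)
        FROM {table}
    """
    total, nulls, lo, hi, max_len, padded = conn.execute(sql).fetchone()
    return {
        "rows": total,
        "pct_null": 100.0 * nulls / total if total else 0.0,
        "min": lo,
        "max": hi,
        "max_length": max_len,
        "leading_or_trailing_space": padded,
    }


def top_values(conn, table, column, k=10):
    """Hypothetical helper: frequency analysis, the k most common values."""
    col = _quote(column)
    sql = f"SELECT {col}, COUNT(*) AS n FROM {table} GROUP BY {col} ORDER BY n DESC LIMIT ?"
    return conn.execute(sql, (k,)).fetchall()


# Usage on a tiny in-memory table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (name TEXT)")
conn.executemany("INSERT INTO people VALUES (?)",
                 [(" Ann",), ("Bob",), (None,), ("Cy ",), ("Bob",)])
profile = profile_column(conn, "people", "name")
most_common = top_values(conn, "people", "name", k=1)
```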

If there is interest in this - I'm more than happy to work with the Alteryx team to look at putting together an initial mockup.

Cheers

Sean

- API SDK
- Category Developer
- Category In Database
- Data Connectors

Hi all,

In the Formula tool and the SQL editors, it would be great to have a simple bracket matcher (as in some of the good SQL tools or software IDEs): if you highlight a particular opening bracket, it highlights the matching closing bracket for you. That way, I don't have to take my shoes and socks off to count all the opening and closing brackets if I've dropped one 🙂
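A bracket matcher of this kind is essentially a depth counter. Below is a minimal Python sketch; the function name is hypothetical and an editor would need its own implementation. Given the position of an opening bracket, it finds the partner. As a simplification, it counts only brackets of the same type and does not skip brackets inside string literals.

```python
PAIRS = {"(": ")", "[": "]", "{": "}"}


def matching_bracket(text, index):
    """Hypothetical helper: return the index of the bracket that closes the
    one at `index`, or None if it is never closed."""
    opener = text[index]
    if opener not in PAIRS:
        raise ValueError(f"no opening bracket at position {index}")
    closer = PAIRS[opener]
    depth = 0
    for i in range(index, len(text)):
        if text[i] == opener:
            depth += 1
        elif text[i] == closer:
            depth -= 1
            if depth == 0:
                return i
    return None


formula = "IF (a AND (b OR c)) THEN 1 ENDIF"
outer = matching_bracket(formula, 3)   # the "(" before "a"
inner = matching_bracket(formula, 10)  # the "(" before "b"
```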

It would also be great to have a right-click option to format formulas nicely. Aqua Data Studio does a tremendous job of this today, and I often take my query out of Alteryx, into Aqua to format it, and then paste it back.

Change:

    if X then if A then B else C endif else Z endif

To:

    if X
    then
        if A
        then B
        else C
        endif
    else Z
    endif

or change from:

    Select A,B from table c inner join d on c.ID = D.ID where C.A>10

to:

    Select
        A
        ,B
    From
        Table C
        Inner Join D
            on C.ID = D.ID
    Where
        C.A>10

Finally, IntelliSense-style hints ("intellitext") in all formula and SQL editing tools: could we allow the user to bring up hints about parameters, with click-through to guidance, the way IntelliSense works in Visual Studio?

Thank you

Sean

- Category In Database
- Category Preparation
- Data Connectors
- Desktop Experience

A question has been coming up from several users at my workplace about allowing a column description to display in the Visual Query Builder instead of or along with the column name.

The column names in our database are based on an older naming convention, and sometimes the names aren't that easy to understand. We do see that (if a column does have a column description in metadata) it shows when hovering over the particular column; however, the consensus is that we'd like to reverse this and have the column description displayed with the column name shown on hover.

It would greatly improve efficiency in workflow development if this could be implemented.

- Category Connectors
- Category Data Investigation
- Category In Database
- Category Input Output

Currently the Databricks In-DB connector offers the following options when writing to the database:

- Append Existing
- Overwrite Table (Drop)
- Create New Table
- Create Temporary Table

This request is to add a fifth option that would execute:

- Create or Replace Table

Why is this important?

- Create or Replace is similar to Overwrite Table (Drop) in that it fully replaces the existing table. However, the key differences are:
    - Drop Table completely removes the table and its data from Databricks:
        - Any users or processes connected live to that table will fail during the write.
        - No history is maintained on the table, which is a key feature of Databricks Delta Lake.
    - Create or Replace does not remove the table:
        - Any users or processes connected live to that table will not fail, because the table is never dropped.
        - History is maintained for table versions, which is a key feature of Databricks Delta Lake.

While this request came from testing on Azure Databricks, the Databricks documentation for both Azure and AWS recommends using "Replace" instead of "Drop" and "Create" for Delta tables.
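The difference between the existing option and the requested one can be sketched as the statements each would issue. The mode names and the helper below are hypothetical (this is not the connector's actual code); the SQL follows the Databricks `DROP TABLE` / `CREATE OR REPLACE TABLE ... AS SELECT` syntax.

```python
def write_statements(mode, table, select_sql):
    """Hypothetical helper: SQL a writer might issue for each write mode."""
    if mode == "overwrite_drop":
        # Between these two statements the table does not exist, and its
        # Delta history is discarded along with it.
        return [
            f"DROP TABLE IF EXISTS {table}",
            f"CREATE TABLE {table} AS {select_sql}",
        ]
    if mode == "create_or_replace":
        # A single statement: the table is never dropped, so its history is kept.
        return [f"CREATE OR REPLACE TABLE {table} AS {select_sql}"]
    raise ValueError(f"unknown mode: {mode!r}")


drop_then_create = write_statements("overwrite_drop", "sales", "SELECT * FROM staging")
replace = write_statements("create_or_replace", "sales", "SELECT * FROM staging")
```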

- Category In Database
- Data Connectors

Database driver versions are a constant trauma for the admin team and for our Designer users. We have situations where drivers are different between two peers who are working on the same flow, or where the flow breaks as soon as it's posted to the gallery etc.

What would really help is if Alteryx were able to create a driver pack (working with the major vendors), which contains the latest version of the major database drivers. We could then roll this out to all the designer & server machines to make sure that we have consistency across the user base.

This would need to include:

- DB2

- MS SQL

- Mongo

- Sybase

- PostgreSQL

- Apache Kudu / Spark

Plus a few others, I'm sure

This would be a pain for Alteryx to do centrally, I know, but it's orders of magnitude less painful than doing it manually (searching each manufacturer's site for the latest drivers) on every Designer workstation across the firm.

- Category In Database
- Data Connectors
