Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equal number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello all,

Some databases, including Hive, natively support scheduled queries (yes, the scheduling configuration lives inside the database, not in an ETL/data prep system). I think this would be an interesting feature for in-DB workflow output: you run the workflow once and then only have to rerun it when it changes; the database does the scheduling.

https://cwiki.apache.org/confluence/display/Hive/Scheduled+Queries

Intro

Executing statements periodically can be useful in

- Pulling information from external systems

- Periodically updating column statistics

- Rebuilding materialized views
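For readers who have not used the feature, here is a minimal sketch of what such a database-side schedule could look like. It only builds the statement text, following the `CREATE SCHEDULED QUERY ... CRON ... AS ...` form described on the Hive page linked above. The helper function, the query name `rebuild_sales_mv` and the view `sales_mv` are hypothetical, and this is not something Alteryx generates today.

```python
def scheduled_query_ddl(name: str, cron: str, statement: str) -> str:
    """Build the text of a Hive CREATE SCHEDULED QUERY statement."""
    # Quartz cron expressions do not contain single quotes, so reject them
    # rather than trying to escape them.
    if "'" in cron:
        raise ValueError("cron expression must not contain single quotes")
    return f"CREATE SCHEDULED QUERY {name} CRON '{cron}' AS {statement}"


# Hypothetical example: rebuild a materialized view every 10 minutes.
ddl = scheduled_query_ddl(
    name="rebuild_sales_mv",
    cron="0 */10 * * * ? *",
    statement="ALTER MATERIALIZED VIEW sales_mv REBUILD",
)
print(ddl)
```

The idea in the post would amount to the in-DB output tool emitting a statement like this once, instead of an external scheduler rerunning the workflow.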

Best regards,

Simon

- Category In Database

- Data Connectors

There should be an option to have Alteryx intelligently convert an existing SQL query or piece of complex logic into a high-level Alteryx workflow, with suggested tools that developers can then modify.

For example, Salesforce Einstein Analytics has an option to convert an existing dataflow (the traditional way of performing data prep) into a recipe (a premium version of a dataflow with advanced features) with a single click. The user can then make additional modifications and enhancements on top of it.

- Category In Database

- Data Connectors

Dear Users, Fans, Compatriots, and Fellow Alteryx Nerds:

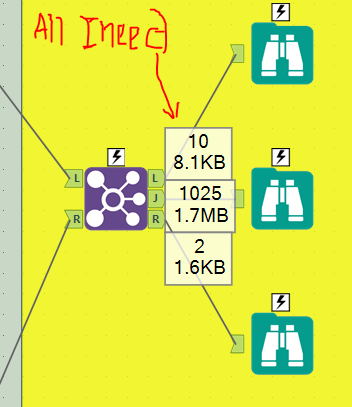

One of my favourite parts of using Alteryx is that in all the in-memory tools, there is a quick-and-dirty count in each of your tools' output nodes. You know, you use these all the time and when you switch back into SQL, you get frustrated with having to run the query two or three times just to see the count in each of your join outputs.

One thing I'm missing as an INDB user is that I have to employ a manual workaround to see what is happening. INDB tools are a bit black-box in that we don't see the counts.

I've been using this workaround for a little over a year now and I haven't found it to be incredibly taxing on my resources, so I'm wondering if Alteryx may be able to look into doing this on the back end to make the INDB experience that much closer to the in-memory experience. I just want those numbers above; I don't need to know the byte count, just the record count.

Now, I imagine this is not implemented already for a Very Good Reason. But, enough is enough! Let's shoot for the moon and make this tool all that much better!! Anyone with me?
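To make the request concrete, here is a rough sketch of getting a record count for each join output without pulling the data out of the database. It is not the workaround from the post (which is not described in detail) and uses Python's built-in `sqlite3` as a stand-in for a real In-DB connection; the tables, the queries and the `count_rows` helper are all made up for illustration.

```python
import sqlite3


def count_rows(conn, query):
    """Wrap any SELECT in COUNT(*) so only one number leaves the database."""
    return conn.execute(f"SELECT COUNT(*) FROM ({query}) AS q").fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (customer_id INTEGER);
    CREATE TABLE customers (id INTEGER);
    INSERT INTO orders VALUES (1), (1), (2), (9);
    INSERT INTO customers VALUES (1), (2), (3);
""")

# One query per join output, mirroring the L / J / R anchors of a Join tool.
join_outputs = {
    "L": "SELECT o.* FROM orders o LEFT JOIN customers c "
         "ON o.customer_id = c.id WHERE c.id IS NULL",
    "J": "SELECT o.* FROM orders o JOIN customers c ON o.customer_id = c.id",
    "R": "SELECT c.* FROM customers c LEFT JOIN orders o "
         "ON o.customer_id = c.id WHERE o.customer_id IS NULL",
}
counts = {name: count_rows(conn, sql) for name, sql in join_outputs.items()}
print(counts)
```

Each count costs one extra query against the database, which is presumably the trade-off Alteryx would have to weigh before doing this on the back end.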

-Cedric Justice

Cambia Healthcare

- Category In Database

- Category Interface

- Category Join

- Data Connectors

Hi Alteryx Team,

Currently, the Connect In-DB tool cannot use the data connections in Gallery.

Users need to enter the database information as well as the login and password.

I suggest enhancing the Connect In-DB tool so that it can select and use Gallery data connections.

From an enterprise point of view:

1. No database credentials or connection properties are shared with Designer users. This reduces the risk of improper access.

2. Access control is easy for the Alteryx Admin to manage in Gallery: data connections can be assigned to different groups of users, which also makes audits more convenient.

3. Data connections are easy for the Alteryx Admin to maintain in Gallery, for example to reset the database password or update the connection properties.

Alternatively, In-DB data connections could be set up directly in Gallery.

Best regards,

Samuel

- Category In Database

- Data Connectors

Bulk Load for Vertica (especially with the gzip compressed format) is very powerful: I can upload tens of millions of rows in a few minutes. Can we have it, please?

- Category In Database

- Data Connectors

There are a number of requests for bulk loaders to DBs, and I'm adding MySQL to the list.

Really, every DB connection (on-prem and cloud) needs some bulk loader capability added (if it doesn't have it already).

- Category Connectors

- Category In Database

- Category Input Output

- Data Connectors

Hi,

The standard In-DB connection configuration for PostgreSQL / Greenplum makes the "Datastream-In" In-DB tool load data line by line instead of using bulk mode.

As a result, loading data into an In-DB stream is very slow.

Example

Connection configuration

Workflow

100,000 lines are sent to Greenplum using a "Datastream-in" In-DB tool.

This is a demo workflow; a real In-DB stream could be more complex and not replaceable by an in-memory Output Data tool.

Load time: 11 minutes.

It's slow, and it spams the database with an insert for each line.

However, there is a workaround.

We can configure an In-Memory connection using the bulk mode:

And paste the connection string into the "write" tab of our In-DB Connection:

Load time: 24 seconds.

It's fast as it uses the Bulk mode.

This workaround has been validated by the Greenplum team but not by the Alteryx support team.

Could you please support this workaround?

Tested on version 2021.3.3.63061
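To illustrate why the two load times differ so much, here is a small sketch of what "bulk mode" means on the PostgreSQL / Greenplum side: instead of one INSERT per line, all rows are serialized once and sent with a single `COPY ... FROM STDIN` statement. This is an illustration only, not how Alteryx implements it. The table name `demo_table` and the helper are hypothetical, and the psycopg2 call appears only as a comment because it assumes a driver and a live connection that are not part of the post.

```python
import csv
import io


def rows_to_csv_buffer(rows):
    """Serialize rows into an in-memory CSV file suitable for COPY ... FROM STDIN."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerows(rows)
    buf.seek(0)  # rewind so the consumer reads from the first row
    return buf


rows = [(i, f"name_{i}") for i in range(100_000)]
buf = rows_to_csv_buffer(rows)

# Row-by-row mode would issue len(rows) INSERT statements (100,000 round trips).
# Bulk mode sends the whole buffer with one statement, for example with psycopg2
# (assumed driver, requires an open cursor `cur`):
#   cur.copy_expert(copy_sql, buf)
copy_sql = "COPY demo_table FROM STDIN WITH (FORMAT csv)"
print(len(rows), "rows prepared for a single COPY statement")
```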

- Category In Database

- Data Connectors

In-database processing brings large performance benefits on big datasets. It would be great to make Multi-Row and Multi-Field formulas available within the in-database functions for Redshift.

- Category In Database

- Category Input Output

- Data Connectors

Hello all,

Despite a few limitations, Alteryx is great when you work with full tables (i.e. when you rewrite the table entirely). But in real life, very few workflows work like that.

Here are some real-life use cases that should be easy to handle in Alteryx:

- delta on a key

- delta on a key + last record based on a date

- update records

- start_date and end_date for a value

etc
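As a concrete illustration of the first two cases (delta on a key, and delta on a key plus last record based on a date), here is a minimal sketch in plain Python. The field names `id`, `value` and `updated_at` and both helper functions are assumptions made for the example; in a database this would typically be a MERGE or upsert statement rather than application code.

```python
from datetime import date


def latest_per_key(rows, key="id", date_field="updated_at"):
    """Keep only the most recent record for each key."""
    latest = {}
    for row in rows:
        current = latest.get(row[key])
        if current is None or row[date_field] > current[date_field]:
            latest[row[key]] = row
    return latest


def apply_delta(target, incoming, key="id", date_field="updated_at"):
    """Insert new keys and update existing keys only when the incoming row is newer."""
    merged = {row[key]: row for row in target}
    for k, row in latest_per_key(incoming, key, date_field).items():
        if k not in merged or row[date_field] > merged[k][date_field]:
            merged[k] = row
    return sorted(merged.values(), key=lambda r: r[key])


target = [
    {"id": 1, "value": "a", "updated_at": date(2021, 1, 1)},
    {"id": 2, "value": "b", "updated_at": date(2021, 1, 1)},
]
incoming = [
    {"id": 2, "value": "b2", "updated_at": date(2021, 2, 1)},
    {"id": 2, "value": "b3", "updated_at": date(2021, 3, 1)},
    {"id": 3, "value": "c", "updated_at": date(2021, 2, 1)},
]
result = apply_delta(target, incoming)
print([(r["id"], r["value"]) for r in result])
```

Key 1 is untouched, key 2 takes the latest incoming record, and key 3 is inserted; the full table is never rewritten.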

Best regards,

Simon

- Category In Database

- Data Connectors

Enable Gallery Server Connections as Input for In-DB Tools. Currently, we can only create file connections, and we'd like to centralize all connections to our Gallery Connections.

- Category In Database

- Data Connectors

The design interface is very slow when we build an in-DB workflow.

The reason is that Alteryx connects every time it needs to refresh the data. Example on Hive:

Mar 20 15:28:49.453 DEBUG 6048 HardyConnection::Connect: Default branding specific auth mech: 2

Mar 20 15:28:49.453 DEBUG 6048 HardyHiveClientFactory::CreateClient: Create HS2 client.

Mar 20 15:28:49.453 DEBUG 6048 HardyHiveClientFactory::GetBackendCxnPool: Create session manager.

Mar 20 15:28:49.453 DEBUG 6048 HardyHiveClientFactory::GetBackendCxnPool: Create backend connection pool.

Mar 20 15:28:49.453 DEBUG 6048 HardyHiveCxnPool::GetHS2Cxn: Create HS2 connection.

Mar 20 15:28:49.453 DEBUG 6048 HardyHiveCxnPool::GetCxnFactory: Create backend connection factory.

Mar 20 15:28:49.453 DEBUG 6048 HardyHiveCxnFactory::CreateHS2Cxn: Create HS2 HTTP transport.

Mar 20 15:28:49.453 DEBUG 6048 HardySessionManager::GetSession: Getting new session handle.

Mar 20 15:28:50.399 DEBUG 6048 HardyTCLIServiceThreadSafeClient::OpenSession: TOpenSessionReq

client_protocol = HIVE_CLI_SERVICE_PROTOCOL_V1

Maybe we could have an option in the In-DB connection configuration to stay connected while designing (perhaps with a time limit).

(PS: we also tried the option to Disable Auto Configure; it's clearly not the solution.)

- Category In Database

- Data Connectors

As you may know, querying Hive for metadata is currently very slow in Alteryx.

A first step of improvement (at least in the Visual Query Builder) has been proposed here

But the real issue for Hive is the way Alteryx queries the metadata: it issues "Show table" queries for all the databases. On our cluster, that means more than 400 queries that each take about 0.5 seconds. The user has to wait about 4 minutes.

A solution: use a Java API to query the Hive metastore, if it exists (this could be another tab in the In-Database configuration). Our cluster admin has an example of a Thrift API in Java that we can give you.

Result: 2 seconds for 38,700 tables in more than 500 databases!

- Category Connectors

- Category In Database

- Category Input Output

- Data Connectors

There is a need when visualizing in-Database workflows to be able to visualize sorted data. This sorting could be done 1 of 2 ways: In a browse tool, or as a stand-alone Sort tool. Either would address the need. Without such a tool being present, the only way to sort the data is to "Data Stream Out" and then visualize the data in Alteryx. However, this process violates the premise of the usefulness of the in-DB toolkit, which is to keep your data in-DB and process using the DB engine. Streaming out big data in order to add a sort is not efficient.

Granted, the in-DB processing doesn't care whether data is sorted or not. However, when attempting to find extreme values after an aggregation, or when trying to identify something as simple as whether null values are present in a field, then a sort becomes extremely useful, and a necessary tool for human consumption of data (regardless of the database's processing needs).

Thanks very much for hearing my idea!

- Category Data Investigation

- Category In Database

- Data Connectors

- Desktop Experience

Currently we can't use any PaaS MongoDB products (MongoDB Atlas / CosmosDB) as Alteryx Gallery doesn't support SSL for connecting to the MongoDB back end.

SSL is good security practice when splitting the MongoDB onto a different machine too.

- Category In Database

- Data Connectors

Not sure if any of you have a similar issue - but we often end up bringing in some data (either from a website or a table) to profile it - and then an hour in, you realise that the data will probably take 6 weeks to completely ingest, but it's taken in enough rows already to give us a useful sense.

Right now, the only option is to stop (in which case all the profiling tools at the end of the flow will all give you nothing) and then restart with a row-limiter - or let it run to completion. The tragedy of the first option is that you've already invested an hour or 2 in the data extract, but you cannot make use of this.

It feels like there's a third option - an option to "Stop bringing in new data - but just finish the data that you currently have", which terminates any input or download tools in their current state, and lets the remainder of the data flush through the full workflow.
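The behaviour being asked for can be sketched with a generator and a stop flag: the source stops yielding new records once a stop is requested, while everything downstream still runs to completion on what was already read. This is only a toy model in Python, not how the Alteryx engine works; `read_source`, `profile` and `slow_source` are hypothetical names.

```python
import threading


def read_source(records, stop_requested):
    """Yield records until the source is exhausted or a stop is requested."""
    for record in records:
        if stop_requested.is_set():
            break  # stop reading new data, but do not abort the workflow
        yield record


def profile(stream):
    """Downstream profiling step: completes on whatever was read so far."""
    count, total = 0, 0
    for value in stream:
        count += 1
        total += value
    return {"rows": count, "mean": total / count if count else None}


stop_requested = threading.Event()


def slow_source():
    """Stand-in for a very long extract."""
    for i in range(1_000_000):
        if i == 1000:
            stop_requested.set()  # simulates the user asking to stop ingesting
        yield i


summary = profile(read_source(slow_source(), stop_requested))
print(summary)
```

The profiling step still returns a result for the rows read before the stop, which is exactly what a hard stop throws away today.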

Hopefully I'm not alone in this need 🙂

- Category Connectors

- Category In Database

- Category Input Output

- Data Connectors

When converting data types while In-DB, it would be really helpful if I could change the data type with the "Select In-DB" tool in a similar manner to the "Select" tool. Currently, we are having to use the "Formula In-DB" tool in order to create a "Cast" Statement.
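For anyone unfamiliar with the current workaround, this is roughly the kind of SQL a type-aware "Select In-DB" would have to generate on the user's behalf. The sketch below uses Python's built-in `sqlite3` as a stand-in database; the `sales` table, its columns and the `select_with_casts` helper are invented for the example, and type names differ from one database to another.

```python
import sqlite3


def select_with_casts(table, casts):
    """Build a SELECT that casts each listed column to the requested type."""
    columns = ", ".join(
        f"CAST({col} AS {sql_type}) AS {col}" for col, sql_type in casts.items()
    )
    return f"SELECT {columns} FROM {table}"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (amount TEXT, quantity TEXT)")
conn.execute("INSERT INTO sales VALUES ('12.50', '3')")

query = select_with_casts("sales", {"amount": "REAL", "quantity": "INTEGER"})
row = conn.execute(query).fetchone()
print(query)
print(row)
```

Today each of those `CAST(...)` expressions has to be typed by hand in a "Formula In-DB" tool.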

- Category In Database

- Data Connectors

I would like to see In-DB batch macros. Currently we are joining tables with 30 million+ records, and we have to run them through standard tools because we are unable to process them In-DB, which shows a 20% improvement in processing speed based on performance profiling.

- Category In Database

- Category Macros

- Data Connectors

- Desktop Experience

While I strongly support the S3 upload and download connectors, the development of AWS Athena has changed the game for us. Please consider adding official support for Athena compute on S3, like the support already offered for Teradata, Hadoop Hive, MS SQL, and other database types.

- Category In Database

- Data Connectors

Not sure what detail needs to be added. This is obviously a widely used RDBMS.

- Category In Database

- Data Connectors

Hello,

As of today, if you want to add a PostgreSQL in-database connection, you may find yourself stuck:

However, the help states that PostgreSQL is supported in-database.

https://help.alteryx.com/current/In-DatabaseOverview.htm

Whaaaaaaaaat?

Oh, I forgot to mention: with a little luck, you can find this help page: https://help.alteryx.com/current/DataSources/PostgreSQL.htm

Yep, you have to configure a "greenplum" connection if you want to use PostgreSQL.

I think this is not user-friendly and can lead to mistakes, errors, frustration, and even lost sales for Alteryx.

Also, Greenplum and PostgreSQL will have separate features, so I think having two separate entries in the menu makes sense.

Best regards,

Simon

- Category In Database

- Data Connectors
