Alteryx Designer Desktop Ideas

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
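As a rough illustration of the last two requests, here is a minimal Python sketch of what such preprocessing could look like outside the tool. The function names `balanced_sample` and `gray_to_rgb` are hypothetical and are not part of Alteryx; images are represented as plain nested lists to keep the example self-contained.

```python
import random
from collections import defaultdict

def balanced_sample(items, per_label, seed=0):
    """Pick the same number of (path, label) pairs for every label."""
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    sample = []
    for label in sorted(by_label):
        paths = by_label[label]
        if len(paths) < per_label:
            raise ValueError(f"label {label!r} has only {len(paths)} images")
        sample.extend((p, label) for p in rng.sample(paths, per_label))
    return sample

def gray_to_rgb(pixels):
    """Turn a 2-D grid of grayscale values into a grid of (r, g, b) triples
    by repeating the single channel three times."""
    return [[(v, v, v) for v in row] for row in pixels]
```

Repeating the grayscale channel three times is a common workaround for models that expect RGB input; whether it suits a given pre-trained model is an assumption to verify.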

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello,

Here is a use case:

I work on projects A and B with Alteryx in In-DB mode.

My coworker works only on project B and has no rights to the data of project A.

When we use temporary tables in Alteryx, we both create them in the default database. The issue is that my coworker can see my temporary data from project A, which is not safe.

Solution: allow me to specify the database/schema when I create my temporary table.

Labels: Category In Database, Data Connectors

Hello,

As I mentioned in this previous idea: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Generic-In-database-connection-please-stop-i...

Field mapping in the generic In-DB connection is based on Microsoft SQL Server. Given how specific SQL Server field types are, I would like to be able to change that mapping so that I can at least use another database. Without that, this feature makes no sense at all.

Best regards,

Simon

Labels: Category In Database, Data Connectors

It would be great to have the below functionality in Alteryx.

Once a workflow is built in Alteryx, a button click could generate SQL code that can be run on a specific database platform, such as SQL Server, from external editors such as SQL Server Management Studio. Thanks.

Labels: API SDK, Category Connectors, Category Developer, Category In Database

It would be awesome if there was a cross tab in DB option because right now I have to stream out millions of records to build a cross tab.

Labels: Category In Database, Data Connectors

Hi,

Currently, loading large files (over 100 MB) to PostgreSQL takes an extremely long time. For example, writing a 1 GB file to PostgreSQL takes 27 minutes! This is seriously impacting our ability to use Alteryx as an ETL tool for loading our target Postgres data warehouse. We would really like to see bulk loading to Postgres supported by Alteryx to help alleviate the performance issues.

Thanks,

Vijaya

Labels: Category Connectors, Category In Database, Data Connectors

Hello,

As of today, the In-DB connection window is divided into:

-write tab

-read tab

However, writing means two different things: inserting and in-DB writing. Alteryx already has 2 different tools (Data Stream In and Write Data).

So what I propose is to divide the window into:

-read

-write

-insert

Best regards,

Simon

Labels: Category In Database, Category Input Output, Data Connectors

Hello,

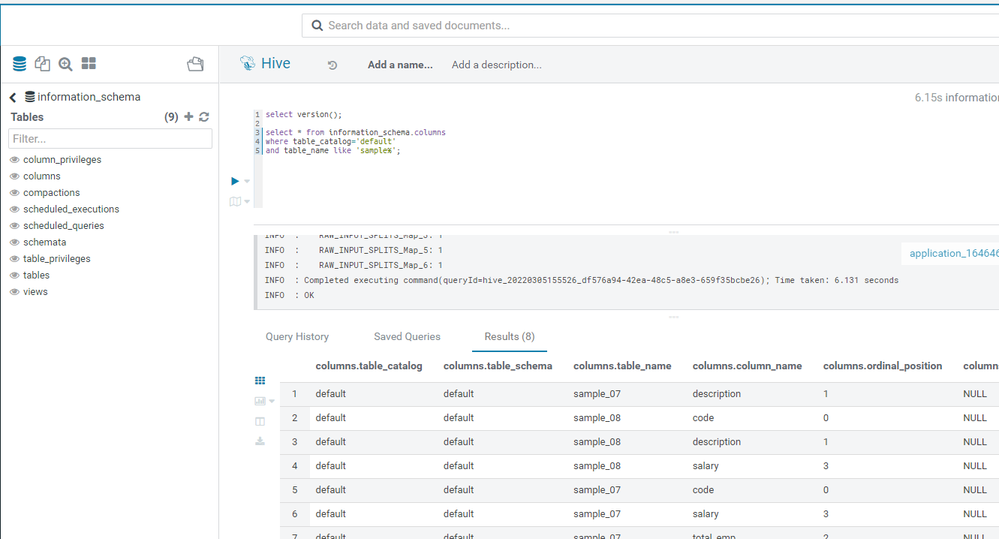

As of today, when you connect to a database, Alteryx goes through a batch of queries to find out which database it is (cf. https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Smart-Visual-Query-Builder-for-in-db-less-te... where I suggest a solution to speed up the process) and then queries the metadata. To get the columns of each table, Alteryx uses SHOW TABLES and then loops over each table. This is really slow.

However, since Hive 3.0, an information_schema with the list of columns for each table is available. I suggest using information_schema.columns instead of the time-consuming loop.
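To make the suggestion concrete, here is a minimal Python sketch of the single-query approach. It is only an illustration: the column names in `COLUMNS_QUERY` assume the SQL-standard information_schema layout, and `group_columns` is a hypothetical helper, not anything Alteryx exposes.

```python
from collections import defaultdict

# One query returning every column of every table, instead of one
# metadata query per table. Column names are assumed to follow the
# SQL-standard information_schema layout.
COLUMNS_QUERY = """
SELECT table_schema, table_name, column_name, data_type
FROM information_schema.columns
ORDER BY table_schema, table_name, ordinal_position
"""

def group_columns(rows):
    """Group (schema, table, column, type) rows into
    {(schema, table): [(column, type), ...]}."""
    tables = defaultdict(list)
    for schema, table, column, data_type in rows:
        tables[(schema, table)].append((column, data_type))
    return dict(tables)
```

With any DB-API style cursor, the rows returned by executing `COLUMNS_QUERY` could be passed straight to `group_columns`, so the metadata for all tables arrives in one round trip.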

PS : I don't know if it's linked to the Active Query Builder, the third-party tool behind the Visual Query Builder. In that case, it would be a good idea to update it as suggested here https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Update-Query-Builder-component/idi-p/799086

Best regards,

Simon

Labels: Category In Database, Category Input Output, Data Connectors

TIBCO Data Virtualization is a Data Virtualization product focused on creating a virtual data store consolidating data from throughout the enterprise. It can be accessed via a SQL query engine, and has a variety of supported connectors, including an ODBC driver.

This data source can be connected to via ODBC in Alteryx today, but error messaging is unclear/unhelpful, and attempting to use the Visual Query Builder causes Alteryx to crash.

Adding TIBCO Data Virtualization as a supported ODBC connection would empower business users to leverage this product and easily utilize this enterprise data store, enhancing the value of the Alteryx platform as a consumer of this data.

Labels: Category Connectors, Category In Database, Category Input Output, Data Connectors

The Transpose In-DB tool has been in the "Laboratory" category for years now. I understand Alteryx invested some time and money to develop it, but sadly we still can't use that tool for sensitive workflows. Did you find bugs in it? Could you please fix them and make this tool an "official" tool?

Thanks

Labels: Category In Database, Data Connectors

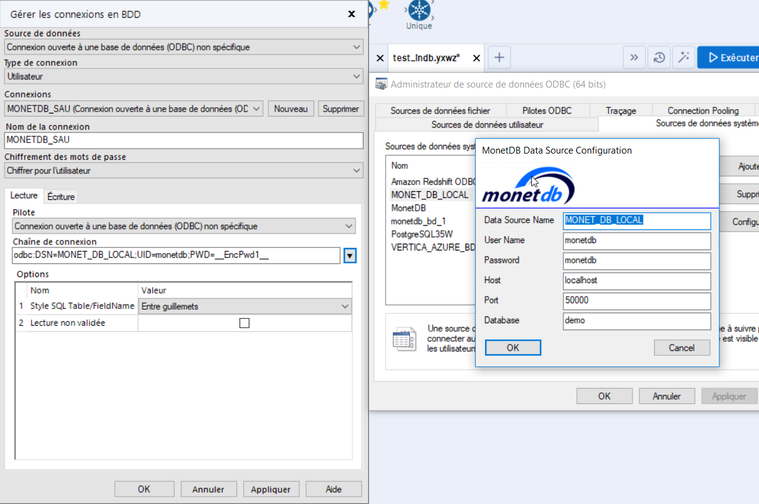

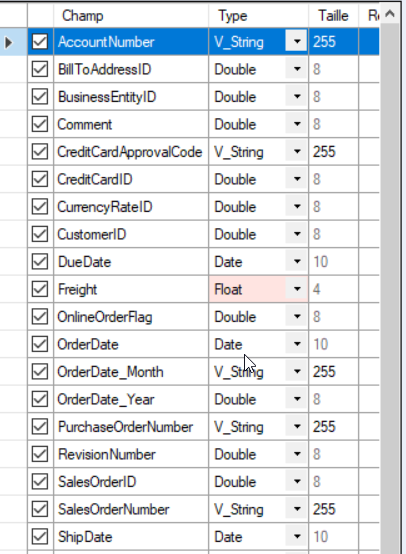

Hello,

My issue is very easy to solve. I want to use the generic ODBC In-Database connection for a specific database (MonetDB here, but that isn't important).

The connection works just fine. However, I cannot create a table, because the data types are changed to types that do not even exist in the target database. Here is my data with some Date fields:

And here is the very interesting error message that my Data Stream In tool gives me:

Error: Data Stream In (2): Error creating table "formation.temp1": [MonetDB][ODBC Driver 11.31.11]Type (datetime) unknown in: "create table "formation"."temp1" ("AccountNumber" varchar(255),"BillToAddressID"

syntax error, unexpected IDENT in: ""Freight""

CREATE TABLE "formation"."temp1" ("AccountNumber" varchar(255),"BillToAddressID" float,"BusinessEntityID" float,"Comment" float,"CreditCardApprovalCode" varchar(255),"CreditCardID" float,"CurrencyRateID" float,"CustomerID" float,"DueDate" datetime,"Freight" real,"OnlineOrderFlag" float,"OrderDate" datetime,"OrderDate_Month" varchar(255),"OrderDate_Year" float,"PurchaseOrderNumber" varchar(255),"RevisionNumber" float,"SalesOrderID" float,"SalesOrderNumber" varchar(255),"ShipDate" datetime,"ShipMethodID" float,"ShipToAddressID" float,"Status" float,"SubTotal" float,"TaxAmt" float,"TotalDue" float)

1/ My field is a Date, so why is it converted to datetime?

2/ datetime is not even a usual field type in SQL databases (at least it is not supported by MonetDB, Vertica, PostgreSQL, Oracle, etc.). It should obviously be timestamp.

Currently, this generic In-Database ODBC connection cannot be used at all!
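The request amounts to making the field-type mapping depend on the target dialect. Here is a minimal Python sketch of that idea. The `TYPE_MAP` table, the dialect names and both function names are hypothetical, and the type names would need checking against each database's documentation.

```python
# Hypothetical mapping from Alteryx field types to SQL type names,
# one entry per dialect. Only a few types are shown.
TYPE_MAP = {
    "sqlserver": {"Date": "date", "DateTime": "datetime", "Double": "float"},
    "generic": {"Date": "date", "DateTime": "timestamp", "Double": "double precision"},
}

def sql_type(field_type, dialect, size=255):
    """Return the SQL type name for one field in the given dialect."""
    if field_type == "V_String":
        return f"varchar({size})"
    try:
        return TYPE_MAP[dialect][field_type]
    except KeyError:
        raise ValueError(f"no mapping for {field_type!r} in dialect {dialect!r}")

def create_table_sql(schema, table, fields, dialect="generic"):
    """Build a CREATE TABLE statement from (name, field_type) pairs."""
    cols = ",".join(f'"{name}" {sql_type(ftype, dialect)}' for name, ftype in fields)
    return f'CREATE TABLE "{schema}"."{table}" ({cols})'
```

With the "generic" dialect, a Date field stays a date and a DateTime field becomes a timestamp, which is the behaviour asked for above.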

Labels: Category In Database, Category Input Output, Data Connectors

As simple as the title : an In-Database Block Until Done would be a pretty nice feature to control the execution of a workflow.

Labels: Category In Database, Data Connectors

From Wikipedia:

Druid is a column-oriented, open-source, distributed data store written in Java. Druid is designed to quickly ingest massive quantities of event data, and provide low-latency queries on top of the data.[1] The name Druid comes from the shapeshifting Druid class in many role-playing games, to reflect the fact that the architecture of the system can shift to solve different types of data problems. Druid is commonly used in business intelligence/OLAP applications to analyze high volumes of real-time and historical data.[2] Druid is used in production by technology companies such as Alibaba,[2] Airbnb,[2] Cisco,[3] eBay,[4] Netflix,[5] PayPal,[2] Yahoo[6] and the Wikimedia Foundation.[7]

More and more companies are moving from Hive to Druid for their dataviz needs. Maybe it's time to look at Druid integration with Alteryx?

Labels: Category In Database, Data Connectors

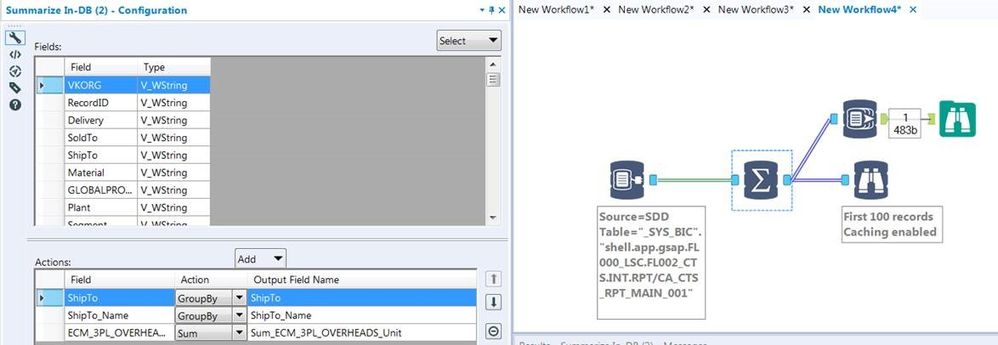

I reported this to the support team but was told it was by design and to post here.

In-DB Inefficient SQL

I would like to report that the In-DB tools are generating horribly inefficient SQL code for simple operations. It seems that, no matter what tools you use, every statement starts with a nested 'Select * From'.

Example of a simple workflow:

This is a simple Select and Group By, but the SQL generated is:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM (SELECT * FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001") AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

This is taking a very long time to execute:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 15.752 seconds (server processing time: 15.699 seconds)

Whereas if I take the same query and remove the nested Select *:

SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"

FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001" AS "a"

GROUP BY "ShipTo", "ShipTo_Name"

It is very quick:

Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'

successfully executed in 1.211 seconds (server processing time: 1.157 seconds)

So Alteryx is generating queries up to 13x slower than they should be, thereby defeating the point of using In-DB. As you can imagine, in a workflow with multiple Connect In-DB tools this adds up to a really substantial amount of time. The example above is from SAP HANA DB and has 1.9m rows and ~90 columns, but we have much bigger tables/views than this.

If you look, you will see the same behaviour for all In-DB tools: each tool creates another nested Select with its particular operator.

MY SUGGESTION:

So my suggestion is that Alteryx should combine the SQL of the first few tools and avoid using SELECT * completely unless no Select tools have been used. So it should combine:

- Connect In-DB + Select

- Connect In-DB + Filter

- Connect In-DB + Summarise

Preferably it should combine/flatten everything up until the first join or union. But Select + Filter are a must!
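To illustrate the difference between the two shapes, here is a minimal Python sketch that builds both a nested and a flattened query for a Connect In-DB + Filter + Select chain and runs them against an in-memory SQLite table. The function names and the `orders` table are hypothetical, and SQLite is only a stand-in: it says nothing about how SAP HANA or any other engine plans these queries.

```python
import sqlite3

def nested_sql(table, columns, where=None):
    """One sub-select per tool, the shape described in the post."""
    sql = f'SELECT * FROM "{table}"'
    if where:
        sql = f'SELECT * FROM ({sql}) AS "a" WHERE {where}'
    cols = ", ".join(f'"{c}"' for c in columns)
    return f'SELECT {cols} FROM ({sql}) AS "b"'

def flattened_sql(table, columns, where=None):
    """Connect + Filter + Select combined into a single statement."""
    cols = ", ".join(f'"{c}"' for c in columns)
    sql = f'SELECT {cols} FROM "{table}"'
    if where:
        sql += f" WHERE {where}"
    return sql

def run_both():
    """Run both shapes on a tiny demo table and return their results."""
    conn = sqlite3.connect(":memory:")
    conn.execute('CREATE TABLE "orders" ("ShipTo" TEXT, "Qty" INTEGER, "Note" TEXT)')
    conn.executemany(
        'INSERT INTO "orders" VALUES (?, ?, ?)',
        [("A", 1, "x"), ("B", 5, "y"), ("A", 7, "z")],
    )
    args = ("orders", ["ShipTo", "Qty"], '"Qty" > 2')
    nested = conn.execute(nested_sql(*args) + ' ORDER BY "Qty"').fetchall()
    flat = conn.execute(flattened_sql(*args) + ' ORDER BY "Qty"').fetchall()
    conn.close()
    return nested, flat
```

Both shapes return the same rows, so flattening changes only what the database is asked to plan, not the result.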

Note: some DBs seem to cope OK with un-nesting these big nested queries in their query plans for some tables, but normally not for views. Others cannot cope at all, so the In-DB tools cannot even be used to browse 100 records (due to the SELECT *).

Labels: Category In Database, Data Connectors

Alteryx doesn't support querying tables within Apache Ignite via the Ignite ODBC connector. Since Ignite is an in-memory database, supporting this ODBC connection in Alteryx would improve connectivity.

Labels: Category In Database, Data Connectors

As it stands now, only a file input tool can be used to pull data from Google BigQuery tables. The issue here is that the data is streamed and processed locally, meaning the power of BigQuery processing isn't actually being leveraged.

Adding BigQuery In-Database as a connection option would appeal to a wide audience. BigQuery is also compliant with the SQL 2011 standard, so this may make for an even easier integration.

Labels: Category Connectors, Category In Database, Category Input Output, Data Connectors

The idea is to store credentials (login/password) in a "credential alias".

Then, those credential aliases can be used in:

-traditional aliases/connection

-in database aliases/connection

-hdfs aliases/connection

-API

-on user aliases for connected controllers/gallery

...etc.

The goal is that I only have to change the credentials once for all connection types. On Hive, I have the In-DB alias, the traditional alias and even an HDFS alias using exactly the same credentials, and I have to change all of them manually.
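The indirection being asked for can be sketched in a few lines of Python. This is only an illustration of the concept: `CredentialStore` and its methods are hypothetical names, and a real implementation would need encrypted storage rather than an in-memory dictionary.

```python
from dataclasses import dataclass

@dataclass
class Credential:
    login: str
    password: str

class CredentialStore:
    """Connections point at a credential alias instead of holding
    their own copy of the login and password."""

    def __init__(self):
        self._credentials = {}
        self._connections = {}

    def set_credential(self, alias, login, password):
        # Creating and updating use the same call: one place to change.
        self._credentials[alias] = Credential(login, password)

    def add_connection(self, name, kind, credential_alias):
        self._connections[name] = (kind, credential_alias)

    def resolve(self, name):
        """Return (kind, login, password) for a connection at use time."""
        kind, alias = self._connections[name]
        cred = self._credentials[alias]
        return kind, cred.login, cred.password
```

Because every connection resolves its credentials at use time, a single call to `set_credential` updates the In-DB, traditional and HDFS connections together.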

Labels: Category In Database, Category Input Output, Data Connectors

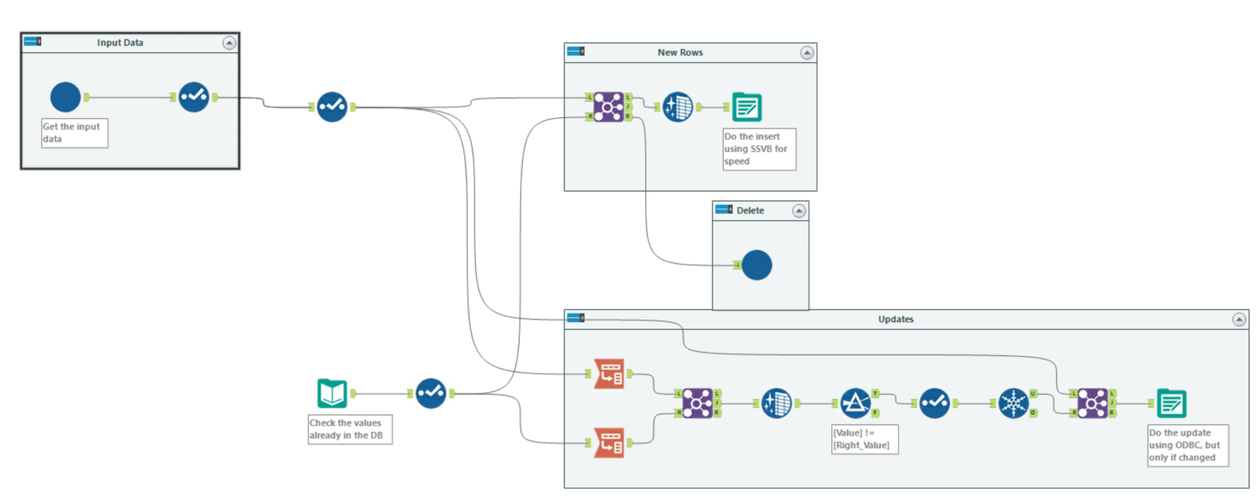

Hi there Alteryx team,

When we load data from raw files into a SQL table, we use the pattern below in almost every single loader, because the "Update, insert if new" functionality is so slow, cannot take advantage of SSVB, does not do deletes, and doesn't check for changes in the data, so your history tables get polluted with updates that are not real updates.

This pattern addresses these concerns as follows:

- You explicitly separate out the inserts by comparing to the current table, and use SSVB on the connection, thereby maximizing the speed.

- The rows that no longer exist in the source are deleted, and the history table keeps the history.

- Finally, the rows that exist in both source and target are checked for data changes and only updated if one or more fields have changed.
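The three steps above can be sketched as a simple key-based comparison. The Python below is a minimal illustration, not the workflow from the screenshot: `plan_incremental_load` is a hypothetical name, rows are plain dictionaries, and the whole comparison happens in memory.

```python
def plan_incremental_load(source, target, key):
    """Split rows into inserts, updates and deletes by comparing
    the source rows with the current target rows on a key field."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    # New keys: present in the source, missing from the target.
    inserts = [src[k] for k in src if k not in tgt]
    # Vanished keys: present in the target, missing from the source.
    deletes = [tgt[k] for k in tgt if k not in src]
    # Shared keys: update only when at least one field differs.
    updates = [src[k] for k in src if k in tgt and src[k] != tgt[k]]
    return inserts, updates, deletes
```

Rows that exist on both sides with identical values appear in none of the three lists, which is what keeps false updates out of the history table.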

Given how commonly we have to do this (on almost EVERY data pipe from files into our database) - could we look at making an Incremental Update tool in Alteryx to make this easier? This is a common functionality in other ETL platforms, and this would be a great addition to Alteryx.

Labels: Category In Database, Category Macros, Data Connectors, Desktop Experience

The Tableau Hyper API supports regular SQL queries, see https://help.tableau.com/current/api/hyper_api/en-us/reference/sql/index.html and https://help.tableau.com/current/api/hyper_api/en-us/docs/hyper_api_reference.html for more information. Being able to use the In-database tools for querying Hyper would let us take advantage of Hyper's internal optimizations just like other databases.

Labels: Category In Database, Category Input Output, Data Connectors

DELETE FROM Source_Data WHERE ID IN

(SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')

....

Essentially, I want to update a DB table with either an update or the deletion of rows. I can't delete all of the data. My workaround will be to insert the keys that I want to delete into a table and try to use an input/output tool with SQL that performs the delete. Any other suggestions are welcome, but a tool would be best.
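For reference, the workaround described here (stage the keys in a table, then delete by subquery) can be tried end to end with Python's built-in `sqlite3` module. The table and column names come from the statement above; the `build_demo` and `delete_flagged` helpers and the sample rows are hypothetical.

```python
import sqlite3

def build_demo():
    """Create the two tables from the post with a few sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Source_Data (ID INTEGER PRIMARY KEY, VAL TEXT)")
    conn.execute("CREATE TABLE My_Temp_Table (ID INTEGER, FLAG TEXT)")
    conn.executemany("INSERT INTO Source_Data VALUES (?, ?)",
                     [(1, "a"), (2, "b"), (3, "c")])
    conn.executemany("INSERT INTO My_Temp_Table VALUES (?, ?)",
                     [(1, "Y"), (2, "N"), (3, "Y")])
    return conn

def delete_flagged(conn):
    """Delete only the rows whose keys are flagged in the staging table."""
    cur = conn.execute(
        "DELETE FROM Source_Data WHERE ID IN "
        "(SELECT ID FROM My_Temp_Table WHERE FLAG = 'Y')"
    )
    conn.commit()
    return cur.rowcount  # number of rows removed
```

Only the flagged keys are removed; every other row in Source_Data is left untouched.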

Thanks,

Mark

Labels: API SDK, Category Developer, Category In Database, Category Input Output

Hello all,

From https://www.sqltutorial.org/sql-triggers/

Introduction to SQL Triggers

A trigger is a piece of code executed automatically in response to a specific event that occurs on a table in the database.

A trigger is always associated with a particular table. If the table is deleted, all the associated triggers are also deleted automatically.

A trigger is invoked either before or after the following event:

- INSERT – when a new row is inserted

- UPDATE – when an existing row is updated

- DELETE – when a row is deleted.

When you issue an INSERT, UPDATE, or DELETE statement, the relational database management system (RDBMS) fires the corresponding trigger.

In some RDBMS, a trigger is also invoked as a result of executing a statement that calls the INSERT, UPDATE, or DELETE statement. For example, MySQL has the LOAD DATA INFILE statement, which reads rows from a text file, inserts them into a table at a very high speed, and invokes the BEFORE INSERT and AFTER INSERT triggers.

On the other hand, a statement may delete rows in a table but does not invoke the associated triggers. For example, TRUNCATE TABLE statement removes all rows in the table but does not invoke the BEFORE DELETE and AFTER DELETE triggers.

So basically, I would like to be able to create triggers from the In-DB tools in Alteryx.
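As a small illustration of what such a trigger looks like in practice, here is a sketch using Python's built-in `sqlite3` module. The `accounts` and `audit_log` tables and the trigger name are hypothetical, and SQLite's trigger syntax differs in details from other databases.

```python
import sqlite3

def build_audited_table():
    """Create a table whose balance updates are logged by a trigger."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL);
        CREATE TABLE audit_log (account_id INTEGER, old_balance REAL, new_balance REAL);

        -- Fires once per updated row, after the UPDATE has been applied.
        CREATE TRIGGER log_balance_change
        AFTER UPDATE OF balance ON accounts
        FOR EACH ROW
        BEGIN
            INSERT INTO audit_log VALUES (OLD.id, OLD.balance, NEW.balance);
        END;
    """)
    return conn
```

After this setup, any UPDATE of `balance` writes a row to `audit_log` without the caller doing anything extra, which is the behaviour a trigger-creating In-DB tool would set up.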

Best regards,

Simon

Labels: Category In Database, Data Connectors
