Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a real tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Alteryx Designer is slow when using In-DB tools.

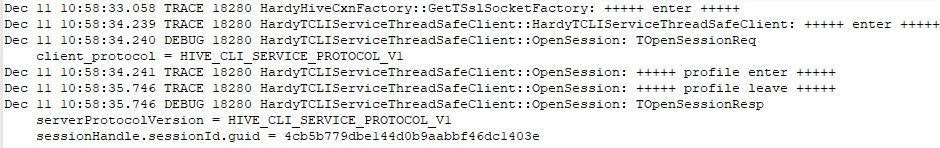

We use Alteryx 2019.1 on Hive/Hortonworks with the Simba ODBC driver configured with SSL enabled.

Here is a comparison of In-DB vs. in-memory:

We found that Alteryx opens a new connection for each action:

- First link to the join = 1 connection.

- Second link to the join = 1 connection.

- Click on the canvas = 1 connection.

Each connection takes about 2.5 seconds, which really slows down Designer.

Please keep the first connection alive instead of closing it and creating a new one for each action in Designer.
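To illustrate the request, here is a minimal sketch of connection reuse. It is not how Designer manages connections internally; `CachedConnection` is a hypothetical helper, and an in-memory SQLite database stands in for the Hive/ODBC connection so the example runs anywhere.

```python
import sqlite3


class CachedConnection:
    """Hypothetical helper: open the connection once, then reuse it."""

    def __init__(self, factory):
        self._factory = factory  # callable that opens a new connection
        self._conn = None
        self.opened = 0          # how many times a connection was really opened

    def get(self):
        # Only pay the connection cost on the first call.
        if self._conn is None:
            self._conn = self._factory()
            self.opened += 1
        return self._conn

    def close(self):
        if self._conn is not None:
            self._conn.close()
            self._conn = None


# SQLite stands in for the real database (assumption for this sketch).
cache = CachedConnection(lambda: sqlite3.connect(":memory:"))

# Three user actions, as in the list above, all served by one connection.
for action in ("first link", "second link", "click on the canvas"):
    cache.get().execute("SELECT 1").fetchall()

print("connections opened:", cache.opened)
```

With a cost of about 2.5 seconds per connection, reusing one connection would mean paying that cost once instead of once per action.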

-

Category In Database

-

Data Connectors

Hello all,

According to Wikipedia:

https://en.wikipedia.org/wiki/Join_(SQL)

CROSS JOIN returns the Cartesian product of rows from tables in the join. In other words, it will produce rows which combine each row from the first table with each row from the second table.[1]

Example of an explicit cross join:

SELECT *

FROM employee CROSS JOIN department;

Example of an implicit cross join:

SELECT *

FROM employee, department;

The cross join can be replaced with an inner join with an always-true condition:

SELECT *

FROM employee INNER JOIN department ON 1=1;

For us Alteryx users, it would be very similar to the Append Fields tool, but for In-DB.
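As a quick check that the three forms quoted above are interchangeable, here is a small sketch using Python's built-in `sqlite3` module. The table contents are made up for illustration, and SQLite is only a stand-in for whatever database the In-DB workflow targets.

```python
import sqlite3

# In-memory SQLite database with two tiny, made-up tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employee (name TEXT);
    CREATE TABLE department (dept TEXT);
    INSERT INTO employee VALUES ('Ann'), ('Bob');
    INSERT INTO department VALUES ('Sales'), ('IT'), ('HR');
""")

queries = {
    "explicit": "SELECT * FROM employee CROSS JOIN department",
    "implicit": "SELECT * FROM employee, department",
    "inner_1_eq_1": "SELECT * FROM employee INNER JOIN department ON 1=1",
}

# Sort each result so the comparison does not depend on row order.
results = {
    label: sorted(conn.execute(sql).fetchall())
    for label, sql in queries.items()
}

for label, rows in results.items():
    print(label, len(rows))
```

Each query returns every employee paired with every department (2 x 3 rows here), which is what Append Fields does in memory.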

Best regards,

Simon

-

Category In Database

-

Data Connectors

Hello all,

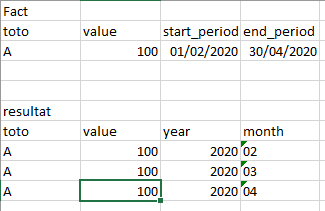

As of today, an In-DB join can only be done with an equality operator.

Example: table1.customer_id = table2.customer_id

That is sufficient most of the time. However, sometimes you need to perform another kind of join operation (especially with calendar tables, period tables, etc.).

Here is an example of a clause you can find in existing SQL:

inner join calendar on calendar.id_year_month between fact.start_period and fact.end_period

Supporting this would help solve that case, as well as joins on <, >, and so on.

(The workaround I use today: I build a full Cartesian product with a join on 1=1, then filter the rows with the between condition.)

It can be very useful for solving the most difficult issues. Note that a product like Tableau already offers this feature.
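The sketch below compares the direct BETWEEN join with the Cartesian-product workaround. It uses Python's `sqlite3` module with made-up `calendar` and `fact` rows; the column names come from the clause above, everything else is an assumption for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE calendar (id_year_month INTEGER);
    CREATE TABLE fact (id INTEGER, start_period INTEGER, end_period INTEGER);
    INSERT INTO calendar VALUES (202301), (202302), (202303), (202304);
    INSERT INTO fact VALUES (1, 202301, 202302), (2, 202303, 202303);
""")

# Direct non-equi join, as found in existing SQL.
between_join = """
    SELECT fact.id, calendar.id_year_month
    FROM fact
    INNER JOIN calendar
        ON calendar.id_year_month BETWEEN fact.start_period AND fact.end_period
    ORDER BY fact.id, calendar.id_year_month
"""

# Workaround: Cartesian product (join on 1=1) followed by a filter.
cross_then_filter = """
    SELECT fact.id, calendar.id_year_month
    FROM fact
    INNER JOIN calendar ON 1=1
    WHERE calendar.id_year_month BETWEEN fact.start_period AND fact.end_period
    ORDER BY fact.id, calendar.id_year_month
"""

direct_rows = conn.execute(between_join).fetchall()
workaround_rows = conn.execute(cross_then_filter).fetchall()

print(direct_rows)
print(direct_rows == workaround_rows)
```

Both queries return the same rows. The difference is that the workaround has to be expressed as two steps in the workflow, and whether the database optimizes the 1=1 join plus filter as well as a direct BETWEEN join depends on the engine.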

Best regards,

Simon

-

Category In Database

-

Data Connectors

Hello,

We use the Pre-SQL statement of the Input tool to set some connection parameters. Sadly, we cannot do that in an In-DB workflow. Supporting it would be a game-changing feature for us.

Best Regards,

Simon

-

Category In Database

-

Data Connectors

Hello all,

A few weeks ago, Alteryx announced In-DB support for Google BigQuery (GBQ). This is an awesome idea; however, to make it run, you must use OAuth2 authentication, which means the GBQ API has to be enabled. As of now, it is possible to connect to GBQ with the Simba ODBC driver. My idea is to extend In-DB support to the connection/authentication method we have today with Simba ODBC for Google BigQuery. In big organizations with many GBQ projects, it is not easy for IT to enable the API for each application. Enhancing the functionality to work with ODBC would be an awesome solution.

Thank you for voting

Albert

-

Category In Database

-

Enhancement

Hello,

SQLite is:

- free

- open source

- easy to use

- widely used

https://en.wikipedia.org/wiki/SQLite

It also works well with the Alteryx Input and Output tools. 🙂

However, I think In-DB support for SQLite would be great, especially for learning purposes: you don't have to install anything, so it's really easy to set up.

Best regards,

Simon

-

AMP Engine

-

Category In Database

-

Data Connectors

-

Engine

Currently, Alteryx does not support writing to SharePoint document libraries.

In practice, writes sometimes succeed and sometimes fail.

Please see the attachment, where we ran into an issue.

See this link for additional information.

We need official support for reading from and writing to SharePoint document libraries.

It's an important output target, and it will become more so as Alteryx enhances its reporting capabilities.

-

Category Connectors

-

Category In Database

-

Data Connectors

From Wikipedia :

In a database, a view is the result set of a stored query on the data, which the database users can query just as they would in a persistent database collection object. This pre-established query command is kept in the database dictionary. Unlike ordinary base tables in a relational database, a view does not form part of the physical schema: as a result set, it is a virtual table computed or collated dynamically from data in the database when access to that view is requested. Changes applied to the data in a relevant underlying table are reflected in the data shown in subsequent invocations of the view. In some NoSQL databases, views are the only way to query data.

Views can provide advantages over tables:

Views can represent a subset of the data contained in a table. Consequently, a view can limit the degree of exposure of the underlying tables to the outer world: a given user may have permission to query the view, while denied access to the rest of the base table.

Views can join and simplify multiple tables into a single virtual table.

Views can act as aggregated tables, where the database engine aggregates data (sum, average, etc.) and presents the calculated results as part of the data.

Views can hide the complexity of data. For example, a view could appear as Sales2000 or Sales2001, transparently partitioning the actual underlying table.

Views take very little space to store; the database contains only the definition of a view, not a copy of all the data that it presents.

Depending on the SQL engine used, views can provide extra security.

I would like to create a view instead of a table.
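To make the difference with a table concrete, here is a small sketch using Python's `sqlite3` module. The `sales` table and `sales_by_year` view are made-up names, and SQLite is only a stand-in for the target database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (year INTEGER, amount REAL);
    INSERT INTO sales VALUES (2000, 10.0), (2000, 5.0), (2001, 7.0);

    -- The view stores only the query definition, not a copy of the data.
    CREATE VIEW sales_by_year AS
        SELECT year, SUM(amount) AS total
        FROM sales
        GROUP BY year;
""")

view_query = "SELECT year, total FROM sales_by_year ORDER BY year"
before = conn.execute(view_query).fetchall()

# A change to the base table shows up the next time the view is queried.
conn.execute("INSERT INTO sales VALUES (2001, 3.0)")
after = conn.execute(view_query).fetchall()

print(before)
print(after)
```

A table written by the workflow would keep the old totals until the workflow runs again, while the view reflects the new row immediately.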

-

Category In Database

-

Data Connectors

For in-DB use, please provide a Data Cleansing Tool.

-

Category In Database

-

Data Connectors

Sometimes I need to connect to the data in my database after doing some filtering and modeling with CTEs. To make the connection run faster than with the regular Input tool, I would like to use the In-DB input tool. But it doesn't work, because the In-DB input tool doesn't support CTEs. CTEs are helpful in everyday work, and it would be terribly tedious to replicate all my SQL logic in Alteryx in addition to what I'm already doing inside the tool.

I found a lot of people having the same issue. It would be great to have that feature added to the tool.

-

Category In Database

-

Data Connectors

Hello,

According to Wikipedia:

A partition is a division of a logical database or its constituent elements into distinct independent parts. Database partitioning is normally done for manageability, performance or availability reasons, or for load balancing. It is popular in distributed database management systems, where each partition may be spread over multiple nodes, with users at the node performing local transactions on the partition. This increases performance for sites that have regular transactions involving certain views of data, whilst maintaining availability and security.

Basically, you split your table into several parts according to a field. It's very useful in terms of performance when your workflows load data incrementally (delta) or when all your queries are based on a date (e.g. my table tracks my sales month by month, so I partition it by month).

So the idea is to support partitioning in Alteryx. It would add good value, especially in In-DB workflows.

Best regards,

Simon

-

Category In Database

-

Category Input Output

-

Data Connectors

I would like to suggest a fix that allows the Connect In-DB tool's custom SQL to read Common Table Expressions (CTEs). As of 2018.2, the SQL fails because In-DB tools wrap everything in a select * statement. Since CTEs need to start with WITH, this causes the SQL to error out. This would be a huge help, instead of having to write nested sub-selects in long, complex SQL code!
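The rewrite described above can be sketched as follows with Python's `sqlite3` module. The `orders` table is made up, and the outer `SELECT * FROM (...) AS wrapped` is only an assumed approximation of the wrapping, not the exact SQL Alteryx generates. Whether a WITH clause is accepted inside such a subquery depends on the database engine, so this sketch only shows that the nested sub-select form gives the same result as the CTE.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (customer_id INTEGER, amount REAL);
    INSERT INTO orders VALUES (1, 10.0), (1, 20.0), (2, 5.0);
""")

# The query as one would like to write it, with a CTE.
cte_sql = """
    WITH totals AS (
        SELECT customer_id, SUM(amount) AS total
        FROM orders
        GROUP BY customer_id
    )
    SELECT customer_id, total FROM totals WHERE total > 10
"""

# The same logic rewritten as a nested sub-select (today's workaround).
nested_sql = """
    SELECT customer_id, total
    FROM (
        SELECT customer_id, SUM(amount) AS total
        FROM orders
        GROUP BY customer_id
    ) AS totals
    WHERE total > 10
"""

# Assumed shape of the wrapping: the custom SQL ends up inside an outer SELECT *.
wrapped_nested_sql = f"SELECT * FROM ({nested_sql}) AS wrapped"

cte_rows = conn.execute(cte_sql).fetchall()
nested_rows = conn.execute(nested_sql).fetchall()
wrapped_rows = conn.execute(wrapped_nested_sql).fetchall()

print(cte_rows, nested_rows, wrapped_rows)
```

With a single CTE the rewrite is manageable; with several CTEs that reference each other, the nested form quickly becomes hard to read, which is the point of the request.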

-

Category In Database

-

Data Connectors

As of today, for a full refresh, I can:

- create a new table

- overwrite a table (this drops the table and then creates the new one)

But sometimes the workflow fails after the old table is dropped and before the new one is created. To run it again, I have to change the tool setting to "create a new table", which may be a complex process in some companies. After that, I have to change it back to "overwrite".

What I want:

- create a new table: error if the table already exists

- overwrite a table: error if the table doesn't exist

- overwrite a table: no error if the table doesn't exist (easy in SQL: drop if exists...)
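The three requested behaviours could look like the sketch below. The mode names and the `write_table` helper are hypothetical, chosen only for this illustration, and SQLite (via Python's `sqlite3` module) stands in for the target database.

```python
import sqlite3

# Hypothetical mode names for the three requested behaviours.
MODES = ("create_strict", "overwrite_strict", "overwrite_lenient")


def table_exists(conn, name):
    rows = conn.execute(
        "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?", (name,)
    ).fetchall()
    return len(rows) > 0


def write_table(conn, name, values, mode):
    """Write integers to a one-column table according to the chosen mode."""
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode}")
    exists = table_exists(conn, name)
    if mode == "create_strict" and exists:
        raise RuntimeError(f"table {name} already exists")
    if mode == "overwrite_strict" and not exists:
        raise RuntimeError(f"table {name} does not exist")
    # Table names cannot be bound as parameters; this sketch trusts `name`.
    # DROP TABLE IF EXISTS is a no-op when the table is missing.
    conn.execute(f'DROP TABLE IF EXISTS "{name}"')
    conn.execute(f'CREATE TABLE "{name}" (value INTEGER)')
    conn.executemany(f'INSERT INTO "{name}" VALUES (?)', [(v,) for v in values])


conn = sqlite3.connect(":memory:")
write_table(conn, "sales", [1, 2], "overwrite_lenient")  # table missing: no error
write_table(conn, "sales", [3], "overwrite_strict")      # table present: replaced
print(conn.execute("SELECT value FROM sales").fetchall())
```

With the lenient mode, a rerun after a failed refresh works without touching the tool configuration, because a missing table is not treated as an error.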

Thanks!

-

Category In Database

-

Category Input Output

-

Data Connectors

Hi all,

Something really interesting I found, and never knew about, is that there are actually In-DB predictive tools. You can find these by placing a Connect In-DB tool on the canvas and then dragging on one of the many predictive tools.

For instance:

Boosted Model dragged onto an empty canvas:

Boosted Model tool deleted, Connect In-DB tool added to the canvas:

Boosted Model dragged onto the canvas in exactly the same way:

This is awesome! I have no idea how these tools work; I have only just found out they are a thing. Are we able to unhide these? I actually thought I had run into an Alteryx Designer bug, but it appears to be much more of a feature.

Sadly, these tools currently cannot be found through search and do not show up under the In-DB section. I believe they need to be more accessible and well documented so that users can find them.

Cheers,

TheOC

-

Category In Database

-

Data Connectors

Could you please enhance the Alteryx Download tool to support SFTP connections with private key (PK) authentication as well? This is not currently supported, and all of our SFTP use cases use PK.

-

Category Connectors

-

Category In Database

-

Data Connectors

Statistics are used by many databases (Hive, Vertica, etc.) to improve query speed. It would be useful to have an option on the Write Data In-DB or Data Stream In tools to compute the statistics (something like a check box).

Example on Hive: ANALYZE TABLE {table} COMPUTE STATISTICS; ANALYZE TABLE {table} COMPUTE STATISTICS FOR COLUMNS;

-

Category In Database

-

Category Input Output

-

Data Connectors

Currently, when one uses the Google BigQuery Output tool, the only options are to create a table, or append data to an existing table. It would be more useful if there was a process to replace all data in the table rather than appending. Having the option to overwrite an existing table in Google BigQuery would be optimal.

-

Category In Database

-

Data Connectors

When I create a new table in an In-DB workflow, I want to specify some constraints, especially the Primary Key/Foreign Key.

For PK/FK, the UX could be either a selection of some fields of the flow or a free-text field (to let the user choose a constant).

From Wikipedia:

In the relational model of databases, a primary key is a specific choice of a minimal set of attributes (columns) that uniquely specify a tuple (row) in a relation (table).[a] Informally, a primary key is "which attributes identify a record", and in simple cases are simply a single attribute: a unique id.

So, basically, PK/FK helps in two ways:

1/ Checking for duplicates and checking that the inserted value is legitimate

2/ Improving the query plan, especially for joins
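The first point can be illustrated with a small sketch using Python's `sqlite3` module. The `customer` and `orders` tables are made up, and SQLite stands in for the target database. Note that SQLite enforces foreign keys only when the `foreign_keys` pragma is on; other engines differ, and some accept PK/FK declarations without enforcing them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE customer (
        customer_id INTEGER PRIMARY KEY,
        name TEXT
    );
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customer(customer_id)
    );
    INSERT INTO customer VALUES (1, 'Ann');
    INSERT INTO orders VALUES (100, 1);
""")


def try_insert(sql):
    """Run an insert and report whether a constraint rejected it."""
    try:
        conn.execute(sql)
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


# Duplicate primary key: refused by the PK constraint.
duplicate = try_insert("INSERT INTO customer VALUES (1, 'Bob')")
# Order for a customer that does not exist: refused by the FK constraint.
orphan = try_insert("INSERT INTO orders VALUES (101, 999)")
# Order for an existing customer: accepted.
valid = try_insert("INSERT INTO orders VALUES (102, 1)")

print(duplicate)
print(orphan)
print(valid)
```

The second point (better query plans for joins) is engine-specific and is not shown here.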

-

Category In Database

-

Category Input Output

-

Data Connectors

I noticed in the ODBC driver log that Alteryx doesn't take into account the database type I specify. It probes every single database type to find the right one and THEN runs the queries to get the metadata.

Here is an example. I chose a Hive In-DB connection. If I read the Simba logs, I find these lines:

Mar 01 11:37:21.318 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER(), APPLICATION_ID() from system.iota

Mar 01 11:37:22.863 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER as USER_NAME from SYSIBM.SYSDUMMY1

Mar 01 11:37:23.454 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from rdb$relations

Mar 01 11:37:23.546 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select first 1 dbinfo('version', 'full') from systables

Mar 01 11:37:23.707 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select #01/01/01# as AccessDate

Mar 01 11:37:23.868 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: exec sp_server_info 1

Mar 01 11:37:24.093 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select top (0) * from INFORMATION_SCHEMA.INDEXES

Mar 01 11:37:24.219 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT SERVERPROPERTY('edition')

Mar 01 11:37:24.423 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select DATABASE() as `database`, VERSION() as `version`

Mar 01 11:37:24.635 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from sys.V_$VERSION at where RowNum<2

Mar 01 11:37:25.230 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select cast(version() as char(10)), (select 1 from pg_catalog.pg_class) as t

Mar 01 11:37:25.415 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select NAME from sqlite_master

Mar 01 11:37:25.756 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select xp_msver('CompanyName')

Mar 01 11:37:26.156 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select @@version

Mar 01 11:37:26.376 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from dbc.dbcinfo

Mar 01 11:37:26.522 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT @@VERSION;

I can understand that Alteryx tries everything when it doesn't know the database type (e.g. the in-memory Visual Query Builder), but here I have selected the Hive database, and I lose more than 5 seconds for nothing.

-

Category In Database

-

Data Connectors

Alteryx has the ability to connect to data sources using fat clients and ODBC but not JDBC. If the ability to use JDBC could be added to the product it could remove the need to install fat clients.

-

Category In Database

-

Category Input Output

-

Category Reporting

-

Data Connectors


