Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by listing the image dimension constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label gets an equal number of images),

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
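Until something like this exists in the tool, the last two points can be approximated outside it. The following is only a minimal sketch in plain Python, not how the Image Recognition tool works internally: `gray_to_rgb` and `balance_by_label` are hypothetical helper names, images are assumed to be nested lists of pixel values, and balancing is done by downsampling every label to the size of the smallest one.

```python
import random
from collections import defaultdict


def gray_to_rgb(image):
    """Replicate each grayscale pixel value across three channels (R, G, B)."""
    return [[(pixel, pixel, pixel) for pixel in row] for row in image]


def balance_by_label(samples, seed=0):
    """Downsample (item, label) pairs so every label has the same count."""
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    # The smallest class decides how many items each label keeps.
    per_label = min(len(items) for items in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for label in sorted(by_label):
        for item in rng.sample(by_label[label], per_label):
            balanced.append((item, label))
    return balanced


rgb = gray_to_rgb([[0, 255]])
balanced = balance_by_label([("a", 0), ("b", 0), ("c", 0), ("d", 1)])
```

Downsampling throws data away; oversampling the smaller classes would be an equally reasonable choice.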

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


I have a problem where bulk upload fails because the last column of the target table uses the DEFAULT option. I am not passing any value to this column because I want the specified DEFAULT value to always be applied.

The COPY command fails in this scenario if you don't specify an explicit field list.

More details of the problem can be seen in this post, along with a workaround:

A tick-box option should at least be added to the bulk upload tool to enable an explicit field list based on the column names coming into the tool.
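To illustrate what an explicit field list looks like, here is a minimal sketch that builds a COPY statement of the general `COPY table (columns) FROM 'source'` form. The post does not name the database, so the exact syntax, the function name `build_copy_statement`, the table, the columns and the source path are all assumptions; credentials and format options are left out.

```python
def build_copy_statement(table, columns, source, options=""):
    """Build a COPY statement that names the incoming columns explicitly.

    Columns left out of the list (for example one with a DEFAULT value)
    are not loaded from the file, so the database can fill them in.
    """
    column_list = ", ".join(f'"{name}"' for name in columns)
    statement = f"COPY {table} ({column_list}) FROM '{source}'"
    return f"{statement} {options}".strip()


# Hypothetical table and path; the table's DEFAULT column is simply omitted.
copy_sql = build_copy_statement(
    "sales", ["id", "amount"], "s3://example-bucket/sales.csv"
)
```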

- Category In Database

- Data Connectors

For the past 8 months I have been using Alteryx, mostly working with the Connect In-DB components. I am facing several issues, and I think these can be improved:

1. There is no way to create a table with keys defined, which is the most important pillar of a database. The options provided are also limited, i.e. create a new table, delete and append, or drop the table and recreate it.

There are many times when we need to update tables based on their keys, which I find missing. Also, with the "create a new table" option, the next time the job runs it reports that the table already exists, so we need to change the option manually before the next run.

2. When switching between In-DB and Alteryx, if there are many records Alteryx lags badly and the job keeps running for hours. How can we get the flexibility of Alteryx Designer with such a bottleneck?

3. The flexibility that Alteryx Designer provides should also be given to the In-DB components.

4. Parameters defined in the workflow cannot be accessed in the In-DB Formula tools, although they can be used in the Designer Formula tools. This reduces flexibility.

Please look into this.
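The first point (a keyed table that can be re-run and updated by key) can be sketched with Python's built-in `sqlite3` module. This is only an illustration of the requested behaviour, not an Alteryx feature: the `customers` table and the `load_by_key` helper are hypothetical, and `INSERT OR REPLACE` is SQLite syntax; other databases would typically use `MERGE` or a similar statement.

```python
import sqlite3


def load_by_key(conn, rows):
    """Create the keyed table if needed, then insert or update rows by key."""
    # IF NOT EXISTS makes the create step safe to repeat on every run.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT)"
    )
    # INSERT OR REPLACE overwrites the row that has the same primary key.
    conn.executemany(
        "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)", rows
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
load_by_key(conn, [(1, "Ann"), (2, "Bob")])      # first run creates the table
load_by_key(conn, [(2, "Robert"), (3, "Cy")])    # second run updates id 2, adds id 3
customers = conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
```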

- Category In Database

- Data Connectors

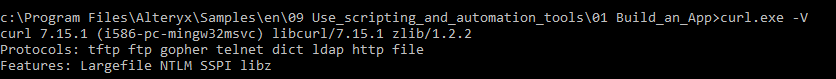

cURL currently doesn't support secure protocols. Please see the attached screenshot. We are currently using Alteryx 11.7.6.

Can Alteryx take this as a feature request and add the secure libraries to the existing cURL tool so that it supports the secure SFTP protocol?

- Category Connectors

- Category In Database

- Data Connectors

My peers at work and I were thinking that it would be good to have the join module expanded for both desktop and in-database joins.

Desktop join: the left and right outputs show only the records that are exclusive to that side of the operation. Would it be possible to also include the data that is in common?

In-DB join: the In-DB join acts like a classic join (left with matching, right with matching data). Would it be possible to get only-left and only-right joins as well?
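The join variants mentioned above can be written in plain SQL. The sketch below runs them against an in-memory SQLite database through Python's `sqlite3` module; the tables `left_t` and `right_t` and their contents are made up for illustration, and nothing here reflects how the Alteryx join tools are implemented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE left_t  (id INTEGER, l_val TEXT);
    CREATE TABLE right_t (id INTEGER, r_val TEXT);
    INSERT INTO left_t  VALUES (1, 'a'), (2, 'b');
    INSERT INTO right_t VALUES (2, 'x'), (3, 'y');
""")

# Only-left: rows of left_t that have no match in right_t.
left_only = conn.execute("""
    SELECT l.id, l.l_val
    FROM left_t l LEFT JOIN right_t r ON l.id = r.id
    WHERE r.id IS NULL
""").fetchall()

# Left with matching: every left row, plus the right value where one exists.
left_with_matching = conn.execute("""
    SELECT l.id, l.l_val, r.r_val
    FROM left_t l LEFT JOIN right_t r ON l.id = r.id
    ORDER BY l.id
""").fetchall()

# Only-right: the same pattern with the two tables swapped.
right_only = conn.execute("""
    SELECT r.id, r.r_val
    FROM right_t r LEFT JOIN left_t l ON r.id = l.id
    WHERE l.id IS NULL
""").fetchall()
```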

- Category In Database

- Category Join

- Category Parse

- Category Transform

Greenplum is a new In-DB capability, which our company has started using frequently. GP is a distributed database spread across many nodes, much like Redshift and other newer database technologies.

In order to use these databases properly, the Alteryx tools MUST have a way to distribute our tables across the nodes as we choose. Currently the In-DB tools distribute by the database default (almost always the first column in the table), which has terrible effects on performance. The workaround has been to run SQL outside of the Alteryx tools, issuing ALTER TABLE ... SET DISTRIBUTED BY statements for all tables. This cannot work on TEMP tables, as those tables are created during the workflow and deleted at the end of it.

The idea I want is an additional option for distributed databases to choose the distribution column OR choose the "RANDOMLY" option for distribution of tables [DISTRIBUTED BY statement]. This would make my DBAs very happy and make it possible for me to run even more challenging requests on my system.
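As a rough sketch of the SQL such an option would need to emit, the helper below appends a Greenplum distribution clause to a `CREATE TABLE ... AS` statement, which also covers temporary tables because the clause is applied at creation time. The function names and the example table are hypothetical; this is not what the In-DB tools generate today.

```python
def distribution_clause(columns=None):
    """Return DISTRIBUTED BY (...) for the given columns, else DISTRIBUTED RANDOMLY."""
    if columns:
        return "DISTRIBUTED BY (" + ", ".join(columns) + ")"
    return "DISTRIBUTED RANDOMLY"


def create_table_as(table, select_sql, columns=None, temporary=False):
    """Build CREATE [TEMPORARY] TABLE ... AS <query> with a distribution clause."""
    kind = "TEMPORARY TABLE" if temporary else "TABLE"
    return f"CREATE {kind} {table} AS {select_sql} {distribution_clause(columns)}"


# Hypothetical temp table distributed on customer_id.
ddl = create_table_as(
    "tmp_sales", "SELECT * FROM sales", ["customer_id"], temporary=True
)
```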

- Category In Database

- Data Connectors

We recently upgraded our SQL Server to 2016 to enable us to use R Server for predictive analytics. We were excited about the more powerful algorithms and the fact that parallel processing will make things faster on bigger data sets.

We often use stepwise logistic regression, especially in cases where we need to show which attributes are most significant. The one drawback about the upgrade was that stepwise is not available when running logistic regression in-database. I know there are ways to get around this e.g. PC etc. but it would be nice to have the ability to do stepwise in-database.

I hope there are others like me that will vote this up. I think it will help a lot of data scientists out there and is probably one of the easier suggestions :-).

- Category In Database

- Category Predictive

- Data Connectors

- Desktop Experience

Try to create an Oracle 32-bit connection. Type in something wrong in the descriptor, user id or password. You get a silent failure with no underlying notice about where you went wrong.

You can get meaningful error messages from other database types; I got meaningful errors for ODBC connections to MySQL and SQL Server while poking at this.

Granted, it's only useful for jerks like me who don't type things in correctly and still have 32-bit Oracle instances to get to. But somewhere down the chain the Oracle client is telling you that the user id was bad or the TNS name couldn't be found or whatever, and that information never gets pushed back up to the user.

- Category In Database

- Data Connectors

I hope this request will be easy to implement.

One of the handy features I really like about Alteryx's standard Join tool is the way you can select and rename fields right in the tool. It would be great if this feature could be added to the In-DB Join tool. Whenever I perform a join In-DB, I ALWAYS have to add a Select tool after it, since you always want to deselect one of the redundant fields that the join was based on (e.g. ID and R_ID).

Behind the scenes, I'm sure the select feature would still have to be handled as if it were a separate select tool, but it would just be convenient if the user interface could combine those two features.

- Category In Database

- Data Connectors

Clustering your data on a sample and then appending clusters is a common theme, especially if you are in customer relations and marketing related divisions...

When it comes to appending clusters that you have calculated from a 20K sample and you then need to "score" a few million clients, you still have to download the data and use the Append Cluster tool...

Why don't we have an In-DB Append Cluster instead, which would speed up the "distance based" scoring that Append Cluster does, on SQL Server, Oracle or Teradata...

Best
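For illustration, distance-based scoring can be expressed as a SQL query that assigns each client to its nearest centroid. The sketch below runs it in an in-memory SQLite database via Python's `sqlite3` module; the `clients` and `centroids` tables, the two features `x` and `y`, and the data are all invented, and any standardization the Append Cluster tool applies before scoring is ignored here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE clients   (client_id INTEGER, x REAL, y REAL);
    CREATE TABLE centroids (cluster INTEGER, cx REAL, cy REAL);
    INSERT INTO clients   VALUES (1, 0.0, 0.0), (2, 9.0, 9.0), (3, 4.0, 1.0);
    INSERT INTO centroids VALUES (1, 1.0, 1.0), (2, 8.0, 8.0);
""")

# Squared Euclidean distance from every client to every centroid,
# then keep the centroid with the smallest distance for each client.
assignments = conn.execute("""
    WITH dist AS (
        SELECT c.client_id, k.cluster,
               (c.x - k.cx) * (c.x - k.cx) + (c.y - k.cy) * (c.y - k.cy) AS d2
        FROM clients c CROSS JOIN centroids k
    )
    SELECT d.client_id, d.cluster
    FROM dist d
    JOIN (SELECT client_id, MIN(d2) AS min_d2 FROM dist GROUP BY client_id) m
      ON m.client_id = d.client_id AND m.min_d2 = d.d2
    ORDER BY d.client_id
""").fetchall()
```

A client that is exactly equidistant from two centroids would appear twice in this result, so a real implementation would need a tie-breaking rule.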

- Category Connectors

- Category In Database

- Data Connectors

Hi All,

It would be great if Alteryx 10.5 supported connectivity to SAS server.

Regards,

Gaurav

- Category Connectors

- Category In Database

- Data Connectors

When connected to Teradata, the error "The object name is too long in NFD/NFC" occurs if you put SQL comments above the SELECT statement in the Connect In-DB custom query box. Once the comments are removed, the problem is resolved. It would be great if the Connect In-DB tool could recognise comments for what they are.
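Until the tool handles this itself, one possible workaround is to strip the leading comments before pasting the query. Below is a minimal sketch with a hypothetical helper, `strip_leading_comments`; it only removes `--` and `/* */` comments that appear before the first statement and does not attempt to parse comments elsewhere in the query.

```python
import re

# Leading whitespace, "-- line" comments and "/* block */" comments.
_LEADING_COMMENTS = re.compile(
    r"\A(?:\s+|--[^\n]*(?:\n|\Z)|/\*.*?\*/)+", re.DOTALL
)


def strip_leading_comments(sql):
    """Remove comments and blank space that precede the first SQL statement."""
    return _LEADING_COMMENTS.sub("", sql, count=1)


cleaned = strip_leading_comments(
    "-- monthly extract\n/* owner:\n   analytics */\nSELECT 1 -- keep"
)
```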

- Category In Database

- Category Input Output

- Data Connectors

Issue:

Currently there is no "In-Database" node to perform action queries.

Workaround:

Use the pre/post SQL in the "Output Data" node to perform action queries.

Impact:

Ease of use of the Alteryx "In-Database" nodes when working on large-scale databases.

Currently KNIME and SSIS are preferred over Alteryx.

Action requested:

Please add an action query node to the "In-Database" group.

- Category In Database

- Data Connectors

I'm using Alteryx with a Hadoop cluster, so I use lots of In-DB tools to build my workflows with the Simba Hive ODBC Driver.

My Hadoop administrator set some kind of default properties in order to share the power of the cluster among many people.

But some intensive workflows need to override some of these properties. For instance, I must adjust the size of the Tez container by setting specific values for hive.tez.container.size and hive.tez.java.opts.

A workaround is to set those properties in the server-side properties panel in the ODBC Administrator, but if I have many different configurations, I end up with a lot of ODBC data sources, which is not ideal.

It would be nice if I could set those properties directly in the Connect In-DB tool.
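In Hive itself, session-level overrides like these are written as `SET key=value;` statements. The sketch below only generates those statements from a dictionary; the helper name `hive_set_statements` and the property values are made-up examples, and whether the Connect In-DB tool could run them ahead of the query is exactly what this idea asks for.

```python
def hive_set_statements(properties):
    """Turn a {property: value} mapping into Hive session SET statements."""
    return [f"SET {key}={value};" for key, value in properties.items()]


# Example values only; real sizes depend on the cluster.
overrides = hive_set_statements({
    "hive.tez.container.size": 4096,
    "hive.tez.java.opts": "-Xmx3276m",
})
```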

- Category In Database

- Data Connectors

Currently we resort to using a manual CREATE TABLE script in Redshift in order to define a distribution key and a sort key.

See below:

http://docs.aws.amazon.com/redshift/latest/dg/tutorial-tuning-tables-distribution.html

It would be great to have functionality similar to the bulk loader for Redshift, whereby one can define distribution keys and sort keys, as these greatly improve performance with larger datasets.
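The kind of script this refers to can be sketched as a small generator for Redshift `CREATE TABLE` statements with `DISTKEY` and `SORTKEY` attributes. The function name, table and columns below are hypothetical, and this is only an illustration of the DDL, not an existing Alteryx option.

```python
def redshift_create_table(table, column_defs, distkey=None, sortkeys=()):
    """Build a CREATE TABLE statement with optional DISTKEY and SORTKEY."""
    parts = [f"CREATE TABLE {table} ({', '.join(column_defs)})"]
    if distkey:
        parts.append(f"DISTKEY ({distkey})")
    if sortkeys:
        parts.append(f"SORTKEY ({', '.join(sortkeys)})")
    return " ".join(parts) + ";"


# Hypothetical table distributed on customer_id and sorted by order_date.
ddl = redshift_create_table(
    "orders",
    ["order_id BIGINT", "customer_id BIGINT", "order_date DATE"],
    distkey="customer_id",
    sortkeys=["order_date"],
)
```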

- Category In Database

- Category Input Output

- Data Connectors

We don't have Server. Sometimes it's easy to share a workflow the old fashioned way - just email a copy of it or drop it in a shared folder somewhere. When doing that, if the target user doesn't have a given alias on their machine, they'll have issues getting the workflow to run.

So, it would be helpful if saving a workflow could save the aliases along with the actual connection information. Likewise, it would then be nice if someone opening the workflow could add the aliases found therein to their own list of aliases.

Granted, there may be difficulties - this is great for connections using integrated authentication, but not so much for userid/password connections. Perhaps (if implemented) it could be limited along these lines.

- Category Connectors

- Category In Database

- Data Connectors

Hello all,

It would be great if there were an option in the Write In-DB tool to specify a SQL statement or to delete based on a condition. Currently we have to delete all records even when we are trying to delete and append only a subset of records. If it at least allowed a "WHERE" clause, it would be very useful. I have a long post about this requirement at http://community.alteryx.com/t5/Data-Preparation-Blending/Is-there-a-way-to-do-a-delete-statement-in... .

Regards,

Jeeva.
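The requested behaviour (delete only the rows matching a condition, then append) can be sketched with Python's `sqlite3` module. The `sales` table, its two columns and the `delete_and_append` helper are invented for the example; the table name and WHERE text are interpolated directly, so this pattern is only suitable for trusted input.

```python
import sqlite3


def delete_and_append(conn, table, where_sql, params, rows):
    """Delete the rows matching a condition, then append new rows, atomically."""
    with conn:  # commits on success, rolls back if either step fails
        conn.execute(f"DELETE FROM {table} WHERE {where_sql}", params)
        conn.executemany(f"INSERT INTO {table} VALUES (?, ?)", rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("EU", 10), ("US", 20)])

# Replace only the EU subset; the US rows are left untouched.
delete_and_append(conn, "sales", "region = ?", ("EU",), [("EU", 15)])
sales = conn.execute("SELECT region, amount FROM sales ORDER BY region").fetchall()
```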

- Category In Database

- Data Connectors

Is it possible to add some color coding to the In-DB tools? I am building out models In-DB and I end up with a sea of navy blue icons. Maybe they could generally correspond to the other tools. For example, Summarize would be orange, Formula lime green, etc.

- Category In Database

- Data Connectors

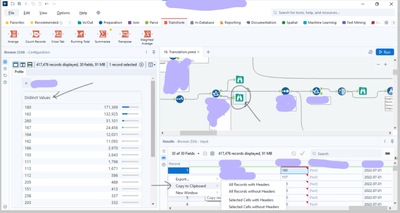

It would be beneficial to make the actions available in the message Results window, like the copy options, also available in the Browse tool for the distinct values.

- Category In Database

- Enhancement

- UX

Introduce CTE functions and temp tables when reading from SQL databases into Alteryx. I have faced use cases where I need to bring in data from multiple source tables based on a certain delta condition. However, since the SQL queries turn out to be complex, I want an option to wrap them in a CTE and then use the CTE as an input for In-DB processing in Alteryx workflows.
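As an illustration of the pattern described, the sketch below wraps a delta condition over two source tables in a CTE and then queries the CTE. It runs in an in-memory SQLite database through Python's `sqlite3` module; the tables `orders_a` and `orders_b`, the `updated_at` column and the cut-off date are all made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders_a (id INTEGER, amount INTEGER, updated_at TEXT);
    CREATE TABLE orders_b (id INTEGER, amount INTEGER, updated_at TEXT);
    INSERT INTO orders_a VALUES (1, 10, '2024-01-01'), (2, 20, '2024-02-01');
    INSERT INTO orders_b VALUES (3, 30, '2024-03-01');
""")

# The CTE collects rows changed since the last load from both sources;
# the outer query then treats it like a single input table.
delta_sql = """
    WITH delta AS (
        SELECT id, amount FROM orders_a WHERE updated_at > :since
        UNION ALL
        SELECT id, amount FROM orders_b WHERE updated_at > :since
    )
    SELECT COUNT(*), SUM(amount) FROM delta
"""
row_count, total_amount = conn.execute(delta_sql, {"since": "2024-01-15"}).fetchone()
```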

- Category In Database

- Data Connectors

Alteryx really needs to show a results window for the In-DB processes. Without it, it is like we are building blindly. Workarounds are too much of a hassle.

- Category In Database

- Data Connectors
