Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition" tool for a few days, I think it could be improved:

> by showing the image dimension constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
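The second suggestion, splitting the training data so that every label is equally represented, can be sketched as stratified undersampling. This is a minimal Python sketch, not the tool's behaviour: it assumes the training data is a list of hypothetical `(image_path, label)` pairs and trims every label to the size of the smallest one before splitting.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (image_path, label) pairs into training and validation sets
    in which every label has the same number of images.

    Each label is randomly undersampled to the size of the smallest label,
    then divided according to train_fraction.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)
    rng = random.Random(seed)  # fixed seed for a reproducible split

    train, validation = [], []
    for label in sorted(by_label):
        chosen = rng.sample(by_label[label], per_label)  # undersample this label
        train += [(path, label) for path in chosen[:n_train]]
        validation += [(path, label) for path in chosen[n_train:]]
    return train, validation
```

With 10 cat images and 6 dog images, each label contributes 4 training and 2 validation images, and the 4 surplus cat images are left out. Undersampling is only one option; oversampling or class weights would keep every image.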

Question: will you allow users to choose between CPU and GPU processing in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Would it be possible to update the Salesforce Input tool to support API version 49 or later?

Changes were made to the way recurring events are handled in the Salesforce Lightning update, and the current Salesforce Input connector does not include all events when extracting.

- Category Connectors
- Data Connectors

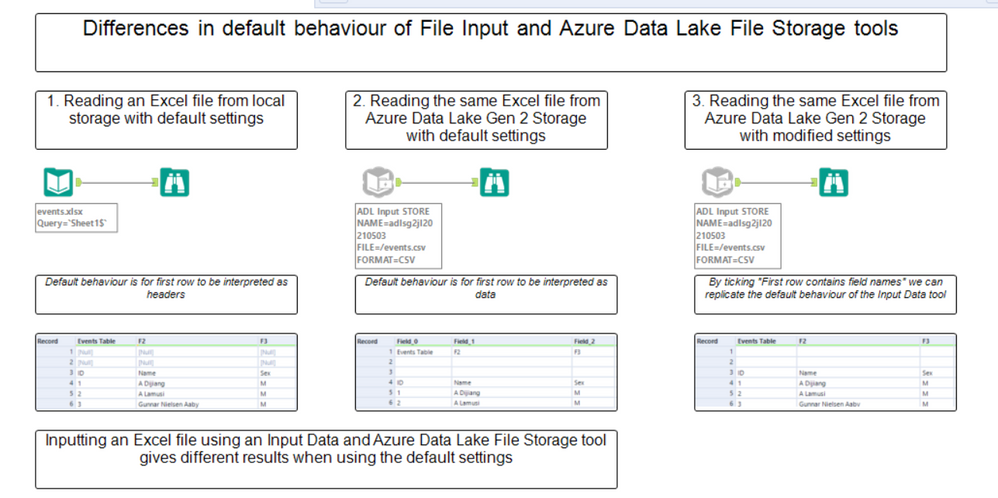

When inputting a CSV file via the Azure Data Lake File Storage tool the default behaviour is for the first row to be interpreted as data.

When reading the same file locally using the File Input tool the default behaviour is for the first row to be interpreted as headers.

Since the majority of files will include headers on the first row, it would be helpful to have the "First row contains field names" option selected by default in the Azure Data Lake File Storage tool, and this would also bring the defaults of this tool in line with the standard File Input tool.

Illustration below showing the issue:
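To make the difference between the two defaults concrete, here is a minimal Python sketch using the standard `csv` module. It illustrates the behaviour described above and is not either tool's implementation; the `Field_1`, `Field_2` names for headerless columns are chosen for the example.

```python
import csv
import io


def read_rows(text, first_row_contains_field_names=True):
    """Parse CSV text into dicts, mimicking a "First row contains field names" option."""
    if first_row_contains_field_names:
        # The first row supplies the column names.
        return list(csv.DictReader(io.StringIO(text)))
    # Otherwise the first row is ordinary data and columns get positional names.
    rows = csv.reader(io.StringIO(text))
    return [{f"Field_{i + 1}": value for i, value in enumerate(row)} for row in rows]


CSV_TEXT = "id,name\n1,Alice\n2,Bob\n"
```

With the option off, the header line `id,name` comes back as an extra data row, which is the behaviour the post describes for the Azure Data Lake File Storage tool.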

- Category Connectors
- Data Connectors

Hello,

Regarding the Amazon S3 tools in Alteryx Designer, only 4 file formats are supported.

We would also like to see support for the following formats: .xls and .xlsx.

Regards.

- Category Connectors
- Data Connectors

There are a number of requests for bulk loaders to databases, and I'm adding MySQL to the list.

Really, every database connection (on-premises and cloud) needs some bulk loader capability added (if it doesn't have one already).
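For MySQL, the usual bulk path is `LOAD DATA LOCAL INFILE`, which loads a whole staged file in one statement instead of inserting row by row. A minimal Python sketch of the staging step, using a hypothetical `stage_for_mysql_bulk_load` helper; it assumes `local_infile` is enabled on both the MySQL server and client, and the returned statement still has to be executed through a MySQL driver.

```python
import csv
from pathlib import Path


def stage_for_mysql_bulk_load(rows, columns, csv_path, table):
    """Write rows to a CSV staging file and return a LOAD DATA statement
    that loads the whole file in a single round trip.

    Assumes values contain no backslashes (MySQL's default escape character).
    """
    path = Path(csv_path)
    with path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle, lineterminator="\n")
        writer.writerow(columns)  # header row, skipped by IGNORE 1 LINES
        writer.writerows(rows)

    column_list = ", ".join(f"`{name}`" for name in columns)
    return (
        f"LOAD DATA LOCAL INFILE '{path.as_posix()}' "
        f"INTO TABLE `{table}` "
        "CHARACTER SET utf8mb4 "
        "FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '\"' "
        "LINES TERMINATED BY '\\n' "
        "IGNORE 1 LINES "
        f"({column_list})"
    )
```

The CSV writer doubles embedded quotes, which MySQL reads back as a single quote character inside an enclosed field.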

- Category Connectors
- Category In Database
- Category Input Output
- Data Connectors

I know that incoming and outgoing connections can be wired or wireless, and that they are highlighted when one clicks on a tool. However, it would be very useful to be able to highlight a particular connector in a particular colour (selected from a palette, perhaps, from the drop-down window or from the configuration). This would be especially useful when there are many connectors originating from a single tool.

Thanks

- Category Connectors
- Data Connectors

I have had multiple instances of needing to parse a set of PDF files. While I realize that this has been discussed previously with workarounds here: https://community.alteryx.com/t5/Alteryx-Knowledge-Base/Can-Alteryx-Parse-A-Word-Doc-Or-PDF/ta-p/115...

having a native PDF input tool would help me significantly. I don't have admin rights on my computer (at work), so downloading a new app to then use with the "Run Command" tool is inconvenient, requires approval from IT, etc. It would save me (and, I'm sure, others) time, both in my Alteryx workflows each time I need it and up front, since I would not need to get the PDFtoText program installed.

- Category Connectors
- Category Input Output
- Data Connectors

I noticed that Tableau has a new connector to Anaplan in the upcoming release.

Does Alteryx have any plans to create an Anaplan connector?

- Category Connectors
- Data Connectors

Please follow the link above.

We are seeing a huge number of requests from users to support this feature.

Up to version 2019.4, Alteryx cannot connect to a table unless complete read access to it has been granted.

Other data connector tools can do this; Alteryx is the exception.

Please consider this a feature request.

- Category Connectors
- Data Connectors

Hello Alteryx,

It seems that the Endpoint parameter of the Amazon S3 Upload tool only supports "path-style" URLs. It would be great if the Endpoint parameter could also accept "virtual-hosted-style" URLs.

When we enter a virtual-hosted-style URL, the "Bucket Name" and "Object Name" parameters don't respond correctly.

The three-dots option for the "Bucket Name" parameter returns the bucket name and the object name at the same time, and the three-dots option for the "Object Name" parameter doesn't suggest any object name.

We can enter these values manually, but we lose some of the Alteryx functionality.

It would be a great improvement if the Endpoint parameter accepted virtual-hosted-style URLs, so that we keep the "Bucket Name" and "Object Name" suggestions once the Endpoint is registered.
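Both URL styles carry the same bucket and object information, so resolving them is mostly string handling. A minimal Python sketch with a hypothetical `parse_s3_url` helper, assuming standard AWS hostnames (`s3.<region>.amazonaws.com` for path-style, `<bucket>.s3.<region>.amazonaws.com` for virtual-hosted-style); custom or S3-compatible endpoints are out of scope.

```python
import re
from urllib.parse import unquote, urlparse

# Virtual-hosted-style host: "<bucket>.s3.<region>.amazonaws.com" (or the legacy
# "<bucket>.s3-<region>.amazonaws.com"). The greedy ".+" keeps any dots or
# "s3" fragments that belong to the bucket name itself.
_VIRTUAL_HOST = re.compile(r"^(?P<bucket>.+)\.s3[.-].*amazonaws\.com$")


def parse_s3_url(url):
    """Return (bucket, object_key) for an AWS S3 URL in either addressing style."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    path = unquote(parsed.path.lstrip("/"))
    if not host.endswith(".amazonaws.com"):
        raise ValueError(f"not an AWS S3 URL: {url}")

    match = _VIRTUAL_HOST.match(host)
    if match:
        # Virtual-hosted-style: bucket in the hostname, whole path is the key.
        return match.group("bucket"), path
    # Path-style: the first path segment is the bucket.
    bucket, _, key = path.partition("/")
    return bucket, key
```

Trying the virtual-hosted pattern first avoids misreading a bucket whose own name starts with `s3-` as a path-style endpoint.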

Is this on the roadmap?

François

- Category Connectors
- Data Connectors

When we call external websites from the Alteryx Designer Download tool, our company proxy server fails the authentication because Alteryx uses basic username/password authentication. This has happened with multiple applications that need to interact with external partners. We would like to request an enhancement that enables Alteryx to authenticate using Kerberos or NTLM.

- API SDK
- Category Connectors
- Category Developer
- Data Connectors

As you may know, querying Hive for its metadata is currently very slow in Alteryx.

A first step toward improvement (at least in the Visual Query Builder) has been proposed here.

But the real issue with Hive is the way Alteryx queries the metadata: it issues "Show table" queries against every database. On our cluster, that means more than 400 queries, each taking about 0.5 seconds, so the user has to wait about 4 minutes.

A solution: use a Java API to query the Hive metastore directly, if one exists (this could be another tab in the In-Database configuration). Our cluster admin has an example of a Thrift API in Java that we can give you.

Result: 2 seconds for 38,700 tables in more than 500 databases!!
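As a rough check on these timings, the metadata wait grows linearly with the number of "Show table" queries $n$, each taking about $t$ seconds:

$$
T \approx n \cdot t = 400 \times 0.5\ \text{s} = 200\ \text{s} \approx 3.3\ \text{min}
$$

Since the post says more than 400 queries, 200 s is a lower bound; the reported wait of about 4 minutes (240 s) corresponds to roughly 480 queries. Compared with the 2-second metastore lookup, that is roughly a 100× to 120× reduction.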

- Category Connectors
- Category In Database
- Category Input Output
- Data Connectors

Our company is implementing an Azure Data Lake and we have no way of connecting to it efficiently with Alteryx. We would like to push data into the Azure Data Lake store and also pull it out with the connector. Currently, there is not an out-of-the-box solution in Alteryx and it requires a lot of effort to push data to Azure.

- Category Connectors
- Data Connectors

Not sure if any of you have a similar issue - but we often end up bringing in some data (either from a website or a table) to profile it - and then an hour in, you realise that the data will probably take 6 weeks to completely ingest, but it's taken in enough rows already to give us a useful sense.

Right now, the only option is to stop (in which case all the profiling tools at the end of the flow will all give you nothing) and then restart with a row-limiter - or let it run to completion. The tragedy of the first option is that you've already invested an hour or 2 in the data extract, but you cannot make use of this.

It feels like there's a third option: an option to "Stop bringing in new data, but finish the data that you currently have", which terminates any input or download tools in their current state and lets the remainder of the data flush through the full workflow.
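The proposed "finish what you have" behaviour amounts to a cooperative stop flag checked by the input before it pulls each new row, while everything already read keeps flowing downstream. A minimal Python sketch, assuming hypothetical `read_source` and `profile` functions as stand-ins for an input tool and the profiling tools; in practice the flag would be set from another thread, such as the UI.

```python
import threading


def read_source(rows, stop_requested):
    """Yield rows from a (possibly very slow) source until a stop is requested.

    The stop flag is checked before each new row is fetched, so rows already
    handed downstream are still processed to completion.
    """
    iterator = iter(rows)
    while not stop_requested.is_set():
        try:
            row = next(iterator)
        except StopIteration:
            return  # the source finished on its own
        yield row


def profile(rows):
    """Stand-in for downstream profiling tools: count and sum the values seen."""
    count = total = 0
    for value in rows:
        count += 1
        total += value
    return {"count": count, "sum": total}
```

For example, if a million-row source triggers the stop while producing its sixth row, `profile` still returns a result for the six rows already read (`{"count": 6, "sum": 15}`) instead of nothing.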

Hopefully I'm not alone in this need 🙂

- Category Connectors
- Category In Database
- Category Input Output
- Data Connectors

Pushing data to Salesforce from Oracle would be much easier if we were able to perform an UPSERT (update if existing, insert if not existing) on any unique ID field in Salesforce. Currently, we have to filter for the records that do or don't have an ID and then run an Update or an Insert based on the filter.
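The UPSERT semantics requested here fit in a few lines. A minimal Python sketch against an in-memory dict standing in for the Salesforce object, keyed by a hypothetical unique field `External_Id__c`; it does not call any Salesforce API.

```python
def upsert(target, records, key="External_Id__c"):
    """Upsert records into target (a dict keyed by unique ID).

    Updates the existing row when the ID is already present and inserts a new
    row otherwise. Returns (updated_count, inserted_count).
    """
    updated = inserted = 0
    for record in records:
        record_id = record[key]
        if record_id in target:
            target[record_id].update(record)  # update if existing
            updated += 1
        else:
            target[record_id] = dict(record)  # insert if not existing
            inserted += 1
    return updated, inserted
```

A single upsert step replaces the Filter plus separate Update and Insert branches described above.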

- Category Connectors
- Data Connectors

In a shared collection, users have access to the workflows shared by other team members. When a user copies the ‘Publish to Tableau Server’ tool from one workflow to another, the credentials embedded in the tool are copied as well.

For example, user John Doe’s workflow publishes data to Tableau Server with Peter’s credentials, because the Publish to Tableau Server tool was copied from Peter’s workflow.

The real concern is that users copying tools from one workflow can copy the credentials as well. An enhancement to the Publish to Tableau Server tool would be much appreciated.

- Category Connectors
- Data Connectors

I recently began using the SharePoint Files v2.0.1 tools to read and write data. The SharePoint Files Output tool allows you to take a sheet or file name from a column, but that column is still included in the output. The standard Output Data tool has a "Keep Field in Output" checkbox that lets you control whether the column stays in the XLSX or CSV file. It would be great if the same functionality could be included in the SharePoint Files Output tool.
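The "Keep Field in Output" behaviour can be sketched as grouping rows by the sheet or file name column and optionally dropping that column before writing. A minimal Python sketch with a hypothetical `split_by_field` helper, not the tool's implementation:

```python
from collections import defaultdict


def split_by_field(rows, field, keep_field_in_output=True):
    """Group rows by the value of `field` (e.g. a sheet or file name).

    When keep_field_in_output is False, the grouping column is removed from
    the rows destined for each sheet or file.
    """
    outputs = defaultdict(list)
    for row in rows:
        target = row[field]
        if not keep_field_in_output:
            row = {name: value for name, value in row.items() if name != field}
        outputs[target].append(row)
    return dict(outputs)
```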

- Category Connectors
- Data Connectors

My company has recently purchased some Alteryx licences in the hope of advancing its data science capability. The business is currently moving all its POS data from on-premises to a cloud environment and has identified Azure Cosmos DB as a perfect environment to house the streaming data. Having purchased the Alteryx licences, we now face the challenge of not being able to connect to the Azure Cosmos DB environment, and we would like Alteryx to consider speeding up the development of this connector.

- Category Connectors
- Category In Database
- Data Connectors

I would like to raise the idea of creating a feature that resolves the repetitive authentication problem between Alteryx and Snowflake.

This is the same issue that was raised in the community forum on 11/6/18: https://community.alteryx.com/t5/Alteryx-Designer-Desktop-Discussions/ODBC-Connection-with-ExternalB...

Can a feature be added to store the authentication for the duration of the session and eliminate the browser pop-up? The proposed solution eliminates the prompt for credentials; however, it does not eliminate the browser pop-ups. For the Input/Output functions, this opens four new browser windows, one for each time Alteryx tests the connection.
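Storing the authentication for the session is essentially memoization keyed by account and user. A minimal Python sketch with a hypothetical `SessionAuthCache` class and a caller-supplied `authenticate` function standing in for the browser-based sign-in; a real implementation would also need to handle token expiry, which this sketch ignores.

```python
class SessionAuthCache:
    """Cache one authentication result per (account, user) for the session,
    so repeated connection tests reuse it instead of opening a new browser
    window each time."""

    def __init__(self, authenticate):
        # authenticate(account, user) -> token; e.g. runs the browser sign-in flow.
        self._authenticate = authenticate
        self._tokens = {}

    def token(self, account, user):
        key = (account, user)
        if key not in self._tokens:
            self._tokens[key] = self._authenticate(account, user)  # first use only
        return self._tokens[key]
```

With this approach, the four connection tests mentioned above would trigger a single sign-in instead of four browser windows.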

- Category Connectors
- Data Connectors

As Tableau has continued to open more APIs with their product releases, it would be great if these could be exposed via Alteryx tools.

One specifically I think would make a great tool would be the Tableau Document API (link) which allows for things like:

- Getting connection information from data sources and workbooks (Server Name, Username, Database Name, Authentication Type, Connection Type)

- Updating connection information in workbooks and data sources (Server Name, Username, Database Name)

- Getting Field information from data sources and workbooks (Get all fields in a data source, Get all fields in use by certain sheets in a workbook)

For those of us who use Alteryx to automate much of our Tableau work, having an easy tool to read and write this information (instead of writing a Python script) would be beneficial.
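The first capability listed, reading connection information, can be sketched with the Python standard library alone. This is a minimal sketch, not the Tableau Document API: it assumes a `.twb` workbook is plain XML in which each `<connection>` element carries attributes such as `server`, `dbname`, `username`, `authentication`, and `class`, and the hypothetical `read_connections` helper simply collects them.

```python
import xml.etree.ElementTree as ET

# Attributes assumed to hold connection details on <connection> elements.
CONNECTION_ATTRIBUTES = ("server", "username", "dbname", "authentication", "class")


def read_connections(twb_xml):
    """Return the connection attributes of every <connection> element in a
    workbook's XML text (missing attributes come back as None)."""
    root = ET.fromstring(twb_xml)
    return [
        {name: conn.get(name) for name in CONNECTION_ATTRIBUTES}
        for conn in root.iter("connection")
    ]
```

Nested connections (for example, a federated connection wrapping the real database connection) are all returned, so callers may need to filter on `class`.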

- Category Connectors
- Category Reporting
- Data Connectors
- Desktop Experience
