Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


We aren't getting a huge amount of help from support on this, so I'm posting this idea to raise awareness for the product teams responsible for the Salesforce connectors and the embedded Python environment.

This post from user Dubya describes the issue in detail:

I have a workflow with several salesforce tools in it, which works fine on my machine. But we need another alteryx user in our office to be able to access, run and maintain the workflow too, via their machine and copy of alteryx designer.

However we're finding that the salesforce inputs and outputs can only be authenticated on one machine at a time.

When the other new user opens the original workflow from the shared network location, the Salesforce tools display the error "Salesforce Input (1): {'error': 'invalid_grant', 'error_description': 'authentication failure'}" and fail to load any data. But we can see the full query in the tool, and we can even set the custom query option and validate the query successfully, which suggests the source is being connected to and queried correctly; we just can't run the tool.

The only way to run the tool successfully is to change the credentials and re-authenticate the tool. However, this then de-authenticates the original machine, and when we open the workflow there and try to run it, the same error comes back.

We've both tried this authentication back and forth on our own machines and each time one of us re-authenticates, it de-authenticates the other, leading to it triggering the error.

Can someone help explain what's going on and how to fix it, as this doesn't bode well for our collaboration.

We're both running:

The latest build of Designer 2021.2 (original machine also running Desktop Automation)

Salesforce Input Tool v4.1.0

Salesforce Output Tool v1.3.0

My response here identifies that this is a problem for our organization as well:

We're experiencing the same issue. It appears to be related to how the tool handles password and security token decryption. I've found that when you modify the related registry entry from "true" to "false", you can see in the tool's xml that the encrypted password and security token are still in there. I'm not sure what else is going on behind the scenes beyond that, but that ought to be addressable by the product teams handling the Salesforce connectors and the Python installation embedded in Designer.

The only differences in our environment compared to u/Dubya's are that we're running on 2020.4 and attempting to use Salesforce Input Tool v4.2.4.

This is a must have for anyone who needs the ability to share workflows among multiple users. This is part of a series of problems that these updated connectors have been plagued with since introducing them years ago, and no one at Alteryx seems to care enough to truly fix the problems. Salesforce is a core system for our organization, so having tools that utilize the latest version of Salesforce's APIs is very important to us. The additional features that the Input tool provides are welcome, but these bugs have to be sorted out in order for us to extract any kind of value out of them. If the "deprecated" Salesforce tools were ever to be removed from Designer while there are issues with the "new" connectors, we would have no choice other than to never upgrade Designer/Server again and be forced to look for another product to serve as our ETL platform.

Please, please, please address this.

- API SDK

- Category Connectors

- Data Connectors

- Desktop Experience

It would be great to have the below functionality in Alteryx.

A workflow is built in Alteryx, and a button click in Alteryx generates SQL code that can be run on a specific database platform, such as SQL Server, from an external editor such as SQL Server Management Studio. Thanks.

- API SDK

- Category Connectors

- Category Developer

- Category In Database

Hi all,

Currently, only the SharePoint List tool (deprecated) works with DCM. It would be amazing if the SharePoint Files Input/Output tools also worked with DCM.

Thank you,

Fernando Vizcaino

- Category Connectors

- Data Connectors

After talking with support we found out that Oracle Financial Cloud ERP is not listed among supported Data Sources as stated in the url below:

We would like this added as our company will begin working heavily with Oracle Financial Cloud ERP to bring data from that into our SQL servers. Is there a reason why that connection is not currently being investigated and set up?

Thanks,

Chris

- Category Connectors

- Data Connectors

Hi,

Currently, loading large files (over 100 MB) to PostgreSQL takes an extremely long time. For example, writing a 1 GB file to PostgreSQL takes 27 minutes! This is seriously impacting our ability to use Alteryx as an ETL tool for loading our target Postgres data warehouse. We would really like to see bulk loading to Postgres supported by Alteryx to help alleviate the performance issues.

Thanks,

Vijaya
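As an illustration of the kind of bulk load being requested, PostgreSQL's `COPY ... FROM STDIN` command ingests a whole CSV stream in one statement instead of row-by-row inserts. The sketch below only builds the statement and an in-memory CSV buffer; the function names, table name, and column names are hypothetical, and this is not how Alteryx writes to Postgres today.

```python
import csv
import io


def build_copy_statement(table, columns):
    """Return a PostgreSQL COPY ... FROM STDIN statement for CSV input."""

    def quote(name):
        # Double-quote identifiers and escape any embedded double quotes.
        return '"' + name.replace('"', '""') + '"'

    column_list = ", ".join(quote(c) for c in columns)
    return f"COPY {quote(table)} ({column_list}) FROM STDIN WITH (FORMAT csv)"


def rows_to_csv_buffer(rows):
    """Serialize rows into an in-memory CSV buffer that COPY can read."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    buffer.seek(0)  # rewind so the consumer reads from the start
    return buffer


# Hypothetical table and columns, for illustration only.
statement = build_copy_statement("sales", ["id", "amount"])
payload = rows_to_csv_buffer([[1, "10.50"], [2, "7.25"]])
```

With a driver such as psycopg2, the statement and buffer could then be passed to `cursor.copy_expert(statement, payload)`; that step needs a live database and is left out here.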

- Category Connectors

- Category In Database

- Data Connectors

Would like to query a Hyperion Cube / Essbase data source directly. Please add this functionality in the next release or add a user macro to the Gallery. Thanks -cb

- Category Connectors

- Data Connectors


While Alteryx allows for a proxy username and password in the settings, these are not passed properly to an NTLM proxy. Support for NTLM authentication would be incredibly useful for a number of corporations who utilize this firewall setup.

We currently have to either download via Python or cURL through batch commands called by Alteryx. Since Alteryx uses a cURL back-end, this should be a fairly simple addition to the existing download tool by allowing a selection of proxy server, port, and authentication method in addition to the proxy username and password. This could be done either in the tool itself or in User Settings.
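The cURL workaround described above can be sketched roughly as follows. The `--proxy`, `--proxy-ntlm`, and `--proxy-user` options are standard curl flags; the function names, host, port, and credentials are hypothetical placeholders, and the exact batch setup used by the poster is not shown in the post.

```python
import subprocess


def build_curl_command(url, output_path, proxy_host, proxy_port,
                       proxy_user, proxy_password):
    """Build a curl argument list that authenticates to the proxy with NTLM."""
    return [
        "curl",
        "--silent", "--show-error",  # quiet, but still report errors
        "--fail",                    # non-zero exit code on HTTP errors
        "--location",                # follow redirects
        "--proxy", f"http://{proxy_host}:{proxy_port}",
        "--proxy-ntlm",              # use NTLM for proxy authentication
        "--proxy-user", f"{proxy_user}:{proxy_password}",
        "--output", output_path,
        url,
    ]


def download_via_ntlm_proxy(*args):
    """Run the command; raises CalledProcessError if curl fails."""
    subprocess.run(build_curl_command(*args), check=True)


# Hypothetical values, for illustration only.
command = build_curl_command(
    "https://example.com/data.csv", "data.csv",
    "proxy.example.local", 8080, "DOMAIN\\user", "secret",
)
```

Passing the password on the command line exposes it to other local processes, so a real workflow would likely read it from a protected source instead.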

- API SDK

- Category Connectors

- Category Developer

- Data Connectors

TIBCO Data Virtualization is a Data Virtualization product focused on creating a virtual data store consolidating data from throughout the enterprise. It can be accessed via a SQL query engine, and has a variety of supported connectors, including an ODBC driver.

This data source can be connected to via ODBC in Alteryx today, but error messaging is unclear/unhelpful, and attempting to use the Visual Query Builder causes Alteryx to crash.

Adding TIBCO Data Virtualization as a supported ODBC connection would empower business users to leverage this product and easily utilize this enterprise data store, enhancing the value of the Alteryx platform as a consumer of this data.

- Category Connectors

- Category In Database

- Category Input Output

- Data Connectors

I have recently added an Azure data lake v2. The Azure input/output connectors do not work with this version of the Azure data lake.

It appears that Alteryx appends ".azuredatalakestore.net" to the file path. This works for v1 but is not needed for v2.

Are there any plans to provide a connector for Azure data lake v2?
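To make the difference concrete: Data Lake Storage Gen1 accounts are addressed as `<account>.azuredatalakestore.net`, while Gen2 accounts use the `<account>.dfs.core.windows.net` endpoint. A minimal sketch of choosing the host by generation follows; the function name and account name are hypothetical, and this is not the connector's actual code.

```python
def adls_host(account_name, generation):
    """Return the storage host name for an Azure Data Lake account."""
    if generation == 1:
        # Gen1 accounts live under the azuredatalakestore.net domain.
        return f"{account_name}.azuredatalakestore.net"
    if generation == 2:
        # Gen2 accounts are storage accounts exposed through the DFS endpoint.
        return f"{account_name}.dfs.core.windows.net"
    raise ValueError(f"unsupported Data Lake generation: {generation}")


# Hypothetical account name, for illustration only.
gen1_host = adls_host("mylake", 1)
gen2_host = adls_host("mylake", 2)
```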

- Category Connectors

- Data Connectors

Single point of maintenance for Salesforce Input tool connection to Salesforce

This prevents user maintenance every time their password (and token) changes which requires them to update every Tool with new credentials

Also logged as issue under Alteryx, Inc Case # 00252975: Connection to Salesforce Issue

- Category Connectors

- Data Connectors

Alteryx Server was recently updated to allow TLS-mediated connections to the MongoDB persistence layer. This allowed us to switch off of the embedded MongoDB to a highly-available MongoDB Atlas cluster. To our surprise after the switch, when we went to edit our workflows that make use of the persistence layer's data (Server Usage Report, etc.) to hit the new Atlas cluster, we found that the MongoDB Input tool does not support TLS connections. This absolutely needs to be changed. Based on organizational constraints, Atlas is our only option for a HA persistence layer. We absolutely have to have TLS support for the MongoDB Input tool. There is no other way for us to natively query our server persistence layer in Designer. Please bring the MongoDB Input tool into alignment with the MongoDB connections that are supported by Alteryx Server.
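For context, a TLS-enabled connection to an Atlas cluster is normally expressed through the connection string. The sketch below builds a `mongodb+srv://` URI with `tls=true` using only the standard library; the function name, host, user, and database are hypothetical, and this says nothing about how the MongoDB Input tool is implemented.

```python
from urllib.parse import quote_plus


def build_atlas_uri(user, password, cluster_host, database):
    """Build a mongodb+srv URI with TLS enabled and credentials escaped."""
    # Credentials must be percent-encoded so characters like '@' or '/'
    # do not break the URI structure.
    return (
        f"mongodb+srv://{quote_plus(user)}:{quote_plus(password)}"
        f"@{cluster_host}/{database}?tls=true"
    )


# Hypothetical values, for illustration only.
uri = build_atlas_uri("app", "p@ss/word", "cluster0.example.mongodb.net", "mydb")
```

A driver such as pymongo accepts a URI of this shape when creating a `MongoClient`.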

- Category Connectors

- Data Connectors

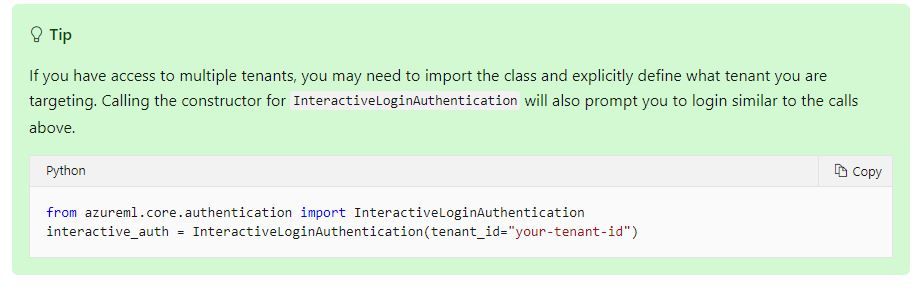

Introducing: The Azure Machine Learning Training and Scoring Tools

We tried to use this tool but can't log in to Azure ML correctly. We have several tenant IDs, and the tool logs in to another tenant (the one for Office 365) rather than the Azure ML one.

====================== <Error Message> ==========================================================

Message: You are currently logged-in to 55f0a...-.............................................. tenant. You don't have access to d846a...-............................................. subscription, please check if it is in this tenant. All the subscriptions that you have access to in this tenant are =

[SubscriptionInfo(subscription_name='Microsoft Azure Enterprise', subscription_id='754c5...-...........................')].

Please refer to aka.ms/aml-notebook-auth for different authentication mechanisms in azureml-sdk.

InnerException None

ErrorResponse

=======================================================================================================

Microsoft states that the tenant needs to be specified if we have access to multiple tenants.

Set up authentication for Azure Machine Learning resources and workflows

Could you add a Tenant ID field to the Azure credentials so that we can use this tool?

- Category Connectors

- Data Connectors

- Enhancement

Hi there,

When connecting to data sources using DCM - could we please add the ability to make JDBC connections?

see:

https://community.alteryx.com/t5/Engine-Works/JDBC-Connections-in-Alteryx/ba-p/968782

As mentioned in these threads, JDBC is very common in large enterprises and in many cases is better supported by the technology teams and developer community, so it is much easier to make a connection. In addition, there are many databases (e.g. DB2) where JDBC connections are simply much easier.

Please could you add JDBC connections to the DCM tooling?

Thank you

Sean

cc: @wesley-siu @_PavelP

- Category Connectors

- Enhancement

- New Request

- Scheduler

Request: Google Drive Output Tool to be able to set the maximum records per file and create multiple files

For the regular Alteryx Output Tool, we're able to set maximum records per file. This is helpful in a variety of ways: we use it as part of a workflow where the output gets uploaded into Salesforce and we can only load 5,000 records at a time. I also use this to split up large CSV files to stay under Excel's ~1M line limit so my teammates without Alteryx can open their reports and not lose data.

The Google Drive Output does not have this ability to split based on the number of records. If I use the RecordID Tool plus a Filter, it crashes Alteryx due to a Bug with RecordID + GDrive Output (it's currently in Accepted Defect stage)

It would be very helpful to have the same functionality in the Google Drive Output that we have with the regular Output Tool.
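The requested behaviour (a maximum number of records per file, producing multiple files) can be sketched like this. It writes local CSV files only; the function name and file naming scheme are hypothetical, and uploading the resulting files to Google Drive is outside the sketch.

```python
import csv
from pathlib import Path


def write_in_chunks(header, rows, out_dir, base_name, max_records):
    """Write rows to numbered CSV files with at most max_records rows each."""
    if max_records < 1:
        raise ValueError("max_records must be at least 1")
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for start in range(0, len(rows), max_records):
        # Files are numbered from 1: base_1.csv, base_2.csv, ...
        path = out_dir / f"{base_name}_{len(paths) + 1}.csv"
        with path.open("w", newline="", encoding="utf-8") as handle:
            writer = csv.writer(handle)
            writer.writerow(header)  # repeat the header in every file
            writer.writerows(rows[start:start + max_records])
        paths.append(path)
    return paths
```

For the Salesforce case mentioned above, calling it with `max_records=5000` would yield one file per 5,000-record batch.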

- Category Connectors

- Data Connectors

Hi All,

I was very happy to see the Bulk Loader introduced for Snowflake in the last release. This bulk loader is available specifically for Snowflake environments hosted on AWS, but it does not provide functionality for environments using Azure. As Snowflake continues to build momentum, I imagine this will be a common request. Is there something in the pipeline to add this functionality?

For an interim solution, we will be working toward developing some generic scripts/snowsql to mimic that bulk load, but ultimately we'd love to have this as part of the tool.

Best,

devKev

- API SDK

- Category Connectors

- Category Developer

- Category Input Output

Please update the Publish to Tableau Server connector tool to support Tableau's Ask Data feature. The data source must be recognized as an extract on Tableau Server in order for the Ask Data feature to work. Currently, all data sources published using version 2.0 of the connector tool are recognized as live data sources. The workaround is cumbersome and requires multiple copies of data sources to be created and managed.

- Category Connectors

- Data Connectors

Hello,

I had a business case requiring a cost effective and quick storage solution for real time online sourced survey data from customers. A MongoDB instance would fit the need, so I quickly spun up a cluster on Mongo Atlas. Atlas was launched by MongoDB in 2016 as a database-as-a-service deployed on AWS. All instances for Atlas require TLS/SSL to connect. Currently, the Alteryx MongoDB connector does not support TLS/SSL connections and doesn't work against Atlas. So, I was left with a breakdown in my plan that would require manual intervention before ingesting data to Alteryx (not ideal).

Please consider expanding this functionality on all connectors. I am building Alteryx out in my agency as a data platform that handles sensitive customer information (name, address, email, etc.). Most tools I use to connect to secure servers today support this type of connection, and resolving this should be a priority for Alteryx.

Thanks,

Mike Schock

- Category Connectors

- Data Connectors

Please add Parquet data format (https://parquet.apache.org/) as read-write option for Alteryx.

Apache Parquet is a columnar storage format available to any project in the Hadoop ecosystem, regardless of the choice of data processing framework, data model or programming language.

Thank you.

Regards,

Cristian.

- Category Connectors

- Category Input Output

- Data Connectors
