Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition" tool for a few days, I think it could be improved:

> by showing the image-size constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has the same number of images);

> and, lastly, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool told me it requires RGB images).
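The last two suggestions can be sketched in plain Python. This is a minimal illustration under stated assumptions, not how the Image Recognition tool works internally: `gray_to_rgb` replicates a single grayscale channel into three identical R, G, B channels (a common way to feed grayscale images such as MNIST to models pretrained on RGB), and `balanced_split` gives every label the same number of training images. Both function names, the nested-list image representation, and the `(path, label)` input format are hypothetical.

```python
import random
from collections import defaultdict


def gray_to_rgb(image):
    """Hypothetical helper: turn a grayscale image (rows of pixel values)
    into an RGB image by copying each value into all three channels."""
    return [[(p, p, p) for p in row] for row in image]


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Hypothetical helper: split (path, label) pairs so that every label
    contributes the same number of training images.

    The per-label quota is based on the smallest label, so no label
    dominates training; the remaining images go to the holdout set.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    per_label = int(min(len(paths) for paths in by_label.values()) * train_fraction)

    train, holdout = [], []
    for label, paths in by_label.items():
        rng.shuffle(paths)
        train.extend((p, label) for p in paths[:per_label])
        holdout.extend((p, label) for p in paths[per_label:])
    return train, holdout
```

With 10 images of one label and 5 of another, the quota is int(5 × 0.8) = 4, so training receives four images of each label and the other seven go to the holdout set.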

Question: do you plan to let users choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


The current MongoDB Output tool does not let you specify field types (except for the primary _id field); it simply assumes that all fields are strings. Many of our CSV files contain string representations of ObjectIds (e.g., "56df422c08420b523aa00a77").

When we import those CSV files, we have to run an additional script that converts all of these IDs into ObjectId fields. The same goes for dates, which need to become Mongo Date values.

If the tool let us do this, it would save us a great deal of time across all our ETL processes.
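Until the tool supports typed fields, the conversion script mentioned above can stay small. One option, assuming the data is loaded with `mongoimport` rather than through the Output tool, is to rewrite each CSV row as MongoDB Extended JSON, where `{"$oid": ...}` marks an ObjectId and `{"$date": ...}` marks a date. The function name, the column lists, the UTC assumption, and the `%Y-%m-%d` input date format are all illustrative assumptions.

```python
import json
import re
from datetime import datetime, timezone

# An ObjectId's string form is exactly 24 hexadecimal characters.
OBJECTID_RE = re.compile(r"^[0-9a-fA-F]{24}$")


def to_extended_json(row, id_fields=(), date_fields=(), date_format="%Y-%m-%d"):
    """Hypothetical helper: convert one CSV row (dict of strings) into a
    document that uses Extended JSON type wrappers for IDs and dates."""
    doc = {}
    for key, value in row.items():
        if key in id_fields and OBJECTID_RE.match(value):
            doc[key] = {"$oid": value}
        elif key in date_fields and value:
            # Dates are assumed to be UTC; relaxed Extended JSON uses ISO-8601 strings.
            moment = datetime.strptime(value, date_format).replace(tzinfo=timezone.utc)
            doc[key] = {"$date": moment.strftime("%Y-%m-%dT%H:%M:%SZ")}
        else:
            doc[key] = value  # everything else stays a string
    return doc


if __name__ == "__main__":
    row = {"owner": "56df422c08420b523aa00a77", "created": "2016-03-08", "name": "x"}
    # One JSON document per line is mongoimport's default input layout.
    print(json.dumps(to_extended_json(row, id_fields=("owner",), date_fields=("created",))))
```

Values in an ID column that are not valid 24-character hex strings are left as plain strings rather than rejected, which keeps the sketch forgiving of dirty input.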

Regards,

Labels: Category Connectors, Data Connectors

Our development team prefers that we connect to their MongoDB server using a private key in a .pem file instead of a username and password. Could this option be built into the MongoDB Input tool?
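If the .pem file is an X.509 client certificate (it could also be an SSH key for a tunnel, which this does not cover), MongoDB's standard connection string expresses this with the `tls`, `tlsCertificateKeyFile`, and `authMechanism=MONGODB-X509` options. The sketch below only builds that string; the host, port, and file path are placeholders, and it assumes the server is already configured for X.509 authentication.

```python
from urllib.parse import quote, urlencode


def build_x509_uri(host, port, pem_path):
    """Build a MongoDB connection string that authenticates with an X.509
    client certificate instead of a username and password."""
    options = {
        "tls": "true",
        # The .pem file holds both the client certificate and its private key.
        "tlsCertificateKeyFile": pem_path,
        # With MONGODB-X509 the user name is taken from the certificate subject.
        "authMechanism": "MONGODB-X509",
    }
    # Keep "/" readable in file paths; other special characters are percent-encoded.
    return f"mongodb://{host}:{port}/?{urlencode(options, safe='/', quote_via=quote)}"
```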

Labels: Category Connectors, Data Connectors

Clustering your data on a sample and then appending clusters is a common theme, especially in customer relations and marketing divisions.

When you have calculated clusters from a 20K sample and then need to "score" a few million clients, you still have to download the data and use the Append Cluster tool.

Why don't we have an In-DB Append Cluster tool instead? It would speed up the distance-based scoring that Append Cluster does, on SQL Server, Oracle, or Teradata.
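The distance-based scoring step is simple enough to push into the database as plain SQL. A minimal sketch, assuming k-means-style centroids and Euclidean distance on numeric columns: the function below generates a `SELECT` that assigns each row to its nearest centroid using squared distances, which rank centroids exactly as true distances do. Table, column, and function names are hypothetical, identifiers are assumed to be trusted (they are pasted into the SQL), and portability of the generated arithmetic and `CASE` to SQL Server, Oracle, or Teradata is assumed rather than verified. SQLite stands in for the real database in the demo.

```python
import sqlite3


def nearest_centroid_sql(table, fields, centroids, key="id"):
    """Generate SQL that labels each row with the number (1-based) of its
    nearest centroid, using squared Euclidean distance."""
    distances = []
    for centroid in centroids:
        terms = " + ".join(
            f"({f} - ({float(centroid[f])!r})) * ({f} - ({float(centroid[f])!r}))"
            for f in fields
        )
        distances.append(f"({terms})")

    whens = []
    for i, d in enumerate(distances):
        # Cluster i wins if it is at least as close as every other centroid;
        # CASE returns the first match, so ties go to the lower cluster number.
        others = [f"{d} <= {other}" for j, other in enumerate(distances) if j != i]
        whens.append(f"WHEN {' AND '.join(others) or '1 = 1'} THEN {i + 1}")
    return f"SELECT {key}, CASE {' '.join(whens)} END AS cluster_id FROM {table}"


if __name__ == "__main__":
    # Demo against an in-memory SQLite table standing in for the real database.
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE customers (id INTEGER, x REAL, y REAL)")
    con.executemany("INSERT INTO customers VALUES (?, ?, ?)",
                    [(1, 0.0, 0.0), (2, 9.0, 9.5), (3, 1.0, -1.0)])
    sql = nearest_centroid_sql("customers", ["x", "y"],
                               [{"x": 0.0, "y": 0.0}, {"x": 10.0, "y": 10.0}])
    print(con.execute(sql).fetchall())
```

The generated expression grows with the square of the number of clusters, which is fine for the handful of clusters typical in marketing segmentation but would call for a different formulation (for example, a join against a centroid table) at larger k.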

Best

Labels: Category Connectors, Category In Database, Data Connectors

Hi All,

It would be great if Alteryx 10.5 supported connectivity to SAS Server.

Regards,

Gaurav

Labels: Category Connectors, Category In Database, Data Connectors

I want to use Alteryx to pull data from a SharePoint list. This shouldn't be a problem, but I use SharePoint content types, and Alteryx won't let me import any list that has content types enabled. This makes the SharePoint List Input tool unusable for me.

My interim workaround is to create a data connection to the list through Excel and pull the data in that way, but ideally I would like to pull directly from the list.

Content types are a best practice in SharePoint, so every list and library in my site collection uses them.

Please update the SharePoint List Input tool to support content types.

Thank you,

Someone else asked about this, but I didn't see an idea entered for it.

Labels: Category Connectors, Category Input Output, Data Connectors

Hi All,

It is a given that IT teams have invested time and effort in building workflows and other components with tools that have since been deprecated in the latest versions.

It is good that the deprecated versions remain available for backward compatibility, but there should also be an option to promote a deprecated tool to its new replacement without impacting the existing workflow.

The benefits of this approach are:

1) IT teams can take advantage of the new tool instead of the existing, deprecated one. For example, I use the Salesforce connectors extensively, and I believe that, unlike the existing ones, the new ones use the Bulk API and are therefore much faster.

2) It will save IT from reconfiguring or rebuilding existing workflows, which would save considerable time.

3) As the product moves forward, it may make sense to remove some of the deprecated tool versions (I assume the plan is not to keep, say, five working sets of Salesforce Input connectors, including deprecated ones). With a promote option in place, IT would be comfortable with the removal of deprecated connectors, since they could promote them without impacting existing workflows, so the change would take minimal time.

In addition, if some configuration options have changed in the new tools (ideally only minor ones), those changes can be published, and IT can be given the option to configure them as part of the promotion.

Thanks,

Rohit Bajaj

Labels: Category Connectors, Category Transform, Data Connectors, Desktop Experience

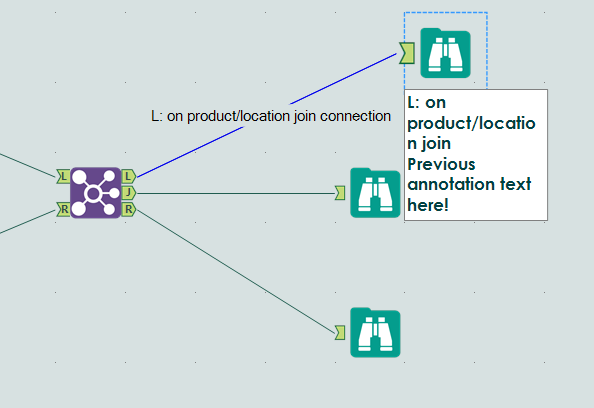

I find that when I'm using Alteryx, I'm constantly renaming the tool connectors. Here's my logic, most of the time:

I have something like a Join and 3 Browse tools.

- I name the L join something like "L: on product/location join"

- I then copy that descriptor and paste it in the Annotation field

- I then copy that descriptor, select the wired connector, and paste it in the connection configuration

MY VISION:

Have a setting where I could select the following options:

- Automatically annotate based on tool rename

- Automatically rename incoming connector based on tool rename

If I rename a tool and "Automatically annotate based on tool rename" is enabled, the new name is inserted at the top of the annotation field; any text already there is shifted down. If I rename a tool and "Automatically rename incoming connector..." is on, the connection coming into the tool gets [name string]+' connection' as its name. I included a picture of the end goal of my request.
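To make the two proposed settings concrete, here is a toy model of the behavior described above. `Tool`, `Connection`, and `rename_tool` are hypothetical names, not part of any Alteryx API; the sketch only encodes the stated rules: the new name goes on the first line of the annotation (pushing existing text down), and each incoming connection is named `[name string] + ' connection'`.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Connection:
    """Hypothetical stand-in for a wired connector on the canvas."""
    name: str = ""


@dataclass
class Tool:
    """Hypothetical stand-in for a tool with an annotation and incoming connectors."""
    name: str
    annotation: str = ""
    incoming: List[Connection] = field(default_factory=list)


def rename_tool(tool, new_name, auto_annotate=True, auto_rename_incoming=True):
    """Apply the two proposed settings when a tool is renamed."""
    tool.name = new_name
    if auto_annotate:
        # Put the new name on the first line; any existing annotation shifts down.
        tool.annotation = f"{new_name}\n{tool.annotation}" if tool.annotation else new_name
    if auto_rename_incoming:
        for conn in tool.incoming:
            conn.name = new_name + " connection"
    return tool
```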

Thanks for your ear!

Labels: Category Connectors, Category Interface, Data Connectors, Desktop Experience

When users who don't know a field's API name try to pull data from Salesforce, it can be problematic. A simple solution would be to add the Field Label to the query window so that users can pick fields by either API NAME or FIELD LABEL.
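Salesforce's sObject describe metadata already returns both values for every field (`name` is the API name, `label` is the field label), so a picker could accept either. A minimal sketch, assuming a describe result has already been fetched into a Python dict; the sample fields and the `resolve_field` and `picker_entries` helpers are hypothetical. Because labels are not guaranteed to be unique within an object, an ambiguous label is reported rather than guessed.

```python
def resolve_field(describe, choice):
    """Return the API name for a field chosen by API name or by label."""
    fields = describe["fields"]
    # An exact API-name match wins, since API names are unique per object.
    for f in fields:
        if f["name"] == choice:
            return f["name"]
    # Otherwise compare labels case-insensitively.
    matches = [f["name"] for f in fields if f["label"].casefold() == choice.casefold()]
    if len(matches) == 1:
        return matches[0]
    if not matches:
        raise KeyError(f"no field with API name or label {choice!r}")
    raise ValueError(f"label {choice!r} matches several fields: {matches}")


def picker_entries(describe):
    """Entries a query window could show, e.g. 'Sales Region (Region__c)'."""
    return [f"{f['label']} ({f['name']})" for f in describe["fields"]]
```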

Labels: Category Connectors, Data Connectors

When pulling data from Oracle and pushing it to Salesforce, we often have an ID field in Oracle and a Salesforce field, called an external ID field, that holds the same ID. Allowing us to match against those external ID fields would save us a lot of time and spare us from querying the entire object in Salesforce just to pull out the IDs of the records we need.
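Salesforce's REST API can already upsert by external ID: a `PATCH` to `/services/data/vXX.X/sobjects/{SObject}/{ExternalIdField}/{value}` updates the matching record or creates a new one, so no lookup of Salesforce record IDs is needed (Bulk API upsert jobs accept an external ID field name for the same purpose). The sketch below only builds that path; the API version, object, and field names are placeholders, and the helper name is hypothetical.

```python
from urllib.parse import quote


def upsert_path(api_version, sobject, external_id_field, external_id_value):
    """Build the REST path used to upsert one record by external ID.

    Sending a PATCH with the record's fields as JSON to this path updates
    the record whose external ID matches, or inserts it if none does.
    """
    # Percent-encode the value so IDs with spaces or slashes stay one path segment.
    value = quote(str(external_id_value), safe="")
    return f"/services/data/v{api_version}/sobjects/{sobject}/{external_id_field}/{value}"
```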

Labels: Category Connectors, Data Connectors

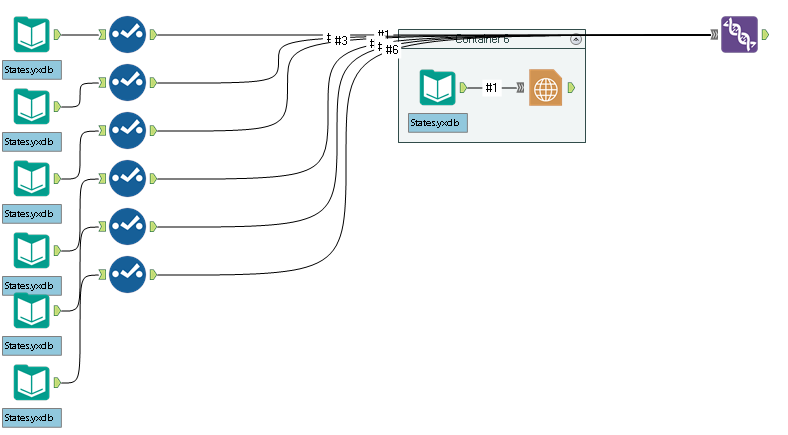

Sometimes in a crowded workflow, connector lines bunch up and run across the title bar of a tool container. This blocks my view of the title and also makes it hard to grab the tool container and move it.

Could Alteryx route lines around tool containers that they don't connect into, or make tool containers grabbable at locations other than the title bar?
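Routing around a container starts with knowing which connectors cross its title bar. Treating each connector as a straight segment and the title bar as an axis-aligned rectangle (both simplifying assumptions about the canvas), the standard Liang-Barsky clipping test answers that question. The function name and the `(xmin, ymin, xmax, ymax)` convention are hypothetical.

```python
def segment_hits_rect(p1, p2, rect):
    """Liang-Barsky test: does the segment p1 -> p2 pass through the
    axis-aligned rectangle rect = (xmin, ymin, xmax, ymax)?"""
    (x1, y1), (x2, y2) = p1, p2
    xmin, ymin, xmax, ymax = rect
    dx, dy = x2 - x1, y2 - y1
    t_enter, t_exit = 0.0, 1.0  # portion of the segment still inside all edges
    for p, q in ((-dx, x1 - xmin), (dx, xmax - x1), (-dy, y1 - ymin), (dy, ymax - y1)):
        if p == 0:
            if q < 0:
                return False  # parallel to this edge and entirely outside it
        else:
            t = q / p
            if p < 0:
                t_enter = max(t_enter, t)  # crossing into the rectangle
            else:
                t_exit = min(t_exit, t)  # crossing out of the rectangle
            if t_enter > t_exit:
                return False
    return True
```

A router could flag every connector for which this returns true against a container it does not connect into, then bend that connector around the container.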

Labels: Category Connectors, Data Connectors

Hi,

When you create a data model in Excel, you can create measures (a.k.a. KPIs). You can then use them when you pivot the data, and a measure is dynamically updated as you segment the data in your pivot table.

For example, say you have a field with the customer name and a field with the revenue. You could create a measure that calculates the average revenue per customer (sum of revenue / distinct count of customers).

Now, if you have a third field in your data that indicates the region, the measure would let you see the average revenue per customer by region (while the measure formula stays the same and never refers to the region field).
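The key property of a measure is that its formula is re-evaluated for each slice rather than averaged from other results. A minimal sketch in plain Python, with hypothetical field names (`customer`, `region`, `revenue`): the same function computes the overall value and the per-region values without its formula mentioning region. In Power BI the equivalent measure would typically be written in DAX along the lines of `DIVIDE(SUM(Sales[Revenue]), DISTINCTCOUNT(Sales[Customer]))`, where the table and column names are again placeholders.

```python
from collections import defaultdict


def avg_revenue_per_customer(rows, by=None):
    """Measure: SUM(revenue) / DISTINCTCOUNT(customer), evaluated per slice.

    With by=None there is a single slice (key None); otherwise rows are
    grouped by the value of the `by` field. The formula never changes.
    """
    revenue = defaultdict(float)
    customers = defaultdict(set)
    for row in rows:
        key = row[by] if by else None
        revenue[key] += row["revenue"]
        customers[key].add(row["customer"])
    return {key: revenue[key] / len(customers[key]) for key in revenue}
```

For example, if customer A spends 120 and 60 and customer B spends 60 in North, and customer C spends 30 in South, the overall value is 270 / 3 = 90, while North gives 240 / 2 = 120 and South gives 30 / 1 = 30. The overall 90 is not the average of 120 and 30, which is why the measure has to travel with the model rather than as precomputed numbers.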

Excel integrates well with Power BI, and these measures currently flow into Power BI.

While we have a "Publish to Power BI" tool in Alteryx, I haven't seen any way to create such measures and export them to Power BI.

Hence I still need to load the data into Excel to create these measures before I can publish to Power BI; it'd be great to avoid that intermediate step.

Thanks

Tibo

Labels: Category Connectors, Data Connectors

We don't have Server. Sometimes it's easiest to share a workflow the old-fashioned way: just email a copy of it or drop it in a shared folder. When doing that, if the recipient doesn't have a given alias on their machine, they'll have trouble running the workflow.

So it would be helpful if saving a workflow could save the aliases along with the actual connection information. Likewise, it would be nice if someone opening the workflow could add the aliases it contains to their own list of aliases.

Granted, there may be difficulties: this works well for connections that use integrated authentication, but not so much for user ID/password connections. If implemented, perhaps it could be limited along these lines.
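The limitation suggested above could be as simple as refusing to export any alias whose connection string carries a password. A minimal sketch, assuming aliases are available as a name-to-connection-string mapping (a hypothetical format, not how Alteryx stores them) and using a naive `key=value;` parser that does not handle braced values containing semicolons.

```python
CREDENTIAL_KEYS = {"pwd", "password"}


def parse_conn_str(conn_str):
    """Naively split an ODBC-style 'key=value;key=value' string into a dict.

    Keys are lower-cased; braced values containing ';' are not handled.
    """
    pairs = {}
    for part in conn_str.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            pairs[key.strip().lower()] = value.strip()
    return pairs


def shareable_aliases(aliases):
    """Keep only aliases whose connection strings carry no password,
    e.g. those relying on integrated (trusted) authentication."""
    return {
        name: conn_str
        for name, conn_str in aliases.items()
        if not (parse_conn_str(conn_str).keys() & CREDENTIAL_KEYS)
    }
```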

Labels: Category Connectors, Category In Database, Data Connectors

I have run into an issue where the progress display does not show the correct number of records after certain tools in my workflow. It was explained to me that this is because only a certain amount of data is cached, so the count is based on that. If I add a Browse tool, I can see the data properly.

For my team and me, this is a real inconvenience. We have come to rely on the record counts that appear after each tool. The point of "Show Progress" is that I don't have to insert a Browse after every step, which takes up less space on my computer. I would like to see the actual count again; I don't see why this changed in the first place.

Labels: Category Connectors, Data Connectors

Please improve the Hive connector and add write support.

Regards,

Cristian.

Hive

| Property | Details |
|---|---|
| Type of Support | Read-only |
| Supported Versions | 0.7.1 and later |
| Client Versions | -- |
| Connection Type | ODBC |
| Driver Details | The ODBC driver can be downloaded here. Read-only support to Hive Server 1 and Hive Server 2 is available. |

Labels: Category Connectors, Category Input Output, Data Connectors

Hello,

I think it would be extremely useful to have a switch connector in Alteryx. By a switch connector I mean a connection line with an on/off state that blocks the data stream through it when it is off. Something like below:

Switch Connector in an "Off" state

This would be extremely useful when you only want data to flow down some of the paths. In the example above, I might turn the switch connector off because I want to see the Summarize results without writing them to a document.

The current methods for keeping a path or set of tools present but unused are insufficient for my needs. The two methods that Alteryx Support and I were able to find were:

2. Putting the tools in a disabled tool container: I cannot see the tools when the container is disabled. I want to be able to see my tool setup even when I am not using it.

This is inspired by the use of switches in electrical circuit design, such as:

Please comment if you also think this would be useful, or if you have ideas for ways to improve it further. Thank you!

Labels: Category Connectors, Data Connectors

Hi Team,

With SharePoint tool 2.3.0, we are unable to connect to SharePoint Lists with Service Principal authentication, because it requires the SharePoint application permissions Sites.Read.All and Sites.ReadWrite.All in the Microsoft Azure app. However, those permissions grant access to every site in the organization, so no company can reasonably grant them without compromising data security. Please provide an alternative, or change the permissions required for SharePoint connectivity with a thumbprint in Alteryx.

Reference case with Alteryx Support: Case #00619824

Thanks & Regards

Vamsi Krishna

Labels: Category Connectors, Enhancement

HI,

Not sure if this Idea was already posted (I was not able to find an answer), but let me try to explain.

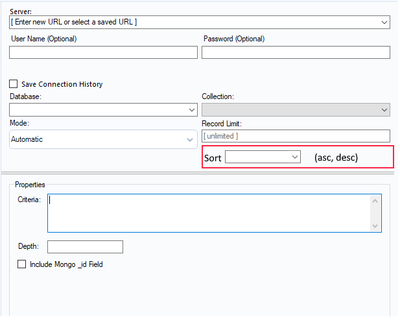

When I am using Mongo DB Input tool to query AlteryxService Mongo DB (in order to identify issues on the Gallery) I have to extract all data from Collection AS_Result.

The problem is that here we have huge amount of data and extracting and then parsing _ServiceData_ (blob) consume time and system resources.

This solution I am proposing is to add Sorting option to Mongo input tool. Simple choice ASC or DESC order.

Thanks to that I can extract in example last 200 records and do my investigation instead of extracting everything

In addition it will be much easier to estimate daily workload and extract (via scheduler) only this amount of data we need to analyze every day ad load results to external BD.
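The requested option corresponds to what MongoDB drivers already expose, e.g. `collection.find().sort("_id", -1).limit(200)` in PyMongo. Because an ObjectId begins with a 4-byte creation timestamp, sorting on `_id` approximates insertion order, and a daily extract can filter on an `_id` lower bound built from a date. The standard-library sketch below decodes and builds those timestamps; the helper names are hypothetical, and it assumes the collection uses default, driver-generated ObjectIds.

```python
from datetime import datetime, timezone


def objectid_time(oid_hex):
    """Creation time stored in an ObjectId: its first 4 bytes (8 hex
    characters) are seconds since the Unix epoch."""
    return datetime.fromtimestamp(int(oid_hex[:8], 16), tz=timezone.utc)


def objectid_floor(moment):
    """Smallest ObjectId that could have been created at `moment`:
    the timestamp followed by 8 zero bytes. Useful as an _id lower bound."""
    return f"{int(moment.timestamp()):08x}" + "0" * 16


if __name__ == "__main__":
    start = datetime(2016, 3, 8, tzinfo=timezone.utc)
    # Extended JSON filter selecting documents created on or after `start`.
    print({"_id": {"$gte": {"$oid": objectid_floor(start)}}})
```

For instance, the ObjectId quoted earlier on this page, `56df422c08420b523aa00a77`, decodes to 2016-03-08 21:20:44 UTC.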

Thanks,

Sebastian

Labels: Category Connectors, Data Connectors

When I import a CSV file, I sometimes use a tab as the delimiter. I'd like that to be an option in section 5, Delimiters.

I have learned that it is possible to write "\t", but a standard choice would be nice.
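For comparison, Python's standard `csv` module handles this both ways: an explicit `"\t"` delimiter, or the predefined `excel-tab` dialect, which is the kind of named choice requested here. This only illustrates the idea and says nothing about how the Input Data tool parses files; the sample data is made up.

```python
import csv
import io

TSV = "name\tcity\nAda\tLondon\n"

# Option 1: spell out the tab character, as with "\t" in the Delimiters box.
explicit = list(csv.reader(io.StringIO(TSV), delimiter="\t"))

# Option 2: pick the built-in tab-separated dialect by name.
named = list(csv.reader(io.StringIO(TSV), dialect="excel-tab"))

print(explicit == named)
```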

Labels: Category Connectors, Data Connectors

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/BigQuery-Input-Error/td-p/440641

The BigQuery Input tool uses the TableData.List JSON API method to pull data from Google BigQuery. Per Google:

- You cannot use the TableDataList JSON API method to retrieve data from a view. For more information, see Tabledata: list.

This is not currently supported functionality of the tool. You can post this on our product ideas page to see if it can be implemented in a future product. For now, I would recommend pulling the data from the original table itself in BigQuery.

I need to be able to query both tables and views. I'm not sure how to use the TableDataList JSON API.
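Since `tabledata.list` cannot read views, the usual route is to run a query instead: BigQuery's `jobs.query` REST method takes a SQL statement, so `SELECT * FROM` a view works the same way as for a table. The sketch below only builds the request URL and JSON body for that `POST`; authentication (an OAuth bearer token) and paging through results are omitted, and the project, dataset, and view names are placeholders.

```python
import json


def view_query_request(project_id, dataset, view, max_results=1000):
    """Build the URL and JSON body for a BigQuery jobs.query call that reads
    a view (or a table) with SQL instead of tabledata.list."""
    url = f"https://bigquery.googleapis.com/bigquery/v2/projects/{project_id}/queries"
    body = {
        # Backtick-quoted project.dataset.view paths are standard SQL syntax.
        "query": f"SELECT * FROM `{project_id}.{dataset}.{view}`",
        "useLegacySql": False,
        "maxResults": max_results,
    }
    return url, json.dumps(body)
```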

Labels: Category Connectors, Data Connectors

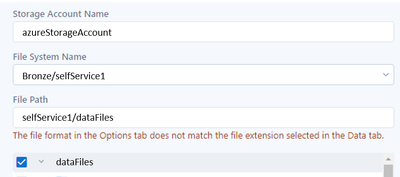

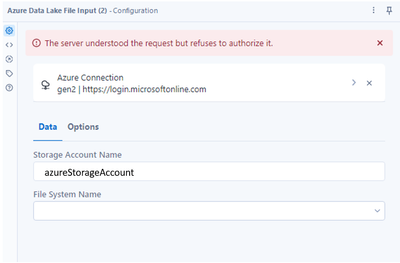

The current Azure Data Lake tool appears unable to connect when fine-grained access is enabled in ADLS using Access Control Lists (ACLs).

It does work when Role-Based Access Control (RBAC) is used, but ACLs provide more fine-grained control over the environment.

For example, with any of the current auth types (End-User Basic, End-User (Advanced), or Service-to-Service), the connector works if the user has RBAC access to ADLS.

In that scenario, though, the user is granted access to an entire container, which isn't ideal:

- azureStorageAccount/Container/Directory

- Example: azureStorageAccount/Bronze/selfService1 or azureStorageAccount/Bronze/selfService2

- With RBAC, the user is granted access at the container level and everything below it, so you cannot set different permissions on selfService1 and selfService2, which may have different use cases

The ideal would be to authenticate at the directory level, to best control access and enable self-service data analytics teams to use Alteryx:

- In this access pattern, the user would be granted access only at the directory level (e.g., selfService1 or selfService2 from above)

The existing tool appears to be limited: if you don't have access at the container level, but only at the directory level, the tool cannot complete the authentication request. Supporting this would require the tool to let you select a container (a.k.a. file system name) from the drop-down that includes the container plus the directory.

- Screenshot example A below shows how the file system name would need to be input

- Screenshot example B below shows what happens if you have ACL access to ADLS at the directory level and not at the container level
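For reference, the ADLS Gen2 REST `Path - List` operation can be scoped to a directory with its `directory` query parameter, which is the kind of request a directory-level ACL user needs. According to Microsoft's access control documentation, listing a directory requires Read and Execute on that directory and Execute on each parent up to the container root, so such a user can list `selfService1` without being able to list the container itself. The sketch below only turns an `account/container/directory` path (the format used in the post) into that DFS endpoint URL; authentication is omitted, and the account and container names in the usage are placeholders.

```python
from urllib.parse import urlencode


def dfs_list_url(path, recursive=False):
    """Turn 'account/container/dir/...' into an ADLS Gen2 Path - List URL
    scoped to the directory, so only directory-level access is exercised."""
    account, filesystem, *dirs = path.strip("/").split("/")
    query = {"resource": "filesystem", "recursive": str(recursive).lower()}
    if dirs:
        query["directory"] = "/".join(dirs)
    return f"https://{account}.dfs.core.windows.net/{filesystem}?{urlencode(query, safe='/')}"
```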

Access control model for Azure Data Lake Storage Gen2 | Microsoft Docs

Example A

Example B

Labels: Category Connectors, Data Connectors


