Alteryx Designer Desktop Discussions


Cached Data prior to running workflow


Hi all,

I run a workflow that contains two very large datasets. I receive one of them a few weeks before the other. However, when I receive the second dataset (which is about 1/8th the size of the first), I need to complete the workflow pretty quickly. It takes quite a bit of time to run the workflow when I have to read all of the data in. I was wondering: the day before, can I cache the first data source up to the point right before it interacts with the second one? Then, once I get the second source, I can run the workflow. Since the first dataset is already cached, I have essentially saved myself that amount of time. Does it work like this? Please let me know if you have any questions.

EDIT: It takes a few hours to read in the large dataset, so my intuition tells me that if I cache this data and the manipulations I need to perform on it beforehand, I will save all that time when I actually run the full workflow. I'm just not sure whether leaving Alteryx open for a day with all that data cached will cause any unexpected issues.


Hi,

I don't think what you're asking is possible. I would rather look at a few things to speed up your process. Example areas are:

- Can you store the data set (even better, an aggregated version of the dataset) to .yxdb format

- Can you use In-database tools?

- Can you store less data - only what you need - in a database/.yxdb after the extraction?

- Look at why the extract is taking so long? If it's from a database, have you done all joins & filters in the extract itself?

Those are some of the immediate points where I would start to look.

Regards,

Tom


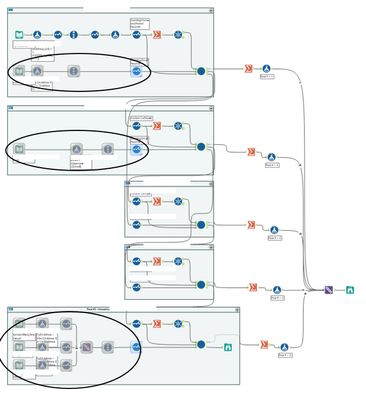

Can I not just do what I have done in the picture above? I have cached my workflow in three spots (circled in black). As you can see, these are just additional data sources that do not interact with my initial data source immediately. Then, once I get my last input file (the top input file that is not circled), I drop it in and run. The black portions had already been run and cached, so Alteryx doesn't need to perform those steps again; it only needs to bring in the cached data.

To answer your questions:

- Can you store the data set (even better, an aggregated version of the dataset) to .yxdb format

- I am not exactly sure what you mean by this.

- Can you use In-database tools?

- I am not sure what you mean by this either.

- Can you store less data - only what you need - in a database/.yxdb after the extraction?

- I have to bring in all the data because I am performing matches at each stage and at the end I need to return the full main file with appended fields. I am basically doing a vlookup to find a match and bring over a field from the circled inputs into the not-circled input.

- Look at why the extract is taking so long? If it's from a database, have you done all joins & filters in the extract itself?

- I am not sure what you mean by 'extract'. But the reason this is taking so long is the sheer size of the data files. Loading the files takes a few hours, and the steps within each macro (joins and fuzzy matches) take up most of the remaining time.


Hi @trevorwightman ,

I think the good news is that we can probably make your life a lot better by working through this.

It probably won't be a quick single answer, but I'm happy to stick with you on this one ... this could save you an enormous amount of time.

Firstly, to answer your question: you could try to cache it and wait a day, but it's awfully risky. A sudden reboot means starting over, the files may have changed by the time you reload, and holding all that data takes a toll on your machine's memory. I definitely don't recommend it.

Secondly, let me try to clarify some points:

1. A .yxdb is the extension of the Alteryx data format type - think of it like .xlsx is to Excel, except that this format is much more efficient for Alteryx to process. So, rather than caching (as you're doing in your image), you can output the data into a new .yxdb file (with, say, the date appended automatically) so you keep copies of what you ran the day before. I don't know your workflow, but the endpoint is likely to be the end of the circle you drew on the image. If you had access to a database, this data could also be output to a database (e.g. SQL Server).

2. In-Database tools apply when you are extracting data from a database. My hunch is that you're not doing that, but if you were, the In-Database tools (there is a section for them in the tool palette in Alteryx Designer) offer in-database processing, i.e. the logic runs in the database before the data reaches Alteryx. Alteryx then doesn't have to do the crunching and only pulls the result into memory, so you use the power of the database.

3. On the "extract less" point: if you're doing a vlookup, can you extract only the key fields (e.g. a name, an address or an ID)? The fewer fields you extract from the source, the faster it will run.

4. An extract is another name for the workflow you're building - i.e. the whole process. The key here is to break it into granular pieces as much as possible to save you time. For example:

- Could you extract each file source into a .yxdb file / database first? This simply requires an input & output tool and saving the output as "Alteryx Database" format. If you do that for each source and use the .yxdb as input into the larger workflow (your image), the workflow will run faster

- I notice you're doing a union on the bottom section. Similar story, can that be stored in a file first prior to this workflow?

- Fuzzy matching is very expensive in Alteryx computing terms. So doing multiple matches in a single workflow is likely to take a lot of time. I would take each one of your containers and make them a separate workflow, with each workflow outputting a file. The next workflow then picks up that file and matches etc. That's if you're not using a database

- I would look at the fuzzy matching itself. If you're running matches on multiple columns with lots of characters, it will be dead slow. This is a topic of its own, but it would be helpful if you could point out which part of the workflow takes the longest: the file extracts or the matching?
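Alteryx is configured through tools rather than code, so this is a rough analogy only: the Python sketch below shows the "convert once, then reuse the stored copy" idea from points 1, 3 and 4. It assumes pickle as a stand-in for a .yxdb file, and the function name `load_stage`, the file paths and the field names are all hypothetical. On the first call it parses the CSV and keeps only the listed key fields; later calls skip the CSV entirely and load the stored copy.

```python
import csv
import os
import pickle


def load_stage(cache_path, source_csv, keep_fields):
    """Return the stored rows if a cache file exists; otherwise parse the CSV
    once, keep only `keep_fields`, write the cache, and return the rows."""
    if os.path.exists(cache_path):
        # Fast path: reuse the copy written by an earlier run.
        with open(cache_path, "rb") as f:
            return pickle.load(f)
    # Slow path: read the raw CSV, keeping only the fields we need.
    with open(source_csv, newline="", encoding="utf-8") as f:
        rows = [{k: row[k] for k in keep_fields} for row in csv.DictReader(f)]
    with open(cache_path, "wb") as f:
        pickle.dump(rows, f)
    return rows


# Hypothetical usage: run once when the first file arrives, again later.
# rows = load_stage("first_source.pkl", "first_source.csv", ["id", "name", "zip"])
```

Unlike an in-memory cache, the stored file survives a reboot. The flip side is that it must be deleted (or re-dated) when the source data changes, otherwise the stale copy is reused.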

Think the above is plenty to think about - let me know if you'd like to chat some more.


Wow, thanks for all the great info! Here is my response to each of your points.

1. Ah, I understand now. Yes, I am currently reading these files from CSV. I ran a quick test and read a file with about 300m records. It took 3 minutes to read the file as a CSV and only 1 minute to read it as an Alteryx Database, so it can at least read the data much more quickly. Does the benefit of the Alteryx Database extend beyond just reading the file? For example, when performing other manipulations or sending the data through a batch macro, will all of those processes run faster because the source was an Alteryx DB and not a CSV?

2. I see, I am not using a database in this instance.

3. Got it. Yes, I drop fields where I can so I don't carry unnecessary data through my workflow.

4.

1st point: Yes I can turn all of my input files into Alteryx Databases (I will do that now!). If the benefit of using an Alteryx Database extends beyond just reading the file faster I will turn all of them into this. If it does not extend beyond that benefit then I won't bother turning the last file I receive into the Alteryx Database.

2nd point: Great point! Although I think this will be changing a little bit as I am trying to have fewer input files (those three files were just a subset of three of the input files from above that I had split off in another workflow). Instead of splitting the files beforehand and running the workflow with all of the separate files I will just split the files with a filter tool at the top of my workflow. This would still require a blend tool though. At the end of the day I am trying to run my whole process in one workflow so I don't have to have bits and pieces everywhere.

3rd point: I don't think I can split my workflow up, as each subsequent container uses "leftovers" from the container above it. Plus, I was hoping to just hit run and walk away.

4th point: I agree that I am probably using too many fields for fuzzy matching and can probably remove some as they are somewhat redundant.
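The "leftovers" structure in the 3rd point, where each container only works on records the previous one failed to match, can be sketched as a cascade of vlookup-style passes. This is a minimal Python illustration with exact-key lookups standing in for the joins; `lookup_stage`, `cascade` and the field names are hypothetical, and a real fuzzy stage would replace the dictionary lookup. Each stage appends one field from its reference data and passes the unmatched records on.

```python
def lookup_stage(records, reference, key_func, field):
    """One vlookup-style pass: copy `field` from `reference` onto each record
    whose key matches. Returns (matched, leftovers)."""
    index = {}
    for ref in reference:
        index.setdefault(key_func(ref), ref)  # first match wins, like VLOOKUP
    matched, leftovers = [], []
    for rec in records:
        ref = index.get(key_func(rec))
        if ref is None:
            leftovers.append(rec)
        else:
            matched.append({**rec, field: ref[field]})
    return matched, leftovers


def cascade(records, stages):
    """Run stages in order; each stage only sees what earlier stages left unmatched."""
    results, remaining = [], records
    for reference, key_func, field in stages:
        matched, remaining = lookup_stage(remaining, reference, key_func, field)
        results.extend(matched)
    return results, remaining
```

Because each stage's only input is the previous stage's leftovers, the stages could still be split into separate workflows that write and read one intermediate file per stage without changing the result.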

Once I make some of the above changes I will report back and let you know how they went. Then I can go further in depth on how my macros are working, but I don't want to ask those questions now as I have a lot to do. Hopefully the above provides more context, since I am not able to send over the actual workflow. I really appreciate the time you have taken to help me!

EDIT: I ran a test on a subset of the data. With CSV as input the workflow took 54 minutes; with Alteryx DB as input it took 25 minutes (wow!). The results are slightly different, which is a little scary. Maybe it has to do with the fact that I am limiting how many records are read in from some of the files. My other thought is that converting CSV to .yxdb jumbled the order of the records a bit, and that is why the results differ.


Hi @trevorwightman ,

Glad there are already some little improvements made. I think we can still do better!

First, to answer your question regarding the benefits of .yxdb vs .csv: personally, I've always found .csv files less reliable, in that you have to be careful with the delimiters and text qualifiers. Also, truncation sometimes happens on fields that contain a lot of verbatim text, and it's easy to miss if you're not paying attention. If you don't have a lot of text in a single field this may not be a problem. I think the default cut-off is 254 characters; it can be extended, but it needs to be set explicitly.
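To make the delimiter and truncation concerns concrete, here is a small Python sketch using only the standard library. It parses CSV text with proper quote handling and flags any cell longer than a limit, i.e. the values a reader with a fixed field length would cut. The 254 limit mirrors the figure mentioned above and is an assumption; `parse_and_flag` is a hypothetical helper, not an Alteryx feature.

```python
import csv
import io

FIELD_LENGTH = 254  # assumed limit, per the post; check your Input Data settings


def parse_and_flag(text, field_length=FIELD_LENGTH):
    """Parse CSV text with quote handling and report (row, column, length)
    for every cell longer than `field_length`."""
    rows = list(csv.reader(io.StringIO(text)))
    too_long = [
        (r, c, len(value))
        for r, row in enumerate(rows)
        for c, value in enumerate(row)
        if len(value) > field_length
    ]
    return rows, too_long
```

For comparison, a naive `'A1,"Smith, John"'.split(",")` yields three pieces instead of two, because it ignores the quotes around the embedded comma.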

I highly doubt that the .yxdb would be unreliable or give incorrect results, unless not all of the data made its way into it from the .csv in the first place. So just check in your .csv -> .yxdb workflow that every row and column was transferred into the file, and you should be OK.
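A check like this can be sketched in Python, assuming both tables (or the CSV-fed and .yxdb-fed workflow results) are exported to CSV first, since the standard library cannot read .yxdb. `read_csv` and `compare_tables` are hypothetical helpers: they compare headers and row counts, then count rows present in one table but not the other, ignoring row order.

```python
import csv
from collections import Counter


def read_csv(path):
    """Return (header, rows) with each row as a tuple so it can be counted."""
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.reader(f)
        header = next(reader)
        return header, [tuple(row) for row in reader]


def compare_tables(header_a, rows_a, header_b, rows_b):
    """Compare two tables without caring about row order (duplicates count)."""
    only_a = Counter(rows_a) - Counter(rows_b)
    only_b = Counter(rows_b) - Counter(rows_a)
    return {
        "same_columns": header_a == header_b,
        "rows_a": len(rows_a),
        "rows_b": len(rows_b),
        "only_in_a": sum(only_a.values()),
        "only_in_b": sum(only_b.values()),
    }
```

If the inputs match and only the record order differs, both "only in" counts come back as zero, which would suggest that differing outputs come from order-sensitive steps later in the workflow rather than from lost data.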

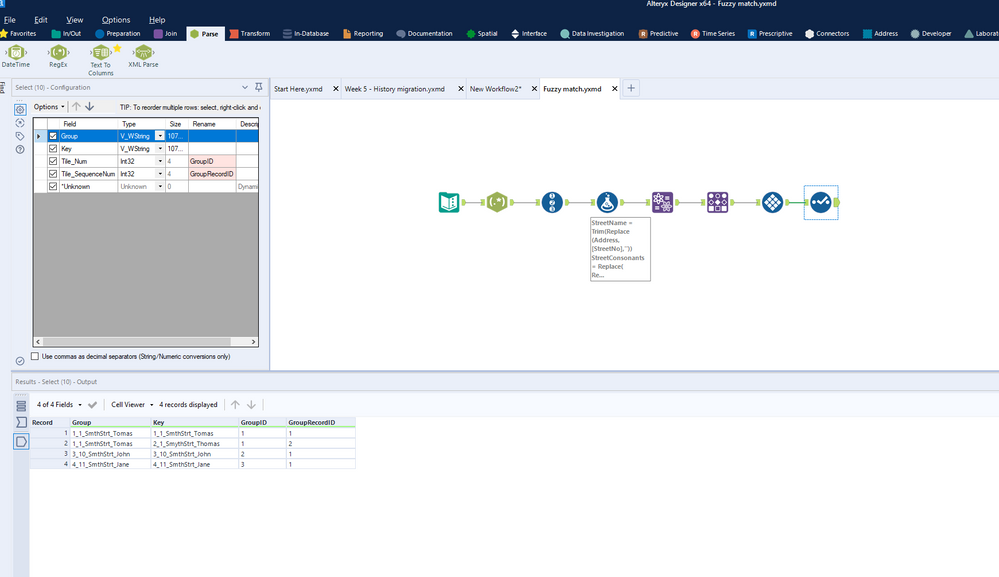

On the fuzzy matching, I may be able to give you a tip to help improve your performance. Not guaranteed, but let's give it a try. I attach a simple example for you to review:

* Rather than matching on multiple fields, create a single field that contains all the values you want to match on

* As your Record ID (unique identifier), also append all these values into a single field. This helps you to see if the match works or not

* On the fuzzy match tool, make sure you output the match scores (if you're not already doing that)

* Add a "Make Group" tool at the end of your fuzzy match. This de-duplicates your matches and identifies those that relate (e.g. A=B and B=C, therefore A=C)

* At the end, you can add a Tile tool to give a number to each match

* Oh, and I like to use a little trick: remove all the vowels from the words I want to match on, since that is usually where most typos happen
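The first and last tips (one combined field, vowels stripped) can be sketched in Python. This illustrates the idea under stated assumptions and is not how the Fuzzy Match tool works internally: `difflib.SequenceMatcher.ratio()` stands in for Alteryx's match functions, the 0.8 threshold is arbitrary, and `match_key`, `score_pairs` and the `id`/field names are hypothetical. Note the all-pairs loop: comparing every record with every other grows quadratically, which is one reason fuzzy matching is so expensive on large files.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations


def match_key(record, fields):
    """Join the chosen fields into one lowercase key, then drop vowels and
    non-alphanumerics so 'Jon Smith' and 'John Smith' end up closer."""
    combined = " ".join(str(record[f]) for f in fields).lower()
    no_vowels = re.sub(r"[aeiou]", "", combined)
    return re.sub(r"[^a-z0-9]", "", no_vowels)


def score_pairs(records, fields, threshold=0.8):
    """Score every pair of keys and keep (id_a, id_b, score) at or above
    `threshold`. O(n^2) comparisons, so only practical on small inputs."""
    keys = [(r["id"], match_key(r, fields)) for r in records]
    pairs = []
    for (id_a, key_a), (id_b, key_b) in combinations(keys, 2):
        score = SequenceMatcher(None, key_a, key_b).ratio()
        if score >= threshold:
            pairs.append((id_a, id_b, round(score, 3)))
    return pairs
```

For example, `Jon Smith, 10 Main St` and `John Smith, 10 Main St` become the keys `jnsmth10mnst` and `jhnsmth10mnst`, which score 0.96.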

By the way, I'm assuming you have to fuzzy match, and cannot simply join data together! 🙂

The image above shows what this would look like.

The performance point is to reduce the number of fields you're matching on (so make it one combined field, if you can). The rest is about making sure you keep track of what is matching and what is not. How are you currently handling that (e.g. non-matches)?
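For the grouping step (A=B and B=C, therefore A=C) and for keeping track of non-matches, union-find is one standard way to compute this kind of transitive grouping. The Python below is a sketch with hypothetical names, not the Make Group tool's implementation: it takes scored pairs like those a fuzzy match produces, numbers each group (similar to numbering matches with a Tile tool) and returns the records that matched nothing.

```python
def make_groups(ids, pairs):
    """Group ids transitively from matched (a, b, score) pairs.
    Returns ({id: group_number}, [ids that matched nothing])."""
    parent = {i: i for i in ids}

    def find(x):
        # Walk up to the root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b, *_ in pairs:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    groups = {}
    for i in ids:
        groups.setdefault(find(i), []).append(i)

    numbered, unmatched = {}, []
    for n, members in enumerate(groups.values(), start=1):
        for m in members:
            numbered[m] = n
        if len(members) == 1:
            unmatched.extend(members)
    return numbered, unmatched
```

With `make_groups(["A", "B", "C", "D"], [("A", "B", 0.9), ("B", "C", 0.85)])`, A, B and C land in group 1, D gets group 2, and D is reported as unmatched.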

Back to the performance enhancement and wanting to press run and walk away: look into the CReW Macros. They're on the chaosreignswithin.com website and were originally written by one of the Alteryx developers. They're brilliant. The macros you're looking for are called "Runner" and "Conditional Runner"; they allow you to run multiple workflows in sequence. So you could, for example:

1. Move all .csv -> .yxdb

2. Do fuzzy match stage 1 and store the result, using the output from step 1

3. Do fuzzy match stage 2 and store the result, using the output from step 2

etc.

There are also log-parsing tools in the CReW Macros, so you can store the log of each run and see how many rows were processed and what errors (if any) occurred.
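The Runner / Conditional Runner idea (run stages in order, stop if one fails, log row counts) can be sketched in plain Python. This does not call Alteryx or the CReW macros; each stage is a hypothetical Python function standing in for one workflow, and the log format is made up for illustration.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def run_pipeline(stages, data=None):
    """Run (name, function) stages in order, passing each stage's output to
    the next. Stops at the first exception and returns (data, summary)."""
    summary = []
    for name, stage in stages:
        try:
            data = stage(data)
        except Exception as exc:
            log.error("stage %s failed: %s", name, exc)
            summary.append((name, "error", str(exc)))
            break  # like a conditional runner: later stages never start
        rows = len(data) if hasattr(data, "__len__") else None
        log.info("stage %s finished, %s rows", name, rows)
        summary.append((name, "ok", rows))
    return data, summary
```

Stopping at the first failure means a broken stage never feeds bad data into the next one, and the summary list plays the role of the parsed run log.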

Again, that's a lot of info, so I'll leave it there. Attaching the fuzzy match workflow for you.

Best,

Tom


BRILLIANT AND VERY HELPFUL!!!


Glad to hear!
